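21.26 Introduction to MongoDB

MongoDB is a document-oriented database built on distributed file storage. Although it is a NoSQL database, it differs from key-value stores such as Redis and Memcached. MongoDB is written in C++ and aims to provide a scalable, high-performance data storage solution for web applications.

MongoDB sits between relational and non-relational databases: among non-relational databases it is the most feature-rich and the closest to a relational database. Its data model is very loose, using BSON, a JSON-like format, so it can store fairly complex data types. Its biggest strength is a powerful query language whose syntax resembles an object-oriented query language; it covers most of what a single-table query can do in a relational database, and it also supports indexes on the data.

Development of MongoDB began at 10gen in October 2007, and the first release came out in February 2009.

MongoDB stores data as documents, whose structure consists of key-value (key => value) pairs. A MongoDB document is similar to a JSON object. Field values can contain other documents, arrays, and arrays of documents.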
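To make the document model concrete, here is a minimal sketch of a document written as a plain JavaScript object. The field names and values are invented for illustration; the point is only that one document can mix simple values, an embedded document, an array, and an array of documents.

```javascript
// A hypothetical "user" document, written as a plain JavaScript object.
// Field names and values are illustrative only.
const userDoc = {
  name: "alice",                           // simple key/value pair
  age: 30,
  address: { city: "Taipei", zip: "100" }, // embedded document
  tags: ["admin", "ops"],                  // array
  logins: [                                // array of documents
    { ip: "192.168.66.130", ok: true },
    { ip: "192.168.66.131", ok: false },
  ],
};

// Nested fields are reached with ordinary property access.
const failedLogins = userDoc.logins.filter((l) => !l.ok).map((l) => l.ip);

console.log(JSON.stringify(userDoc.address)); // {"city":"Taipei","zip":"100"}
console.log(failedLogins);
```

In a relational schema the address and the login history would normally live in separate tables; here they travel with the document itself.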

The official MongoDB website (the latest version at the time of writing is 4.0):

https://www.mongodb.com/

For a description of JSON, see the following article:

http://www.w3school.com.cn/json/index.asp

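Features:

Collection-oriented storage, well suited to storing object-type data

Schema-free

Supports dynamic queries

Supports full indexing, including on embedded objects

Supports replication and failover

Efficient binary data storage, including large objects (such as video)

Automatic sharding, to support cloud-scale horizontal scalability

Drivers for Ruby, Python, Java, C++, PHP, C#, and other languages

Storage format is BSON (a binary extension of JSON)

Accessible over the network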

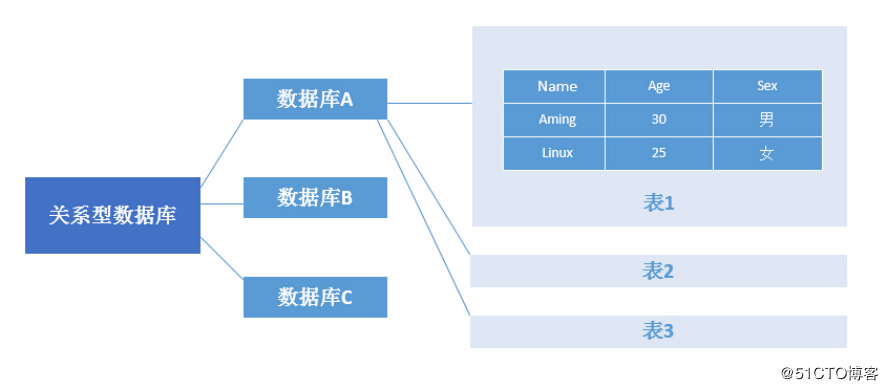

在mongodb中基本的概念是文檔、集合、數據庫,下圖是MongoDB和關係型數據庫的術語以及概念的對比:

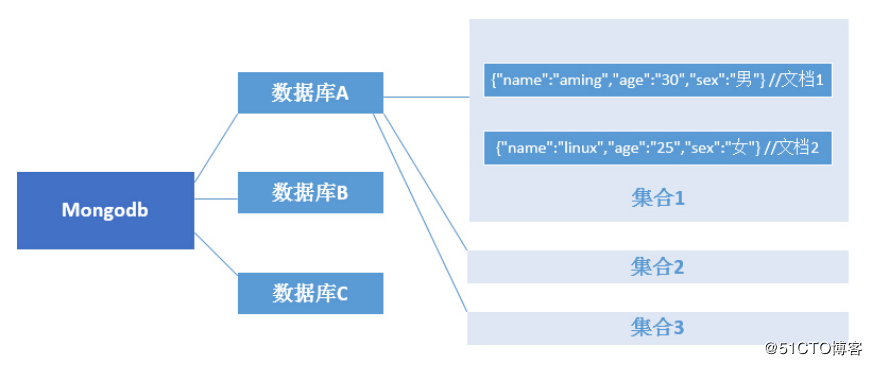

以下使用兩張圖對比一下關係型數據庫和MongoDB數據庫的數據存儲結構:

關係型數據庫數據結構:

MongoDB數據結構:

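21.27 Installing MongoDB

Note: installing MongoDB only requires adding the official yum repository and installing from it.

Create the yum repository

Note: go to the /etc/yum.repos.d/ directory and create mongodb.repo

[root@localhost ~]# cd /etc/yum.repos.d/

[root@localhost yum.repos.d]# vim mongodb.repo # add the following content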

[mongodb-org-4.0]

name=MongoDB Repository

baseurl=https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/4.0/x86_64/

gpgcheck=1

enabled=1

gpgkey=https://www.mongodb.org/static/pgp/server-4.0.asc

After saving the file, run yum list to check that the MongoDB RPM packages are available:

[root@localhost yum.repos.d]# yum list |grep mongodb

mongodb-org.x86_64 4.0.1-1.el7 mongodb-org-4.0

mongodb-org-mongos.x86_64 4.0.1-1.el7 mongodb-org-4.0

mongodb-org-server.x86_64 4.0.1-1.el7 mongodb-org-4.0

mongodb-org-shell.x86_64 4.0.1-1.el7 mongodb-org-4.0

mongodb-org-tools.x86_64 4.0.1-1.el7 mongodb-org-4.0

Install directly with yum:

[root@localhost yum.repos.d]# yum install -y mongodb-org

21.28 Connecting to MongoDB

1. The MongoDB configuration file:

[root@localhost ~]# vim /etc/mongod.conf

# mongod.conf

# for documentation of all options, see:

# http://docs.mongodb.org/manual/reference/configuration-options/

# where to write logging data.

systemLog: # log file settings

  destination: file

  logAppend: true

  path: /var/log/mongodb/mongod.log

# Where and how to store data.

storage: # data directory settings

  dbPath: /var/lib/mongo

  journal:

    enabled: true

#  engine:

#  mmapv1:

#  wiredTiger:

# how the process runs

processManagement: # PID file settings

  fork: true # fork and run in background

  pidFilePath: /var/run/mongodb/mongod.pid # location of pidfile

  timeZoneInfo: /usr/share/zoneinfo

# network interfaces

net: # listening port and bound IPs; separate multiple IPs with commas, e.g. bindIp: 127.0.0.1,192.168.66.130

  port: 27017

  bindIp: 127.0.0.1 # Enter 0.0.0.0,:: to bind to all IPv4 and IPv6 addresses or, alternatively, use the net.bindIpAll setting.

#security:

#operationProfiling:

#replication:

#sharding:

## Enterprise-Only Options

#auditLog:

#snmp:

2. Start the mongod service, then check the process and the listening ports:

[root@localhost yum.repos.d]# systemctl start mongod

[root@localhost yum.repos.d]# ps aux |grep mongod

mongod 12194 9.7 6.0 1067976 60192 ? Sl 10:33 0:00 /usr/bin/mongod -f /etc/mongod.conf

root 12221 0.0 0.0 112720 972 pts/1 S+ 10:33 0:00 grep --color=auto mongod

[root@localhost yum.repos.d]# netstat -lnp|grep mongod

tcp 0 0 192.168.66.130:27017 0.0.0.0:* LISTEN 12194/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 12194/mongod

unix 2 [ ACC ] STREAM LISTENING 90072 12194/mongod /tmp/mongodb-27017.sock

3. Connect to MongoDB

Note: run the mongo command to connect to MongoDB.

Example:

[root@localhost yum.repos.d]# mongo

MongoDB shell version v4.0.1

connecting to: mongodb://127.0.0.1:27017

MongoDB server version: 4.0.1

Welcome to the MongoDB shell.

For interactive help, type "help".

For more comprehensive documentation, see

http://docs.mongodb.org/

Questions? Try the support group

http://groups.google.com/group/mongodb-user

Server has startup warnings:

2018-08-26T10:33:15.470+0800 I CONTROL [initandlisten]

2018-08-26T10:33:15.470+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2018-08-26T10:33:15.470+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2018-08-26T10:33:15.470+0800 I CONTROL [initandlisten]

2018-08-26T10:33:15.471+0800 I CONTROL [initandlisten]

2018-08-26T10:33:15.471+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2018-08-26T10:33:15.471+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2018-08-26T10:33:15.471+0800 I CONTROL [initandlisten]

2018-08-26T10:33:15.471+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2018-08-26T10:33:15.471+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2018-08-26T10:33:15.471+0800 I CONTROL [initandlisten]

---

Enable MongoDB's free cloud-based monitoring service, which will then receive and display

metrics about your deployment (disk utilization, CPU, operation statistics, etc).

The monitoring data will be available on a MongoDB website with a unique URL accessible to you

and anyone you share the URL with. MongoDB may use this information to make product

improvements and to suggest MongoDB products and deployment options to you.

To enable free monitoring, run the following command: db.enableFreeMonitoring()

To permanently disable this reminder, run the following command: db.disableFreeMonitoring()

---

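>

• If MongoDB is not listening on the default port 27017, add the --port option when connecting, for example:

mongo --port 27018

• To connect to a remote MongoDB instance, add the --host option: mongo --host 192.168.66.130

[root@localhost yum.repos.d]# mongo --host 192.168.66.130 --port 27017

• If authentication is enabled in MongoDB, supply a username and password when connecting.

Format:

mongo -u username -p passwd --authenticationDatabase db

21.29 MongoDB user management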

1. Enter the MongoDB shell and switch to the admin database, which is where administrative users are managed:

[root@localhost ~]# mongo

# switch to the admin database

> use admin

switched to db admin

>

2. Create a user:

> db.createUser( { user: "admin", customData: {description: "superuser"}, pwd: "admin122", roles: [ { role: "root", db: "admin" } ] } )

Successfully added user: {

"user" : "admin",

"customData" : {

"description" : "superuser"

},

"roles" : [

{

"role" : "root",

"db" : "admin"

}

]

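}

This creates an admin user. In the command, the parentheses enclose the argument to createUser, curly braces delimit a document, and square brackets delimit an array. user is the username; customData is an optional descriptive field and can be omitted; pwd is the password; roles lists the user's roles, where role names the privilege set and db names the database it applies to. A user is always created in a specific database.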
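The shape of the createUser argument can be sketched as a small helper that assembles the document from its parts. The function name buildUserSpec and its parameter order are hypothetical, chosen only for this illustration; the resulting object has the same fields as the one passed to db.createUser above.

```javascript
// Hypothetical helper: builds the document passed to db.createUser().
// It does not talk to MongoDB; it only assembles a plain object.
function buildUserSpec(user, pwd, roles, description) {
  if (!user || !pwd) throw new Error("user and pwd are required");
  if (!Array.isArray(roles)) throw new Error("roles must be an array");
  const spec = {
    user: user,
    pwd: pwd,
    // Each role pairs a role name with the database it applies to.
    roles: roles.map(([role, db]) => ({ role: role, db: db })),
  };
  if (description !== undefined) {
    spec.customData = { description: description }; // optional free-form data
  }
  return spec;
}

const adminSpec = buildUserSpec("admin", "admin122", [["root", "admin"]], "superuser");
console.log(JSON.stringify(adminSpec, null, 2));
```

In the mongo shell, an object built this way could be passed straight to db.createUser(adminSpec).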

3. db.system.users.find() lists all users; switch to the admin database first:

> db.system.users.find()

{ "_id" : "admin.admin", "user" : "admin", "db" : "admin", "credentials" : { "SCRAM-SHA-1" : { "iterationCount" : 10000, "salt" : "9sOjvYZsJhrO177B4xNJxg==", "storedKey" : "d3xRf6ZmyyKeIRRffwsxVfPV/V4=", "serverKey" : "IK5KBhAzowClzCBa4NNHmuWDvIM=" }, "SCRAM-SHA-256" : { "iterationCount" : 15000, "salt" : "F7/rtZ0WJiqByvtiZpZ/sKareDh0rI2kDtc0gw==", "storedKey" : "5ZDQ8uZhiTuqI8g1zeSTfj3mNOFlc8d19cL+dlQYUWI=", "serverKey" : "BTidtpburV7jXTRyL5a3VknUsXXVoVtMpkC19p5bgQg=" } }, "customData" : { "description" : "superuser" }, "roles" : [ { "role" : "root", "db" : "admin" } ] }

4. show users lists all users in the current database:

> show users

{ "_id" : "admin.admin", "user" : "admin", "db" : "admin", "customData" : { "description" : "superuser" }, "roles" : [ { "role" : "root", "db" : "admin" } ], "mechanisms" : [ "SCRAM-SHA-1", "SCRAM-SHA-256" ] }

5. Delete a user:

# First create a user luo with password 123456 and the read role on the testdb database

> db.createUser({user:"luo",pwd:"123456",roles:[{role:"read",db:"testdb"}]})

Successfully added user: { "user" : "luo", "roles" : [ { "role" : "read", "db" : "testdb" } ] }

# List all users; there are now two

> show users

{ "_id" : "admin.admin", "user" : "admin", "db" : "admin", "customData" : { "description" : "superuser" }, "roles" : [ { "role" : "root", "db" : "admin" } ], "mechanisms" : [ "SCRAM-SHA-1", "SCRAM-SHA-256" ] }

{ "_id" : "admin.luo", "user" : "luo", "db" : "admin", "roles" : [ { "role" : "read", "db" : "testdb" } ], "mechanisms" : [ "SCRAM-SHA-1", "SCRAM-SHA-256" ] }

db.dropUser deletes a user; remove the user luo created above:

> db.dropUser("luo")

true # true means the user was deleted

6. Log in to MongoDB with the new username and password

We created an admin user earlier, but for authentication to take effect you also need to edit the systemd unit file /usr/lib/systemd/system/mongod.service and add --auth after OPTIONS=:

[root@localhost ~]# vim /usr/lib/systemd/system/mongod.service

................

Environment="OPTIONS=-f /etc/mongod.conf"

# change to:

Environment="OPTIONS=--auth -f /etc/mongod.conf"

...............

Restart the service:

[root@localhost ~]# systemctl restart mongod

Warning: mongod.service changed on disk. Run 'systemctl daemon-reload' to reload units.

# Note: the unit file was just modified, so systemd asks for a reload

[root@localhost ~]# systemctl daemon-reload

[root@localhost ~]# systemctl restart mongod

Check the process (note the added --auth):

[root@localhost ~]# ps aux|grep mongod

mongod 12294 8.7 5.2 1067972 52052 ? Sl 10:55 0:01 /usr/bin/mongod --auth -f /etc/mongod.conf

root 12325 0.0 0.0 112720 972 pts/1 S+ 10:56 0:00 grep --color=auto mongod

7. Log in to MongoDB as the admin user:

[root@localhost ~]# mongo -u "admin" -p "admin122" --authenticationDatabase "admin" --host 192.168.66.130 --port 27017
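The same connection options can also be expressed as a single mongodb:// connection string, which the mongo shell and most drivers accept. The sketch below assembles one from its parts; the helper name buildMongoUri and its option names are hypothetical, while the authSource query parameter is the URI counterpart of --authenticationDatabase.

```javascript
// Hypothetical helper: assembles a mongodb:// connection string.
function buildMongoUri({ host = "127.0.0.1", port = 27017, user, password, authDb } = {}) {
  let credentials = "";
  if (user !== undefined) {
    if (password === undefined) throw new Error("password is required when user is set");
    // Percent-encode so characters such as "@" or ":" in credentials are safe.
    credentials = encodeURIComponent(user) + ":" + encodeURIComponent(password) + "@";
  }
  let uri = "mongodb://" + credentials + host + ":" + port + "/";
  if (authDb !== undefined) {
    uri += "?authSource=" + encodeURIComponent(authDb);
  }
  return uri;
}

console.log(buildMongoUri()); // mongodb://127.0.0.1:27017/
console.log(buildMongoUri({
  host: "192.168.66.130",
  user: "admin",
  password: "admin122",
  authDb: "admin",
})); // mongodb://admin:admin122@192.168.66.130:27017/?authSource=admin
```

Keeping the credentials out of shell history is a good reason to prefer an interactive password prompt in real deployments; the string form is shown here only to illustrate how the options fit together.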

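8. Create a database and a user. Switching with use to a database that does not exist yet creates it implicitly once something is stored in it:

use db1

switched to db db1

# Create the test1 user and grant it roles: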

db.createUser( { user: "test1", pwd: "123aaa", roles: [ { role: "readWrite", db: "db1" }, {role: "read", db: "db2" } ] } )

Successfully added user: {

"user" : "test1",

"roles" : [

{

"role" : "readWrite",

"db" : "db1"

},

{

"role" : "read",

"db" : "db2"

}

]

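}

• Note: test1 has read/write access to db1 and read-only access to db2. We switched to db1 before creating the user, so the user is created in db1 and must authenticate against db1; user credentials belong to the database in which the user was created. Even though test1 can read db2, it must first authenticate in db1; trying to authenticate directly against db2 fails. Authenticate in db1 with:

db.auth('test1','123aaa')

1

After authenticating, you can work in db2:

use db2

switched to db db2

**About MongoDB user roles:**

### 21.30 Creating collections and managing data in MongoDB

Syntax for creating a collection: db.createCollection(name,options)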

name is the name of the collection; options is optional and configures the collection.

For example, to create a collection named mycol, run:

> db.createCollection("mycol", { capped : true, size : 6142800, max : 10000 } )

{ "ok" : 1 }

>

The command above creates a collection named mycol. The options make it a capped collection, limit its size to 6142800 bytes, and limit it to at most 10000 documents.
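The behaviour of the max option can be pictured with a toy in-memory model. This is not how MongoDB implements capped collections, and it ignores the byte-based size limit entirely; the class name CappedList is made up for the sketch. It only shows the "oldest entry is dropped once the cap is reached" rule.

```javascript
// Toy in-memory model of a capped collection (not MongoDB's implementation).
class CappedList {
  constructor(max) {
    this.max = max;  // maximum number of documents, like the "max" option
    this.docs = [];  // kept in insertion order
  }

  insert(doc) {
    this.docs.push(doc);
    // Once the cap is exceeded, the oldest document is discarded.
    if (this.docs.length > this.max) this.docs.shift();
  }
}

const log = new CappedList(3);
for (let i = 1; i <= 5; i++) log.insert({ seq: i });

// Only the three newest documents remain: seq 3, 4, 5.
console.log(log.docs.map((d) => d.seq));
```

This is why capped collections suit log-like data, where only the most recent entries matter.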

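The available collection options are:

| Option | Type | Description |
| --- | --- | --- |
| capped | true/false | (Optional) If true, creates a capped collection: a fixed-size collection that automatically overwrites its oldest entries once it reaches its maximum size. If true, size must also be specified. |
| autoIndexId | true/false | (Optional) If true, automatically creates an index on the _id field. Deprecated since MongoDB 3.2; current versions create the _id index by default. |
| size | number | (Optional) Maximum size of a capped collection, in bytes. Required when capped is true. |
| max | number | (Optional) Maximum number of documents allowed in a capped collection. |

Some other commonly used MongoDB commands:

1. The show collections command lists the collections; show tables works as well: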

> show collections

mycol

> show tables

mycol

2. Insert data

Note: inserting into a collection that does not exist creates the collection automatically.

> db.Account.insert({AccountID:1,UserName:"test",password:"123456"})

WriteResult({ "nInserted" : 1 })

> show tables

Account

mycol

> db.mycol.insert({AccountID:1,UserName:"test",password:"123456"})

WriteResult({ "nInserted" : 1 })

3. Update data

// $set is an update operator; the statement below adds a key named Age with the value 20 to the matching document

> db.Account.update({AccountID:1},{"$set":{"Age":20}})

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

4. View all documents

# First insert another document

> db.Account.insert({AccountID:2,UserName:"test2",password:"123456"})

WriteResult({ "nInserted" : 1 })

> db.Account.find() // list all documents in the collection

{ "_id" : ObjectId("5a5377cb503451a127782146"), "AccountID" : 1, "UserName" : "test", "password" : "123456", "Age" : 20 }

{ "_id" : ObjectId("5a537949503451a127782149"), "AccountID" : 2, "UserName" : "test2", "password" : "123456" }

You can also query by condition, for example by AccountID:

> db.Account.find({AccountID:1})

{ "_id" : ObjectId("5a5377cb503451a127782146"), "AccountID" : 1, "UserName" : "test", "password" : "123456", "Age" : 20 }

> db.Account.find({AccountID:2})

{ "_id" : ObjectId("5a537949503451a127782149"), "AccountID" : 2, "UserName" : "test2", "password" : "123456" }

Delete data by condition:

> db.Account.remove({AccountID:1})

WriteResult({ "nRemoved" : 1 })

> db.Account.find()

{ "_id" : ObjectId("5a537949503451a127782149"), "AccountID" : 2, "UserName" : "test2", "password" : "123456" }

5. Drop a collection

> db.Account.drop()

true

> show tables

mycol

6. View collection statistics

> db.printCollectionStats()

mycol

{ "ns" : "db1.mycol", "size" : 0, "count" : 0, "storageSize" : 4096, "capped" : true, "max" : 10000, "maxSize" : 6142976, "sleepCount" : 0, "sleepMS" : 0, "wiredTiger" : { "metadata" : { "formatVersion" : 1 }

..............................

..............................

(output truncated; it is too long to show in full)

21.31 The mongodb extension for PHP

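PHP officially offers two MongoDB extensions: mongodb.so and mongo.so. mongodb.so targets newer PHP versions, while mongo.so targets older ones.

The official reference documentation for both extensions:

https://docs.mongodb.com/ecosystem/drivers/php/

The official explanation is as follows:

mongodb.so:

The currently maintained driver is the mongodb extension available from PECL. This driver can be used stand-alone, although it is very bare-bones. You should consider using the driver with the complementary PHP library, which implements a more full-featured API on top of the bare-bones driver. More information on this architecture can be found in the PHP.net documentation.

mongo.so:

The mongo extension is a legacy driver for PHP 5.x. It is no longer maintained, and new projects are advised to use the mongodb extension and PHP library. The community-developed Mongo PHP Adapter project implements the API of the legacy mongo extension using the new mongodb extension and PHP library, which may be useful for those wishing to migrate existing applications.

Because both old and new PHP versions are still in use, we cover installing both extensions, starting with mongodb.so.

There are two ways to install mongodb.so. The first is to build it from the Git repository: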

[root@localhost ~]# cd /usr/local/src/

[root@localhost /usr/local/src]# git clone https://github.com/mongodb/mongo-php-driver

[root@localhost /usr/local/src]# cd mongo-php-driver

[root@localhost /usr/local/src/mongo-php-driver]# git submodule update --init

[root@localhost /usr/local/src/mongo-php-driver]# /usr/local/php/bin/phpize

[root@localhost /usr/local/src/mongo-php-driver]# ./configure --with-php-config=/usr/local/php/bin/php-config

[root@localhost /usr/local/src/mongo-php-driver]# make && make install

[root@localhost /usr/local/src/mongo-php-driver]# vim /usr/local/php/etc/php.ini

extension = mongodb.so ; add this line

[root@localhost /usr/local/src/mongo-php-driver]# /usr/local/php/bin/php -m |grep mongodb

mongodb

[root@localhost /usr/local/src/mongo-php-driver]#

Connections to GitHub from mainland China can be slow, so this method may take a while.

The second way is to install from the source tarball:

[root@localhost ~]# cd /usr/local/src/

[root@localhost /usr/local/src]# wget https://pecl.php.net/get/mongodb-1.3.0.tgz

[root@localhost /usr/local/src]# tar zxvf mongodb-1.3.0.tgz

[root@localhost /usr/local/src]# cd mongodb-1.3.0

[root@localhost /usr/local/src/mongodb-1.3.0]# /usr/local/php/bin/phpize

[root@localhost /usr/local/src/mongodb-1.3.0]# ./configure --with-php-config=/usr/local/php/bin/php-config

[root@localhost /usr/local/src/mongodb-1.3.0]# make && make install

[root@localhost /usr/local/src/mongodb-1.3.0]# vim /usr/local/php/etc/php.ini

extension = mongodb.so ; add this line

[root@localhost /usr/local/src/mongodb-1.3.0]# /usr/local/php/bin/php -m |grep mongodb

mongodb

[root@localhost /usr/local/src/mongodb-1.3.0]#

21.32 The mongo extension for PHP

The installation steps are as follows:

[root@localhost ~]# cd /usr/local/src/

[root@localhost /usr/local/src]# wget https://pecl.php.net/get/mongo-1.6.16.tgz

[root@localhost /usr/local/src]# tar -zxvf mongo-1.6.16.tgz

[root@localhost /usr/local/src]# cd mongo-1.6.16/

[root@localhost /usr/local/src/mongo-1.6.16]# /usr/local/php/bin/phpize

[root@localhost /usr/local/src/mongo-1.6.16]# ./configure --with-php-config=/usr/local/php/bin/php-config

[root@localhost /usr/local/src/mongo-1.6.16]# make && make install

[root@localhost /usr/local/src/mongo-1.6.16]# vim /usr/local/php/etc/php.ini

extension = mongo.so ; add this line

[root@localhost /usr/local/src/mongo-1.6.16]# /usr/local/php/bin/php -m |grep mongo

mongo

mongodb

Test the mongo extension:

1. First disable MongoDB user authentication, then create a test page:

[root@localhost ~]# vim /usr/lib/systemd/system/mongod.service # remove --auth

[root@localhost ~]# systemctl daemon-reload

[root@localhost ~]# systemctl restart mongod.service

[root@localhost ~]# vim /data/wwwroot/abc.com/index.php # edit the test page

<?php

$m = new MongoClient(); # connect

$db = $m->test; # select the database named "test"

$collection = $db->createCollection("runoob");

echo "Collection created successfully";

?>

2. Request the test page:

[root@localhost ~]# curl localhost/index.php

Collection created successfully

3. Check in MongoDB that the collection exists:

[root@localhost ~]# mongo --host 192.168.66.130 --port 27017

> use test

switched to db test

> show tables

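runoob # the collection exists, so the extension works

>

For more on connecting to MongoDB from PHP, see the following article:

http://www.runoob.com/mongodb/mongodb-php.html

Further reading

MongoDB security settings

http://www.mongoing.com/archives/631

Running JavaScript scripts in MongoDB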

http://www.jianshu.com/p/6bd8934bd1ca

21.33 mongodb副本集介紹

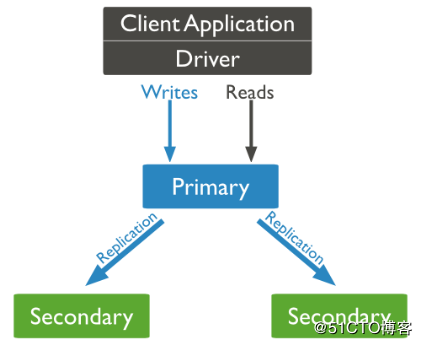

副本集(Replica Set)是一組MongoDB實例組成的集羣,由一個主(Primary)服務器和多個備份(Secondary)服務器構成。通過Replication,將數據的更新由Primary推送到其他實例上,在一定的延遲之後,每個MongoDB實例維護相同的數據集副本。通過維護冗餘的數據庫副本,能夠實現數據的異地備份,讀寫分離和自動故障轉移。

也就是說如果主服務器崩潰了,備份服務器會自動將其中一個成員升級爲新的主服務器。使用複製功能時,如果有一臺服務器宕機了,仍然可以從副本集的其他服務器上訪問數據。如果服務器上的數據損壞或者不可訪問,可以從副本集的某個成員中創建一份新的數據副本。

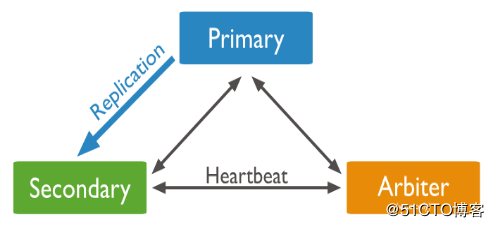

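Early versions of MongoDB used master-slave replication, with one master and one slave as in MySQL. The slave was read-only and could not take over automatically when the master went down. Master-slave mode has since been retired in favor of replica sets, which have one primary and several read-only secondaries. Members can be given priorities; when the primary goes down, the secondary with the highest priority takes over. The architecture also allows an arbiter, which only votes and stores no data. By default both reads and writes go to the primary, so to spread the load you must explicitly direct reads to a chosen server.

In short, a MongoDB replica set is a master-slave cluster with automatic failover, consisting of one primary node and one or more secondary nodes, similar to the MMM architecture for MySQL. For more on replica sets, see the official documentation:

https://docs.mongodb.com/manual/replication/

Replica set architecture diagram:

The principle is simple: there is one primary and at least one secondary (there can be several), and optionally an arbiter. When the primary goes down, the arbiter helps the remaining members agree that it is gone. The primary itself may believe it is still up, which would lead to split brain, and the arbiter role exists to prevent that. Split brain must never happen in a database, because it corrupts the data, and corrupted data is very hard to repair.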
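The arithmetic behind the arbiter can be sketched in a few lines. A primary can only be elected (or stay primary) while a strict majority of the voting members can see each other. The function names below are made up for this illustration, and the sketch deliberately ignores everything else that goes into a real election.

```javascript
// Number of votes needed to elect a primary in a set with `voters` voting members.
function majority(voters) {
  return Math.floor(voters / 2) + 1;
}

// Can the members that still see each other elect (or keep) a primary?
function canElect(voters, reachable) {
  return reachable >= majority(voters);
}

// Two data nodes, no arbiter: losing one node leaves 1 of 2 votes.
console.log(canElect(2, 1)); // false

// Two data nodes plus an arbiter: losing one node leaves 2 of 3 votes.
console.log(canElect(3, 2)); // true

// A primary cut off alone from a 3-member set holds 1 of 3 votes,
// so it cannot remain primary while the other side elects a new one.
console.log(canElect(3, 1)); // false
```

Because two disjoint groups can never both hold a majority, at most one side of a network partition can have a primary, which is what rules out split brain.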

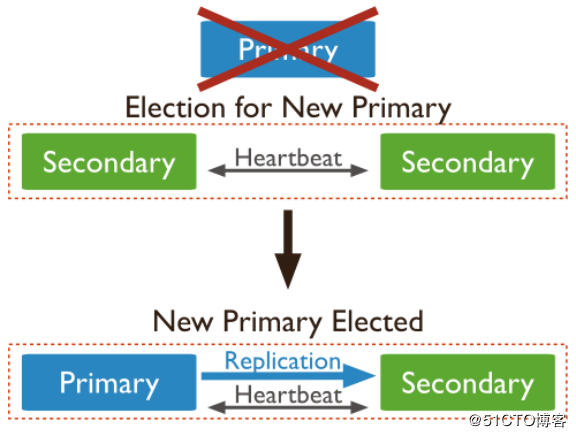

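Failover diagram

Note: when the primary goes down, one of the secondaries becomes the new primary and the other remains a secondary. With MySQL master-slave replication, even in a one-master, many-slaves setup, a slave can be promoted when the master fails, but the switch has to be made by hand. In a MongoDB replica set it is fully automatic: when the primary goes down, one secondary becomes the new primary, and the other secondary recognizes the new primary on its own.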

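21.34 Building a MongoDB replica set

Use three machines to build the replica set:

192.168.66.130 (primary)

192.168.66.131 (secondary)

192.168.66.132 (secondary)

MongoDB is already installed on all three machines.

Setup steps:

1. Edit the configuration file on all three machines, changing or adding the following: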

Primary machine:

[root@primary ~]# vim /etc/mongod.conf

net:

  port: 27017

  bindIp: 127.0.0.1,192.168.66.130 # Enter 0.0.0.0,:: to bind to all IPv4 and IPv6 addresses or, alternatively, use the net.bindIpAll setting.

# Note: for a replica set, bindIp must include both the loopback address and the internal network IP

#replication: // remove the leading # and add two lines

replication:

  oplogSizeMB: 20 # sets the oplog size; note the two leading spaces

  replSetName: luo # name of the replica set; again with two leading spaces

# restart the mongod service

[root@primary ~]# systemctl restart mongod

[root@primary ~]# ps aux |grep mongod

mongod 13085 9.6 5.7 1100792 56956 ? Sl 13:30 0:00 /usr/bin/mongod -f /etc/mongod.conf

root 13120 0.0 0.0 112720 972 pts/1 S+ 13:30 0:00 grep --color=auto mongod

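Secondary1 machine:

[root@secondary1 ~]# vim /etc/mongod.conf

net:

  port: 27017

  bindIp: 127.0.0.1,192.168.66.131 # Enter 0.0.0.0,:: to bind to all IPv4 and IPv6 addresses or, alternatively, use the net.bindIpAll setting.

# Note: for a replica set, bindIp must include both the loopback address and the internal network IP

#replication: // remove the leading # and add two lines

replication:

  oplogSizeMB: 20 # sets the oplog size; note the two leading spaces

  replSetName: luo # name of the replica set; again with two leading spaces

# restart the mongod service

[root@secondary1 ~]# systemctl restart mongod

[root@secondary1 ~]# ps aux |grep mongod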

mongod 4012 0.8 5.6 1104640 55916 ? Sl 13:33 0:03 /usr/bin/mongod -f /etc/mongod.conf

Secondary2 machine:

[root@secondary2 ~]# vim /etc/mongod.conf

net:

  port: 27017

  bindIp: 127.0.0.1,192.168.66.132 # Enter 0.0.0.0,:: to bind to all IPv4 and IPv6 addresses or, alternatively, use the net.bindIpAll setting.

# Note: for a replica set, bindIp must include both the loopback address and the internal network IP

#replication: // remove the leading # and add two lines

replication:

  oplogSizeMB: 20 # sets the oplog size; note the two leading spaces

  replSetName: luo # name of the replica set; again with two leading spaces

# restart the mongod service

[root@secondary2 ~]# systemctl restart mongod

[root@secondary2 ~]# ps aux |grep mongod

mongod 1407 17.0 5.3 1101172 53064 ? Sl 13:41 0:01 /usr/bin/mongod -f /etc/mongod.conf

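root 1442 0.0 0.0 112720 964 pts/0 R+ 13:41 0:00 grep --color=auto mongod

2. Stop the firewall on all three machines, or flush the iptables rules.

3. Connect to MongoDB on the primary machine by running mongo there, then configure the replica set:

[root@primary ~]# mongo

# list the IP of each of the three machines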

> config={_id:"luo",members:[{_id:0,host:"192.168.66.130:27017"},{_id:1,host:"192.168.66.131:27017"},{_id:2,host:"192.168.66.132:27017"}]}

{

"_id" : "luo",

"members" : [

{

"_id" : 0,

"host" : "192.168.66.130:27017"

},

{

"_id" : 1,

"host" : "192.168.66.131:27017"

},

{

"_id" : 2,

"host" : "192.168.66.132:27017"

}

]

}

> rs.initiate(config) # initialize the replica set

{

"ok" : 1,

"operationTime" : Timestamp(1535266738, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535266738, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

luo:SECONDARY> rs.status() # check the status

{

"set" : "luo",

"date" : ISODate("2018-08-26T06:59:18.812Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1535266751, 5),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1535266751, 5),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1535266751, 5),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1535266751, 5),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1535266751, 1),

"members" : [

{

"_id" : 0,

"name" : "192.168.66.130:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 168,

"optime" : {

"ts" : Timestamp(1535266751, 5),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-26T06:59:11Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1535266749, 1),

"electionDate" : ISODate("2018-08-26T06:59:09Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.66.131:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 19,

"optime" : {

"ts" : Timestamp(1535266751, 5),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1535266751, 5),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-26T06:59:11Z"),

"optimeDurableDate" : ISODate("2018-08-26T06:59:11Z"),

"lastHeartbeat" : ISODate("2018-08-26T06:59:17.408Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T06:59:17.957Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.66.130:27017",

"syncSourceHost" : "192.168.66.130:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.66.132:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 19,

"optime" : {

"ts" : Timestamp(1535266751, 5),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1535266751, 5),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-26T06:59:11Z"),

"optimeDurableDate" : ISODate("2018-08-26T06:59:11Z"),

"lastHeartbeat" : ISODate("2018-08-26T06:59:17.408Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T06:59:17.959Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.66.130:27017",

"syncSourceHost" : "192.168.66.130:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1535266751, 5),

"$clusterTime" : {

"clusterTime" : Timestamp(1535266751, 5),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

In this output, check the stateStr of all three machines: the primary should show PRIMARY and the two secondaries should show SECONDARY.

If the two secondaries show "stateStr" : "STARTUP", do the following:

> config={_id:"luo",members:[{_id:0,host:"192.168.66.130:27017"},{_id:1,host:"192.168.66.131:27017"},{_id:2,host:"192.168.66.132:27017"}]}

> rs.reconfig(config)

Then run rs.status() again and make sure the secondaries have changed to SECONDARY.
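Typing the member list by hand is error-prone once there are more than a few hosts. As a minimal sketch, the configuration document can be generated from a list of IP addresses; the helper name buildReplSetConfig is hypothetical, and the output has the same shape as the config object used above.

```javascript
// Hypothetical helper: builds the document passed to rs.initiate().
function buildReplSetConfig(setName, hosts, port) {
  return {
    _id: setName, // must match replSetName in mongod.conf
    members: hosts.map((ip, index) => ({ _id: index, host: ip + ":" + port })),
  };
}

const config = buildReplSetConfig(
  "luo",
  ["192.168.66.130", "192.168.66.131", "192.168.66.132"],
  27017
);

console.log(JSON.stringify(config, null, 2));
```

Because the helper is plain JavaScript, the function could be pasted into the mongo shell and its result passed to rs.initiate(config).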

21.35 Testing the MongoDB replica set

1. On the primary, create a database and a collection:

luo:PRIMARY> use testdb

switched to db testdb

luo:PRIMARY> db.test.insert({AccountID:1,UserName:"luo",password:"123456"})

WriteResult({ "nInserted" : 1 })

luo:PRIMARY> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

testdb 0.000GB

luo:PRIMARY> show tables

test

2. Then check on a secondary whether the data from the primary has been replicated:

luo:SECONDARY> show dbs

2018-08-26T15:04:48.981+0800 E QUERY [js] Error: listDatabases failed:{

"operationTime" : Timestamp(1535267081, 1),

"ok" : 0,

"errmsg" : "not master and slaveOk=false",

"code" : 13435,

"codeName" : "NotMasterNoSlaveOk",

"$clusterTime" : {

"clusterTime" : Timestamp(1535267081, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

} :

_getErrorWithCode@src/mongo/shell/utils.js:25:13

Mongo.prototype.getDBs@src/mongo/shell/mongo.js:67:1

shellHelper.show@src/mongo/shell/utils.js:876:19

shellHelper@src/mongo/shell/utils.js:766:15

@(shellhelp2):1:1

luo:SECONDARY> rs.slaveOk() # run this command if you get the error above

luo:SECONDARY> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

testdb 0.000GB

luo:SECONDARY> use testdb

switched to db testdb

luo:SECONDARY> show tables

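test

As shown above, the data has been replicated to the secondary.

Changing replica set priorities and simulating a primary failure

Use the rs.config() command to view the priority of each machine: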

luo:PRIMARY> rs.config()

{

"_id" : "luo",

"version" : 1,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "192.168.66.130:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "192.168.66.131:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "192.168.66.132:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5b824fb2deea499ddf787ebe")

}

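}

The priority value is the machine's weight in elections; the default is 1 for every member.

Add a firewall rule that blocks traffic to simulate the primary going down:

# note: run this on the primary machine

[root@primary mongo]# iptables -I INPUT -p tcp --dport 27017 -j DROP

Then check the status on a secondary: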

luo:SECONDARY> rs.status()

{

"set" : "luo",

"date" : ISODate("2018-08-26T07:10:19.959Z"),

"myState" : 1,

"term" : NumberLong(2),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1535267410, 1),

"t" : NumberLong(2)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1535267410, 1),

"t" : NumberLong(2)

},

"appliedOpTime" : {

"ts" : Timestamp(1535267410, 1),

"t" : NumberLong(2)

},

"durableOpTime" : {

"ts" : Timestamp(1535267410, 1),

"t" : NumberLong(2)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1535267410, 1),

"members" : [

{

"_id" : 0,

"name" : "192.168.66.130:27017",

"health" : 0,

"state" : 8,

"stateStr" : "(not reachable/healthy)",

"uptime" : 0,

"optime" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"optimeDurable" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"optimeDate" : ISODate("1970-01-01T00:00:00Z"),

"optimeDurableDate" : ISODate("1970-01-01T00:00:00Z"),

"lastHeartbeat" : ISODate("2018-08-26T07:10:12.109Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T07:10:19.645Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "Couldn't get a connection within the time limit",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : -1

},

{

"_id" : 1,

"name" : "192.168.66.131:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 842,

"optime" : {

"ts" : Timestamp(1535267410, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2018-08-26T07:10:10Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1535267391, 1),

"electionDate" : ISODate("2018-08-26T07:09:51Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 2,

"name" : "192.168.66.132:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 679,

"optime" : {

"ts" : Timestamp(1535267410, 1),

"t" : NumberLong(2)

},

"optimeDurable" : {

"ts" : Timestamp(1535267410, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2018-08-26T07:10:10Z"),

"optimeDurableDate" : ISODate("2018-08-26T07:10:10Z"),

"lastHeartbeat" : ISODate("2018-08-26T07:10:19.555Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T07:10:19.302Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.66.131:27017",

"syncSourceHost" : "192.168.66.131:27017",

"syncSourceId" : 1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1535267410, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535267410, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

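}

As shown above, the stateStr of 192.168.66.130 has become not reachable/healthy, and 192.168.66.131 has automatically been promoted: its stateStr is now PRIMARY. Because all priorities are equal, which secondary takes over is somewhat random.

Next we assign a priority to each machine so that the machine with the highest priority becomes the primary.

1. First delete the firewall rule on 192.168.66.130:

[root@primary mongo]# iptables -D INPUT -p tcp --dport 27017 -j DROP

2. Because 192.168.66.131 is now the primary, set the priorities of all machines on 131:

luo:PRIMARY> cfg = rs.conf()

luo:PRIMARY> cfg.members[0].priority = 3 # set the priority of the member with id 0, the 130 machine, to 3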

3

luo:PRIMARY> cfg.members[1].priority = 2

2

luo:PRIMARY> cfg.members[2].priority = 1

1

luo:PRIMARY> rs.reconfig(cfg)

{

"ok" : 1,

"operationTime" : Timestamp(1535267733, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1535267733, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

3. 192.168.66.130 should now have become the primary. Run rs.config() on 192.168.66.130 to check:

luo:PRIMARY> rs.config()

{

"_id" : "luo",

"version" : 2,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "192.168.66.130:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 3,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "192.168.66.131:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 2,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "192.168.66.132:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5b824fb2deea499ddf787ebe")

}

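}

The three machines now have priorities 3, 2, and 1. If the primary goes down, the next primary is chosen among the candidates according to priority.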
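The effect of these priorities can be pictured with a deliberately simplified model: among the members that are still reachable, prefer the one with the highest priority. Real elections also take votes and replication progress into account, so this is only a sketch of the tendency, not of MongoDB's election protocol. The function name and the healthy flag are assumptions made for the example.

```javascript
// Simplified model: among reachable members, prefer the highest priority.
// Members with priority 0 are never eligible to become primary.
function preferredPrimary(members) {
  const candidates = members.filter((m) => m.healthy && m.priority > 0);
  if (candidates.length === 0) return null;
  return candidates.reduce((best, m) => (m.priority > best.priority ? m : best));
}

const members = [
  { host: "192.168.66.130:27017", priority: 3, healthy: true },
  { host: "192.168.66.131:27017", priority: 2, healthy: true },
  { host: "192.168.66.132:27017", priority: 1, healthy: true },
];

console.log(preferredPrimary(members).host); // 192.168.66.130:27017

members[0].healthy = false; // simulate losing the 130 machine
console.log(preferredPrimary(members).host); // 192.168.66.131:27017
```

With the priorities set above, 130 is preferred while it is up, and 131 is next in line if it fails.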

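21.36 Introduction to MongoDB sharding

Sharding is the process of splitting a database and distributing it across different machines. Spreading the data over several machines lets you store more data and handle more load without needing one powerful server. The basic idea is to cut a collection into small chunks and scatter those chunks across several shards, so that each shard is responsible for only part of the data, while a balancer evens out the shards by migrating chunks. Operations go through a routing process called mongos, which knows which data lives on which shard (via the config servers). Most deployments shard to solve a disk-space problem; write performance may get worse, and queries should avoid spanning shards wherever possible.

When to use sharding:

1. The machine is running out of disk space. Sharding solves the disk-space problem.

2. A single mongod can no longer meet the write performance requirements. Sharding spreads the write load across the shards, using each shard server's own resources.

3. You want to keep a large amount of data in memory to improve performance. As above, sharding makes use of each shard server's own resources.

In short, sharding splits a database and distributes large collections across different servers; the building block of a sharded cluster is the replica set. For example, 100 GB of data can be divided into 10 parts stored on 10 servers, so each machine holds only 10 GB. Sharding is generally used only by large enterprises or companies with very large data volumes. MongoDB stores and accesses sharded data through the mongos process (the router), so mongos is the core of the sharded architecture and the single entry point to it. Clients do not know whether the data is sharded; they simply send their reads and writes to mongos.

Although sharding spreads the data over many servers, every node still needs a standby to keep the data highly available.

When the system needs more space or resources, sharding lets us scale out on demand simply by adding machines running MongoDB to the sharded cluster.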

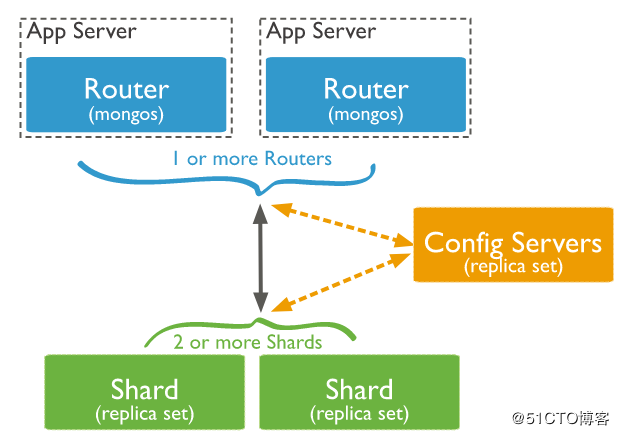

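MongoDB sharding architecture diagram:

MongoDB sharding concepts:

• mongos: the entry point for requests to the cluster. All requests are coordinated through mongos, so the application does not need its own routing layer; mongos is the request dispatcher and forwards each request to the appropriate shard server. Production deployments usually run several mongos instances so that the failure of one does not make the whole cluster unreachable.

• config server: stores the metadata (routing and sharding configuration) for the whole cluster. mongos does not persist shard and routing information itself; it only caches it in memory, while the config servers actually store it. When mongos starts for the first time or restarts, it loads the configuration from the config servers; when that configuration later changes, every mongos is notified and updates its state, so it can keep routing accurately. Production deployments usually run several config servers, because they hold the shard routing metadata and it must not be lost.

• shard: a MongoDB instance that stores part of a collection's data. Each shard is a standalone mongod or a replica set; in production, every shard should be a replica set.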
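The routing role of mongos described above can be pictured with a toy chunk table. The chunk boundaries and shard names here are invented for illustration, and the sketch ignores caching, balancing, and hashed shard keys; it only shows how a shard-key value maps to a chunk and therefore to a shard, and why a range query may touch more than one shard.

```javascript
// Hypothetical chunk table, as the config servers might describe it:
// each chunk covers shard-key values in [min, max) and lives on one shard.
const chunks = [
  { min: -Infinity, max: 1000, shard: "shard1" },
  { min: 1000, max: 5000, shard: "shard2" },
  { min: 5000, max: Infinity, shard: "shard3" },
];

// What mongos conceptually does for a query on a single shard-key value.
function routeByKey(key) {
  const chunk = chunks.find((c) => key >= c.min && key < c.max);
  return chunk.shard;
}

// A query on the inclusive range [low, high] must visit every shard
// that owns a chunk overlapping that range.
function routeByRange(low, high) {
  const hit = chunks.filter((c) => low < c.max && high >= c.min).map((c) => c.shard);
  return Array.from(new Set(hit));
}

console.log(routeByKey(42));   // shard1
console.log(routeByKey(1000)); // shard2
console.log(routeByRange(900, 1200)); // touches shard1 and shard2
```

A query that does not include the shard key at all cannot be narrowed this way and has to be sent to every shard, which is why cross-shard queries are best avoided.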

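21.37/21.38/21.39 Building a MongoDB sharded cluster

Sharding setup: server planning

Resources are limited, so I use three machines, A, B, and C, for this demonstration:

A runs: mongos, config server, replica set 1 primary, replica set 2 arbiter, replica set 3 secondary

B runs: mongos, config server, replica set 1 secondary, replica set 2 primary, replica set 3 arbiter

C runs: mongos, config server, replica set 1 arbiter, replica set 2 secondary, replica set 3 primary

Port allocation: mongos 20000, config server 21000, replica set 1 27001, replica set 2 27002, replica set 3 27003

On all three machines, stop the firewalld service and disable SELinux, or add rules for the corresponding ports.

The IP addresses of the three machines are:

Machine A: 192.168.77.128

Machine B: 192.168.77.130

Machine C: 192.168.77.134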
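The plan above can be written down as data and turned into one replica set configuration per shard. This is only a sketch: the set names shard1 to shard3 are assumed for illustration, the helper name is made up, and "primary" here means the member intended to become primary, since the actual primary is decided by election.

```javascript
// Machines and ports as listed in the plan above.
const machines = { A: "192.168.77.128", B: "192.168.77.130", C: "192.168.77.134" };

// Which machine plays which role in each shard's replica set.
// The set names shard1..shard3 are assumed for illustration.
const plan = [
  { name: "shard1", port: 27001, primary: "A", secondary: "B", arbiter: "C" },
  { name: "shard2", port: 27002, primary: "B", secondary: "C", arbiter: "A" },
  { name: "shard3", port: 27003, primary: "C", secondary: "A", arbiter: "B" },
];

// Hypothetical helper: turns one row of the plan into a replica set config.
function toReplSetConfig(shard) {
  const host = (m) => machines[m] + ":" + shard.port;
  return {
    _id: shard.name,
    members: [
      { _id: 0, host: host(shard.primary) },
      { _id: 1, host: host(shard.secondary) },
      { _id: 2, host: host(shard.arbiter), arbiterOnly: true }, // votes, stores no data
    ],
  };
}

const shardConfigs = plan.map(toReplSetConfig);
console.log(JSON.stringify(shardConfigs[0], null, 2));
```

Rotating the arbiter across the three machines, as the plan does, means each machine stores data for two shards and only votes for the third.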

Sharding setup: creating directories

Create the directories needed by each role on all three machines:

mkdir -p /data/mongodb/mongos/log

mkdir -p /data/mongodb/config/{data,log}

mkdir -p /data/mongodb/shard1/{data,log}

mkdir -p /data/mongodb/shard2/{data,log}

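mkdir -p /data/mongodb/shard3/{data,log}

Sharding setup: config server configuration

Since MongoDB 3.4, the config servers must be deployed as a replica set.

Add the configuration file (on all three machines):

[root@localhost ~]# mkdir /etc/mongod/

[root@localhost ~]# vim /etc/mongod/config.conf # add the following content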

pidfilepath = /var/run/mongodb/configsrv.pid

dbpath = /data/mongodb/config/data

logpath = /data/mongodb/config/log/configsrv.log

logappend = true

bind_ip = 0.0.0.0 # the IP to listen on

port = 21000

fork = true

configsvr = true #declare this is a config db of a cluster;

replSet=configs # replica set name

maxConns=20000 # maximum number of connections

Start the config server on all three machines:

[root@localhost ~]# mongod -f /etc/mongod/config.conf # run on all three machines

about to fork child process, waiting until server is ready for connections.

forked process: 4183

child process started successfully, parent exiting

[root@localhost ~]# ps aux |grep mongo

mongod 2518 1.1 2.3 1544488 89064 ? Sl 09:57 0:42 /usr/bin/mongod -f /etc/mongod.conf

root 4183 1.1 1.3 1072404 50992 ? Sl 10:56 0:00 mongod -f /etc/mongod/config.conf

root 4240 0.0 0.0 112660 964 pts/0 S+ 10:57 0:00 grep --color=auto mongo

[root@localhost ~]# netstat -lntp |grep mongod

tcp 0 0 192.168.77.128:21000 0.0.0.0:* LISTEN 4183/mongod

tcp 0 0 192.168.77.128:27017 0.0.0.0:* LISTEN 2518/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 2518/mongod

Log in to port 21000 on any one of the machines and initialize the replica set:

[root@localhost ~]# mongo --host 192.168.77.128 --port 21000

> config = { _id: "configs", members: [ {_id : 0, host : "192.168.77.128:21000"},{_id : 1, host : "192.168.77.130:21000"},{_id : 2, host : "192.168.77.134:21000"}] }

{

"_id" : "configs",

"members" : [

{

"_id" : 0,

"host" : "192.168.77.128:21000"

},

{

"_id" : 1,

"host" : "192.168.77.130:21000"

},

{

"_id" : 2,

"host" : "192.168.77.134:21000"

}

]

}

> rs.initiate(config) # initialize the replica set

{

"ok" : 1,

"operationTime" : Timestamp(1515553318, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(1515553318, 1),

"electionId" : ObjectId("000000000000000000000000")

},

"$clusterTime" : {

"clusterTime" : Timestamp(1515553318, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

configs:SECONDARY> rs.status() # make sure every member is healthy

{

"set" : "configs",

"date" : ISODate("2018-08-25T03:03:40.244Z"),

"myState" : 1,

"term" : NumberLong(1),

"configsvr" : true,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1515553411, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1515553411, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1515553411, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1515553411, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.77.128:21000",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 415,

"optime" : {

"ts" : Timestamp(1515553411, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-25T03:03:31Z"),

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1515553329, 1),

"electionDate" : ISODate("2018-08-25T03:02:09Z"),

"configVersion" : 1,

"self" : true

},

{

"_id" : 1,

"name" : "192.168.77.130:21000",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 101,

"optime" : {

"ts" : Timestamp(1515553411, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1515553411, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-25T03:03:31Z"),

"optimeDurableDate" : ISODate("2018-08-25T03:03:31Z"),

"lastHeartbeat" : ISODate("2018-08-25T03:03:39.973Z"),

"lastHeartbeatRecv" : ISODate("2018-08-25T03:03:38.804Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.77.134:21000",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.77.134:21000",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 101,

"optime" : {

"ts" : Timestamp(1515553411, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1515553411, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-25T03:03:31Z"),

"optimeDurableDate" : ISODate("2018-08-25T03:03:31Z"),

"lastHeartbeat" : ISODate("2018-08-25T03:03:39.945Z"),

"lastHeartbeatRecv" : ISODate("2018-08-25T03:03:38.726Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.77.128:21000",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1515553411, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(1515553318, 1),

"electionId" : ObjectId("7fffffff0000000000000001")

},

"$clusterTime" : {

"clusterTime" : Timestamp(1515553411, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Sharding setup - shard configuration:

Add the configuration files (on all three machines):

[root@localhost ~]# vim /etc/mongod/shard1.conf # add the following content

pidfilepath = /var/run/mongodb/shard1.pid

dbpath = /data/mongodb/shard1/data

logpath = /data/mongodb/shard1/log/shard1.log

logappend = true

logRotate=rename

bind_ip = 0.0.0.0 # the IP to listen on

port = 27001

fork = true

replSet=shard1 # replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 # maximum number of connections

[root@localhost ~]# vim /etc/mongod/shard2.conf # add the following content

pidfilepath = /var/run/mongodb/shard2.pid

dbpath = /data/mongodb/shard2/data

logpath = /data/mongodb/shard2/log/shard2.log

logappend = true

logRotate=rename

bind_ip = 0.0.0.0 # the IP to listen on

port = 27002

fork = true

replSet=shard2 # replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 # maximum number of connections

[root@localhost ~]# vim /etc/mongod/shard3.conf # add the following content

pidfilepath = /var/run/mongodb/shard3.pid

dbpath = /data/mongodb/shard3/data

logpath = /data/mongodb/shard3/log/shard3.log

logappend = true

logRotate=rename

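bind_ip = 0.0.0.0 # the IP to listen on

port = 27003

fork = true

replSet=shard3 # replica set name

shardsvr = true #declare this is a shard db of a cluster;

maxConns=20000 # maximum number of connections

Once everything is configured, start the shards one by one; each must be started on all three machines:

1. Start shard1 first:

[root@localhost ~]# mongod -f /etc/mongod/shard1.conf # run on all three machines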

about to fork child process, waiting until server is ready for connections.

forked process: 13615

child process started successfully, parent exiting

[root@localhost ~]# ps aux |grep shard1

root 13615 0.7 1.3 1023224 52660 ? Sl 17:16 0:00 mongod -f /etc/mongod/shard1.conf

root 13670 0.0 0.0 112660 964 pts/0 R+ 17:17 0:00 grep --color=auto shard1

Then log in to port 27001 on machine 128 or 130 and initialize the replica set. Machine 134 cannot be used, because for shard1 port 27001 on 134 is the arbiter:

[root@localhost ~]# mongo --host 192.168.77.128 --port 27001

> use admin

switched to db admin

> config = { _id: "shard1", members: [ {_id : 0, host : "192.168.77.128:27001"}, {_id: 1,host : "192.168.77.130:27001"},{_id : 2, host : "192.168.77.134:27001",arbiterOnly:true}] }

{

"_id" : "shard1",

"members" : [

{

"_id" : 0,

"host" : "192.168.77.128:27001"

},

{

"_id" : 1,

"host" : "192.168.77.130:27001"

},

{

"_id" : 2,

"host" : "192.168.77.134:27001",

"arbiterOnly" : true

}

]

}

> rs.initiate(config) # initialize the replica set

{ "ok" : 1 }

shard1:SECONDARY> rs.status() # check the status

{

"set" : "shard1",

"date" : ISODate("2018-08-25T09:21:37.682Z"),

"myState" : 1,

"term" : NumberLong(1),

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1515576097, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1515576097, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1515576097, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1515576097, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.77.128:27001",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 317,

"optime" : {

"ts" : Timestamp(1515576097, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-25T09:21:37Z"),

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1515576075, 1),

"electionDate" : ISODate("2018-08-25T09:21:15Z"),

"configVersion" : 1,

"self" : true

},

{

"_id" : 1,

"name" : "192.168.77.130:27001",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 33,

"optime" : {

"ts" : Timestamp(1515576097, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1515576097, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-25T09:21:37Z"),

"optimeDurableDate" : ISODate("2018-08-25T09:21:37Z"),

"lastHeartbeat" : ISODate("2018-08-25T09:21:37.262Z"),

"lastHeartbeatRecv" : ISODate("2018-08-25T09:21:36.213Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.77.128:27001",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.77.134:27001",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER", # 134 is the arbiter

"uptime" : 33,

"lastHeartbeat" : ISODate("2018-08-25T09:21:37.256Z"),

"lastHeartbeatRecv" : ISODate("2018-08-25T09:21:36.024Z"),

"pingMs" : NumberLong(0),

"configVersion" : 1

}

],

"ok" : 1

}

2. Once shard1 is configured, start shard2:

[root@localhost ~]# mongod -f /etc/mongod/shard2.conf # start on all three machines

about to fork child process, waiting until server is ready for connections.

forked process: 13910

child process started successfully, parent exiting

[root@localhost ~]# ps aux |grep shard2

root 13910 1.9 1.2 1023224 50096 ? Sl 17:25 0:00 mongod -f /etc/mongod/shard2.conf

root 13943 0.0 0.0 112660 964 pts/0 S+ 17:25 0:00 grep --color=auto shard2

Log in to port 27002 on machine 130 or 134 and initialize the replica set. Machine 128 cannot be used, because for shard2 port 27002 on 128 is the arbiter:

[root@localhost ~]# mongo --host 192.168.77.130 --port 27002

> use admin

switched to db admin

> config = { _id: "shard2", members: [ {_id : 0, host : "192.168.77.128:27002" ,arbiterOnly:true},{_id : 1, host : "192.168.77.130:27002"},{_id : 2, host : "192.168.77.134:27002"}] }

{

"_id" : "shard2",

"members" : [

{

"_id" : 0,

"host" : "192.168.77.128:27002",

"arbiterOnly" : true

},

{

"_id" : 1,

"host" : "192.168.77.130:27002"

},

{

"_id" : 2,

"host" : "192.168.77.134:27002"

}

]

}

> rs.initiate(config)

{ "ok" : 1 }

shard2:SECONDARY> rs.status()

{

"set" : "shard2",

"date" : ISODate("2018-08-25T17:26:12.250Z"),

"myState" : 1,

"term" : NumberLong(1),

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1515605171, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1515605171, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1515605171, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1515605171, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.77.128:27002",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER", # arbiter

"uptime" : 42,

"lastHeartbeat" : ISODate("2018-08-25T17:26:10.792Z"),

"lastHeartbeatRecv" : ISODate("2018-08-25T17:26:11.607Z"),

"pingMs" : NumberLong(0),

"configVersion" : 1

},

{

"_id" : 1,

"name" : "192.168.77.130:27002",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY", # primary

"uptime" : 546,

"optime" : {

"ts" : Timestamp(1515605171, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-25T17:26:11Z"),

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1515605140, 1),

"electionDate" : ISODate("2018-08-25T17:25:40Z"),

"configVersion" : 1,

"self" : true

},

{

"_id" : 2,

"name" : "192.168.77.134:27002",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY", # secondary

"uptime" : 42,

"optime" : {

"ts" : Timestamp(1515605161, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1515605161, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-25T17:26:01Z"),

"optimeDurableDate" : ISODate("2018-08-25T17:26:01Z"),

"lastHeartbeat" : ISODate("2018-08-25T17:26:10.776Z"),

"lastHeartbeatRecv" : ISODate("2018-08-25T17:26:10.823Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.77.130:27002",

"configVersion" : 1

}

],

"ok" : 1

}

3. Next, start shard3:

[root@localhost ~]# mongod -f /etc/mongod/shard3.conf # run on all three machines

about to fork child process, waiting until server is ready for connections.

forked process: 14204

child process started successfully, parent exiting

[root@localhost ~]# ps aux |grep shard3

root 14204 2.2 1.2 1023228 50096 ? Sl 17:36 0:00 mongod -f /etc/mongod/shard3.conf

root 14237 0.0 0.0 112660 960 pts/0 S+ 17:36 0:00 grep --color=auto shard3

Then log in to port 27003 on machine 128 or 134 and initialize the replica set. Machine 130 cannot be used, because for shard3 port 27003 on 130 is the arbiter:

[root@localhost ~]# mongo --host 192.168.77.128 --port 27003

> use admin

switched to db admin

> config = { _id: "shard3", members: [ {_id : 0, host : "192.168.77.128:27003"}, {_id : 1, host : "192.168.77.130:27003", arbiterOnly:true}, {_id : 2, host : "192.168.77.134:27003"}] }

{

"_id" : "shard3",

"members" : [

{

"_id" : 0,

"host" : "192.168.77.128:27003"

},

{

"_id" : 1,

"host" : "192.168.77.130:27003",

"arbiterOnly" : true

},

{

"_id" : 2,

"host" : "192.168.77.134:27003"

}

]

}

> rs.initiate(config)

{ "ok" : 1 }

shard3:SECONDARY> rs.status()

{

"set" : "shard3",

"date" : ISODate("2018-08-26T09:39:47.530Z"),

"myState" : 1,

"term" : NumberLong(1),

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1515577180, 2),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1515577180, 2),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1515577180, 2),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1515577180, 2),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.77.128:27003",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY", # primary

"uptime" : 221,

"optime" : {

"ts" : Timestamp(1515577180, 2),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-26T09:39:40Z"),

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1515577179, 1),

"electionDate" : ISODate("2018-08-26T09:39:39Z"),

"configVersion" : 1,

"self" : true

},

{

"_id" : 1,

"name" : "192.168.77.130:27003",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER", # arbiter

"uptime" : 18,

"lastHeartbeat" : ISODate("2018-08-26T09:39:47.477Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T09:39:45.715Z"),

"pingMs" : NumberLong(0),

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.77.134:27003",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY", # secondary

"uptime" : 18,

"optime" : {

"ts" : Timestamp(1515577180, 2),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1515577180, 2),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-08-26T09:39:40Z"),

"optimeDurableDate" : ISODate("2018-08-26T09:39:40Z"),

"lastHeartbeat" : ISODate("2018-08-26T09:39:47.477Z"),

"lastHeartbeatRecv" : ISODate("2018-08-26T09:39:45.779Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.77.128:27003",

"configVersion" : 1

}

],

"ok" : 1

}

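Sharding setup - configuring the routing servers

mongos is configured last because it needs to know which machines act as config servers and which machines make up the shard replica sets.

1. Add the configuration file (on all three machines):

[root@localhost ~]# vim /etc/mongod/mongos.conf # add the following content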

pidfilepath = /var/run/mongodb/mongos.pid

logpath = /data/mongodb/mongos/log/mongos.log

logappend = true

bind_ip = 0.0.0.0 # the IP to listen on

port = 20000

fork = true

# the config servers to connect to, written as <replica set name>/<member list>; configs is the name of the config server replica set. Do not put spaces after the commas: a space becomes part of the next host name and name resolution fails

configdb = configs/192.168.77.128:21000,192.168.77.130:21000,192.168.77.134:21000

maxConns=20000 # maximum number of connections

2. Then start the mongos service on all three machines. Note the command: everything before used mongod, here it is mongos:

[root@localhost ~]# mongos -f /etc/mongod/mongos.conf # run on all three machines

about to fork child process, waiting until server is ready for connections.

forked process: 15552

child process started successfully, parent exiting

[root@localhost ~]# ps aux |grep mongos # check on all three machines that the process has started

root 15552 0.2 0.3 279940 15380 ? Sl 18:26 0:00 mongos -f /etc/mongod/mongos.conf

root 15597 0.0 0.0 112660 964 pts/0 S+ 18:27 0:00 grep --color=auto mongos

[root@localhost ~]# netstat -lntp |grep mongos # check on all three machines that the port is listening

tcp 0 0 0.0.0.0:20000 0.0.0.0:* LISTEN 15552/mongos
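The configdb value in mongos.conf is easy to get wrong by hand, because a space after a comma becomes part of the next host name and the lookup for that name fails. A minimal Python sketch that assembles the string from a host list avoids this. `build_configdb` is a hypothetical helper, and the replica set name, addresses and port are the ones used in this walkthrough.

```python
def build_configdb(replset_name, hosts, port):
    """Return '<replset>/<host:port>,<host:port>,...' with no whitespace."""
    members = [f"{host.strip()}:{port}" for host in hosts]
    return f"{replset_name}/" + ",".join(members)


# The stray leading spaces are deliberate: they are stripped by the helper.
CONFIG_HOSTS = ["192.168.77.128", " 192.168.77.130", " 192.168.77.134"]

if __name__ == "__main__":
    print("configdb = " + build_configdb("configs", CONFIG_HOSTS, 21000))
```

The printed line can be pasted into the configuration file as is.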

Sharding setup - enabling sharding

1. Log in to port 20000 on any one of the machines, then add all the shards to the router:

[root@localhost ~]# mongo --host 192.168.77.128 --port 20000

# add shard1

mongos> sh.addShard("shard1/192.168.77.128:27001,192.168.77.130:27001,192.168.77.134:27001")

{

"shardAdded" : "shard1", # this must be shard1

"ok" : 1, # note: this must be 1 for success

"$clusterTime" : {

"clusterTime" : Timestamp(1515580345, 6),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1515580345, 6)

}

# add shard2

mongos> sh.addShard("shard2/192.168.77.128:27002,192.168.77.130:27002,192.168.77.134:27002")

{

"shardAdded" : "shard2", # this must be shard2

"ok" : 1, # note: this must be 1 for success

"$clusterTime" : {

"clusterTime" : Timestamp(1515608789, 6),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1515608789, 6)

}

# add shard3

mongos> sh.addShard("shard3/192.168.77.128:27003,192.168.77.130:27003,192.168.77.134:27003")

{

"shardAdded" : "shard3", # this must be shard3

"ok" : 1, # note: this must be 1 for success

"$clusterTime" : {

"clusterTime" : Timestamp(1515608789, 14),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1515608789, 14)

}

Use the sh.status() command to check the sharding status and confirm that it is healthy:

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5a55823348aee75ba3928fea")

}

shards: # on success the shards and their state are listed here; state must be 1

{ "_id" : "shard1", "host" : "shard1/192.168.77.128:27001,192.168.77.130:27001", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/192.168.77.130:27002,192.168.77.134:27002", "state" : 1 }

{ "_id" : "shard3", "host" : "shard3/192.168.77.128:27003,192.168.77.134:27003", "state" : 1 }

active mongoses:

"3.6.1" : 1

autosplit:

Currently enabled: yes # on success this is yes

balancer:

Currently enabled: yes # on success this is yes

Currently running: no # no unless a balancing round is in progress right now; it only shows yes while chunks are being migrated

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard1 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)

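21.40 mongodb sharding test

1. Log in to port 20000 on any one of the machines:

[root@localhost ~]# mongo --host 192.168.77.128 --port 20000

2. Switch to the admin database and use either of the following commands to enable sharding for a database:

db.runCommand({ enablesharding : "testdb"})

sh.enableSharding("testdb")

Example: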

mongos> use admin

switched to db admin

mongos> sh.enableSharding("testdb")

{

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1515609562, 6),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1515609562, 6)

}

3. Use either of the following commands to specify the collection to shard and its shard key:

db.runCommand( { shardcollection : "testdb.table1",key : {id: 1} } )

sh.shardCollection("testdb.table1",{"id":1} )

Example:

mongos> sh.shardCollection("testdb.table1",{"id":1} )

{

"collectionsharded" : "testdb.table1",

"collectionUUID" : UUID("f98762a6-8b2b-4ae5-9142-3d8acc589255"),

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1515609671, 12),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1515609671, 12)

}

4. Switch to the testdb database just created and insert test data:

mongos> use testdb

switched to db testdb

mongos> for (var i = 1; i <= 10000; i++) db.table1.save({id:i,"test1":"testval1"})

WriteResult({ "nInserted" : 1 })

5. Then create a few more databases and collections:

mongos> sh.enableSharding("db1")

mongos> sh.shardCollection("db1.table1",{"id":1} )

mongos> sh.enableSharding("db2")

mongos> sh.shardCollection("db2.table1",{"id":1} )

mongos> sh.enableSharding("db3")

mongos> sh.shardCollection("db3.table1",{"id":1} )

6. Check the status:

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5a55823348aee75ba3928fea")

}

shards:

{ "_id" : "shard1", "host" : "shard1/192.168.77.128:27001,192.168.77.130:27001", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/192.168.77.130:27002,192.168.77.134:27002", "state" : 1 }

{ "_id" : "shard3", "host" : "shard3/192.168.77.128:27003,192.168.77.134:27003", "state" : 1 }

active mongoses:

"3.6.1" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard1 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)

{ "_id" : "db1", "primary" : "shard3", "partitioned" : true }

db1.table1

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard3 1 # db1 is stored on shard3

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard3 Timestamp(1, 0)

{ "_id" : "db2", "primary" : "shard1", "partitioned" : true }

db2.table1

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard1 1 # db2 is stored on shard1

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)

{ "_id" : "db3", "primary" : "shard3", "partitioned" : true }

db3.table1

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard3 1 # db3 is stored on shard3

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard3 Timestamp(1, 0)

{ "_id" : "testdb", "primary" : "shard2", "partitioned" : true }

testdb.table1

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard2 1 # testdb is stored on shard2

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard2 Timestamp(1, 0)

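As shown above, the databases just created are stored across the different shards, which shows that the sharded cluster has been set up successfully.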
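Each collection in the status output still has a single chunk, which is expected for so little data: a chunk is only split once it grows past the configured chunk size. The Python sketch below gives a rough lower bound on the chunk count for a given data size. It assumes the 64 MB default chunk size of the MongoDB 3.x/4.x releases used here, and `estimated_chunks` is a hypothetical helper, not a MongoDB API.

```python
import math

DEFAULT_CHUNK_SIZE_MB = 64  # assumed default for MongoDB 3.x/4.x


def estimated_chunks(data_size_mb, chunk_size_mb=DEFAULT_CHUNK_SIZE_MB):
    """Rough lower bound on the chunk count for a collection of this size."""
    if data_size_mb < 0:
        raise ValueError("data size must not be negative")
    # Even an empty sharded collection has one chunk covering the whole
    # key range from $minKey to $maxKey.
    return max(1, math.ceil(data_size_mb / chunk_size_mb))
```

Under this estimate a test collection of well below 1 MB stays in one chunk, so the balancer has nothing to move; seeing one collection spread over several shards would need far more data or a smaller chunk size.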

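The following command shows the status of a given collection:

db.<collection name>.stats()

21.41 mongodb backup and restore

1. First, back up one specific database:

[root@localhost ~]# mkdir /tmp/mongobak # create a directory to hold the backup files first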

[root@localhost ~]# mongodump --host 192.168.77.128 --port 20000 -d testdb -o /tmp/mongobak

2018-08-26T20:47:51.893+0800 writing testdb.table1 to

2018-08-26T20:47:51.968+0800 done dumping testdb.table1 (10000 documents)

[root@localhost ~]# ls /tmp/mongobak/ # a successful backup creates a directory

testdb

[root@localhost ~]# ls /tmp/mongobak/testdb/ # the directory contains the corresponding data files

table1.bson table1.metadata.json

[root@localhost /tmp/mongobak/testdb]# du -sh * # the data is stored in the .bson file

528K table1.bson

4.0K table1.metadata.json

In the mongodump command, -d specifies the database to back up and -o specifies the output path

2. Example of backing up all databases:

[root@localhost ~]# mongodump --host 192.168.77.128 --port 20000 -o /tmp/mongobak

2018-08-26T20:52:28.231+0800 writing admin.system.version to

2018-08-26T20:52:28.233+0800 done dumping admin.system.version (1 document)

2018-08-26T20:52:28.233+0800 writing testdb.table1 to

2018-08-26T20:52:28.234+0800 writing config.locks to

2018-08-26T20:52:28.234+0800 writing config.changelog to

2018-08-26T20:52:28.234+0800 writing config.lockpings to

2018-08-26T20:52:28.235+0800 done dumping config.locks (15 documents)

2018-08-26T20:52:28.236+0800 writing config.chunks to

2018-08-26T20:52:28.236+0800 done dumping config.lockpings (10 documents)

2018-08-26T20:52:28.236+0800 writing config.collections to

2018-08-26T20:52:28.236+0800 done dumping config.changelog (13 documents)

2018-08-26T20:52:28.236+0800 writing config.databases to

2018-08-26T20:52:28.237+0800 done dumping config.collections (5 documents)

2018-08-26T20:52:28.237+0800 writing config.shards to

2018-08-26T20:52:28.237+0800 done dumping config.chunks (5 documents)

2018-08-26T20:52:28.237+0800 writing config.version to

2018-08-26T20:52:28.238+0800 done dumping config.databases (4 documents)

2018-08-26T20:52:28.238+0800 writing config.mongos to

2018-08-26T20:52:28.238+0800 done dumping config.version (1 document)

2018-08-26T20:52:28.238+0800 writing config.migrations to

2018-08-26T20:52:28.239+0800 done dumping config.mongos (1 document)

2018-08-26T20:52:28.239+0800 writing db1.table1 to

2018-08-26T20:52:28.239+0800 done dumping config.shards (3 documents)

2018-08-26T20:52:28.239+0800 writing db2.table1 to

2018-08-26T20:52:28.239+0800 done dumping config.migrations (0 documents)

2018-08-26T20:52:28.239+0800 writing db3.table1 to

2018-08-26T20:52:28.241+0800 done dumping db2.table1 (0 documents)

2018-08-26T20:52:28.241+0800 writing config.tags to

2018-08-26T20:52:28.241+0800 done dumping db1.table1 (0 documents)

2018-08-26T20:52:28.242+0800 done dumping db3.table1 (0 documents)

2018-08-26T20:52:28.243+0800 done dumping config.tags (0 documents)

2018-08-26T20:52:28.272+0800 done dumping testdb.table1 (10000 documents)

[root@localhost ~]# ls /tmp/mongobak/

admin config db1 db2 db3 testdb

Without the -d option, all databases are backed up.

3. Besides whole databases, a single collection can also be backed up:

[root@localhost ~]# mongodump --host 192.168.77.128 --port 20000 -d testdb -c table1 -o /tmp/collectionbak

2018-08-26T20:56:55.300+0800 writing testdb.table1 to

2018-08-26T20:56:55.335+0800 done dumping testdb.table1 (10000 documents)

[root@localhost ~]# ls !$

ls /tmp/collectionbak

testdb

[root@localhost ~]# ls /tmp/collectionbak/testdb/

table1.bson table1.metadata.json

The -c option specifies the collection to back up; without -c, all collections in the database are backed up.
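The three mongodump variants (all databases, one database, one collection) differ only in whether -d and -c are present. A minimal Python sketch can assemble the argument list for a scheduled backup script. `mongodump_command` is a hypothetical helper; the flags are the ones used in the commands above, and the host, port and paths are those of this walkthrough.

```python
import shlex


def mongodump_command(host, port, out_dir, db=None, collection=None):
    """Return the mongodump argument list for a full, per-db or per-collection dump."""
    if collection and not db:
        raise ValueError("a collection can only be dumped together with its database")
    args = ["mongodump", "--host", host, "--port", str(port)]
    if db:
        args += ["-d", db]
    if collection:
        args += ["-c", collection]
    args += ["-o", out_dir]
    return args


if __name__ == "__main__":
    cmd = mongodump_command("192.168.77.128", 20000, "/tmp/collectionbak",
                            db="testdb", collection="table1")
    # Print the command instead of running it; shlex.join needs Python 3.8+.
    print(shlex.join(cmd))
```

To actually run the backup, the list could be handed to `subprocess.run(cmd, check=True)` on a machine where mongodump is installed.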

4. The mongoexport command exports a collection to a json file:

[root@localhost ~]# mongoexport --host 192.168.77.128 --port 20000 -d testdb -c table1 -o /tmp/table1.json # the export is a json file

2018-08-26T21:00:48.098+0800 connected to: 192.168.77.128:20000

2018-08-26T21:00:48.236+0800 exported 10000 records

[root@localhost ~]# ls !$

ls /tmp/table1.json

/tmp/table1.json

[root@localhost ~]# tail -n5 !$ # the file contains json data

tail -n5 /tmp/table1.json

{"_id":{"$oid":"5a55f036f6179723bfb03611"},"id":9996.0,"test1":"testval1"}

{"_id":{"$oid":"5a55f036f6179723bfb03612"},"id":9997.0,"test1":"testval1"}

{"_id":{"$oid":"5a55f036f6179723bfb03613"},"id":9998.0,"test1":"testval1"}

{"_id":{"$oid":"5a55f036f6179723bfb03614"},"id":9999.0,"test1":"testval1"}

{"_id":{"$oid":"5a55f036f6179723bfb03615"},"id":10000.0,"test1":"testval1"}
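As the tail output shows, the exported file holds one JSON document per line, with the ObjectId written as an object containing `$oid`. A minimal Python sketch for reading such a file back is given here; `load_export` is a hypothetical helper, and `SAMPLE` reuses two of the lines shown above instead of opening /tmp/table1.json.

```python
import json


def load_export(lines):
    """Parse mongoexport output: one JSON document per non-empty line."""
    return [json.loads(line) for line in lines if line.strip()]


SAMPLE = [
    '{"_id":{"$oid":"5a55f036f6179723bfb03614"},"id":9999.0,"test1":"testval1"}',
    '{"_id":{"$oid":"5a55f036f6179723bfb03615"},"id":10000.0,"test1":"testval1"}',
]

if __name__ == "__main__":
    docs = load_export(SAMPLE)
    print(len(docs), max(doc["id"] for doc in docs))
```

For the real file, `load_export(open("/tmp/table1.json"))` would work the same way, since iterating over a file yields its lines. The id values come back as floats because the legacy mongo shell stores plain numeric literals as doubles.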

mongodb data restore

1. The data has been backed up above, so now delete all the data in MongoDB first:

[root@localhost ~]# mongo --host 192.168.77.128 --port 20000

mongos> use testdb

switched to db testdb

mongos> db.dropDatabase()

{

"dropped" : "testdb",

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1515617938, 13),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1515617938, 13)

}

mongos> use db1

switched to db db1

mongos> db.dropDatabase()

{

"dropped" : "db1",

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1515617993, 19),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1515617993, 19)

}

mongos> use db2

switched to db db2

mongos> db.dropDatabase()

{

"dropped" : "db2",

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1515618003, 13),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1515618003, 13)

}

mongos> use db3

switched to db db3

mongos> db.dropDatabase()

{

"dropped" : "db3",

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1515618003, 34),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1515618003, 34)

}

mongos> show databases

admin 0.000GB

config 0.001GB

2. Restore all databases:

[root@localhost ~]# rm -rf /tmp/mongobak/config/ # the config and admin databases do not need to be restored, so delete their backup files first

[root@localhost ~]# rm -rf /tmp/mongobak/admin/

[root@localhost ~]# mongorestore --host 192.168.77.128 --port 20000 --drop /tmp/mongobak/

2018-08-26T21:11:40.031+0800 preparing collections to restore from

2018-08-26T21:11:40.033+0800 reading metadata for testdb.table1 from /tmp/mongobak/testdb/table1.metadata.json

2018-08-26T21:11:40.035+0800 reading metadata for db2.table1 from /tmp/mongobak/db2/table1.metadata.json

2018-08-26T21:11:40.040+0800 reading metadata for db3.table1 from /tmp/mongobak/db3/table1.metadata.json

2018-08-26T21:11:40.050+0800 reading metadata for db1.table1 from /tmp/mongobak/db1/table1.metadata.json

2018-08-26T21:11:40.086+0800 restoring testdb.table1 from /tmp/mongobak/testdb/table1.bson

2018-08-26T21:11:40.100+0800 restoring db2.table1 from /tmp/mongobak/db2/table1.bson

2018-08-26T21:11:40.102+0800 restoring indexes for collection db2.table1 from metadata

2018-08-26T21:11:40.118+0800 finished restoring db2.table1 (0 documents)

2018-08-26T21:11:40.123+0800 restoring db3.table1 from /tmp/mongobak/db3/table1.bson

2018-08-26T21:11:40.124+0800 restoring indexes for collection db3.table1 from metadata

2018-08-26T21:11:40.126+0800 restoring db1.table1 from /tmp/mongobak/db1/table1.bson

2018-08-26T21:11:40.172+0800 finished restoring db3.table1 (0 documents)

2018-08-26T21:11:40.173+0800 restoring indexes for collection db1.table1 from metadata

2018-08-26T21:11:40.185+0800 finished restoring db1.table1 (0 documents)

2018-08-26T21:11:40.417+0800 restoring indexes for collection testdb.table1 from metadata

2018-08-26T21:11:40.437+0800 finished restoring testdb.table1 (10000 documents)

2018-08-26T21:11:40.437+0800 done

[root@localhost ~]# mongo --host 192.168.77.128 --port 20000

mongos> show databases; # all the databases have been restored

admin 0.000GB

config 0.001GB

db1 0.000GB

db2 0.000GB

db3 0.000GB

testdb 0.000GB

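In the mongorestore command, --drop is optional; it drops each collection from the target before restoring it, and it is not recommended in production

3. Restore a specific database: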

[root@localhost ~]# mongorestore --host 192.168.77.128 --port 20000 -d testdb --drop /tmp/mongobak/testdb/

2018-08-26T21:15:40.185+0800 the --db and --collection args should only be used when restoring from a BSON file. Other uses are deprecated and will not exist in the future; use --nsInclude instead

2018-08-26T21:15:40.185+0800 building a list of collections to restore from /tmp/mongobak/testdb dir

2018-08-26T21:15:40.232+0800 reading metadata for testdb.table1 from /tmp/mongobak/testdb/table1.metadata.json

2018-08-26T21:15:40.241+0800 restoring testdb.table1 from /tmp/mongobak/testdb/table1.bson

2018-08-26T21:15:40.507+0800 restoring indexes for collection testdb.table1 from metadata

2018-08-26T21:15:40.529+0800 finished restoring testdb.table1 (10000 documents)

2018-08-26T21:15:40.529+0800 done

When restoring a specific database, point to the directory that holds the backup of that database.

4. Restore a specific collection:

[root@localhost ~]# mongorestore --host 192.168.77.128 --port 20000 -d testdb -c table1 --drop /tmp/mongobak/testdb/table1.bson

2018-08-26T21:18:14.097+0800 checking for collection data in /tmp/mongobak/testdb/table1.bson

2018-08-26T21:18:14.139+0800 reading metadata for testdb.table1 from /tmp/mongobak/testdb/table1.metadata.json

2018-08-26T21:18:14.149+0800 restoring testdb.table1 from /tmp/mongobak/testdb/table1.bson

2018-08-26T21:18:14.331+0800 restoring indexes for collection testdb.table1 from metadata

2018-08-26T21:18:14.353+0800 finished restoring testdb.table1 (10000 documents)

2018-08-26T21:18:14.353+0800 done

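Likewise, when restoring a specific collection, point to the .bson file that holds the backup of that collection.

5. Restore collection data from a json file:

[root@localhost ~]# mongoimport --host 192.168.77.128 --port 20000 -d testdb -c table1 --file /tmp/table1.json

Restoring collection data from a json file uses the mongoimport command; --file specifies the path to the json file.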