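In the previous installment we planned and built the basic architecture. That architecture still has plenty of problems, and we will keep working on them in the chapters that follow.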

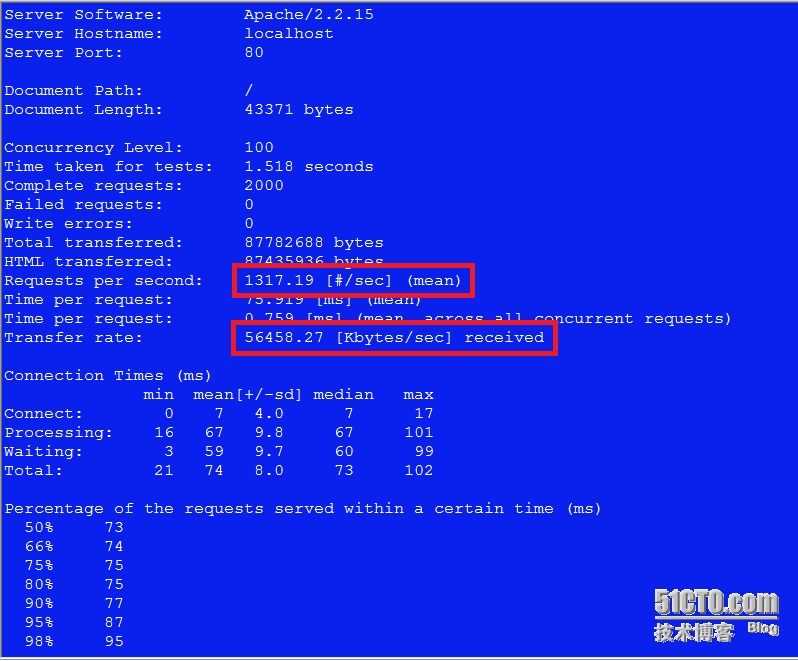

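First we run a basic ab test against the previous installment's architecture (concurrency 100, 2000 requests in total) to get a feel for the site's performance. Then, on top of the existing functionality, we add several layers of cache to improve it. (PS: all test machines are virtual machines, roughly 3.5 to 4.5 times slower than a mainstream server, so the test figures should be read with that ratio in mind.)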
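The benchmark described above corresponds to an ab invocation along the lines of `ab -c 100 -n 2000 http://www.king.com/` (the exact URL is an assumption, since the original does not show the command). As a rough sketch, the helpers below pull the throughput figure out of an ab report and scale it by the 3.5x to 4.5x factor mentioned above. This assumes throughput scales linearly with machine speed, which is only an approximation. The function names `ab_rps` and `scale_rps` are made up for this illustration.

```shell
# Extract the "Requests per second" value from an ab report read on stdin.
ab_rps() {
  awk '/^Requests per second:/ { print $4 }'
}

# Given a requests-per-second figure measured on the VM, print the estimated
# low and high figures for a mainstream server, assuming it is 3.5x to 4.5x
# faster (a linear-scaling assumption, not a measurement).
scale_rps() {
  awk -v rps="$1" 'BEGIN { printf "%.0f %.0f\n", rps * 3.5, rps * 4.5 }'
}

# Example with a made-up report line (not a result from the original test):
printf 'Requests per second:    200.00 [#/sec] (mean)\n' | ab_rps
scale_rps 200.00
```

On a real run the report can be piped straight in, for example `ab -c 100 -n 2000 http://www.king.com/ | ab_rps`.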

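New test: I recently signed up once more for a trial Microsoft cloud account. On a dual-core machine with 3 GB of RAM and the same software stack, the untuned results are as follows: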

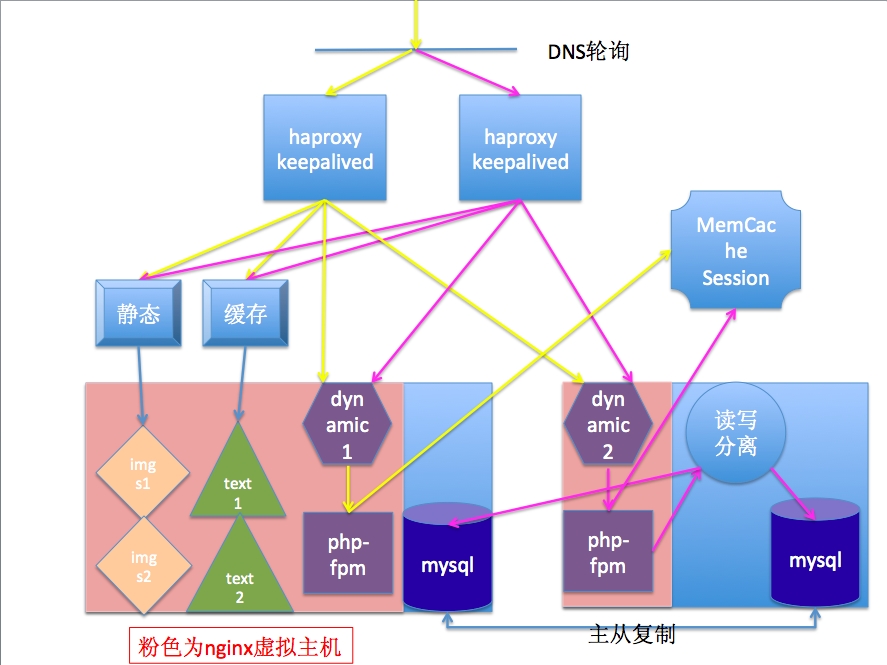

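As planned, we add two varnish instances on slave3.king.com and add memcache on slave4.king.com to share session data. The architecture diagram for this installment is shown below; compare it with Part 1, "Architecture for One Million Daily PVs, Part 1 (Building the Basic Architecture)".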

Steps:

i) Install and configure varnish to cache static content

1. Prepare the following files (slave3.king.com)

varnish-3.0.4-1.el6.x86_64.rpm

varnish-docs-3.0.4-1.el6.x86_64.rpm

varnish-libs-3.0.4-1.el6.x86_64.rpm

varnish-libs-devel-3.0.4-1.el6.x86_64.rpm

rpm -ivh varnish*.rpm

2. Configure varnish (slave3.king.com)

vim /etc/sysconfig/varnish

VARNISH_STORAGE="malloc,100M"

#

vim /etc/varnish/default.vcl

backend imgs1 {

.host = "imgs1.king.com";

.port = "80";

}

#

backend imgs2 {

.host = "imgs2.king.com";

.port = "80";

}

#

sub vcl_recv {

# haproxy in front already does the load balancing, so here we simply route each request by the host it asked for

if (req.http.host == "imgs1.king.com") {

set req.backend = imgs1;

} else {

set req.backend = imgs2;

}

return(lookup);

}

#

sub vcl_deliver {

set resp.http.X-Age = resp.http.Age;

unset resp.http.Age;

#

if (obj.hits > 0) {

set resp.http.X-Cache = "HIT via " + server.hostname;

} else {

set resp.http.X-Cache = "MISS";

}

}

3. Configure haproxy to send traffic to varnish (slave1.king.com, slave2.king.com)

vim /etc/haproxy/haproxy.cfg

backend img_servers

balance roundrobin

server imgsrv1 imgs1.king.com:6081 check maxconn 4000

server imgsrv2 imgs2.king.com:6081 check maxconn 4000

4. Change the settings for text content. The procedure is the same as above, and the same varnish can be reused (omitted).

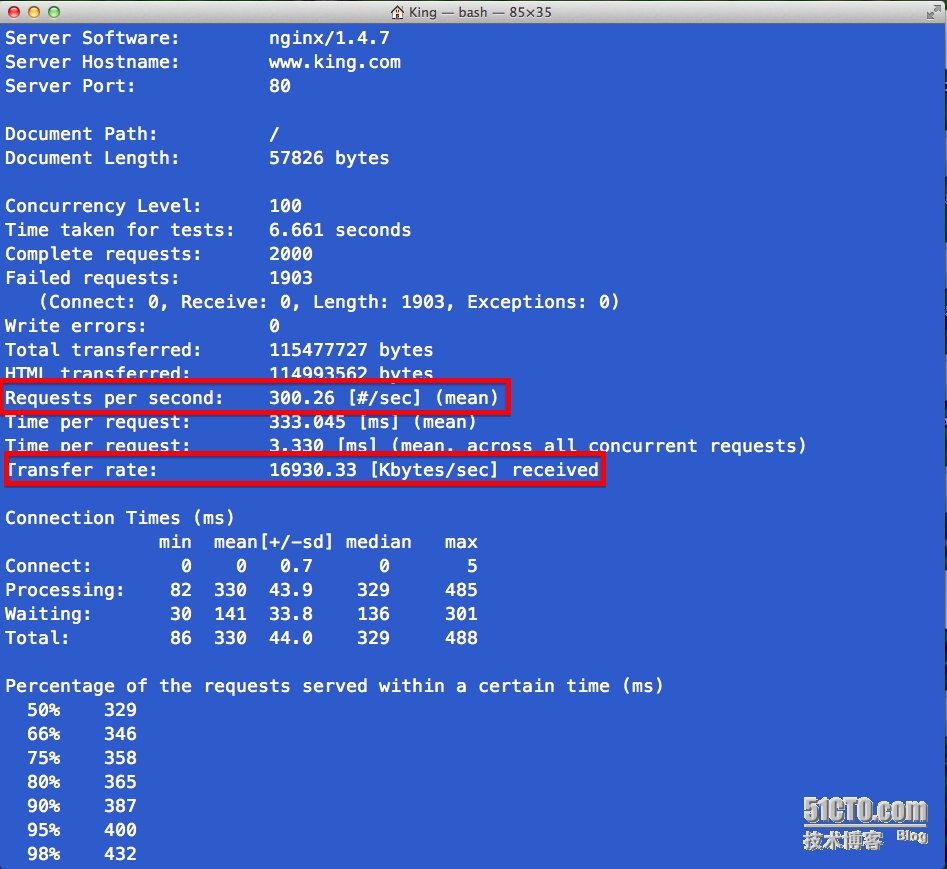

Site test after adding the cache (ab, concurrency 100, 2000 requests in total)
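Besides the ab numbers, the `X-Cache` header set in `vcl_deliver` above gives a direct way to see whether a response came out of varnish. The helper below is a minimal sketch that reads raw response headers and prints `HIT` or `MISS`. The function name `cache_status` is made up for this illustration.

```shell
# Print the first word of the X-Cache response header (HIT or MISS),
# reading raw HTTP headers from stdin. Prints nothing if the header is absent.
cache_status() {
  tr -d '\r' | awk -F': *' '
    !seen && tolower($1) == "x-cache" { split($2, w, " "); print w[1]; seen = 1 }
  '
}

# Example with hand-written headers in the shape vcl_deliver produces:
printf 'HTTP/1.1 200 OK\r\nX-Age: 12\r\nX-Cache: HIT via slave3.king.com\r\n\r\n' | cache_status
```

Against the live site this could be used as `curl -sI http://imgs1.king.com/logo.png | cache_status`, where `logo.png` stands in for any static file that actually exists. The first request for an object would normally report `MISS` and a repeat shortly afterwards `HIT`.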

ii) Install and configure memcache to share sessions

1. Install memcached (slave4.king.com)

################## 1. Resolve dependencies ####################

tar xf libevent-2.0.21-stable.tar.gz

cd libevent-2.0.21-stable

./configure --prefix=/usr/local/libevent

make && make install

echo "/usr/local/libevent/lib" > /etc/ld.so.conf.d/libevent.conf

ldconfig

################## 2. Install memcached ####################

tar xf memcached-1.4.15.tar.gz

cd memcached-1.4.15

./configure --prefix=/usr/local/memcached --with-libevent=/usr/local/libevent

make && make install

###### 3. Provide an init script (/etc/init.d/memcached) ###########

#!/bin/bash

#

# Init file for memcached

#

# chkconfig: - 86 14

# description: Distributed memory caching daemon

#

# processname: memcached

# config: /etc/sysconfig/memcached

#

. /etc/rc.d/init.d/functions

#

## Default variables

PORT="11211"

USER="nobody"

MAXCONN="1024"

CACHESIZE="64"

#

RETVAL=0

prog="/usr/local/memcached/bin/memcached"

desc="Distributed memory caching"

lockfile="/var/lock/subsys/memcached"

#

start() {

echo -n $"Starting $desc (memcached): "

daemon $prog -d -p $PORT -u $USER -c $MAXCONN -m $CACHESIZE

RETVAL=$?

[ $RETVAL -eq 0 ] && success && touch $lockfile || failure

echo

return $RETVAL

}

#

stop() {

echo -n $"Shutting down $desc (memcached): "

killproc $prog

RETVAL=$?

[ $RETVAL -eq 0 ] && success && rm -f $lockfile || failure

echo

return $RETVAL

}

#

restart() {

stop

start

}

#

reload() {

echo -n $"Reloading $desc ($prog): "

killproc $prog -HUP

RETVAL=$?

[ $RETVAL -eq 0 ] && success || failure

echo

return $RETVAL

}

#

case "$1" in

start)

start

;;

stop)

stop

;;

restart)

restart

;;

condrestart)

[ -e $lockfile ] && restart

RETVAL=$?

;;

reload)

reload

;;

status)

status $prog

RETVAL=$?

;;

*)

echo $"Usage: $0 {start|stop|restart|condrestart|reload|status}"

RETVAL=1

esac

#

exit $RETVAL

################# 4. Enable the script ####################

chmod +x /etc/init.d/memcached

chkconfig --add memcached

service memcached start

2. Configure PHP to support memcache (slave3.king.com, slave4.king.com)

tar xf memcache-2.2.7.tgz

cd memcache-2.2.7

/usr/local/php/bin/phpize

./configure --enable-memcache --with-php-config=/usr/local/php/bin/php-config

make && make install

# When the installation finishes, a line like the following appears; copy the path after the colon

Installing shared extensions: /usr/local/php/lib/php/extensions/no-debug-non-zts-20100525/memcache.so

# Edit /etc/php.ini and add the following line near the "dynamically loaded extension" section to load the memcache extension:

extension=/usr/local/php/lib/php/extensions/no-debug-non-zts-20100525/memcache.so
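Before moving on, it is worth checking that PHP actually loads the new extension. The sketch below filters the module list printed by `php -m`. It assumes the CLI binary under /usr/local/php/bin reads the same php.ini as php-fpm, which may not hold on every setup. The function name `has_memcache_ext` is made up for this illustration.

```shell
# Succeed if a module list on stdin (as printed by `php -m`) contains a line
# that is exactly "memcache", ignoring case. "memcached" does not match.
has_memcache_ext() {
  grep -qix 'memcache'
}

# Example with a hand-written module list:
printf '[PHP Modules]\nCore\nmemcache\nsession\n' | has_memcache_ext \
  && echo "memcache extension loaded"
```

On the PHP nodes this could be run as `/usr/local/php/bin/php -m | has_memcache_ext && echo ok`.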

3. Implement session sharing (slave3.king.com, slave4.king.com)

Edit php.ini and make sure the following two parameters are set as shown:

session.save_handler = memcache

session.save_path = "tcp://172.16.43.4:11211?persistent=1&weight=1&timeout=1&retry_interval=15"

Finally, restart the php-fpm service on slave3.king.com and slave4.king.com.

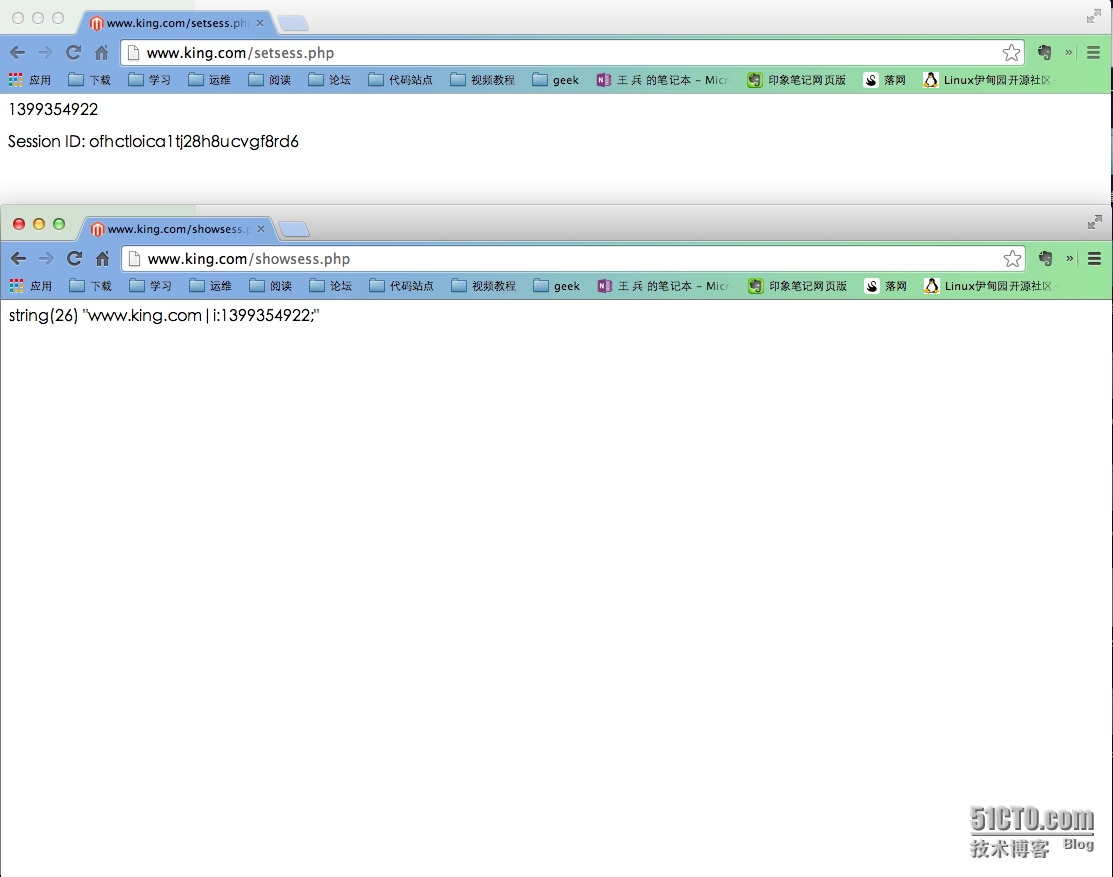
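To see whether sessions really land in memcached, its plain-text `stats` command can be queried at the address used in `session.save_path`. The helper below is a small sketch that picks one counter out of a stats reply. It assumes a tool such as `nc` is available to send the command, and the function name `memcached_stat` is made up for this illustration.

```shell
# Print the value of one counter from a memcached "stats" reply read on stdin.
# Reply lines have the form "STAT <name> <value>" and end with "END".
memcached_stat() {
  tr -d '\r' | awk -v name="$1" '$1 == "STAT" && $2 == name { print $3 }'
}

# Example with a hand-written fragment of a stats reply:
printf 'STAT pid 1234\r\nSTAT curr_items 3\r\nEND\r\n' | memcached_stat curr_items
```

Against the real server this might look like `printf 'stats\r\nquit\r\n' | nc 172.16.43.4 11211 | memcached_stat curr_items`. The `curr_items` counter would be expected to grow once clients start creating sessions.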

4. Test

Create the PHP page /nfsshared/html/setsess.php, which starts a session for the client:

<?php

session_start();

if (!isset($_SESSION['www.king.com'])) {

$_SESSION['www.king.com'] = time();

}

print $_SESSION['www.king.com'];

print "<br><br>";

print "Session ID: " . session_id();

?>


Create the PHP page /nfsshared/html/showsess.php, which takes the current user's session ID and dumps what memcached stores under it:

<?php

session_start();

$memcache_obj = new Memcache;

$memcache_obj->connect('172.16.43.4', 11211);

$mysess=session_id();

var_dump($memcache_obj->get($mysess));

$memcache_obj->close();

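?>

With memcache in place, refresh www.king.com repeatedly. Even with DNS round-robin and haproxy's scheduling spreading the requests across nodes, the session stored in memcache does not change, which shows that session sharing works.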
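The manual refresh test can also be scripted. The sketch below checks that a series of responses are all identical, which is what setsess.php should produce when every backend sees the same session. The function name `all_same` and the cookie jar file name are made up for this illustration.

```shell
# Read one response per line on stdin; succeed only if at least one line was
# read and every line is identical.
all_same() {
  [ "$(sort -u | wc -l | tr -d ' ')" = "1" ]
}

# Example with two hand-written responses in the shape setsess.php prints:
printf '1700000000<br><br>Session ID: abc\n1700000000<br><br>Session ID: abc\n' \
  | all_same && echo "session shared"

# Against the live site (not run here), reusing one cookie jar across requests:
#   for i in 1 2 3 4 5; do
#     curl -s -b jar.txt -c jar.txt http://www.king.com/setsess.php; echo
#   done | all_same && echo "session shared"
```

The extra `echo` in the loop adds the newline that setsess.php itself does not print, so each response ends up on its own line.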