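Preface

Requirements

Use ELK to collect MySQL slow query logs, and use the grok plugin to parse each log entry into structured JSON fields.

Deployment plan

test101 (10.0.0.101): MySQL, filebeat

test102 (10.0.0.102): elasticsearch, kibana

test103 (10.0.0.103): logstash

Lab environment versions

OS: CentOS 7.3

Database versions: three were tested

1. MariaDB 5.5 installed with yum (Server version: 5.5.60-MariaDB MariaDB Server)

2. MariaDB 10.4 installed with yum (Server version: 10.4.0-MariaDB-log MariaDB Server)

3. MySQL 5.7.20 built from source

ELK version: 6.5.3

Procedure (using MariaDB 5.5.60 as the example)

1. Install MariaDB and prepare the database environment

1.1 Install the service

[root@test101 ~]# yum -y install mariadb*   # installs version 5.5.60 by default

1.2 Edit the configuration file to enable the slow query log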

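Add the following three lines to /etc/my.cnf, under the [mysqld] section, to enable the slow query log:

slow_query_log

long_query_time = 2   # threshold of 2 seconds: any statement that runs longer than 2 seconds is written to the slow log

slow_query_log_file = "/var/lib/mysql/test101-slow.log"   # location of the slow query log file

Restart MariaDB:

[root@test101 ~]# systemctl restart mariadb

1.3 Create a test database and import data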

1) First create a large data file:

[root@test101 ~]# seq 1 19999999 > /tmp/big

2) Log in to the database and create the test database:

[root@test101 ~]# mysql

MariaDB [(none)]> create database test101;   # create the test database

MariaDB [(none)]> use test101;

MariaDB [test101]> create table table1(id int(10) not null) engine=innodb;   # create the test table

3) Import the large file /tmp/big created above into the test table:

MariaDB [test101]> show tables;

+-------------------+
| Tables_in_test101 |
+-------------------+
| table1            |
+-------------------+

1 row in set (0.00 sec)

MariaDB [test101]> load data local infile '/tmp/big' into table table1;   # note: do not omit LOCAL; without it the load may fail with "ERROR 13 (HY000): Can't get stat of '/tmp/big' (Errcode: 2)"

Query OK, 19999999 rows affected (1 min 47.64 sec)

Records: 19999999 Deleted: 0 Skipped: 0 Warnings: 0

MariaDB [test101]>

The test data has been imported successfully.

1.4 Generate a slow query log entry

Query one row from the database:

MariaDB [test101]> select * from table1 where id=258;

+-----+

| id |

+-----+

| 258 |

+-----+

1 row in set (11.76 sec)

MariaDB [test101]>

Then check the slow query log file: the corresponding entry has been written, and the database is ready:

[root@test101 mysql]# tailf /var/lib/mysql/test101-slow.log

# Time: 181217 15:23:39

# User@Host: root[root] @ localhost []

# Thread_id: 2 Schema: test101 QC_hit: No

# Query_time: 11.758867 Lock_time: 0.000106 Rows_sent: 1 Rows_examined: 19999999

SET timestamp=1545031419;

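select * from table1 where id=258;

2. Deploy the ELK tools

2.1 Install filebeat on test101 and elasticsearch/kibana on test102

(Installation and basic configuration are omitted.)

2.2 Install logstash on test103

(Installation steps and basic configuration are omitted.)

1) Create the logstash pipeline configuration file: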

[root@test103 conf.d]# cat /etc/logstash/conf.d/logstash-syslog.conf

# Sample Logstash configuration for creating a simple

# Beats -> Logstash -> Elasticsearch pipeline.

input {

beats {

port => 5044

}

}

output {

elasticsearch {

hosts => ["http://10.0.0.102:9200"]

index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"

#user => "elastic"

#password => "changeme"

}

stdout {

codec => rubydebug

}

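}

2.3 Start the services and check log collection

Start filebeat on test101 and elasticsearch on test102, then start logstash on test103 in the foreground and watch the collected events on the console.

1) On test101, run a query in MySQL (make sure logstash has finished starting in the foreground before running the query):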

MariaDB [test101]> select * from table1 where id=358;

+-----+

| id |

+-----+

| 358 |

+-----+

1 row in set (13.47 sec)

MariaDB [test101]>

2) On test101, the slow query log now contains the entry:

[root@test101 mysql]# tailf test101-slow.log

# Time: 181218 8:58:30

# User@Host: root[root] @ localhost []

# Thread_id: 3 Schema: test101 QC_hit: No

# Query_time: 13.405630 Lock_time: 0.000271 Rows_sent: 1 Rows_examined: 19999999

SET timestamp=1545094710;

select * from table1 where id=358;

3) Logstash has collected the entry, but the six lines of the log entry above arrived as six separate messages:

[root@test103 logstash]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logstash-syslog.conf

....... # some startup messages omitted

[INFO ] 2018-12-18 08:51:48.669 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

# run the MySQL query above only after this message appears

{

"message" => "# Time: 181218 8:58:30",

"@timestamp" => 2018-12-18T00:58:33.396Z,

"prospector" => {

"type" => "log"

},

"input" => {

"type" => "log"

},

"@version" => "1",

"host" => {

"os" => {

"codename" => "Core",

"platform" => "centos",

"version" => "7 (Core)",

"family" => "redhat"

},

"name" => "test101",

"containerized" => true,

"architecture" => "x86_64",

"id" => "9fcb0c7f28e449aabad922d1ba1f0afc"

},

"beat" => {

"name" => "test101",

"hostname" => "test101",

"version" => "6.5.3"

},

"tags" => [

[0] "beats_input_codec_plain_applied"

],

"offset" => 252,

"source" => "/var/lib/mysql/test101-slow.log"

}

{

"message" => "# User@Host: root[root] @ localhost []",

"@timestamp" => 2018-12-18T00:58:33.398Z,

"prospector" => {

"type" => "log"

},

"input" => {

"type" => "log"

},

"@version" => "1",

"host" => {

"containerized" => true,

"name" => "test101",

"architecture" => "x86_64",

"os" => {

"codename" => "Core",

"platform" => "centos",

"version" => "7 (Core)",

"family" => "redhat"

},

"id" => "9fcb0c7f28e449aabad922d1ba1f0afc"

},

"beat" => {

"name" => "test101",

"version" => "6.5.3",

"hostname" => "test101"

},

"tags" => [

[0] "beats_input_codec_plain_applied"

],

"source" => "/var/lib/mysql/test101-slow.log",

"offset" => 276

}

{

"message" => "# Thread_id: 3 Schema: test101 QC_hit: No",

"@timestamp" => 2018-12-18T00:58:33.398Z,

"prospector" => {

"type" => "log"

},

"input" => {

"type" => "log"

},

"@version" => "1",

"host" => {

"os" => {

"family" => "redhat",

"platform" => "centos",

"version" => "7 (Core)",

"codename" => "Core"

},

"name" => "test101",

"containerized" => true,

"architecture" => "x86_64",

"id" => "9fcb0c7f28e449aabad922d1ba1f0afc"

},

"beat" => {

"name" => "test101",

"hostname" => "test101",

"version" => "6.5.3"

},

"tags" => [

[0] "beats_input_codec_plain_applied"

],

"source" => "/var/lib/mysql/test101-slow.log",

"offset" => 315

}

{

"message" => "# Query_time: 13.405630 Lock_time: 0.000271 Rows_sent: 1 Rows_examined: 19999999",

"@timestamp" => 2018-12-18T00:58:33.398Z,

"prospector" => {

"type" => "log"

},

"input" => {

"type" => "log"

},

"@version" => "1",

"host" => {

"name" => "test101",

"architecture" => "x86_64",

"os" => {

"codename" => "Core",

"platform" => "centos",

"version" => "7 (Core)",

"family" => "redhat"

},

"containerized" => true,

"id" => "9fcb0c7f28e449aabad922d1ba1f0afc"

},

"beat" => {

"name" => "test101",

"hostname" => "test101",

"version" => "6.5.3"

},

"tags" => [

[0] "beats_input_codec_plain_applied"

],

"source" => "/var/lib/mysql/test101-slow.log",

"offset" => 359

}

{

"message" => "SET timestamp=1545094710;",

"@timestamp" => 2018-12-18T00:58:33.398Z,

"prospector" => {

"type" => "log"

},

"input" => {

"type" => "log"

},

"@version" => "1",

"host" => {

"containerized" => true,

"name" => "test101",

"architecture" => "x86_64",

"os" => {

"codename" => "Core",

"platform" => "centos",

"version" => "7 (Core)",

"family" => "redhat"

},

"id" => "9fcb0c7f28e449aabad922d1ba1f0afc"

},

"beat" => {

"name" => "test101",

"hostname" => "test101",

"version" => "6.5.3"

},

"tags" => [

[0] "beats_input_codec_plain_applied"

],

"source" => "/var/lib/mysql/test101-slow.log",

"offset" => 443

}

{

"message" => "select * from table1 where id=358;",

"@timestamp" => 2018-12-18T00:58:33.398Z,

"prospector" => {

"type" => "log"

},

"input" => {

"type" => "log"

},

"@version" => "1",

"host" => {

"architecture" => "x86_64",

"os" => {

"codename" => "Core",

"platform" => "centos",

"version" => "7 (Core)",

"family" => "redhat"

},

"name" => "test101",

"containerized" => true,

"id" => "9fcb0c7f28e449aabad922d1ba1f0afc"

},

"beat" => {

"name" => "test101",

"version" => "6.5.3",

"hostname" => "test101"

},

"tags" => [

[0] "beats_input_codec_plain_applied"

],

"source" => "/var/lib/mysql/test101-slow.log",

"offset" => 469

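}

3. Optimize log collection

Because one slow-log entry is split into six messages, it is hard to analyze; the six messages collected above should be merged into a single event.

3.1 Modify the filebeat configuration to merge the six messages into one event

Edit the filebeat configuration file, add the following three lines after the log path, and then restart filebeat: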

  paths:
    - /var/lib/mysql/test101-slow.log
    #- c:\programdata\elasticsearch\logs\*

  # add the following three lines
  multiline.pattern: "^# User@Host:"
  multiline.negate: true
  multiline.match: after

Explanation:

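multiline.pattern: a regular expression that identifies the line an event is built around; here it matches lines beginning with "# User@Host:".

multiline.negate: true or false, default false. With false, consecutive lines that match multiline.pattern are merged with a line that does not match; with true it is the other way round: consecutive lines that do NOT match the pattern are merged with a line that does match.

multiline.match: after or before. after appends the merged lines to the preceding line, before prepends them to the following line. So with negate: true and match: after, every line that does not start with "# User@Host:" is appended to the most recent "# User@Host:" line.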
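The merge rule can be modeled in a few lines. The Python sketch below is only an illustration, not Filebeat code: `merge_multiline` and `SAMPLE_LINES` are hypothetical names, and Filebeat's flush timeout (`multiline.timeout`) and size limits are deliberately ignored. It applies negate: true / match: after with the pattern "^# User@Host:" to a slow-log entry like the ones above.

```python
import re

# Hypothetical sample: one MariaDB 5.5 slow-log entry, one line per list item.
SAMPLE_LINES = [
    "# Time: 181218 9:17:42",
    "# User@Host: root[root] @ localhost []",
    "# Thread_id: 3 Schema: test101 QC_hit: No",
    "# Query_time: 12.541603 Lock_time: 0.000493 Rows_sent: 1 Rows_examined: 19999999",
    "SET timestamp=1545095862;",
    "select * from table1 where id=368;",
]


def merge_multiline(lines, pattern=r"^# User@Host:"):
    """Group lines like Filebeat's multiline with negate: true, match: after.

    A line matching `pattern` starts a new event; each following line that
    does not match is appended to it. Lines seen before the first match form
    an event of their own. The flush timeout is not modeled.
    """
    regex = re.compile(pattern)
    events, current = [], []
    for line in lines:
        if regex.search(line) and current:
            events.append("\n".join(current))  # flush the previous event
            current = []
        current.append(line)
    if current:
        events.append("\n".join(current))
    return events


if __name__ == "__main__":
    for event in merge_multiline(SAMPLE_LINES):
        print(repr(event))
```

On one entry this yields two events: the "# Time:" line on its own, and the remaining five lines joined with \n, which is the shape seen in the logstash output of section 3.2. With two entries back to back (`merge_multiline(SAMPLE_LINES * 2)`), the second entry's "# Time:" line does not match the pattern, so in this model it ends up at the tail of the first event; in Filebeat that would happen when the next entry is written before the multiline timeout flushes the previous one, and the unanchored "# Time: " grok in section 4.1 would then also match that merged event.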

3.2 Check the collected events after the change

1) Run another query in MySQL:

MariaDB [test101]> select * from table1 where id=368;

+-----+

| id |

+-----+

| 368 |

+-----+

1 row in set (12.54 sec)

MariaDB [test101]>

2) Check the slow query log; the entry has been written:

[root@test101 mysql]# tailf test101-slow.log

# Time: 181218 9:17:42

# User@Host: root[root] @ localhost []

# Thread_id: 3 Schema: test101 QC_hit: No

# Query_time: 12.541603 Lock_time: 0.000493 Rows_sent: 1 Rows_examined: 19999999

SET timestamp=1545095862;

select * from table1 where id=368;

3) In logstash's live output, the collected entry is now a single event:

[root@test103 logstash]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logstash-syslog.conf

....... # some startup messages omitted

{

"message" => "# User@Host: root[root] @ localhost []\n# Thread_id: 3 Schema: test101 QC_hit: No\n# Query_time: 12.541603 Lock_time: 0.000493 Rows_sent: 1 Rows_examined: 19999999\nSET timestamp=1545095862;\nselect * from table1 where id=368;",

# This is the slow-log entry, now merged into one event. The "# Time: 181218 9:17:42" line of the slow log arrives as a separate event, which is not shown here.

"prospector" => {

"type" => "log"

},

"input" => {

"type" => "log"

},

"host" => {

"architecture" => "x86_64",

"os" => {

"codename" => "Core",

"platform" => "centos",

"version" => "7 (Core)",

"family" => "redhat"

},

"containerized" => true,

"name" => "test101",

"id" => "9fcb0c7f28e449aabad922d1ba1f0afc"

},

"log" => {

"flags" => [

[0] "multiline"

]

},

"@timestamp" => 2018-12-18T01:17:45.336Z,

"@version" => "1",

"beat" => {

"name" => "test101",

"hostname" => "test101",

"version" => "6.5.3"

},

"tags" => [

[0] "beats_input_codec_plain_applied"

],

"source" => "/var/lib/mysql/test101-slow.log",

"offset" => 504

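}

4. Configure the grok plugin in logstash to parse the logs

Once the messages have been merged as in the previous step, the grok plugin is used to parse each message into JSON fields.

4.1 Edit logstash-syslog.conf to configure the grok plugin

Edit logstash-syslog.conf and add the whole filter block between input and output, as follows: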
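Before loading the filter into Logstash, it can help to see what the first grok pattern extracts. The sketch below is a rough Python translation, not Logstash itself: `%{USER}`, `%{NUMBER}` and `%{WORD}` are replaced by simplified regexes, `%{IP}` is reduced to IPv4, and Ruby's `(?m)` (dot also matches newline) becomes Python's `re.DOTALL`. `MARIADB55`, `parse_entry` and `SAMPLE_MESSAGE` are hypothetical names; the sample is the merged message from section 3.2.

```python
import re

# Simplified stand-ins for the grok patterns referenced in the filter.
USER = r"[a-zA-Z0-9._-]+"                 # %{USER}
NUMBER = r"[+-]?(?:\d+(?:\.\d+)?|\.\d+)"  # %{NUMBER}
WORD = r"\b\w+\b"                         # %{WORD}
IPV4 = r"(?:\d{1,3}\.){3}\d{1,3}"         # %{IP} also accepts IPv6; omitted here

MARIADB55 = re.compile(
    rf"^# User@Host: (?P<query_user>{USER})\[[^\]]+\] @ "
    rf"(?:(?P<query_host>\S*) )?\[(?:(?P<query_ip>{IPV4}))?\]\s"
    rf"# Thread_id:\s+(?P<thread_id>{NUMBER})\s+Schema: (?P<schema>{USER})\s+"
    rf"QC_hit: (?P<QC_hit>{WORD})\s*"
    rf"# Query_time: (?P<query_time>{NUMBER})\s+Lock_time: (?P<lock_time>{NUMBER})\s+"
    rf"Rows_sent: (?P<rows_sent>{NUMBER})\s+Rows_examined: (?P<rows_examined>{NUMBER})\s*"
    rf"(?:use (?P<database>.*?);\s*)?SET timestamp=(?P<timestamp>{NUMBER});\s*"
    rf"(?P<query>(?P<action>\w+)\s+.*)",
    re.DOTALL,  # like Ruby's (?m): "." also matches newlines
)

INT_FIELDS = ("thread_id", "rows_sent", "rows_examined")  # ":int" in the grok pattern
FLOAT_FIELDS = ("query_time", "lock_time")                # ":float" in the grok pattern

SAMPLE_MESSAGE = (
    "# User@Host: root[root] @ localhost []\n"
    "# Thread_id: 3 Schema: test101 QC_hit: No\n"
    "# Query_time: 12.541603 Lock_time: 0.000493 Rows_sent: 1 Rows_examined: 19999999\n"
    "SET timestamp=1545095862;\n"
    "select * from table1 where id=368;"
)


def parse_entry(message):
    """Return the captured fields, or None when the pattern does not match
    (grok would tag the event with _grokparsefailure instead)."""
    match = MARIADB55.search(message)
    if match is None:
        return None
    fields = {k: v for k, v in match.groupdict().items() if v is not None}
    for key in INT_FIELDS:
        fields[key] = int(fields[key])
    for key in FLOAT_FIELDS:
        fields[key] = float(fields[key])
    return fields  # "timestamp" stays a string, as in the logstash output


if __name__ == "__main__":
    print(parse_entry(SAMPLE_MESSAGE))
```

Optional captures that did not participate (query_ip, database) are left out of the result, which mirrors the logstash output in section 4.2, where neither field appears.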

[root@test103 conf.d]# cat logstash-syslog.conf

# Sample Logstash configuration for creating a simple

# Beats -> Logstash -> Elasticsearch pipeline.

input {

beats {

port => 5044

}

}

filter {

# parse the message into JSON fields

grok {

match => [ "message", "(?m)^# User@Host: %{USER:query_user}\[[^\]]+\] @ (?:(?<query_host>\S*) )?\[(?:%{IP:query_ip})?\]\s# Thread_id:\s+%{NUMBER:thread_id:int}\s+Schema: %{USER:schema}\s+QC_hit: %{WORD:QC_hit}\s*# Query_time: %{NUMBER:query_time:float}\s+Lock_time: %{NUMBER:lock_time:float}\s+Rows_sent: %{NUMBER:rows_sent:int}\s+Rows_examined: %{NUMBER:rows_examined:int}\s*(?:use %{DATA:database};\s*)?SET timestamp=%{NUMBER:timestamp};\s*(?<query>(?<action>\w+)\s+.*)" ]

}

# add a "drop" tag to the time line of the slow log (e.g. # Time: 181218 9:17:42)

grok {

match => { "message" => "# Time: " }

add_tag => [ "drop" ]

tag_on_failure => []

}

# drop events tagged "drop", i.e. remove lines such as "# Time: 181218 9:17:42" from the slow log

if "drop" in [tags] {

drop {}

}

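# time conversion: set @timestamp from the Unix time in "SET timestamp=...",

# which the grok pattern above captures into the field "timestamp"

date {

match => ["timestamp", "UNIX"]

target => "@timestamp"

timezone => "Asia/Shanghai"

}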

ruby {

code => "event.set('[@metadata][today]', Time.at(event.get('@timestamp').to_i).localtime.strftime('%Y.%m.%d'))"

}

# remove the message field

mutate {

remove_field => [ "message" ]

}

}

output {

elasticsearch {

hosts => ["http://10.0.0.102:9200"]

#index => "%{tags[0]}"

index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"

#user => "elastic"

#password => "changeme"

}

stdout {

codec => rubydebug

}

}

4.2 Check the parsed events

1) Clear the old Elasticsearch indices so the index is clean; afterwards only .kibana_1 is listed:

[root@test102 filebeat]# curl 10.0.0.102:9200/_cat/indices

green open .kibana_1 udOUvbprSnKWUJISwD0r_g 1 0 8 0 74.4kb 74.4kb

[root@test102 filebeat]#

2) Restart filebeat, start logstash in the foreground again, and run a query in MariaDB:

MariaDB [test101]> select * from table1 where id=588;

+-----+

| id |

+-----+

| 588 |

+-----+

1 row in set (13.00 sec)

MariaDB [test101]>

3) The slow query log has a new entry:

[root@test101 mysql]# tailf test101-slow.log

# Time: 181218 14:39:38

# User@Host: root[root] @ localhost []

# Thread_id: 4 Schema: test101 QC_hit: No

# Query_time: 12.999487 Lock_time: 0.000303 Rows_sent: 1 Rows_examined: 19999999

SET timestamp=1545115178;

select * from table1 where id=588;

4) The live logstash output is now in JSON format:

[INFO ] 2018-12-18 14:25:02.802 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

{

"QC_hit" => "No",

"rows_sent" => 1,

"action" => "select",

"input" => {

"type" => "log"

},

"offset" => 1032,

"@version" => "1",

"query_host" => "localhost",

"beat" => {

"name" => "test101",

"hostname" => "test101",

"version" => "6.5.3"

},

"query" => "select * from table1 where id=428;",

"@timestamp" => 2018-12-18T06:25:34.017Z,

"prospector" => {

"type" => "log"

},

"lock_time" => 0.000775,

"query_user" => "root",

"log" => {

"flags" => [

[0] "multiline"

]

},

"schema" => "test101",

"timestamp" => "1545114330",

"thread_id" => 4,

"query_time" => 14.155133,

"tags" => [

[0] "beats_input_codec_plain_applied"

],

"host" => {

"name" => "test101",

"id" => "9fcb0c7f28e449aabad922d1ba1f0afc",

"containerized" => true,

"os" => {

"codename" => "Core",

"family" => "redhat",

"version" => "7 (Core)",

"platform" => "centos"

},

"architecture" => "x86_64"

},

"rows_examined" => 19999999,

"source" => "/var/lib/mysql/test101-slow.log"

}

{

"QC_hit" => "No",

"rows_sent" => 1,

"action" => "select",

"input" => {

"type" => "log"

},

"offset" => 1284,

"@version" => "1",

"query_host" => "localhost",

"beat" => {

"name" => "test101",

"hostname" => "test101",

"version" => "6.5.3"

},

"query" => "select * from table1 where id=588;",

"@timestamp" => 2018-12-18T06:39:44.082Z,

"prospector" => {

"type" => "log"

},

"lock_time" => 0.000303,

"query_user" => "root",

"log" => {

"flags" => [

[0] "multiline"

]

},

"schema" => "test101",

"timestamp" => "1545115178",

"thread_id" => 4,

"query_time" => 12.999487,

"tags" => [

[0] "beats_input_codec_plain_applied"

],

"host" => {

"name" => "test101",

"id" => "9fcb0c7f28e449aabad922d1ba1f0afc",

"containerized" => true,

"os" => {

"codename" => "Core",

"family" => "redhat",

"version" => "7 (Core)",

"platform" => "centos"

},

"architecture" => "x86_64"

},

"rows_examined" => 19999999,

"source" => "/var/lib/mysql/test101-slow.log"

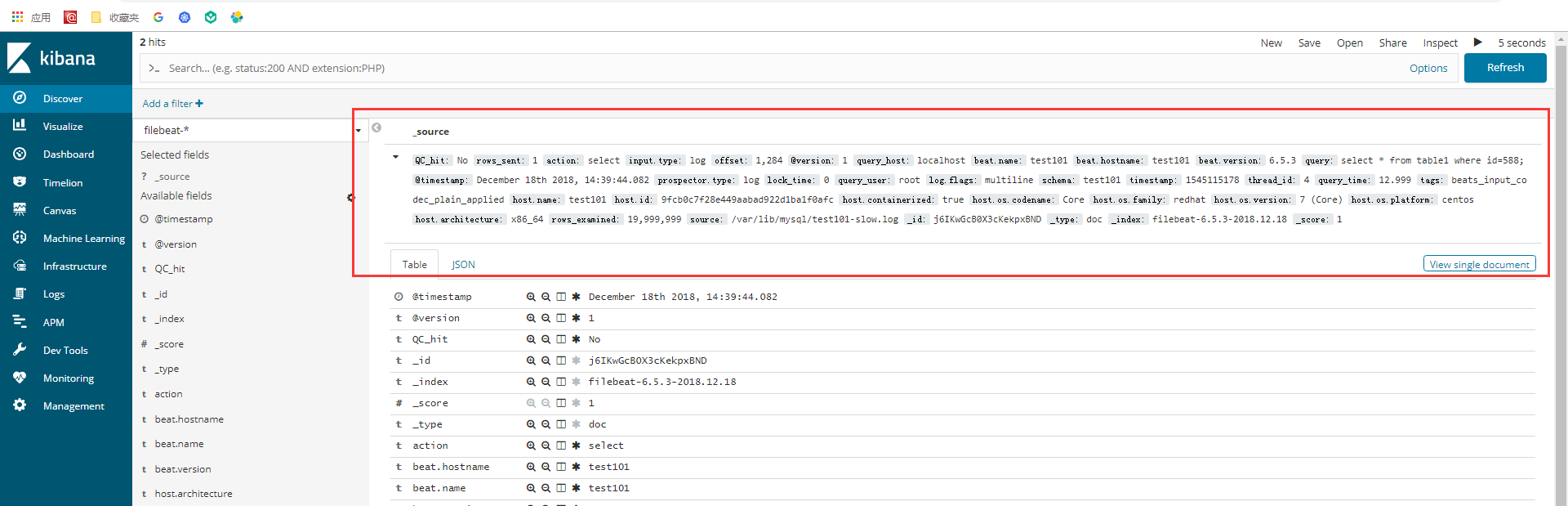

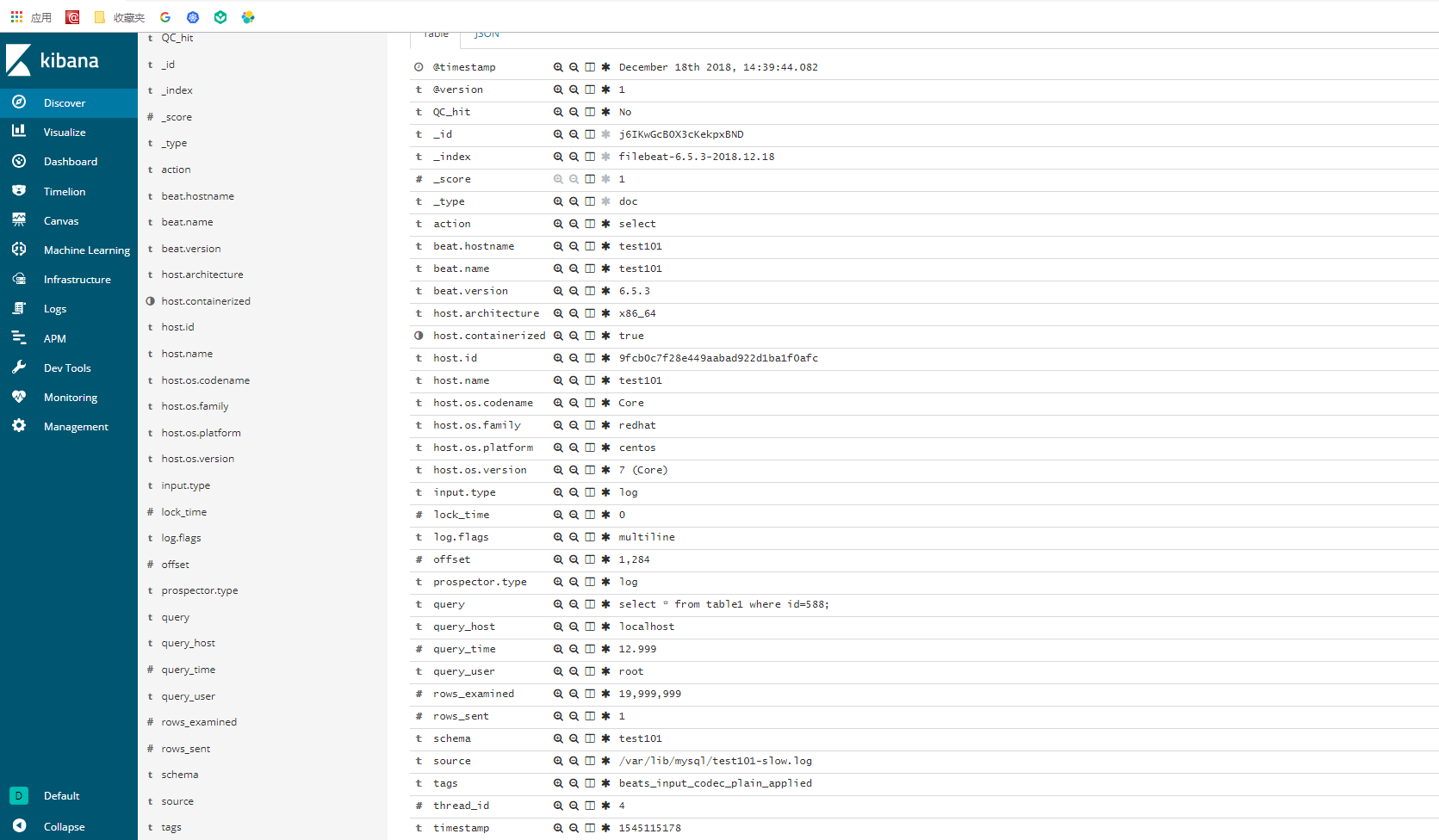

}再打開kibana界面,建立索引,然後查看kibana的界面,日誌OK:
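When creating the index pattern in Kibana (for example filebeat-6.5.3-*), it helps to know where @timestamp and the daily index names come from. A minimal sketch, under these assumptions: the date filter parses the grok field `timestamp` with the UNIX format, [@metadata][beat] is "filebeat", and Logstash's %{+YYYY.MM.dd} formats @timestamp in UTC. `event_time`, `index_name` and `UTC_PLUS_8` are hypothetical names; `UTC_PLUS_8` is a fixed-offset stand-in for Asia/Shanghai.

```python
from datetime import datetime, timedelta, timezone

UTC_PLUS_8 = timezone(timedelta(hours=8))  # fixed-offset stand-in for Asia/Shanghai


def event_time(set_timestamp):
    """Unix seconds from "SET timestamp=..." -> aware UTC datetime (@timestamp)."""
    return datetime.fromtimestamp(int(set_timestamp), tz=timezone.utc)


def index_name(beat, version, ts):
    """Mimic "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}",
    whose date part comes from @timestamp in UTC."""
    return f"{beat}-{version}-{ts.astimezone(timezone.utc):%Y.%m.%d}"


if __name__ == "__main__":
    ts = event_time("1545115178")  # the entry from section 4.2
    print(ts.isoformat())                                 # 2018-12-18T06:39:38+00:00
    print(ts.astimezone(UTC_PLUS_8).strftime("%H:%M:%S"))  # 14:39:38, as in "# Time:"
    print(index_name("filebeat", "6.5.3", ts))            # filebeat-6.5.3-2018.12.18

    # A query logged at 07:00 local time on 18 Dec still lands in the 17 Dec index.
    early = datetime(2018, 12, 18, 7, 0, tzinfo=UTC_PLUS_8)
    print(index_name("filebeat", "6.5.3", early))         # filebeat-6.5.3-2018.12.17
```

The ruby filter in section 4.1 computes a local-time date into [@metadata][today], but the output's index setting does not use it, so indices are still split on UTC days.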

Slow logs and grok patterns for other MySQL versions

1. MySQL 5.7.20

MySQL 5.7.20 slow log:

# Time: 2018-12-18T08:43:24.828892Z

# User@Host: root[root] @ localhost [] Id: 7

# Query_time: 15.819314 Lock_time: 0.000174 Rows_sent: 1 Rows_examined: 19999999

SET timestamp=1545122604;

select * from table1 where id=258;

grok pattern:

match => [ "message", "(?m)^# User@Host: %{USER:query_user}\[[^\]]+\] @ (?:(?<query_host>\S*) )?\[(?:%{IP:query_ip})?\]\s+Id:\s+%{NUMBER:id:int}\s# Query_time: %{NUMBER:query_time:float}\s+Lock_time: %{NUMBER:lock_time:float}\s+Rows_sent: %{NUMBER:rows_sent:int}\s+Rows_examined: %{NUMBER:rows_examined:int}\s*(?:use %{DATA:database};\s*)?SET timestamp=%{NUMBER:timestamp};\s*(?<query>(?<action>\w+)\s+.*)" ]

logstash output:

{

"rows_examined" => 19999999,

"input" => {

"type" => "log"

},

"tags" => [

[0] "beats_input_codec_plain_applied"

],

"query_user" => "root",

"query_time" => 15.461892,

"query_host" => "localhost",

"@version" => "1",

"query" => "select * from table1 where id=258;",

"host" => {

"containerized" => true,

"os" => {

"platform" => "centos",

"version" => "7 (Core)",

"family" => "redhat",

"codename" => "Core"

},

"id" => "9fcb0c7f28e449aabad922d1ba1f0afc",

"name" => "test101",

"architecture" => "x86_64"

},

"source" => "/home/data/test101-mysql5.7.20-slow.log",

"action" => "select",

"lock_time" => 0.000181,

"prospector" => {

"type" => "log"

},

"offset" => 1416,

"log" => {

"flags" => [

[0] "multiline"

]

},

"rows_sent" => 1,

"timestamp" => "1545122934",

"beat" => {

"hostname" => "test101",

"version" => "6.5.3",

"name" => "test101"

},

"@timestamp" => 2018-12-18T08:48:58.531Z,

"id" => 7

}

2. MariaDB 10.4

MariaDB 10.4 enables the slow query log differently from 5.5.60: the settings go under the [mysqld] section of /etc/my.cnf.d/server.cnf rather than into /etc/my.cnf:

[root@test101 filebeat]# cat /etc/my.cnf.d/server.cnf

...... # some lines omitted

[mysqld]

slow_query_log

long_query_time = 2

slow_query_log_file = "/var/lib/mysql/test101-slow.log"

...... # some lines omitted

MariaDB 10.4 slow log:

# Time: 181219 13:01:17

# User@Host: root[root] @ localhost []

# Thread_id: 8 Schema: test101 QC_hit: No

# Query_time: 18.711204 Lock_time: 0.000443 Rows_sent: 1 Rows_examined: 19999999

# Rows_affected: 0 Bytes_sent: 80   # compared with 5.5.60, this version adds the Rows_affected and Bytes_sent fields

SET timestamp=1545195677;

select * from test101.table1 where id=888;

grok pattern:

match => [ "message", "(?m)^# User@Host: %{USER:query_user}\[[^\]]+\] @ (?:(?<query_host>\S*) )?\[(?:%{IP:query_ip})?\]\s# Thread_id:\s+%{NUMBER:thread_id:int}\s+Schema: %{USER:schema}\s+QC_hit: %{WORD:QC_hit}\s*# Query_time: %{NUMBER:query_time:float}\s+Lock_time: %{NUMBER:lock_time:float}\s+Rows_sent: %{NUMBER:rows_sent:int}\s+Rows_examined: %{NUMBER:rows_examined:int}\s*# Rows_affected: %{NUMBER:rows_affected:int}\s+Bytes_sent: %{NUMBER:bytes_sent:int}\s*(?:use %{DATA:database};\s*)?SET timestamp=%{NUMBER:timestamp};\s*(?<query>(?<action>\w+)\s+.*)" ]

logstash output:

{

"source" => "/var/lib/mysql/test101-slow.log",

"@version" => "1",

"query_time" => 18.711204,

"offset" => 319,

"query_user" => "root",

"prospector" => {

"type" => "log"

},

"schema" => "test101",

"query_host" => "localhost",

"tags" => [

[0] "beats_input_codec_plain_applied"

],

"action" => "select",

"bytes_sent" => 80,

"host" => {

"id" => "9fcb0c7f28e449aabad922d1ba1f0afc",

"architecture" => "x86_64",

"os" => {

"family" => "redhat",

"codename" => "Core",

"platform" => "centos",

"version" => "7 (Core)"

},

"name" => "test101",

"containerized" => true

},

"rows_examined" => 19999999,

"@timestamp" => 2018-12-19T05:01:23.310Z,

"rows_sent" => 1,

"query" => "select * from test101.table1 where id=888;",

"log" => {

"flags" => [

[0] "multiline"

]

},

"thread_id" => 8,

"beat" => {

"hostname" => "test101",

"name" => "test101",

"version" => "6.5.3"

},

"QC_hit" => "No",

"input" => {

"type" => "log"

},

"rows_affected" => 0,

"lock_time" => 0.000443,

"timestamp" => "1545195677"

}
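The three grok patterns share the same core and differ only in the header lines: MariaDB writes a "# Thread_id:" line, MariaDB 10.4 adds "# Rows_affected:", and MySQL 5.7 puts "Id:" on the "# User@Host:" line. If one logstash instance receives slow logs from more than one of these versions, grok's match option accepts a list of patterns for the same field and, with break_on_match at its default of true, keeps the first one that matches. The Python sketch below only illustrates that first-match-wins lookup with simplified regexes; `PATTERNS`, `parse` and the sample constants are hypothetical, IP capture and type conversion are left out, and it is not a drop-in Logstash configuration.

```python
import re

NUM = r"[+-]?(?:\d+(?:\.\d+)?|\.\d+)"  # simplified %{NUMBER}
USER = r"[a-zA-Z0-9._-]+"              # %{USER}

# Building blocks shared by the three grok patterns above (IP capture omitted).
HOST = rf"^# User@Host: (?P<query_user>{USER})\[[^\]]+\] @ (?:(?P<query_host>\S*) )?\[[^\]]*\]"
THREAD = (rf"\s# Thread_id:\s+(?P<thread_id>{NUM})\s+Schema: (?P<schema>{USER})\s+"
          rf"QC_hit: (?P<QC_hit>\w+)\s*")
MYSQL_ID = rf"\s+Id:\s+(?P<id>{NUM})\s"
TIMES = (rf"# Query_time: (?P<query_time>{NUM})\s+Lock_time: (?P<lock_time>{NUM})\s+"
         rf"Rows_sent: (?P<rows_sent>{NUM})\s+Rows_examined: (?P<rows_examined>{NUM})\s*")
AFFECTED = rf"# Rows_affected: (?P<rows_affected>{NUM})\s+Bytes_sent: (?P<bytes_sent>{NUM})\s*"
TAIL = (rf"(?:use (?P<database>.*?);\s*)?SET timestamp=(?P<timestamp>{NUM});\s*"
        rf"(?P<query>(?P<action>\w+)\s+.*)")

# Tried in order; the first pattern that matches wins.
PATTERNS = [
    ("mariadb-10.4", re.compile(HOST + THREAD + TIMES + AFFECTED + TAIL, re.DOTALL)),
    ("mariadb-5.5", re.compile(HOST + THREAD + TIMES + TAIL, re.DOTALL)),
    ("mysql-5.7", re.compile(HOST + MYSQL_ID + TIMES + TAIL, re.DOTALL)),
]


def parse(message):
    """Return (pattern name, captured fields as strings), or (None, {})."""
    for name, regex in PATTERNS:
        match = regex.search(message)
        if match:
            return name, {k: v for k, v in match.groupdict().items() if v is not None}
    return None, {}


MARIADB55_ENTRY = (
    "# User@Host: root[root] @ localhost []\n"
    "# Thread_id: 3 Schema: test101 QC_hit: No\n"
    "# Query_time: 12.541603 Lock_time: 0.000493 Rows_sent: 1 Rows_examined: 19999999\n"
    "SET timestamp=1545095862;\n"
    "select * from table1 where id=368;"
)
MYSQL57_ENTRY = (
    "# User@Host: root[root] @ localhost [] Id: 7\n"
    "# Query_time: 15.819314 Lock_time: 0.000174 Rows_sent: 1 Rows_examined: 19999999\n"
    "SET timestamp=1545122604;\n"
    "select * from table1 where id=258;"
)
MARIADB104_ENTRY = (
    "# User@Host: root[root] @ localhost []\n"
    "# Thread_id: 8 Schema: test101 QC_hit: No\n"
    "# Query_time: 18.711204 Lock_time: 0.000443 Rows_sent: 1 Rows_examined: 19999999\n"
    "# Rows_affected: 0 Bytes_sent: 80\n"
    "SET timestamp=1545195677;\n"
    "select * from test101.table1 where id=888;"
)

if __name__ == "__main__":
    for entry in (MARIADB55_ENTRY, MYSQL57_ENTRY, MARIADB104_ENTRY):
        name, fields = parse(entry)
        print(name, fields.get("thread_id", fields.get("id")), fields["query"])
```

In Logstash terms the idea would be `match => { "message" => [ "<10.4 pattern>", "<5.5 pattern>", "<5.7 pattern>" ] }`, where the placeholders stand for the full pattern strings given above.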