Note: the Docker images and YAML files used in this document can be downloaded here.

Link: https://pan.baidu.com/s/1QuVelCG43_VbHiOs04R3-Q Password: 70q2

1. Environment

1.1 Server information

| Hostname | IP address | OS version | Role |
| --- | --- | --- | --- |
| k8s01 | 172.16.50.131 | CentOS Linux release 7.4.1708 (Core) | master |
| k8s02 | 172.16.50.132 | CentOS Linux release 7.4.1708 (Core) | node |

1.2 Software versions

| Name | Version |
| --- | --- |
| kubernetes | v1.10.4 |
| docker | 1.13.1 |

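2. Master deployment

2.1 Server initialization

Install basic packages

    yum install vim net-tools git -y

Disable SELinux

Edit /etc/sysconfig/selinux and set SELINUX=disabled; the setting takes effect after the reboot below.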
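The manual edit above can also be scripted. The following is a minimal sketch, assuming GNU sed; `disable_selinux` is a hypothetical helper name. On CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config, and a plain `sed -i` would replace the link with a regular file, so the sketch passes `--follow-symlinks`.

```shell
# Set SELINUX=disabled in the given config file (hypothetical helper).
disable_selinux() {
    # --follow-symlinks (GNU sed) edits the link target instead of
    # replacing the symlink itself with a new regular file.
    sed -i --follow-symlinks 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# On a real host, as root:
#   disable_selinux /etc/sysconfig/selinux
```

The pattern only matches the line that starts with `SELINUX=`, so `SELINUXTYPE=` and comment lines are left alone.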

Disable the firewalld firewall

    systemctl disable firewalld && systemctl stop firewalld

Update the server, then reboot

    yum upgrade -y && reboot

Adjust kernel parameters

Create the file /etc/sysctl.d/k8s.conf with the following content:

    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1

Apply the settings:

    sysctl -p /etc/sysctl.d/k8s.conf

If you see these errors:

    sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory
    sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

load the br_netfilter module and run the sysctl command again:

    modprobe br_netfilter
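The kernel-parameter step above can be sketched as two small functions. Both names (`write_k8s_sysctl`, `bridge_keys_present`) are hypothetical helpers, and the directory argument exists only so the check can be tried against a scratch directory.

```shell
# Write the two bridge-netfilter settings to the given file.
write_k8s_sysctl() {
    cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Succeed if the bridge keys exist. They appear under /proc/sys/net/bridge
# only after the br_netfilter module has been loaded.
bridge_keys_present() {
    [ -e "${1:-/proc/sys/net/bridge}/bridge-nf-call-iptables" ]
}

# On a real host, as root, one possible order:
#   bridge_keys_present || modprobe br_netfilter
#   write_k8s_sysctl /etc/sysctl.d/k8s.conf
#   sysctl -p /etc/sysctl.d/k8s.conf
```

Loading the module first avoids the "cannot stat" errors altogether. Note that `modprobe` does not persist across reboots by itself.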

2.2 Installing kubeadm, kubectl, kubelet and docker

2.2.1 Add the yum repository

Create the file /etc/yum.repos.d/k8s.repo:

    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

2.2.2 Install

    yum install kubeadm kubectl kubelet docker -y
    systemctl enable docker && systemctl start docker
    systemctl enable kubelet

2.3 Import the docker images

Image archive list

    [root@k8s01 images]# ll
    total 1050028
    -rw-r--r-- 1 root root 193461760 Jun 14 11:15 etcd-amd64_3.1.12.tar
    -rw-r--r-- 1 root root  45306368 Jun 14 11:15 flannel_v0.10.0-amd64.tar
    -rw-r--r-- 1 root root  75337216 Jun 14 11:15 heapster-amd64_v1.5.3.tar
    -rw-r--r-- 1 root root  41239040 Jun 14 11:15 k8s-dns-dnsmasq-nanny-amd64_1.14.8.tar
    -rw-r--r-- 1 root root  50727424 Jun 14 11:15 k8s-dns-kube-dns-amd64_1.14.8.tar
    -rw-r--r-- 1 root root  42481152 Jun 14 11:15 k8s-dns-sidecar-amd64_1.14.8.tar
    -rw-r--r-- 1 root root 225355776 Jun 14 11:16 kube-apiserver-amd64_v1.10.4.tar
    -rw-r--r-- 1 root root 148135424 Jun 14 11:16 kube-controller-manager-amd64_v1.10.4.tar
    -rw-r--r-- 1 root root  98951168 Jun 14 11:16 kube-proxy-amd64_v1.10.4.tar
    -rw-r--r-- 1 root root 102800384 Jun 14 11:16 kubernetes-dashboard-amd64_v1.8.3.tar
    -rw-r--r-- 1 root root  50658304 Jun 14 11:16 kube-scheduler-amd64_v1.10.4.tar
    -rw-r--r-- 1 root root    754176 Jun 14 11:16 pause-amd64_3.1.tar

Import (run inside the directory that holds the archives)

    for j in ./*.tar; do docker load --input "$j"; done
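The import loop above keeps going even if one archive fails to load. A slightly more defensive variant might look like the sketch below; `load_images` and the `DOCKER` override variable are hypothetical names, the latter added only so the loop can be dry-run with `echo`.

```shell
# Load every *.tar archive in a directory, stopping at the first failure.
load_images() {
    dir=${1:-.}
    for archive in "$dir"/*.tar; do
        # An unmatched glob stays literal; skip it instead of calling docker.
        [ -e "$archive" ] || continue
        "${DOCKER:-docker}" load --input "$archive" || {
            echo "failed to load $archive" >&2
            return 1
        }
    done
}

# Dry run:   DOCKER=echo load_images /path/to/images
# Real run:  load_images /path/to/images
```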

List the images

    docker images
    REPOSITORY                                 TAG             IMAGE ID       CREATED        SIZE
    k8s.gcr.io/kube-proxy-amd64                v1.10.4         3f9ff47d0fca   7 days ago     97.1 MB
    k8s.gcr.io/kube-controller-manager-amd64   v1.10.4         1a24f5586598   7 days ago     148 MB
    k8s.gcr.io/kube-apiserver-amd64            v1.10.4         afdd56622af3   7 days ago     225 MB
    k8s.gcr.io/kube-scheduler-amd64            v1.10.4         6fffbea311f0   7 days ago     50.4 MB
    k8s.gcr.io/heapster-amd64                  v1.5.3          f57c75cd7b0a   6 weeks ago    75.3 MB
    k8s.gcr.io/etcd-amd64                      3.1.12          52920ad46f5b   3 months ago   193 MB
    k8s.gcr.io/kubernetes-dashboard-amd64      v1.8.3          0c60bcf89900   4 months ago   102 MB
    quay.io/coreos/flannel                     v0.10.0-amd64   f0fad859c909   4 months ago   44.6 MB
    k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64     1.14.8          c2ce1ffb51ed   5 months ago   41 MB
    k8s.gcr.io/k8s-dns-sidecar-amd64           1.14.8          6f7f2dc7fab5   5 months ago   42.2 MB
    k8s.gcr.io/k8s-dns-kube-dns-amd64          1.14.8          80cc5ea4b547   5 months ago   50.5 MB
    k8s.gcr.io/pause-amd64                     3.1             da86e6ba6ca1   5 months ago   742 kB

2.4 Generate certificates

Clone the scripts from GitHub

    git clone https://github.com/fandaye/k8s-tls.git && cd k8s-tls/

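Edit apiserver.json. In the hosts list, 172.16.50.131 is the local IP address and k8s01 is the local hostname; JSON has no comment syntax, so do not leave annotations in the file.

    {
        "CN": "kube-apiserver",
        "hosts": [
            "172.16.50.131",
            "10.96.0.1",
            "k8s01",
            "kubernetes",
            "kubernetes.default",
            "kubernetes.default.svc",
            "kubernetes.default.svc.cluster",
            "kubernetes.default.svc.cluster.local"
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        }
    }

Run the script

    ./run.sh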
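If several masters or test machines need their own apiserver.json, the file can be rendered from the IP and hostname instead of being edited by hand. This is a sketch only: `render_apiserver_csr` is a hypothetical helper and is not part of the k8s-tls repository. The remaining entries are copied from the file shown above.

```shell
# Print an apiserver CSR definition for the given IP ($1) and hostname ($2).
render_apiserver_csr() {
    cat <<EOF
{
    "CN": "kube-apiserver",
    "hosts": [
        "$1",
        "10.96.0.1",
        "$2",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    }
}
EOF
}

# Example: render_apiserver_csr 172.16.50.131 k8s01 > apiserver.json
```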

Change to the /etc/kubernetes/pki directory

Edit kubelet.json. The k8s01 part of the CN is the hostname.

    {
        "CN": "system:node:k8s01",
        "hosts": [""],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "O": "system:nodes"
            }
        ]
    }

Edit node.sh and set the IP address and node name

    ip="172.16.50.131"
    NODE="k8s01"

Run the script

    ./node.sh

Check the certificates

    -rw-r--r-- 1 root root 1436 Jun 19 15:04 apiserver.crt
    -rw------- 1 root root 1679 Jun 19 15:04 apiserver.key
    -rw-r--r-- 1 root root 1257 Jun 19 15:04 apiserver-kubelet-client.crt
    -rw------- 1 root root 1679 Jun 19 15:04 apiserver-kubelet-client.key
    -rw-r--r-- 1 root root 1143 Jun 19 15:04 ca.crt
    -rw------- 1 root root 1679 Jun 19 15:04 ca.key
    -rw-r--r-- 1 root root  289 Jun 19 15:04 config.json
    -rw-r--r-- 1 root root 1143 Jun 19 15:04 front-proxy-ca.crt
    -rw------- 1 root root 1679 Jun 19 15:04 front-proxy-ca.key
    -rw-r--r-- 1 root root 1208 Jun 19 15:04 front-proxy-client.crt
    -rw------- 1 root root 1679 Jun 19 15:04 front-proxy-client.key
    -rw-r--r-- 1 root root  114 Jun 19 15:04 kube-controller-manager.json
    -rw-r--r-- 1 root root  157 Jun 19 15:07 kubelet.json
    -rw-r--r-- 1 root root  141 Jun 19 15:04 kubernetes-admin.json
    -rw-r--r-- 1 root root  105 Jun 19 15:04 kube-scheduler.json
    -rwxr-xr-x 1 root root 3588 Jun 19 15:07 node.sh
    -rw-r--r-- 1 root root  887 Jun 19 15:04 sa.key
    -rw-r--r-- 1 root root  272 Jun 19 15:04 sa.pub

Check the configuration files

    # ll ../ | grep conf
    -rw------- 1 root root 5789 Jun 19 15:10 admin.conf
    -rw------- 1 root root 5849 Jun 19 15:10 controller-manager.conf
    -rw------- 1 root root 5821 Jun 19 15:10 kubelet.conf
    -rw------- 1 root root 5797 Jun 19 15:10 scheduler.conf

2.5 Initialize the master

    # kubeadm init --apiserver-advertise-address=172.16.50.131 --pod-network-cidr=10.244.0.0/16 --kubernetes-version=v1.10.4
    [init] Using Kubernetes version: v1.10.4
    [init] Using Authorization modes: [Node RBAC]
    [preflight] Running pre-flight checks.
    [WARNING FileExisting-crictl]: crictl not found in system path
    Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl
    [preflight] Starting the kubelet service
    [certificates] Using the existing ca certificate and key.
    [certificates] Using the existing apiserver certificate and key.
    [certificates] Using the existing apiserver-kubelet-client certificate and key.
    [certificates] Using the existing sa key.
    [certificates] Using the existing front-proxy-ca certificate and key.
    [certificates] Using the existing front-proxy-client certificate and key.
    [certificates] Generated etcd/ca certificate and key.
    [certificates] Generated etcd/server certificate and key.
    [certificates] etcd/server serving cert is signed for DNS names [localhost] and IPs [127.0.0.1]
    [certificates] Generated etcd/peer certificate and key.
    [certificates] etcd/peer serving cert is signed for DNS names [k8s01] and IPs [172.16.50.131]
    [certificates] Generated etcd/healthcheck-client certificate and key.
    [certificates] Generated apiserver-etcd-client certificate and key.
    [certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"
    [kubeconfig] Using existing up-to-date KubeConfig file: "/etc/kubernetes/admin.conf"
    [kubeconfig] Using existing up-to-date KubeConfig file: "/etc/kubernetes/kubelet.conf"
    [kubeconfig] Using existing up-to-date KubeConfig file: "/etc/kubernetes/controller-manager.conf"
    [kubeconfig] Using existing up-to-date KubeConfig file: "/etc/kubernetes/scheduler.conf"
    [controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"
    [controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"
    [controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"
    [etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"
    [init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".
    [init] This might take a minute or longer if the control plane images have to be pulled.
    [apiclient] All control plane components are healthy after 16.504170 seconds
    [uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [markmaster] Will mark node k8s01 as master by adding a label and a taint
    [markmaster] Master k8s01 tainted and labelled with key/value: node-role.kubernetes.io/master=""
    [bootstraptoken] Using token: z9is98.bjzc1t2kyzhx247v
    [bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    [bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
    [bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
    [addons] Applied essential addon: kube-dns
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes master has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of machines by running the following on each node
    as root:

      kubeadm join 172.16.50.131:6443 --token z9is98.bjzc1t2kyzhx247v --discovery-token-ca-cert-hash sha256:aefae64bbe8e0d6ce173a272c2d2fb36bee6f2b5553301a68500c4c931d8f488

Follow-up steps

    mkdir -p $HOME/.kube
    cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    chown $(id -u):$(id -g) $HOME/.kube/config

Check the pods. kube-dns stays Pending until a pod network is deployed (section 2.6).

    kubectl get pods --all-namespaces
    NAMESPACE     NAME                            READY   STATUS    RESTARTS   AGE
    kube-system   etcd-k8s01                      1/1     Running   0          1m
    kube-system   kube-apiserver-k8s01            1/1     Running   0          1m
    kube-system   kube-controller-manager-k8s01   1/1     Running   0          1m
    kube-system   kube-dns-86f4d74b45-p2n2h       0/3     Pending   0          2m
    kube-system   kube-proxy-54kkv                1/1     Running   0          2m
    kube-system   kube-scheduler-k8s01            1/1     Running   0          1m
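Rather than re-running the listing by hand, the STATUS column can be checked in a script. The sketch below assumes the six-column layout shown above (with the NAMESPACE column present); `count_not_running` is a hypothetical helper name.

```shell
# Count pods whose STATUS is not "Running" in the output of
# `kubectl get pods --all-namespaces`, read from stdin.
count_not_running() {
    # Column 4 is STATUS when NAMESPACE is the first column; skip the header.
    awk 'NR > 1 && $4 != "Running" { n++ } END { print n + 0 }'
}

# Example polling loop on the master:
#   until [ "$(kubectl get pods --all-namespaces | count_not_running)" -eq 0 ]; do
#       sleep 5
#   done
```

This treats every status other than Running as not ready, including Completed, which is acceptable for the pods deployed in this guide but may need adjusting for clusters that run Jobs.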

2.6 Deploy the flannel network plugin

The manifest is in the yaml directory of the downloaded archive

    cd /data/v1.10.4/yaml
    # kubectl create -f kube-flannel.yaml
    clusterrole.rbac.authorization.k8s.io "flannel" created
    clusterrolebinding.rbac.authorization.k8s.io "flannel" created
    serviceaccount "flannel" created
    configmap "kube-flannel-cfg" created
    daemonset.extensions "kube-flannel-ds" created

Check the pods

    kubectl get pods --all-namespaces
    NAMESPACE     NAME                            READY   STATUS    RESTARTS   AGE
    kube-system   etcd-k8s01                      1/1     Running   0          1m
    kube-system   kube-apiserver-k8s01            1/1     Running   0          1m
    kube-system   kube-controller-manager-k8s01   1/1     Running   0          1m
    kube-system   kube-dns-86f4d74b45-p2n2h       3/3     Running   0          2m
    kube-system   kube-flannel-ds-fvzsz           1/1     Running   0          1m
    kube-system   kube-proxy-54kkv                1/1     Running   0          2m
    kube-system   kube-scheduler-k8s01            1/1     Running   0          1m

3. Node deployment

Follow steps 2.1, 2.2 and 2.3 on the node. If there are several nodes, repeat the same steps on each.

Join the cluster

    # kubeadm join 172.16.50.131:6443 --token z9is98.bjzc1t2kyzhx247v --discovery-token-ca-cert-hash sha256:aefae64bbe8e0d6ce173a272c2d2fb36bee6f2b5553301a68500c4c931d8f488
    [preflight] Running pre-flight checks.
    [WARNING FileExisting-crictl]: crictl not found in system path
    Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl
    [preflight] Starting the kubelet service
    [discovery] Trying to connect to API Server "172.16.50.131:6443"
    [discovery] Created cluster-info discovery client, requesting info from "https://172.16.50.131:6443"
    [discovery] Requesting info from "https://172.16.50.131:6443" again to validate TLS against the pinned public key
    [discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "172.16.50.131:6443"
    [discovery] Successfully established connection with API Server "172.16.50.131:6443"

    This node has joined the cluster:
    * Certificate signing request was sent to master and a response was received.
    * The Kubelet was informed of the new secure connection details.

    Run 'kubectl get nodes' on the master to see this node join the cluster.
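If the join command printed by `kubeadm init` has been lost, the value after `sha256:` can be recomputed from the CA certificate on the master. The sketch below follows the openssl pipeline given in the kubeadm documentation and assumes an RSA CA key, as in the certificate listing in section 2.4; `ca_cert_hash` is a hypothetical helper name.

```shell
# Print the SHA-256 hash of a CA certificate's public key, i.e. the value
# that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" |
        openssl rsa -pubin -outform der 2>/dev/null |
        openssl dgst -sha256 -hex |
        sed 's/^.* //'   # keep only the hex digest, drop the "(stdin)= " label
}

# On the master:
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
```

Bootstrap tokens expire (after 24 hours by default, as far as I know), so a node added later will probably also need a fresh token from `kubeadm token create` on the master.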

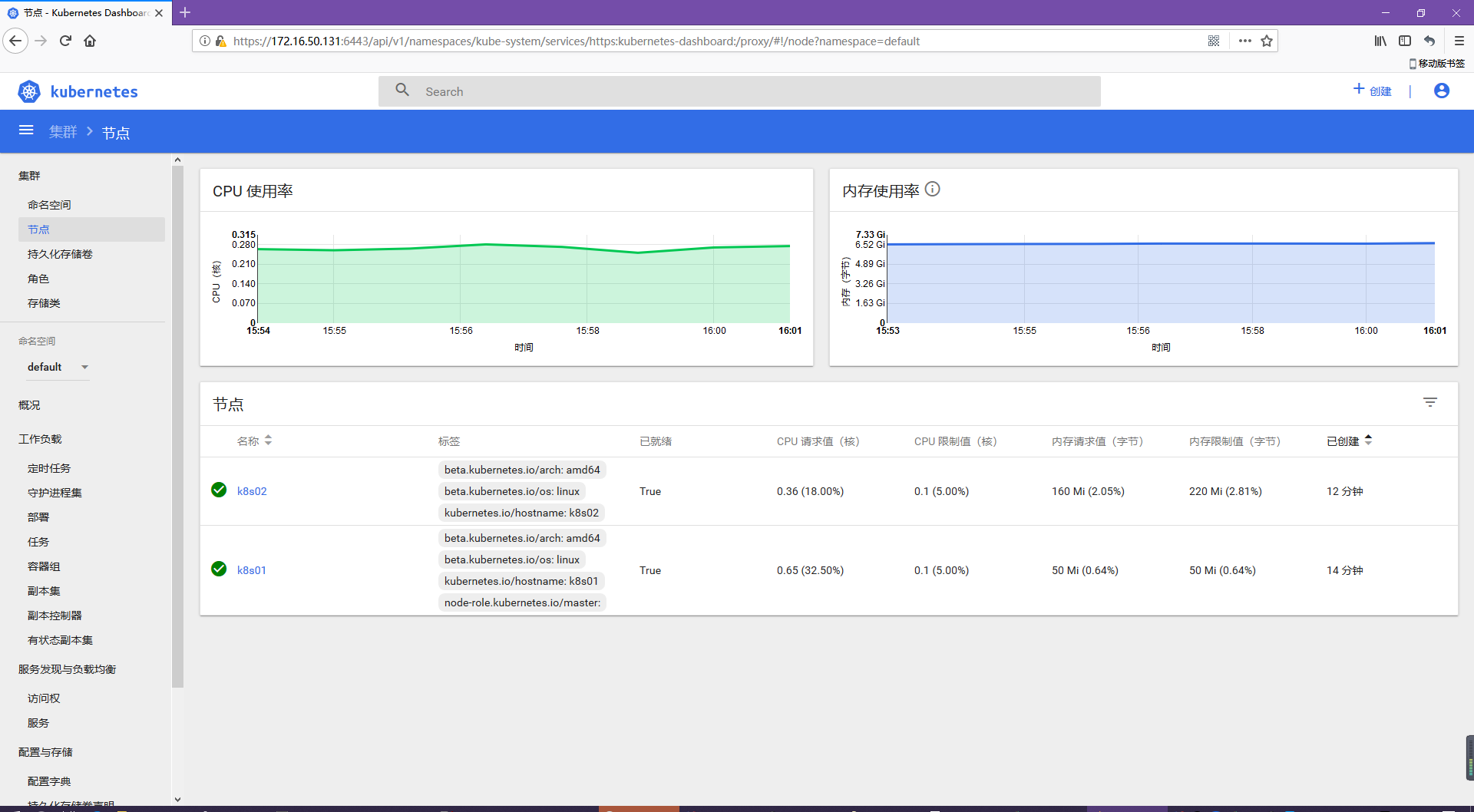

4. Check cluster node status

    kubectl get nodes
    NAME    STATUS   ROLES    AGE   VERSION
    k8s01   Ready    master   15m   v1.10.4
    k8s02   Ready    <none>   35s   v1.10.4

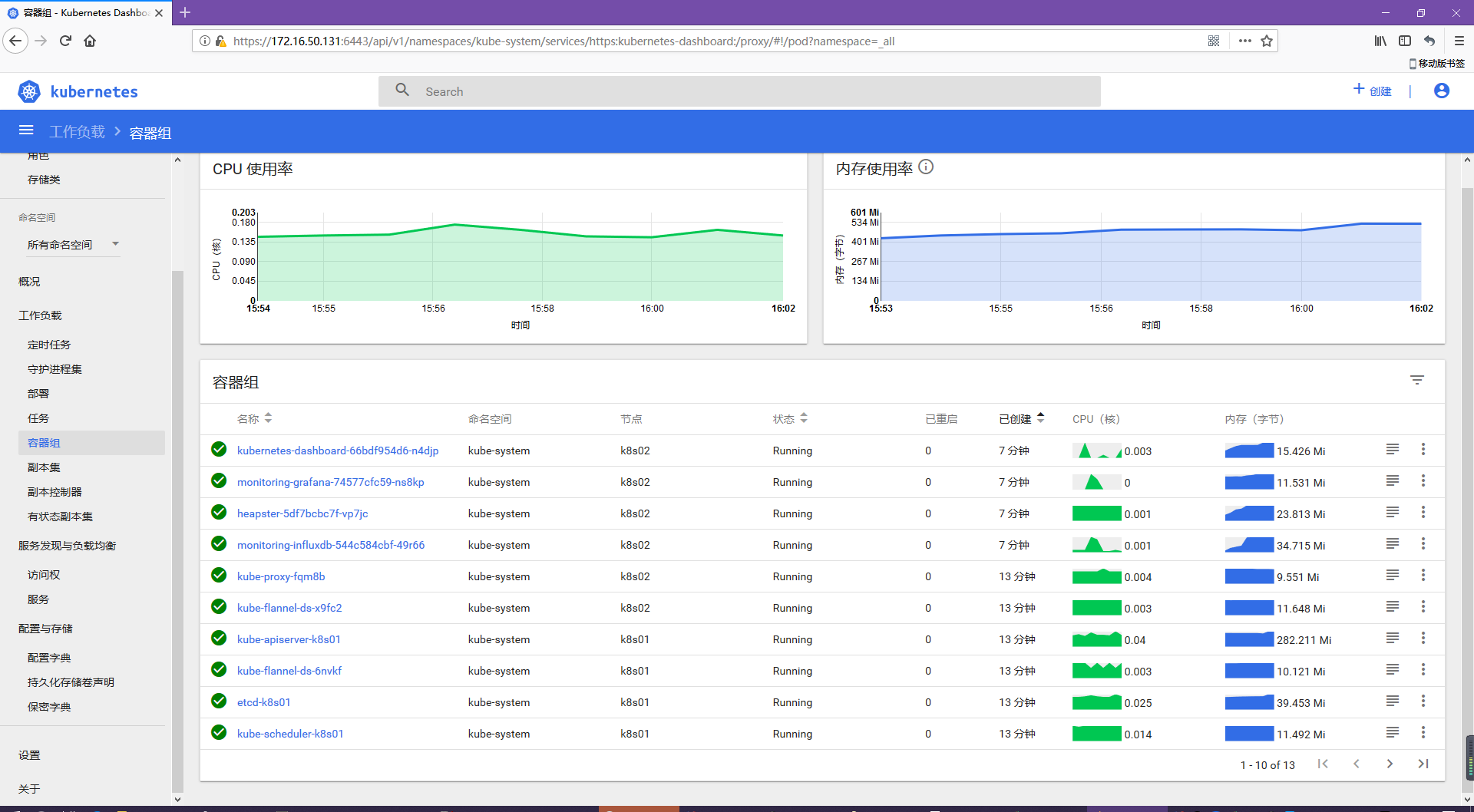

5. Add-on deployment (optional)

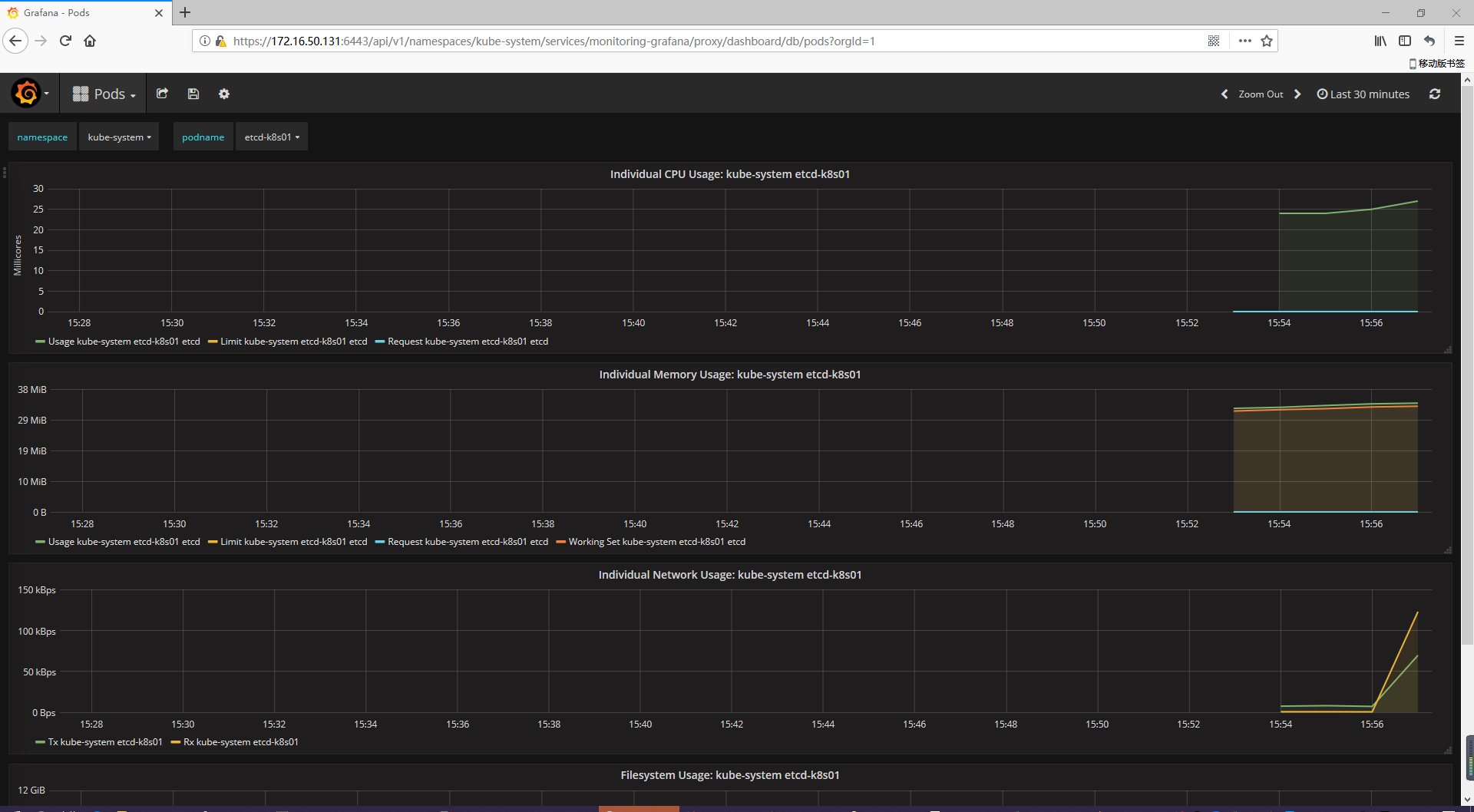

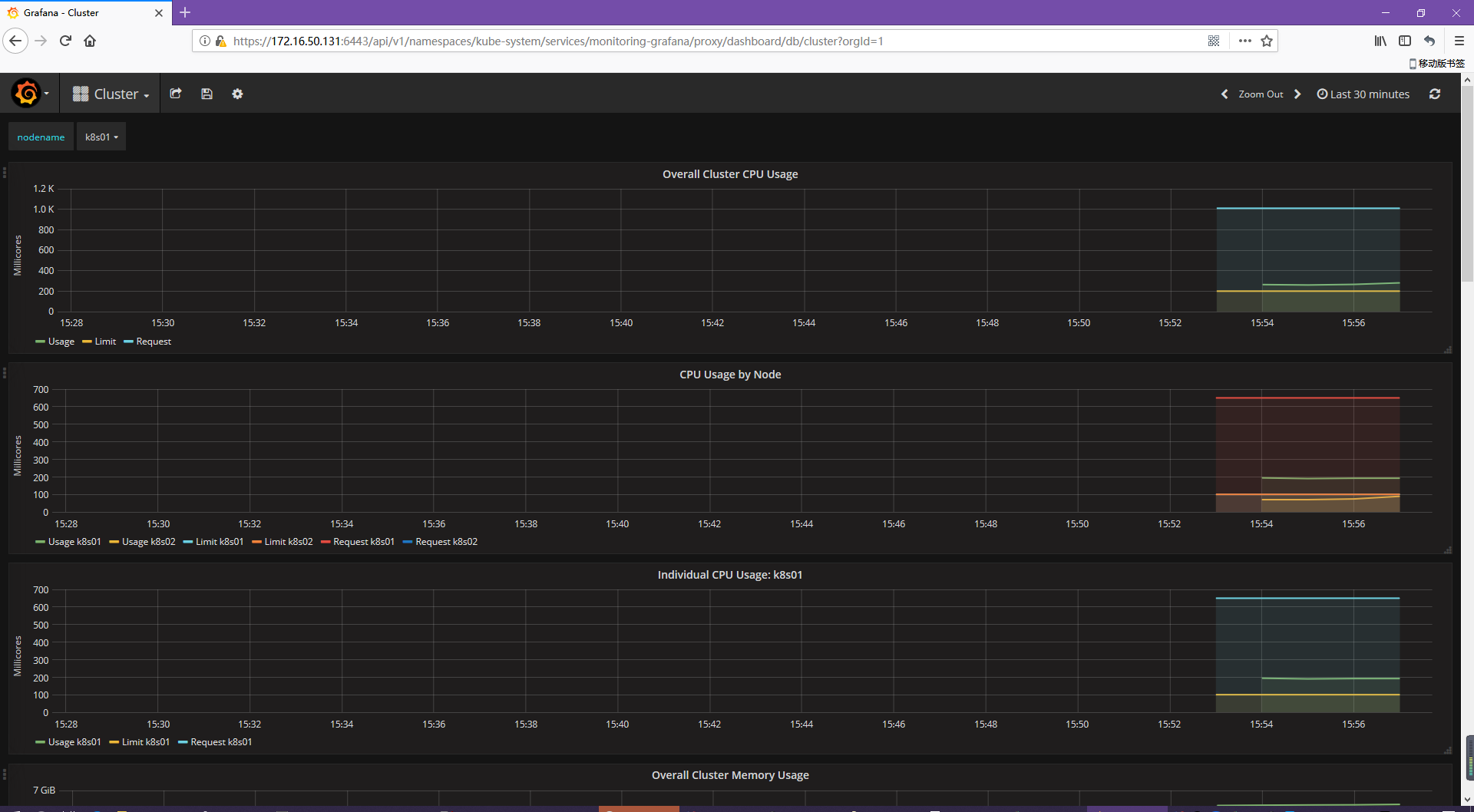

5.1 Monitoring add-ons

Deploy the time-series database container, which stores the monitoring data

    kubectl create -f influxdb.yaml
    deployment.extensions "monitoring-influxdb" created
    service "monitoring-influxdb" created

Check that it is running

    kubectl get pods --all-namespaces | grep influxdb
    kube-system   monitoring-influxdb-544c584cbf-w2x58   1/1   Running   0   2m

Heapster is configured by default to use InfluxDB as its storage backend. Heapster runs as a container in the cluster: the Heapster pod discovers all nodes in the cluster and queries usage information from each node's Kubelet, which in turn gets its data from cAdvisor.

    # kubectl create -f heapster.yaml
    clusterrolebinding.rbac.authorization.k8s.io "heapster" created
    serviceaccount "heapster" created
    deployment.extensions "heapster" created
    service "heapster" created

Check that it is running

    # kubectl get pods --all-namespaces | grep heapster
    kube-system   heapster-5df7bcbc7f-9wggz   1/1   Running   0   15s

Deploy the grafana container

    # kubectl create -f grafana.yaml
    deployment.extensions "monitoring-grafana" created
    service "monitoring-grafana" created

Check that it is running

    kubectl get pods --all-namespaces | grep grafana
    kube-system   monitoring-grafana-74577cfc59-jr75m   1/1   Running   0   1m

5.2 Dashboard add-on

    # kubectl create -f kubernetes-dashboard.yaml
    secret "kubernetes-dashboard-certs" created
    serviceaccount "kubernetes-dashboard" created
    role.rbac.authorization.k8s.io "kubernetes-dashboard-minimal" created
    rolebinding.rbac.authorization.k8s.io "kubernetes-dashboard-minimal" created
    deployment.apps "kubernetes-dashboard" created
    service "kubernetes-dashboard" created
    serviceaccount "kubernetes-dashboard-admin" created
    clusterrolebinding.rbac.authorization.k8s.io "kubernetes-dashboard-admin" created

Check that it is running

    # kubectl get pods --all-namespaces | grep dashboard
    kube-system   kubernetes-dashboard-66bdf954d6-qgknc   1/1   Running   0   1m

6. Export the certificate

    cat /etc/kubernetes/admin.conf | grep client-certificate-data | awk -F ': ' '{print $2}' | base64 -d > /etc/kubernetes/pki/client.crt

    cat /etc/kubernetes/admin.conf | grep client-key-data | awk -F ': ' '{print $2}' | base64 -d > /etc/kubernetes/pki/client.key

    openssl pkcs12 -export -inkey /etc/kubernetes/pki/client.key -in /etc/kubernetes/pki/client.crt -out /etc/kubernetes/pki/client.pfx

Download /etc/kubernetes/pki/client.pfx and import it into your browser. Firefox is recommended.
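The two extraction commands above differ only in the field name, so they can be folded into one function. This is a sketch, assuming each key appears once in the file (as in a kubeadm-generated admin.conf) and GNU `base64`; `kubeconfig_field` is a hypothetical helper name.

```shell
# Print the base64-decoded value of a "key: value" field in a kubeconfig file.
# Arguments: kubeconfig path, field name.
kubeconfig_field() {
    awk -v key="$2" '
        { sub(/^[ \t]+/, "") }              # strip YAML indentation
        index($0, key ": ") == 1 {          # line starts with "key: "
            print substr($0, length(key) + 3)
            exit
        }
    ' "$1" | base64 -d
}

# On the master, as root:
#   kubeconfig_field /etc/kubernetes/admin.conf client-certificate-data > client.crt
#   ( umask 077; kubeconfig_field /etc/kubernetes/admin.conf client-key-data > client.key )
```

The `umask 077` subshell keeps the extracted private key readable by its owner only.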

7. View monitoring

Open the following URL in the browser that has the client certificate imported:

    https://172.16.50.131:6443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
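The URL above follows the API server's service-proxy path pattern, so the same shape works for other services in the cluster. A small sketch, with `service_proxy_url` as a hypothetical helper name:

```shell
# Build the API-server proxy URL for a service.
# Arguments: API server host:port, namespace, service name.
service_proxy_url() {
    printf 'https://%s/api/v1/namespaces/%s/services/%s/proxy\n' "$1" "$2" "$3"
}

# Example:
#   service_proxy_url 172.16.50.131:6443 kube-system monitoring-grafana
```

For a service that itself serves HTTPS, the Kubernetes proxy convention is to write the service name as `https:NAME:`. For the dashboard deployed in 5.2 that would presumably be `https:kubernetes-dashboard:`, which is an assumption not confirmed by this guide.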

8. Dashboard access

Authenticate with a token. To look up the token:

    # kubectl describe sa kubernetes-dashboard-admin -n kube-system
    Name:                kubernetes-dashboard-admin
    Namespace:           kube-system
    Labels:              k8s-app=kubernetes-dashboard
    Annotations:         <none>
    Image pull secrets:  <none>
    Mountable secrets:   kubernetes-dashboard-admin-token-wtk6d
    Tokens:              kubernetes-dashboard-admin-token-wtk6d
    Events:              <none>

    # kubectl describe secrets kubernetes-dashboard-admin-token-wtk6d -n kube-system
    Name:         kubernetes-dashboard-admin-token-wtk6d
    Namespace:    kube-system
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name=kubernetes-dashboard-admin
                  kubernetes.io/service-account.uid=e38d6bdf-6fa7-11e8-acf2-1e0086000052
    Type:  kubernetes.io/service-account-token
    Data
    ====
    ca.crt:     1143 bytes
    namespace:  11 bytes
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi13dGs2ZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImUzOGQ2YmRmLTZmYTctMTFlOC1hY2YyLTFlMDA4NjAwMDA1MiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.dVQ8CgMrYe0bJ2vt0EZIBeyl5TtG9pD4D-jkkvNGOR37efeyCAUGgSyUnR_Bqt0MbgPPWXLkpkXKbz3LIzker9mSBToeRYgEWSfmyVvhiKxpqkS0S_YiLgYL_KqvOPKNHFegNq8YerplK64kLw2-Tm4Ccnkf3d0iADJLzXuuw3A
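To copy only the token without the surrounding fields, the `describe` output can be filtered. A minimal sketch, with `token_from_describe` as a hypothetical helper name:

```shell
# Print the token value from `kubectl describe secret` output on stdin.
token_from_describe() {
    # The token line has the form "token:      <value>".
    awk '$1 == "token:" { print $2; exit }'
}

# Example, using the secret name shown above:
#   kubectl describe secrets kubernetes-dashboard-admin-token-wtk6d -n kube-system | token_from_describe
```

The secret name suffix (`wtk6d` here) is generated per cluster, so it has to be looked up with the `describe sa` command first.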

9. Nginx Ingress deployment

The YAML file is as follows:

    ---
    apiVersion: v1
    kind: Namespace
    metadata:
      name: ingress-nginx
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: default-http-backend
      labels:
        app: default-http-backend
      namespace: ingress-nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: default-http-backend
      template:
        metadata:
          labels:
            app: default-http-backend
        spec:
          terminationGracePeriodSeconds: 60
          containers:
          - name: default-http-backend
            # Any image is permissible as long as:
            # 1. It serves a 404 page at /
            # 2. It serves 200 on a /healthz endpoint
            image: registry.cn-hangzhou.aliyuncs.com/googles_containers/defaultbackend:1.4
            livenessProbe:
              httpGet:
                path: /healthz
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 30
              timeoutSeconds: 5
            ports:
            - containerPort: 8080
            resources:
              limits:
                cpu: 10m
                memory: 20Mi
              requests:
                cpu: 10m
                memory: 20Mi
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: default-http-backend
      namespace: ingress-nginx
      labels:
        app: default-http-backend
    spec:
      ports:
      - port: 80
        targetPort: 8080
      selector:
        app: default-http-backend
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: nginx-configuration
      namespace: ingress-nginx
      labels:
        app: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: tcp-services
      namespace: ingress-nginx
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: udp-services
      namespace: ingress-nginx
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nginx-ingress-serviceaccount
      namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: nginx-ingress-clusterrole
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - endpoints
          - nodes
          - pods
          - secrets
        verbs:
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - services
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - "extensions"
        resources:
          - ingresses
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - events
        verbs:
          - create
          - patch
      - apiGroups:
          - "extensions"
        resources:
          - ingresses/status
        verbs:
          - update
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: Role
    metadata:
      name: nginx-ingress-role
      namespace: ingress-nginx
    rules:
      - apiGroups:
          - ""
        resources:
          - configmaps
          - pods
          - secrets
          - namespaces
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - configmaps
        resourceNames:
          # Defaults to "<election-id>-<ingress-class>"
          # Here: "<ingress-controller-leader>-<nginx>"
          # This has to be adapted if you change either parameter
          # when launching the nginx-ingress-controller.
          - "ingress-controller-leader-nginx"
        verbs:
          - get
          - update
      - apiGroups:
          - ""
        resources:
          - configmaps
        verbs:
          - create
      - apiGroups:
          - ""
        resources:
          - endpoints
        verbs:
          - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: RoleBinding
    metadata:
      name: nginx-ingress-role-nisa-binding
      namespace: ingress-nginx
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: nginx-ingress-role
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: nginx-ingress-clusterrole-nisa-binding
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: nginx-ingress-clusterrole
    subjects:
      - kind: ServiceAccount
        name: nginx-ingress-serviceaccount
        namespace: ingress-nginx
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: nginx-ingress-controller
      namespace: ingress-nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: ingress-nginx
      template:
        metadata:
          labels:
            app: ingress-nginx
          annotations:
            prometheus.io/port: '10254'
            prometheus.io/scrape: 'true'
        spec:
          hostNetwork: true
          serviceAccountName: nginx-ingress-serviceaccount
          containers:
            - name: nginx-ingress-controller
              image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:0.15.0
              args:
                - /nginx-ingress-controller
                - --default-backend-service=$(POD_NAMESPACE)/default-http-backend
                - --configmap=$(POD_NAMESPACE)/nginx-configuration
                - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
                - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
                - --publish-service=$(POD_NAMESPACE)/ingress-nginx
                - --annotations-prefix=nginx.ingress.kubernetes.io
              env:
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              ports:
              - name: http
                containerPort: 80
              - name: https
                containerPort: 443
              livenessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 1
              readinessProbe:
                failureThreshold: 3
                httpGet:
                  path: /healthz
                  port: 10254
                  scheme: HTTP
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 1
              securityContext:
                runAsNonRoot: false
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: ingress-nginx
      namespace: ingress-nginx
    spec:
      type: NodePort
      ports:
      - name: http
        port: 80
        targetPort: 80
        protocol: TCP
      - name: https
        port: 443
        targetPort: 443
        protocol: TCP
      selector:
        app: ingress-nginx

In the official YAML file, the nginx-ingress-controller Deployment does not expose any port on the host, so hostNetwork: true has been added here. The images have also been switched to the Aliyun registry. Official reference: https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml

The official ingress-nginx Service is a separate YAML file; here it has been merged into the same file. Official reference: https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/provider/baremetal/service-nodeport.yaml

Create an HTTP Ingress

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: demo
    spec:
      rules:
      - host: demo.bs.com
        http:
          paths:
          - path: /
            backend:
              serviceName: nginx
              servicePort: 80

Test

172.16.50.132 is the server on which the nginx-ingress-controller container runs

    echo "172.16.50.132 demo.bs.com" >> /etc/hosts
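Running the `echo ... >> /etc/hosts` line more than once leaves duplicate entries. An idempotent variant might look like the sketch below; `add_hosts_entry` is a hypothetical helper name, and the optional third argument exists only so the function can be tried on a scratch file.

```shell
# Append "IP HOSTNAME" to a hosts file unless the hostname is already mapped.
# Arguments: IP, hostname, hosts file (defaults to /etc/hosts).
add_hosts_entry() {
    hosts_file=${3:-/etc/hosts}
    # Compare whole fields so demo.bs.com does not match demo.bs.com.cn.
    if awk -v h="$2" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
        END { exit !found }
    ' "$hosts_file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$1" "$2" >> "$hosts_file"
}

# As root:
#   add_hosts_entry 172.16.50.132 demo.bs.com
# Alternative that leaves /etc/hosts untouched:
#   curl -H 'Host: demo.bs.com' http://172.16.50.132/
```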

curl demo.bs.com

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

To be updated...