keepalived實現nginx高可用

1、環境說明

| IP | 服務 | 作用 |

|---|---|---|

| 192.168.1.101 | nginx + keepalived | master |

| 192.168.1.102 | nginx + keepalived | backup |

| 192.168.1.103 | 虛擬ip(VIP) |

- 說明:

系統:CentOS 6.10

master配一個,backup可以配置多個;

虛擬ip(VIP):192.168.1.103,對外提供服務的ip,也可稱作浮動ip

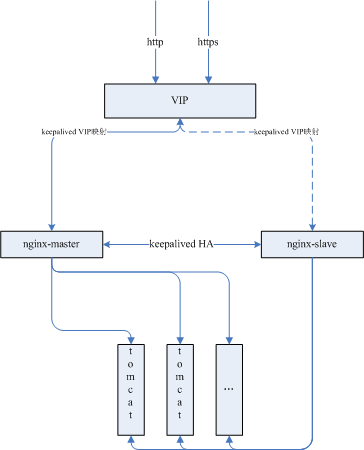

各個組件之間的關係圖如下:

tomcat的安裝不在本博客範圍之內;

2. Installing and configuring nginx

2.1 Install nginx

Install on every node, master and backup.

Configure the official nginx repository

Run vim /etc/yum.repos.d/nginx.repo and add the following:

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/$releasever/$basearch/

gpgcheck=0

enabled=1

Install:

yum install nginx -y

2.2 Master node configuration

2.2.1 Remove unused configuration (optional)

Run vim /etc/nginx/conf.d/default.conf and change the file to the following:

server {

listen 80;

server_name localhost;

access_log /var/log/nginx/host.access.log main;

location / {

root /usr/share/nginx/html;

index index.html index.htm;

}

error_page 404 /404.html;

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

2.2.2 Change the default nginx page

Run vim /usr/share/nginx/html/index.html and change only line 14, as shown:

1 <!DOCTYPE html>

2 <html>

3 <head>

4 <title>Welcome to nginx!</title>

5 <style>

6 body {

7 width: 35em;

8 margin: 0 auto;

9 font-family: Tahoma, Verdana, Arial, sans-serif;

10 }

11 </style>

12 </head>

13 <body>

14 <h1>Welcome to nginx! test keepalived master!</h1>

15 <p>If you see this page, the nginx web server is successfully installed and

16 working. Further configuration is required.</p>

17

18 <p>For online documentation and support please refer to

19 <a href="http://nginx.org/">nginx.org</a>.<br/>

20 Commercial support is available at

21 <a href="http://nginx.com/">nginx.com</a>.</p>

22

23 <p><em>Thank you for using nginx.</em></p>

24 </body>

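25 </html>

2.3 Backup node configuration

On the backup, change only line 14 of /usr/share/nginx/html/index.html as shown below; everything else is the same as on the master.

<h1>Welcome to nginx! test keepalived backup!</h1>

3. The keepalived service

3.1 What is keepalived?

Keepalived can configure and manage LVS and health-check the nodes behind LVS. It can also provide high availability for network services on its own, which prevents a single point of failure.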

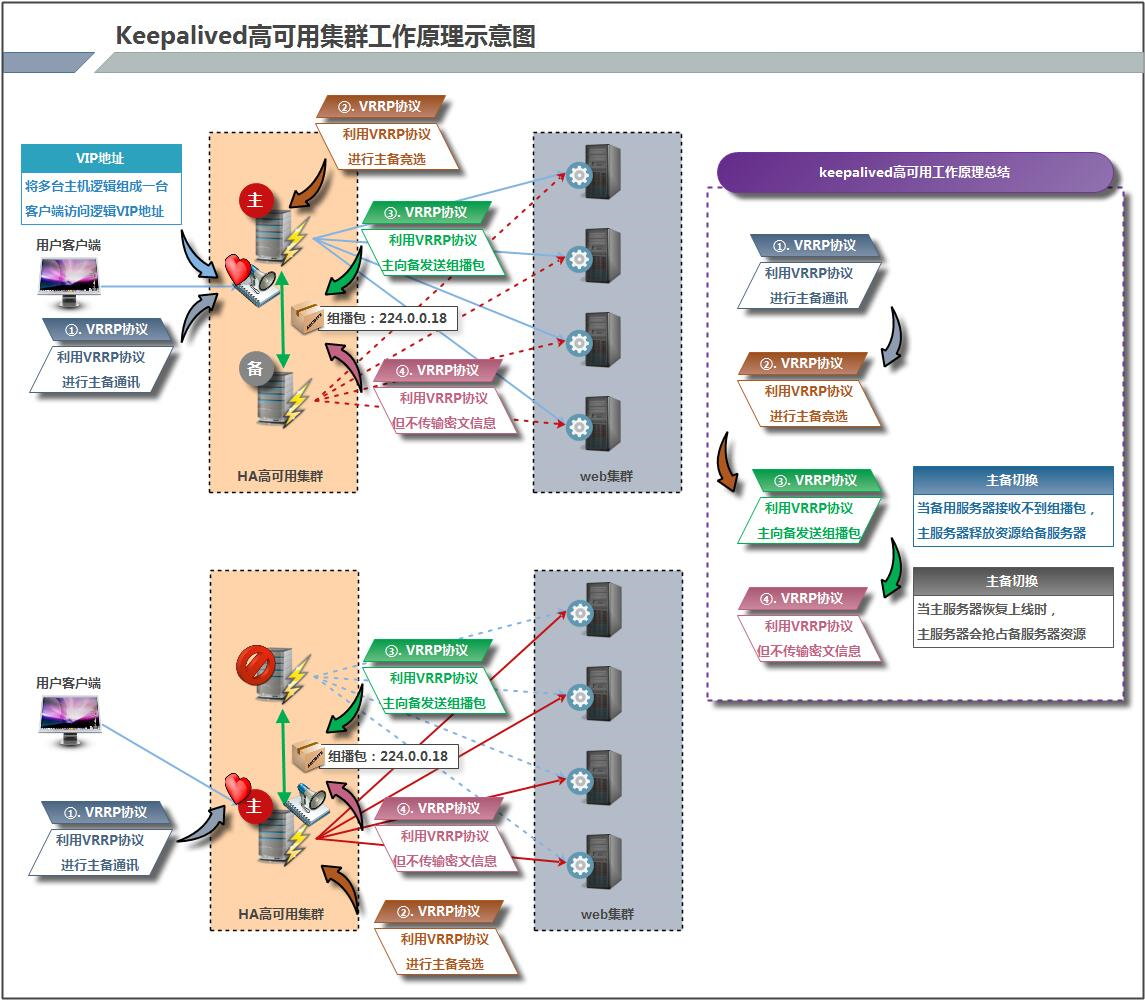

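3.2 How keepalived works

keepalived is built on VRRP, the Virtual Router Redundancy Protocol.

VRRP can be thought of as a protocol for making routers highly available. N routers that provide the same function form a group with one master and several backups. The master holds a VIP that serves external clients (other machines on the same LAN use that VIP as their default route) and sends multicast VRRP advertisements to tell the backups that it is still alive. When the backups stop receiving advertisements, they consider the master down, and one of them is elected as the new master according to VRRP priority. This keeps the router, and therefore the service, available; at best, takeover takes less than 1 second.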
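To make the takeover timing concrete, here is a small sketch of how a VRRPv2 backup decides that the master is gone, using the timers defined in RFC 3768: Skew_Time = (256 - priority) / 256 and Master_Down_Interval = 3 * advert_int + Skew_Time. The function name master_down_interval is made up for this illustration; the inputs 1 and 90 are the advert_int and backup priority used later in this post.

```shell
#!/bin/bash
# Sketch: VRRPv2 (RFC 3768) master-down timer, in seconds.
# master_down_interval is a hypothetical helper name, not part of keepalived.
master_down_interval() {
    local advert_int=$1 priority=$2
    awk -v a="$advert_int" -v p="$priority" \
        'BEGIN { printf "%.3f\n", 3 * a + (256 - p) / 256 }'
}

# Backup with priority 90 and a 1-second advertisement interval.
master_down_interval 1 90
```

A backup with a higher priority has a smaller skew and therefore times out first, which is how priority decides the winner. The sub-second takeover applies when the master shuts down cleanly: it sends an advertisement with priority 0, and the backups then wait only the skew time instead of the full interval.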

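3.3 keepalived has three main modules: core, check, and vrrp.

The core module is the heart of keepalived. It starts and maintains the main process and loads and parses the global configuration file.

The check module performs health checks and supports the common check methods.

The vrrp module implements the VRRP protocol.

3.4 High availability with keepalived versus ZooKeeper

- Keepalived:

  - Pros: simple. High availability and master/backup failover work with almost no changes at the application layer, and the downtime during failover is short.

  - Cons: also its simplicity. VRRP and master/backup switching have no sophisticated logic, so some special cases are not handled; for example, a broken link between master and backup leads to split brain. keepalived is also not well suited to load balancing.

- Zookeeper:

  - Pros: supports both high availability and load balancing, and is itself a distributed service.

  - Cons: tightly coupled to the application. The application code has to implement the ZooKeeper logic, such as registering a name and looking up the service address for a name.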

4. Configuring keepalived

4.1 Install keepalived

Install on every node, master and backup.

[root@node1 ~]# yum install keepalived -y

[root@node1 ~]# rpm -ql keepalived

/etc/keepalived

/etc/keepalived/keepalived.conf # main keepalived configuration file

/etc/rc.d/init.d/keepalived # service start script (init.d scripts are used before CentOS 7, systemd from CentOS 7 on)

/etc/sysconfig/keepalived

/usr/bin/genhash

/usr/libexec/keepalived

/usr/sbin/keepalived

/usr/share/doc/keepalived-1.2.13

... ...

/usr/share/man/man1/genhash.1.gz

/usr/share/man/man5/keepalived.conf.5.gz

/usr/share/man/man8/keepalived.8.gz

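/usr/share/snmp/mibs/KEEPALIVED-MIB.txt

4.2 Default configuration, annotated

[root@node1 keepalived]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived # global definitions

global_defs {

notification_email { # addresses that receive a notification email when an event such as a failover occurs

[email protected] # alert recipients; several are allowed, one per line. Requires the local sendmail service to be running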

[email protected]

[email protected]

}

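notification_email_from [email protected] # sender address for notification emails, for example on failover

smtp_server 192.168.200.1 # SMTP server used to send the email

smtp_connect_timeout 30 # timeout for connecting to the SMTP server

router_id LVS_DEVEL # identifier of the machine running keepalived, usually the hostname; it appears in the subject of notification emails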

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

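}

# Virtual IP configuration (vrrp)

vrrp_instance VI_1 { # within one virtual_router_id, the node with the highest priority becomes master and takes over the VIP; if it fails, the node with the next highest priority takes over

state MASTER

# Role of this node: MASTER marks the primary server, BACKUP a standby server.

# Note that state only sets the initial state of the instance. The actual role is still decided by election according to priority.

# If a node is set to MASTER here but has a lower priority than another node, the other node sees the lower priority in this node's advertisements and preempts it to become MASTER.

interface eth1 # network interface the virtual IP is bound to; the same interface that carries this machine's IP address (eth1 here)

virtual_router_id 51 # virtual router ID, a number that identifies the VRRP instance; MASTER and BACKUP of the same vrrp_instance must use the same value

priority 100 # priority; the larger the number, the higher the priority. Within one vrrp_instance the MASTER must have a higher priority than the BACKUP. Usable range: 1-254 (VRRP reserves 0 and 255)

advert_int 1 # interval between VRRP advertisements sent by the MASTER to the BACKUP nodes, in seconds

authentication { # authentication type and password; must be the same on master and backup

auth_type PASS # VRRP authentication type, either PASS or AH

auth_pass 1111 # VRRP password; MASTER and BACKUP of the same vrrp_instance must use the same password to communicate

}

## Add the track_script block to the instance block

track_script {

chk_nginx ## the nginx monitoring script to run

}

virtual_ipaddress { # VRRP HA virtual addresses; put each additional VIP on its own line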

192.168.200.16

192.168.200.17

192.168.200.18

}

}

virtual_server 192.168.200.100 443 {

delay_loop 6

lb_algo rr

lb_kind NAT

nat_mask 255.255.255.0

persistence_timeout 50

protocol TCP

real_server 192.168.201.100 443 {

weight 1

SSL_GET {

url {

path /

digest ff20ad2481f97b1754ef3e12ecd3a9cc

}

url {

path /mrtg/

digest 9b3a0c85a887a256d6939da88aabd8cd

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

virtual_server 10.10.10.2 1358 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

sorry_server 192.168.200.200 1358

real_server 192.168.200.2 1358 {

weight 1

HTTP_GET {

url {

path /testurl/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl2/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl3/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.200.3 1358 {

weight 1

HTTP_GET {

url {

path /testurl/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334c

}

url {

path /testurl2/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334c

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

virtual_server 10.10.10.3 1358 {

delay_loop 3

lb_algo rr

lb_kind NAT

nat_mask 255.255.255.0

persistence_timeout 50

protocol TCP

real_server 192.168.200.4 1358 {

weight 1

HTTP_GET {

url {

path /testurl/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl2/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl3/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.200.5 1358 {

weight 1

HTTP_GET {

url {

path /testurl/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl2/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl3/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

4.3 Master load balancer configuration

[root@master ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_01

}

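## keepalived runs the script periodically, evaluates its exit status, and adjusts the priority of the vrrp_instance dynamically.

# If the script exits with 0 and weight is greater than 0, the priority is increased by weight.

# If the script exits with a non-zero status and weight is less than 0, the priority is decreased by the absolute value of weight.

# In all other cases the priority stays at the value of priority in the configuration file.

vrrp_script chk_nginx {

script "/etc/keepalived/nginx_check.sh" # path of the script that checks nginx

interval 2 # check interval: run the script every 2 seconds

weight -5 # priority change driven by the script result: on failure (non-zero exit) the priority drops by 5

fall 2 # two consecutive failures are required before the check counts as failed; weight is then applied to lower the priority (kept within 1-255)

rise 1 # a single success marks the check as OK again; the priority is not raised above the configured value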

}

vrrp_instance VI_1 {

state MASTER

interface eth1

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

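## Add the track_script block to the instance block

track_script { # the checks to run. Note: do not place this block immediately after the vrrp_script block (a pitfall hit during testing), or nginx monitoring will not work

chk_nginx # references the VRRP script by the name given in the vrrp_script section; it runs periodically to adjust the priority and eventually trigger a failover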

}

virtual_ipaddress {

192.168.1.103

}

}

... ...
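The weight rules in the comments above are easy to check with a little arithmetic. The sketch below is only an illustration (effective_priority and elect are hypothetical helper names, and elect ignores the tie-break VRRP applies on equal priorities). With the values from this post, a failing master drops from 100 to 95, which is still above the backup's 90, so the weight alone would not move the VIP; the check script in section 5.1 covers that case by stopping keepalived.

```shell
#!/bin/bash
# Sketch of the weight arithmetic; helper names are hypothetical.
# effective_priority BASE WEIGHT SCRIPT_EXIT
effective_priority() {
    local base=$1 weight=$2 rc=$3
    if [ "$rc" -eq 0 ] && [ "$weight" -gt 0 ]; then
        echo $((base + weight))      # success with positive weight: raise
    elif [ "$rc" -ne 0 ] && [ "$weight" -lt 0 ]; then
        echo $((base + weight))      # failure with negative weight: lower
    else
        echo "$base"                 # otherwise keep the configured priority
    fi
}

# elect MASTER_PRIO BACKUP_PRIO: print which node would hold the VIP.
# Simplification: a tie is given to the current master.
elect() {
    if [ "$1" -ge "$2" ]; then echo master; else echo backup; fi
}

failed=$(effective_priority 100 -5 1)   # master's nginx check fails
echo "master priority after failure: $failed"
echo "VIP holder with weight -5:  $(elect "$failed" 90)"
echo "VIP holder with weight -20: $(elect "$(effective_priority 100 -20 1)" 90)"
```

A weight larger than the 10-point gap between the two priorities would let keepalived hand over the VIP by priority alone, without killing the daemon.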

4.4 Backup load balancer configuration

[root@slave ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_02

}

vrrp_script chk_nginx {

script "/etc/keepalived/nginx_check.sh"

interval 3

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface eth1

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

# Add the track_script block to the instance block

track_script {

chk_nginx # the nginx monitoring script to run

}

virtual_ipaddress {

192.168.1.103

}

}

... ...
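Because virtual_router_id and the authentication settings must match on both nodes, a quick comparison can catch copy-paste mistakes. This is a rough sketch: conf_value is a hypothetical helper that only understands simple "key value" lines, and the two temporary files stand in for the master's and backup's keepalived.conf.

```shell
#!/bin/bash
# conf_value FILE KEY: print the first value of KEY in a keepalived.conf.
# Hypothetical helper for illustration; handles simple "key value" lines only.
conf_value() {
    awk -v key="$2" '$1 == key { print $2; exit }' "$1"
}

# Stand-in files; on real nodes compare the two /etc/keepalived/keepalived.conf.
master_conf=$(mktemp)
backup_conf=$(mktemp)
printf 'virtual_router_id 51\npriority 100\nauth_pass 1111\n' > "$master_conf"
printf 'virtual_router_id 51\npriority 90\nauth_pass 1111\n'  > "$backup_conf"

for key in virtual_router_id auth_pass; do
    if [ "$(conf_value "$master_conf" "$key")" = "$(conf_value "$backup_conf" "$key")" ]; then
        echo "$key: match"
    else
        echo "$key: MISMATCH"
    fi
done
```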

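5. Testing

5.1 Write the nginx check script

On every node, create the nginx status check script /etc/keepalived/nginx_check.sh (already referenced in keepalived.conf).

What the script must do: if nginx has stopped, try to start it; if it cannot be started, kill the local keepalived process so that keepalived binds the virtual IP to the BACKUP machine.

The script:

[root@master ~]# vim /etc/keepalived/nginx_check.sh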

#!/bin/bash

set -x # trace commands for debugging

# Number of running nginx processes; 0 means nginx is down.

nginx_status=$(ps -C nginx --no-header | wc -l)

if [ "${nginx_status}" -eq 0 ]; then

service nginx start

sleep 1

if [ "$(ps -C nginx --no-header | wc -l)" -eq 0 ]; then # nginx restart failed

echo "$(date): nginx is not healthy, try to killall keepalived!" >> /etc/keepalived/keepalived.log

killall keepalived

exit 1 # report the failure to keepalived as well

fi

fi

exit 0
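The decision flow of the check script can be exercised without touching a real nginx by passing the "count processes" and "start service" steps in as commands. The sketch below is only a test harness for that logic: check_service and the stub functions are hypothetical names, and it returns a status instead of killing keepalived.

```shell
#!/bin/bash
# check_service COUNT_CMD START_CMD
# Returns 0 if the service is running (possibly after one restart), 1 otherwise.
check_service() {
    local count_cmd=$1 start_cmd=$2
    if [ "$("$count_cmd")" -eq 0 ]; then
        "$start_cmd"
        if [ "$("$count_cmd")" -eq 0 ]; then
            return 1        # restart failed; the real script kills keepalived here
        fi
    fi
    return 0
}

# Stub simulating an nginx that is down and cannot be restarted.
count_down() { echo 0; }
start_noop() { :; }
# Stub simulating a healthy nginx with one master and one worker process.
count_up()   { echo 2; }

check_service count_up start_noop   && echo "healthy: no action"
check_service count_down start_noop || echo "restart failed: would stop keepalived"
```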

[root@master ~]# chmod +x /etc/keepalived/nginx_check.sh

[root@master ~]# ll /etc/keepalived/nginx_check.sh

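-rwxr-xr-x 1 root root 338 2019-02-15 14:11 /etc/keepalived/nginx_check.sh

5.2 Start nginx and keepalived on all nodes

- Start nginx

service nginx start

- Start keepalived

The relevant commands are:

chkconfig keepalived on # start keepalived at boot

service keepalived start # start the service

service keepalived stop # stop the service

service keepalived restart # restart the service

Once keepalived is running normally it has 3 processes: a parent process that supervises its children, a vrrp child process, and a checkers child process. In the output below, the fourth process (PID 7481) is a child of 3655 and is most likely a short-lived child forked to run the check script.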

[root@master ~]# ps -ef | grep keepalived

root 3653 1 0 14:18 ? 00:00:00 /usr/sbin/keepalived -D

root 3654 3653 0 14:18 ? 00:00:02 /usr/sbin/keepalived -D

root 3655 3653 0 14:18 ? 00:00:03 /usr/sbin/keepalived -D

root 7481 3655 0 15:19 ? 00:00:00 /usr/sbin/keepalived -D

root 7483 1323 0 15:19 pts/0 00:00:00 grep --color=auto keepalived
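To see which keepalived process is the parent and which are its direct children, the PPID column of ps -ef is enough. A small sketch, fed with the sample output above; keepalived_children is a hypothetical helper name, and it assumes the top-level keepalived has PPID 1, as in this output.

```shell
#!/bin/bash
# keepalived_children: read `ps -ef` output on stdin and print the PIDs of
# keepalived processes whose parent is the top-level keepalived (PPID 1).
keepalived_children() {
    awk '$8 ~ /keepalived$/ && $3 == 1 { parent = $2 }
         $8 ~ /keepalived$/            { ppid[$2] = $3 }
         END { for (p in ppid) if (ppid[p] == parent) print p }' | sort -n
}

keepalived_children <<'EOF'
root 3653 1 0 14:18 ? 00:00:00 /usr/sbin/keepalived -D
root 3654 3653 0 14:18 ? 00:00:02 /usr/sbin/keepalived -D
root 3655 3653 0 14:18 ? 00:00:03 /usr/sbin/keepalived -D
root 7481 3655 0 15:19 ? 00:00:00 /usr/sbin/keepalived -D
EOF
```

On a live node the same function can be used as ps -ef | keepalived_children.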

5.3 IP information on the master load balancer: 192.168.1.101

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000

link/ether b0:51:8e:01:9b:b0 brd ff:ff:ff:ff:ff:ff

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:20:ae:75 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.101/24 brd 192.168.1.255 scope global eth1

inet 192.168.1.103/32 scope global eth1

inet6 fe80::20c:29ff:fe20:ae75/64 scope link

valid_lft forever preferred_lft forever

5.4 IP information on the backup load balancer: 192.168.1.102

[root@slave ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000

link/ether b0:51:8e:01:9b:b0 brd ff:ff:ff:ff:ff:ff

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:7d:6a:24 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.102/24 brd 192.168.1.255 scope global eth1

inet6 fe80::20c:29ff:fe7d:6a24/64 scope link

valid_lft forever preferred_lft forever

As shown above, the virtual IP (VIP) is currently active on 192.168.1.101.
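Checking by eye works, but the same test is easy to script. The sketch below reads ip a output on standard input and reports whether the VIP is bound; has_vip is a hypothetical helper name, and the sample text is taken from the master's output above.

```shell
#!/bin/bash
# has_vip VIP: read `ip a` output on stdin; succeed if VIP is bound.
# Hypothetical helper; on a live node run:  ip a | has_vip 192.168.1.103
has_vip() {
    grep -qF "inet $1/"
}

sample='    inet 192.168.1.101/24 brd 192.168.1.255 scope global eth1
    inet 192.168.1.103/32 scope global eth1'

if printf '%s\n' "$sample" | has_vip 192.168.1.103; then
    echo "VIP is bound on this node"
else
    echo "VIP is not bound on this node"
fi
```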

5.5、測試

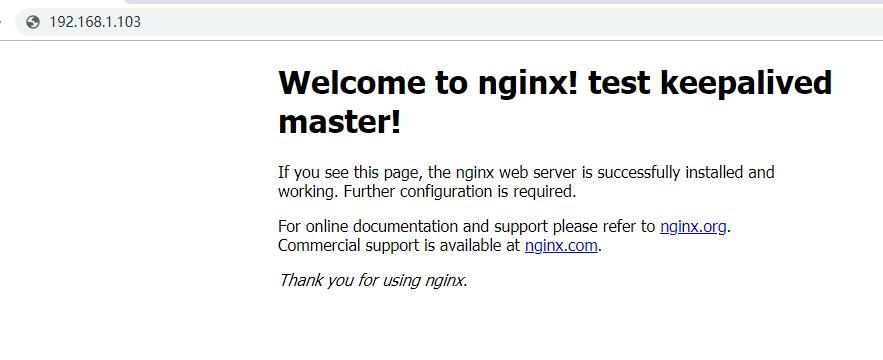

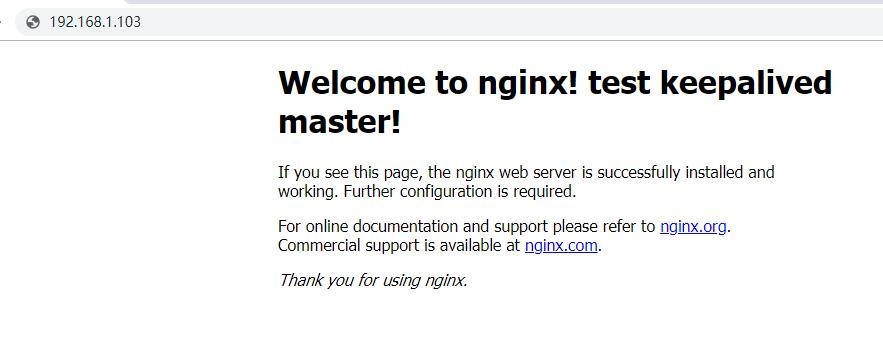

通過VIP(192.168.1.103)來訪問nginx,結果如下:

以上可知,現在生效的nginx代理機器是1.101;我們停掉機器1.101上面的keepalived

[root@master ~]# service keepalived stop

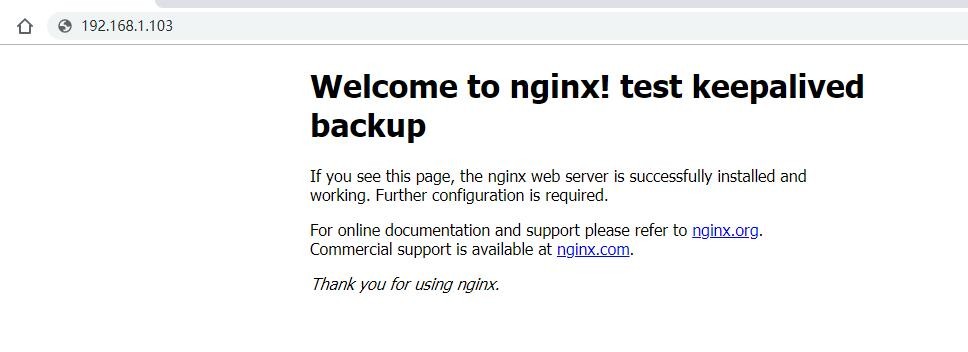

停止 keepalived: [確定]再使用VIP(192.168.1.103)訪問nginx服務,結果如下:

以上可知,現在生效的nginx代理機器是1.102;我們重啓機器1.101上面的keepalived

[root@master ~]# service keepalived start

正在啓動 keepalived: [確定]再使用VIP(192.168.1.103)訪問nginx服務,結果如下:

停止 nginx ;查看 nginx 監測腳本是否有效:

[root@master ~]# service nginx status

nginx (pid 19617) 正在運行...

[root@master ~]# service nginx stop

停止 nginx: [確定]

[root@master ~]# service nginx status

nginx (pid 23595) 正在運行...

[root@master ~]#至此,Keepalived + Nginx 實現高可用 Web 負載均衡搭建完畢!
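The browser checks above can also be done from a shell, which makes it easier to watch a failover as it happens. This sketch relies on the heading text edited in sections 2.2.2 and 2.3; serving_node is a hypothetical helper name, and the polling loop in the comment assumes curl is installed on the client.

```shell
#!/bin/bash
# serving_node: read an nginx index page on stdin and print "master" or
# "backup", based on the <h1> text edited in sections 2.2.2 and 2.3.
serving_node() {
    sed -n -E 's/.*test keepalived (master|backup)!.*/\1/p' | head -n 1
}

# Offline demonstration with the backup's heading line.
echo '<h1>Welcome to nginx! test keepalived backup!</h1>' | serving_node

# On a client machine, poll the VIP once a second:
#   while sleep 1; do curl -s http://192.168.1.103/ | serving_node; done
```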

5.6 keepalived monitoring script

The keepalived service itself can also stop, so write a keepalived check script and add it to cron.

Do this on every server.

[root@master ~]# vim /opt/scripts/keepalived_monitor.sh

#!/bin/bash

set -x # trace commands for debugging

# Number of running keepalived processes; 0 means keepalived is down.

keepalived_status=$(ps -C keepalived --no-header | wc -l)

if [ "${keepalived_status}" -eq 0 ]; then

echo -e "$(date): keepalived is not healthy!\n" >> /etc/keepalived/keepalived.log

service keepalived start

sleep 1

if [ "$(ps -C keepalived --no-header | wc -l)" -eq 0 ]; then # keepalived restart failed

echo -e "$(date): try to restart keepalived failure!\n" >> /etc/keepalived/keepalived.log

exit 1

fi

fi

exit 0

[root@master ~]# chmod +x /opt/scripts/keepalived_monitor.sh

[root@master ~]# echo "* * * * * /opt/scripts/keepalived_monitor.sh > /dev/null 2>&1" >> /var/spool/cron/root

[root@master ~]# crontab -l

*/30 * * * * /usr/sbin/ntpdate ntp1.aliyun.com > /dev/null 2>&1;/sbin/hwclock -w

* * * * * /opt/scripts/keepalived_monitor.sh > /dev/null 2>&1
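Running the echo command above a second time would add a duplicate cron entry. One way to avoid that, sketched here under the assumption that the crontab is edited as a plain file as in this post, is to append the line only when it is missing. add_cron_line is a hypothetical helper, and the demonstration writes to a temporary file rather than /var/spool/cron/root.

```shell
#!/bin/bash
# add_cron_line FILE LINE: append LINE to FILE unless it is already there.
# Hypothetical helper; the post appends to /var/spool/cron/root directly.
add_cron_line() {
    local file=$1 line=$2
    touch "$file"
    grep -qxF -- "$line" "$file" || echo "$line" >> "$file"
}

# Demonstration against a temporary file instead of the real crontab.
cron_file=$(mktemp)
entry='* * * * * /opt/scripts/keepalived_monitor.sh > /dev/null 2>&1'
add_cron_line "$cron_file" "$entry"
add_cron_line "$cron_file" "$entry"   # second call is a no-op
cat "$cron_file"
```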

References

https://www.cnblogs.com/kevingrace/p/6138185.html

nginx official documentation

keepalived official documentation