Analyzing nginx and DNS logs with ELK

Deployment environment

192.168.122.187 | Logstash-1.5.1 elasticsearch-1.6.0 kibana-4.1.1 | Centos6.4 |

192.168.122.1 | Redis-2.8 | Centos7.1 |

192.168.122.2 | Centos6.4 | |

192.168.122.247 | Bind9 logstash-1.5.2 supervisor-2.1-9 java-1.7 | Centos6.2 |

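I won't repeat the installation steps here; see http://kibana.logstash.es/content/logstash/get_start/install.html

Points to note during installation

1. Java 1.7 is the preferred version.

2. On the server, logstash installed directly from the rpm worked, but the same rpm install did not work properly on the agent side. I did not look into why.

3. elasticsearch, kibana, and the agent-side logstash are all run under supervisor.

4. supervisor was installed with yum from EPEL.

The configurations follow.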

On 192.168.122.187:

Logstash configuration

The server-side logstash was installed from the rpm.

[root@c6test ~]# cat /etc/logstash/conf.d/central.conf

input {
  redis {
    host => "192.168.122.1"
    port => 6379
    type => "redis-input"
    data_type => "list"
    key => "logstash"
    codec => 'json'
  }
}

output {
  elasticsearch {
    host => "127.0.0.1"
  }
}

elasticsearch

/usr/local/elasticsearch-1.6.0/config/elasticsearch.yml is left at its defaults.

Kibana

/usr/local/kibana-4.1.1-linux-x64/config/kibana.yml is left at its defaults.

On 192.168.122.1

The Redis configuration was not changed either.

On 192.168.122.2

Nginx

# The only difference on the nginx side is the log configuration, which is set to emit JSON

log_format json '{"@timestamp":"$time_iso8601",'
                '"host":"$server_addr",'
                '"clientip":"$remote_addr",'
                '"size":$body_bytes_sent,'
                '"responsetime":$request_time,'
                '"upstreamtime":"$upstream_response_time",'
                '"upstreamhost":"$upstream_addr",'
                '"http_host":"$host",'
                '"url":"$uri",'
                '"xff":"$http_x_forwarded_for",'
                '"referer":"$http_referer",'
                '"agent":"$http_user_agent",'
                '"status":"$status"}';

-----------------------------

access_log /var/log/nginx/zabbix_access.log json;
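To make the effect of this log_format concrete, here is a minimal Python sketch that decodes one line the way a JSON codec would. The sample line and every value in it are invented for illustration, not taken from a real log. It shows that the unquoted variables (size, responsetime) arrive as numbers while the quoted ones (status, upstreamtime) stay strings.

```python
import json

# Hypothetical sample line in the shape produced by the log_format above;
# all values are made up for illustration.
sample_line = (
    '{"@timestamp":"2015-07-27T14:22:33+08:00",'
    '"host":"192.168.122.2",'
    '"clientip":"192.168.122.10",'
    '"size":612,'
    '"responsetime":0.005,'
    '"upstreamtime":"-",'
    '"upstreamhost":"-",'
    '"http_host":"example.com",'
    '"url":"/index.html",'
    '"xff":"-",'
    '"referer":"-",'
    '"agent":"curl/7.19.7",'
    '"status":"200"}'
)

event = json.loads(sample_line)

# Unquoted variables decode as numbers, quoted ones as strings.
for field in ("size", "responsetime", "upstreamtime", "status"):
    print(field, type(event[field]).__name__)
```

Quoting upstreamtime looks deliberate: when a request has no upstream, nginx writes a placeholder such as "-" rather than a number, and an unquoted "-" would not be valid JSON.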

Logstash

[root@zabbixproxy-005002 ~]# cat /usr/local/logstash-1.5.2/conf/shipper.conf

input {
  file {
    type => "test-nginx"
    path => ["/var/log/nginx/zabbix_access.log"]
    codec => "json"
  }
}

output {
  stdout {}
  redis {
    host => "192.168.122.1"
    port => 6379
    data_type => "list"
    key => "logstash"
  }
}
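This shipper and central.conf meet at the Redis list named by key => "logstash": the shipper appends events to it and the central logstash takes them off the other end. The sketch below models that hand-off in plain Python. It is an in-memory stand-in, not a Redis client, and the function names ship and index_next are hypothetical.

```python
import json
from collections import deque

# In-memory stand-in for the Redis list "logstash".
# Illustration only: nothing here talks to a real Redis server.
queue = deque()

def ship(event):
    """Shipper side (redis output, data_type list): append a JSON event to the tail."""
    queue.append(json.dumps(event))

def index_next():
    """Central side (redis input, codec json): take the oldest event off the head."""
    if not queue:
        return None
    return json.loads(queue.popleft())

# Hypothetical events from the two kinds of shipper in this setup.
ship({"type": "test-nginx", "status": "200"})
ship({"type": "dnslog", "message": "sample dns line"})

first = index_next()
second = index_next()
print(first["type"], second["type"])
```

Because both shippers write to the same key, the central logstash sees a single first-in, first-out stream and can tell the sources apart only by the type field.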

Supervisor

[root@zabbixproxy-005002 ~]# cat /etc/supervisord.conf |grep -v \;

[supervisord]

[program:logstash]

command=/usr/local/logstash-1.5.2/bin/logstash agent --verbose --config /usr/local/logstash-1.5.2/conf/shipper.conf --log /usr/local/logstash-1.5.2/logs/stdout.log

process_name=%(program_name)s

numprocs=1

autostart=true

autorestart=true

startretries=5

exitcodes=0

stopsignal=KILL

stopwaitsecs=5

redirect_stderr=true

[supervisorctl]

On 192.168.122.247

The default Bind configuration is sufficient.

Logstash

[root@sys-247245 ~]# cat /usr/local/logstash/conf/shipper.conf

input {
  file {
    type => "dnslog"
    path => ["/home/dnslog/*.log"]
  }
}

filter {
  # The DNS log cannot be written as JSON and I don't know grok,
  # so mutate is used here to split the message.
  mutate {
    gsub => ["message","#"," "]
    split => ["message"," "]
  }
  mutate {
    add_field => {
      "client" => "%{[message][5]}"
      "domain_name" => "%{[message][10]}"
      "server" => "%{[message][14]}"
    }
  }
}

output {
  stdout {}
  redis {
    host => "192.168.122.1"
    port => 6379
    data_type => "list"
    key => "logstash"
  }
}
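The filter's index arithmetic is easier to check outside logstash. Here is a minimal Python sketch of the same steps: replace "#" with a space, split on spaces, then pick elements 5, 10 and 14. The sample line is an assumption about what a BIND query log with views looks like. It is not taken from the original logs, and a different log layout would shift the indexes.

```python
def split_dns_message(message):
    """Mimic the two mutate blocks: gsub '#' -> ' ', split on ' ', pick by index."""
    parts = message.replace("#", " ").split(" ")
    return {
        "client": parts[5],
        "domain_name": parts[10],
        "server": parts[14],
    }

# Hypothetical BIND query log line (assumed format, invented values).
sample = (
    "27-Jul-2015 14:22:33.123 queries: info: client 192.168.122.10#53211: "
    "view internal: query: example.com IN A + (192.168.122.247)"
)

fields = split_dns_message(sample)
for name, value in fields.items():
    print(name, value)
```

With this assumed layout the server field keeps its surrounding parentheses, because the split only cuts on spaces. Positional indexing is also brittle: a line with a different number of tokens will put the wrong value in a field.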

Supervisor

[root@sys-247245 ~]# cat /etc/supervisord.conf |grep -v \;|grep -v ^$

[supervisord]

[supervisorctl]

[program:logstash]

command=/usr/local/logstash/bin/logstash agent --verbose --config /usr/local/logstash/conf/shipper.conf --log /usr/local/logstash/logs/stdout.log

process_name=%(program_name)s

numprocs=1

autostart=true

autorestart=true

startretries=5

exitcodes=0

stopsignal=KILL

stopwaitsecs=5

redirect_stderr=true

Configuring Kibana

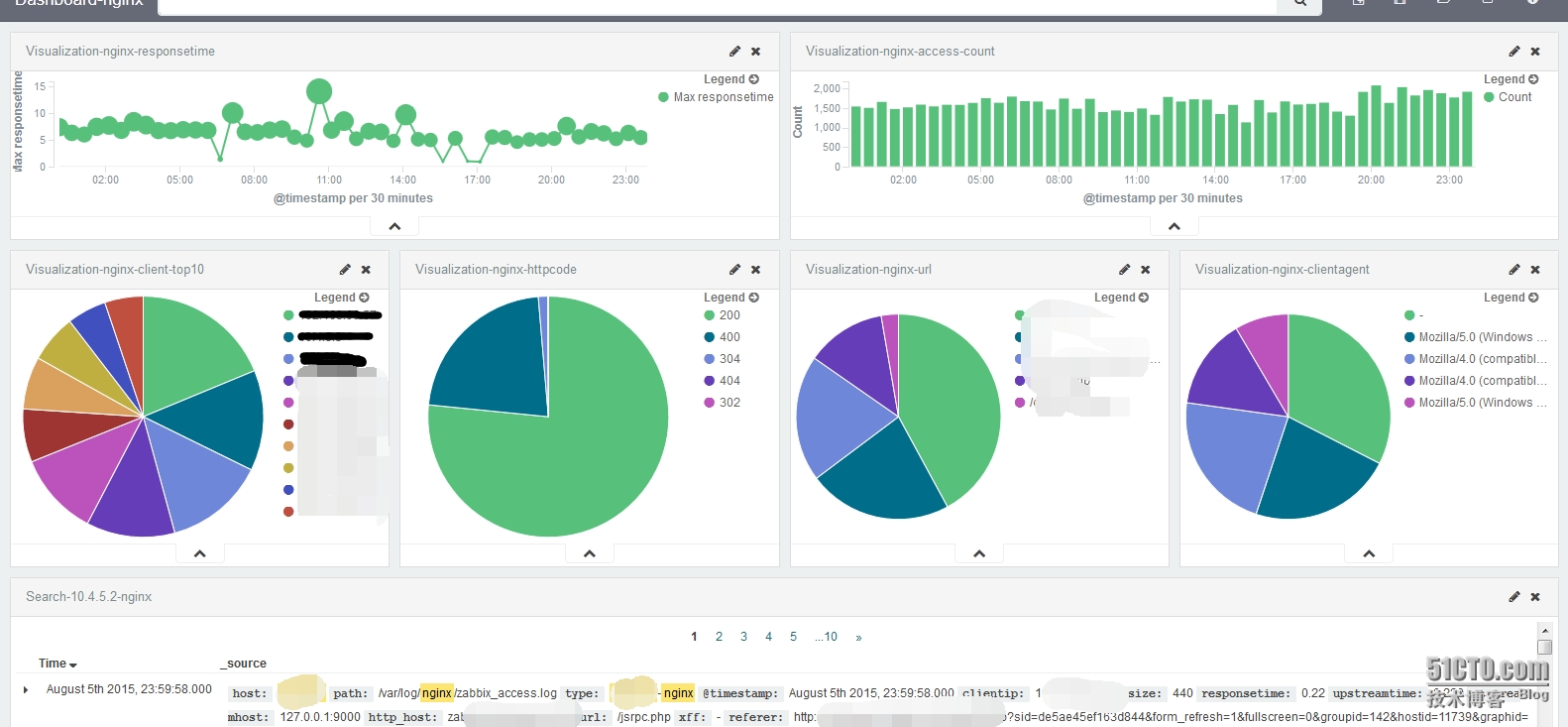

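Nginx

1. In Discover, search for the nginx logs, then save the search.

2. In Visualize, build each chart individually, then save it.

3. In Dashboard, combine the saved nginx visualizations.

DNS

1. In Discover, search for the DNS logs, then save the search.

2. In Visualize, build each chart individually, then save it.

3. In Dashboard, combine the saved DNS visualizations.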

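Problems encountered

Custom fields are visible in Discover but do not appear when building a visualization.

This happens because the index pattern's field list has not been refreshed. The default index pattern is logstash-*, which is listed under "Settings" > Indices. Click logstash-* to open it, then click the refresh button.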
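As a small illustration of why one pattern covers everything: central.conf sets no index option, so the elasticsearch output should fall back to its default of one index per day, named logstash-YYYY.MM.dd from the event timestamp in UTC. The sketch below builds such a name and checks it against the logstash-* pattern. The helper name daily_index and the timestamp are hypothetical.

```python
from datetime import datetime, timezone
from fnmatch import fnmatch

def daily_index(ts):
    """Build the assumed default daily index name from an event timestamp (UTC)."""
    return "logstash-" + ts.astimezone(timezone.utc).strftime("%Y.%m.%d")

# Hypothetical event timestamp.
ts = datetime(2015, 7, 27, 14, 22, 33, tzinfo=timezone.utc)
name = daily_index(ts)

# Every daily index matches the single Kibana index pattern.
print(name, fnmatch(name, "logstash-*"))
```

Since both the nginx and DNS events land in indices matching this one pattern, refreshing its field list once should pick up the custom fields from both sources.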