Kubernetes 1.13.5 Cluster: Manual Installation from Binaries

Tags: k8s

2019-06-13

Excerpted from https://k.i4t.com

More Kubernetes content is available at https://i4t.com

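1. Introduction to K8s

This was already covered in the 1.11 installation guide. For a quick look at how K8s works, see the K8s 1.11 installation article; for a deeper treatment, see https://k.i4t.com

2. Preparing the K8s Environment

Versions used in this installation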

Kubernetes v1.13.5 (v1.13.4 has a kubectl cp bug)

CNI v0.7.5

Etcd v3.2.24

Calico v3.4

Docker CE 18.06.03

kernel 4.18.9-1 (kernel 5.x is not recommended)

CentOS Linux release 7.6.1810 (Core)

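CentOS 7.4 to 7.6 is the best choice for K8s hosts

Docker note

On CentOS releases earlier than 7.4, Docker cannot use overlay2 as its default storage driver.

Disable firewalld, NetworkManager, and SELinux

systemctl disable --now firewalld NetworkManager

setenforce 0

sed -ri '/^[^#]*SELINUX=/s#=.+$#=disabled#' /etc/selinux/config

Kubernetes v1.8+ requires swap to be disabled. If you leave swap on, you must set the kubelet flag --fail-swap-on=false so that it ignores swap. Run the following on every machine to turn swap off and comment out the swap lines in /etc/fstab

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

Configure the yum repositories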

yum install -y wget

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

yum makecache

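yum install wget vim lsof net-tools lrzsz -y

The kernels shipped by distribution package managers are currently quite old. After installing Docker, both CentOS and Ubuntu can hit the following bug, which is still present in kernel 4.15

kernel:unregister_netdevice: waiting for lo to become free. Usage count = 1

So upgrading the kernel first is recommended

perl is a dependency of the kernel packages; install it if it is missing

[ ! -f /usr/bin/perl ] && yum install perl -y

Upgrading the kernel requires the ELRepo yum repository. First import the ELRepo key and install the ELRepo release package

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

List the available kernels

(Without the ELRepo repository installed, the available kernels cannot be listed)

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available --showduplicates

In the ELRepo repository, mainline (kernel-ml) is the newest kernel. Install the kernel as follows

Other archived versions can be downloaded from the link below

http://mirror.rc.usf.edu/compute_lock/elrepo/kernel/el7/x86_64/RPMS/

Download the rpm packages and install a kernel version of your choice with yum (for stability I use kernel 4.18)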

export Kernel_Version=4.18.9-1

wget http://mirror.rc.usf.edu/compute_lock/elrepo/kernel/el7/x86_64/RPMS/kernel-ml{,-devel}-${Kernel_Version}.el7.elrepo.x86_64.rpm

yum localinstall -y kernel-ml*

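# If you downloaded the kernel rpm packages by hand, just run yum install -y kernel-ml* directly

- If you do not want the latest kernel described further below, you can upgrade to the distribution's conservative kernel at this point by removing the --exclude option from the update command

yum install epel-release -y

yum install wget git jq psmisc socat -y

yum update -y --exclude=kernel*

Reboot to load the conservative kernel

reboot

In my case I simply ran yum update -y

To install the latest kernel instead, use the following

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available --showduplicates | grep -Po '^kernel-ml.x86_64\s+\K\S+(?=.el7)'

yum --disablerepo="*" --enablerepo=elrepo-kernel install -y kernel-ml{,-devel}

Change the kernel boot order. A newly installed kernel is inserted at the front of the boot menu as entry 0, so the previous default becomes entry 1. (Skip this step if you prefer to pick the kernel by hand at every boot.)

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

Use the command below to confirm that the default kernel points at the one just installed

grubby --default-kernel

# The output should show the upgraded kernel

Docker's official kernel check script suggests

(RHEL7/CentOS7: User namespaces disabled; add 'user_namespace.enable=1' to boot command line)

Enable it with the following command

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

Reboot to load the new kernel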

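reboot

Install IPVS on all machines (IPVS performs far better than iptables at scale and is easier to troubleshoot)

Why IPVS? Starting with K8s 1.8, kube-proxy gained an IPVS mode. Like iptables, IPVS mode is built on Netfilter, but it uses hash tables, so once the number of Services reaches a certain scale the speed advantage of hash lookups shows and Service performance improves.

- IPVS depends on the nf_conntrack_ipv4 kernel module, which was renamed to nf_conntrack in kernel 4.19 and later. kube-proxy before 1.13.1 has no check and always uses nf_conntrack_ipv4. A check appears to have been added after 1.13.1; in my tests it loads nf_conntrack and IPVS works normally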
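The kernel-version rule in the note above can be written as a small shell helper. This is only a sketch and not part of the original procedure; the function name conntrack_module is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pick the conntrack module name for a kernel version.
# Kernels 4.19 and later use nf_conntrack; older kernels use nf_conntrack_ipv4.
conntrack_module() {
  local version="${1:-$(uname -r)}"
  local major minor
  major="${version%%.*}"        # text before the first dot
  minor="${version#*.}"         # drop the "major." prefix
  minor="${minor%%[!0-9]*}"     # keep only the leading digits
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}
```

Called without arguments it inspects the running kernel, so the result could be substituted for the hard-coded module name when building the module list.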

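Install the dependency packages on every machine:

yum install ipvsadm ipset sysstat conntrack libseccomp -y

On all machines, choose the kernel modules to load at boot. The modules below are the ones IPVS mode needs, and they are set to load automatically at boot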

:> /etc/modules-load.d/ipvs.conf

module=(

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

br_netfilter

)

for kernel_module in ${module[@]};do

/sbin/modinfo -F filename $kernel_module |& grep -qv ERROR && echo $kernel_module >> /etc/modules-load.d/ipvs.conf || :

done

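systemctl enable --now systemd-modules-load.service

If the systemctl enable command above reports an error, run

systemctl status -l systemd-modules-load.service

to see which kernel module failed to load, comment it out in /etc/modules-load.d/ipvs.conf, and try enable again

All machines need the kernel parameters in /etc/sysctl.d/k8s.conf.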

cat <<EOF > /etc/sysctl.d/k8s.conf

# https://github.com/moby/moby/issues/31208

# ipvsadm -l --timeout

# Fixes long-lived connection timeouts in IPVS mode; any value below 900 works

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_intvl = 30

net.ipv4.tcp_keepalive_probes = 10

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

net.ipv4.neigh.default.gc_stale_time = 120

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

net.ipv4.ip_forward = 1

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.tcp_synack_retries = 2

# Pass bridged traffic through iptables, ip6tables, and arptables (a value of 1 enables this)

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-arptables = 1

net.netfilter.nf_conntrack_max = 2310720

fs.inotify.max_user_watches=89100

fs.may_detach_mounts = 1

fs.file-max = 52706963

fs.nr_open = 52706963

vm.swappiness = 0

vm.overcommit_memory=1

vm.panic_on_oom=0

EOF

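sysctl --system

Check whether the kernel and modules are suitable for running Docker (Linux only)

curl https://raw.githubusercontent.com/docker/docker/master/contrib/check-config.sh > check-config.sh

bash ./check-config.sh

Docker is installed here with the official install script. You can list the available versions with yum list --showduplicates 'docker-ce' and pick one that your K8s version supports; I use 18.06.03

export VERSION=18.06

curl -fsSL "https://get.docker.com/" | bash -s -- --mirror Aliyun

One note: yum list --showduplicates 'docker-ce' only shows the other Docker versions after the official script has been run once, because the script is what sets up the repository

The page at https://get.docker.com is itself a shell script, and it configures the yum repository

On all machines, configure a registry mirror and set Docker's cgroup driver to systemd. Using systemd is the official recommendation; see https://kubernetes.io/docs/setup/cri/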

mkdir -p /etc/docker/

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://hjvrgh7a.mirror.aliyuncs.com"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

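# The registry mirror above is optional, but configuring one is recommended

To use our Harbor registry, also add the following line

"insecure-registries": ["harbor.i4t.com"],

By default Docker requires HTTPS for registries; with the setting above, HTTPS is not needed for that registry

Enable Docker at boot. On CentOS, Docker command completion has to be set up manually after installation

yum install -y epel-release bash-completion && cp /usr/share/bash-completion/completions/docker /etc/bash_completion.d/

systemctl enable --now docker

Make sure NTP is configured on every machine. Without it you will see all sorts of odd problems, not only apiserver communication failures under HA.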

yum -y install ntp

systemctl enable ntpd

systemctl start ntpd

ntpdate -u cn.pool.ntp.org

hwclock --systohc

timedatectl set-timezone Asia/Shanghai

3. K8s Cluster Installation

| IP | Hostname | Mem | VIP | Services |
|---|---|---|---|---|
| 10.4.82.138 | k8s-master1 | 4G | 10.4.82.141 | keepalived, haproxy |
| 10.4.82.139 | k8s-master2 | 4G | 10.4.82.141 | keepalived, haproxy |
| 10.4.82.140 | k8s-node1 | 4G | | |
| 10.4.82.142 | k8s-node2 | 4G | | |

The VIP for this setup is 10.4.82.141. keepalived and haproxy on the master nodes decide which master holds the VIP, which keeps the API endpoint highly available

- All operations are performed as root

- All packages and certificates are prepared on host 10.4.82.138
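Since the same addresses and hostnames are typed repeatedly in later steps, the host table above could be kept in one place. The following is a minimal sketch under that assumption; the function names cluster_hosts and host_names are hypothetical and do not appear in the original article.

```shell
#!/usr/bin/env bash
# Hypothetical inventory: "IP hostname" pairs copied from the host table.
cluster_hosts() {
cat <<'EOF'
10.4.82.138 k8s-master1
10.4.82.139 k8s-master2
10.4.82.140 k8s-node1
10.4.82.142 k8s-node2
EOF
}

# Print only the hostnames, one per line, for use in "for NODE in ..." loops.
host_names() {
  cluster_hosts | while read -r _ip name; do
    echo "$name"
  done
}
```

The output of cluster_hosts is already in /etc/hosts format, and a loop such as for NODE in $(host_names) would visit every machine.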

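3.1 Environment Variables, Passwordless SSH, and Hostnames

- Passwordless SSH

- NTP time synchronization

- Hostname changes

- Environment variable generation

- Host resolution

Note that all key distribution and later file copies are done from master1, because master1 is the host with passwordless SSH to the others

Every machine in the K8s cluster needs these /etc/hosts entries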

cat >> /etc/hosts << EOF

10.4.82.138 k8s-master1

10.4.82.139 k8s-master2

10.4.82.140 k8s-node1

10.4.82.142 k8s-node2

EOF

Batch passwordless SSH setup

# Make sure the hostnames resolve through /etc/hosts before setting up passwordless SSH

ssh-keygen -t rsa -P "" -f /root/.ssh/id_rsa

for i in 10.4.82.138 10.4.82.139 10.4.82.140 10.4.82.142 k8s-master1 k8s-master2 k8s-node1 k8s-node2;do

expect -c "

spawn ssh-copy-id -i /root/.ssh/id_rsa.pub root@$i

expect {

\"*yes/no*\" {send \"yes\r\"; exp_continue}

\"*password*\" {send \"123456\r\"; exp_continue}

\"*Password*\" {send \"123456\r\";}

} "

done

Batch hostname changes

ssh 10.4.82.138 "hostnamectl set-hostname k8s-master1" &&

ssh 10.4.82.139 "hostnamectl set-hostname k8s-master2" &&

ssh 10.4.82.140 "hostnamectl set-hostname k8s-node1" &&

ssh 10.4.82.142 "hostnamectl set-hostname k8s-node2"

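When this is done, start a new bash session to pick up the new hostname

3.2 Download Kubernetes

There are two ways to get the K8s binaries: pull an image and copy the package out of it, or download the package directly from the official site

- 1. Copy the package out of an image (no direct access to the official download site required)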

docker run --rm -d --name abcdocker-test registry.cn-beijing.aliyuncs.com/abcdocker/k8s:v1.13.5 sleep 10

docker cp abcdocker-test:/kubernetes-server-linux-amd64.tar.gz .

tar -zxvf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

- 2. Download the official package directly (requires that dl.k8s.io is reachable from your network)

wget https://dl.k8s.io/v1.13.5/kubernetes-server-linux-amd64.tar.gz

tar -zxvf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

# You can also download it in a browser and upload it to the server

Distribute the master component binaries to the other masters

for NODE in "k8s-master2"; do

scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/

done

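k8s-master2 (10.4.82.139) is our second master node; if there are more masters, add them to the list

Distribute the Kubernetes binaries for the worker nodes; we only distribute to node1 and node2

for NODE in k8s-node1 k8s-node2; do

echo "--- $NODE ---"

scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/

done

On k8s-master1, download the Kubernetes CNI binaries and distribute them

Choose one of the following two methods

1. Official download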

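mkdir -p /opt/cni/bin

# CNI_URL and CNI_VERSION are set here to match the download address noted below

export CNI_URL="https://github.com/containernetworking/plugins/releases/download"

export CNI_VERSION="v0.7.5"

wget "${CNI_URL}/${CNI_VERSION}/cni-plugins-amd64-${CNI_VERSION}.tgz"

tar -zxf cni-plugins-amd64-${CNI_VERSION}.tgz -C /opt/cni/bin

# Distribute the CNI files (all hosts)

for NODE in k8s-master1 k8s-master2 k8s-node1 k8s-node2; do

echo "--- $NODE ---"

ssh $NODE 'mkdir -p /opt/cni/bin'

scp /opt/cni/bin/* $NODE:/opt/cni/bin/

done

## The resulting download address is https://github.com/containernetworking/plugins/releases/download/v0.7.5/cni-plugins-amd64-v0.7.5.tgz

2. Download address provided by abcdocker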

mkdir -p /opt/cni/bin

wget http://down.i4t.com/cni-plugins-amd64-v0.7.5.tgz

tar xf cni-plugins-amd64-v0.7.5.tgz -C /opt/cni/bin

# Distribute the CNI files (all hosts)

for NODE in k8s-master1 k8s-master2 k8s-node1 k8s-node2; do

echo "--- $NODE ---"

ssh $NODE 'mkdir -p /opt/cni/bin'

scp /opt/cni/bin/* $NODE:/opt/cni/bin/

done

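# IPs or hostnames can be used here

3.3 Create Cluster Certificates

Certificates are needed for etcd, Kubernetes, and so on. Each cluster has a root certificate authority (Root CA) that is used to authenticate the API server and kubelet certificates. All certificates in this guide are created with openssl

Configure the IP information for openssl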

mkdir -p /etc/kubernetes/pki/etcd

cat > /etc/kubernetes/pki/openssl.cnf <<EOF

[ req ]

default_bits = 2048

default_md = sha256

distinguished_name = req_distinguished_name

[req_distinguished_name]

[ v3_ca ]

basicConstraints = critical, CA:TRUE

keyUsage = critical, digitalSignature, keyEncipherment, keyCertSign

[ v3_req_server ]

basicConstraints = CA:FALSE

keyUsage = critical, digitalSignature, keyEncipherment

extendedKeyUsage = serverAuth

[ v3_req_client ]

basicConstraints = CA:FALSE

keyUsage = critical, digitalSignature, keyEncipherment

extendedKeyUsage = clientAuth

[ v3_req_apiserver ]

basicConstraints = CA:FALSE

keyUsage = critical, digitalSignature, keyEncipherment

extendedKeyUsage = serverAuth

subjectAltName = @alt_names_cluster

[ v3_req_etcd ]

basicConstraints = CA:FALSE

keyUsage = critical, digitalSignature, keyEncipherment

extendedKeyUsage = serverAuth, clientAuth

subjectAltName = @alt_names_etcd

[ alt_names_cluster ]

DNS.1 = kubernetes

DNS.2 = kubernetes.default

DNS.3 = kubernetes.default.svc

DNS.4 = kubernetes.default.svc.cluster.local

DNS.5 = localhost

IP.1 = 10.96.0.1

IP.2 = 127.0.0.1

IP.3 = 10.4.82.141

IP.4 = 10.4.82.138

IP.5 = 10.4.82.139

IP.6 = 10.4.82.140

IP.7 = 10.4.82.142

[ alt_names_etcd ]

DNS.1 = localhost

IP.1 = 127.0.0.1

IP.2 = 10.4.82.138

IP.3 = 10.4.82.139

EOF

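## Parameter notes

The IP.N entries under alt_names_cluster are host IPs, and every host in the cluster must be listed. IP.1 (10.96.0.1) is the first address of the Service range and IP.2 is 127.0.0.1, so the host addresses start at IP.3

IP.3 is the VIP

IP.4 is master1

IP.5 is master2

IP.6 is node1

IP.7 is node2

alt_names_etcd lists the etcd hosts

IP.2 is master1

IP.3 is master2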
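When the host list changes, the numbered IP.N entries have to be renumbered by hand. As a rough sketch, a helper like the one below could print an alt_names section from a list of addresses. The function name alt_names_section is hypothetical, and it only emits the single DNS.1 = localhost entry used by the etcd section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an OpenSSL alt_names section.
# $1 = section name, remaining arguments = IP addresses in numbering order.
alt_names_section() {
  local section="$1"; shift
  local i=1 ip
  echo "[ ${section} ]"
  echo "DNS.1 = localhost"
  for ip in "$@"; do
    echo "IP.${i} = ${ip}"
    i=$((i + 1))
  done
}
```

For example, alt_names_section alt_names_etcd 127.0.0.1 10.4.82.138 10.4.82.139 prints the same etcd section that appears in openssl.cnf.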

With the edited openssl.cnf in place, switch to the certificate directory

cd /etc/kubernetes/pki

Generate the certificates

kubernetes-ca

[info] Prepare the Kubernetes CA certificate; the issuer name is kubernetes-ca

openssl genrsa -out ca.key 2048

openssl req -x509 -new -nodes -key ca.key -config openssl.cnf -subj "/CN=kubernetes-ca" -extensions v3_ca -out ca.crt -days 10000

etcd-ca

[info] CA for the certificates used between etcd clients and servers

openssl genrsa -out etcd/ca.key 2048

openssl req -x509 -new -nodes -key etcd/ca.key -config openssl.cnf -subj "/CN=etcd-ca" -extensions v3_ca -out etcd/ca.crt -days 10000

front-proxy-ca

openssl genrsa -out front-proxy-ca.key 2048

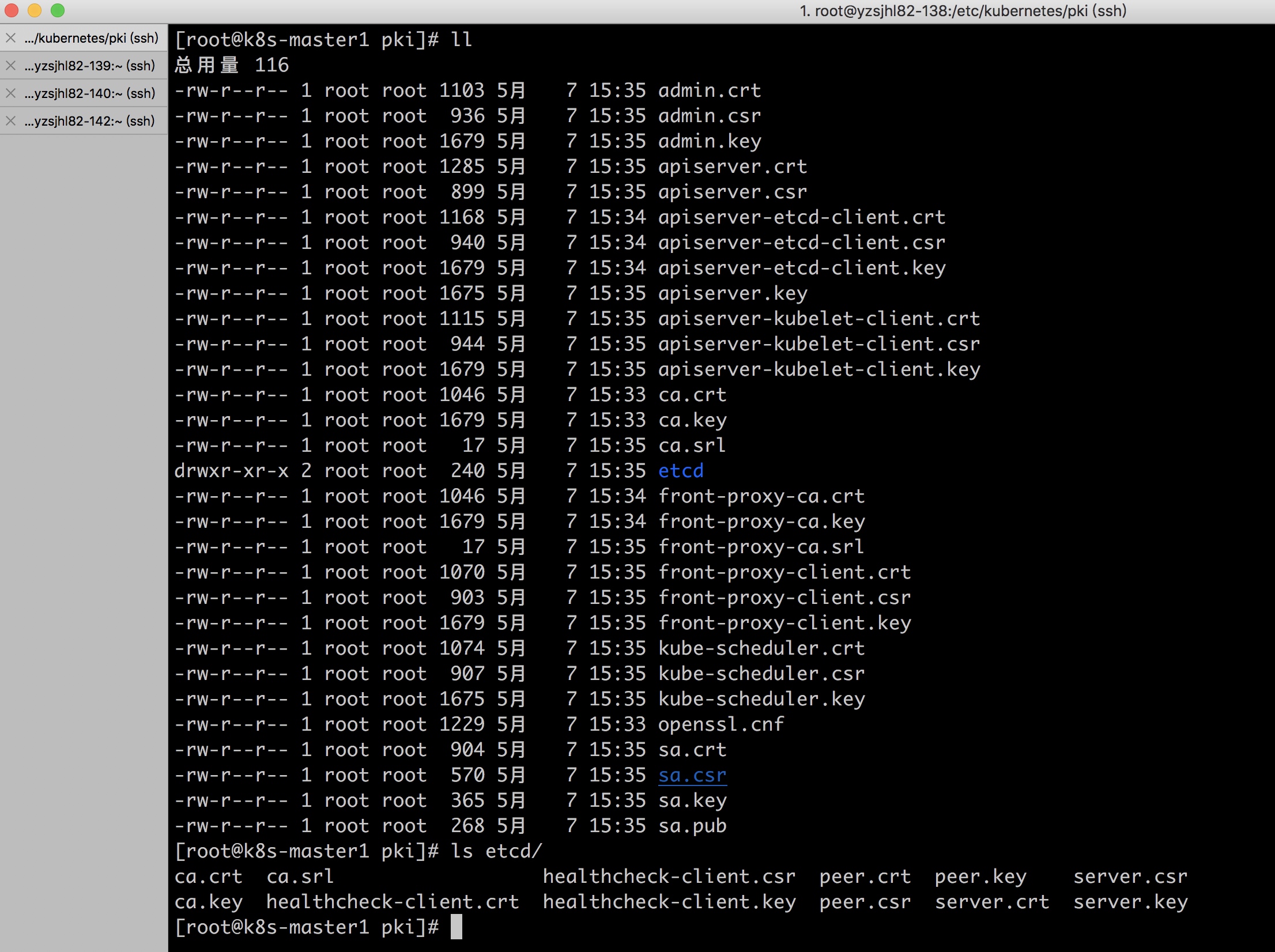

openssl req -x509 -new -nodes -key front-proxy-ca.key -config openssl.cnf -subj "/CN=kubernetes-ca" -extensions v3_ca -out front-proxy-ca.crt -days 10000

Current contents of the certificate directory

[root@k8s-master1 pki]# ll

total 20

-rw-r--r-- 1 root root 1046 May 7 15:33 ca.crt

-rw-r--r-- 1 root root 1679 May 7 15:33 ca.key

drwxr-xr-x 2 root root 34 May 7 15:34 etcd

-rw-r--r-- 1 root root 1046 May 7 15:34 front-proxy-ca.crt

-rw-r--r-- 1 root root 1679 May 7 15:34 front-proxy-ca.key

-rw-r--r-- 1 root root 1229 May 7 15:33 openssl.cnf

[root@k8s-master1 pki]# tree

.

├── ca.crt

├── ca.key

├── etcd

│ ├── ca.crt

│ └── ca.key

├── front-proxy-ca.crt

├── front-proxy-ca.key

└── openssl.cnf

Generate all the remaining certificates

apiserver-etcd-client

openssl genrsa -out apiserver-etcd-client.key 2048

openssl req -new -key apiserver-etcd-client.key -subj "/CN=apiserver-etcd-client/O=system:masters" -out apiserver-etcd-client.csr

openssl x509 -in apiserver-etcd-client.csr -req -CA etcd/ca.crt -CAkey etcd/ca.key -CAcreateserial -extensions v3_req_etcd -extfile openssl.cnf -out apiserver-etcd-client.crt -days 10000

kube-etcd

openssl genrsa -out etcd/server.key 2048

openssl req -new -key etcd/server.key -subj "/CN=etcd-server" -out etcd/server.csr

openssl x509 -in etcd/server.csr -req -CA etcd/ca.crt -CAkey etcd/ca.key -CAcreateserial -extensions v3_req_etcd -extfile openssl.cnf -out etcd/server.crt -days 10000

kube-etcd-peer

openssl genrsa -out etcd/peer.key 2048

openssl req -new -key etcd/peer.key -subj "/CN=etcd-peer" -out etcd/peer.csr

openssl x509 -in etcd/peer.csr -req -CA etcd/ca.crt -CAkey etcd/ca.key -CAcreateserial -extensions v3_req_etcd -extfile openssl.cnf -out etcd/peer.crt -days 10000

kube-etcd-healthcheck-client

openssl genrsa -out etcd/healthcheck-client.key 2048

openssl req -new -key etcd/healthcheck-client.key -subj "/CN=etcd-client" -out etcd/healthcheck-client.csr

openssl x509 -in etcd/healthcheck-client.csr -req -CA etcd/ca.crt -CAkey etcd/ca.key -CAcreateserial -extensions v3_req_etcd -extfile openssl.cnf -out etcd/healthcheck-client.crt -days 10000

kube-apiserver

openssl genrsa -out apiserver.key 2048

openssl req -new -key apiserver.key -subj "/CN=kube-apiserver" -config openssl.cnf -out apiserver.csr

openssl x509 -req -in apiserver.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 10000 -extensions v3_req_apiserver -extfile openssl.cnf -out apiserver.crt

apiserver-kubelet-client

openssl genrsa -out apiserver-kubelet-client.key 2048

openssl req -new -key apiserver-kubelet-client.key -subj "/CN=apiserver-kubelet-client/O=system:masters" -out apiserver-kubelet-client.csr

openssl x509 -req -in apiserver-kubelet-client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 10000 -extensions v3_req_client -extfile openssl.cnf -out apiserver-kubelet-client.crt

front-proxy-client

openssl genrsa -out front-proxy-client.key 2048

openssl req -new -key front-proxy-client.key -subj "/CN=front-proxy-client" -out front-proxy-client.csr

openssl x509 -req -in front-proxy-client.csr -CA front-proxy-ca.crt -CAkey front-proxy-ca.key -CAcreateserial -days 10000 -extensions v3_req_client -extfile openssl.cnf -out front-proxy-client.crt

kube-scheduler

openssl genrsa -out kube-scheduler.key 2048

openssl req -new -key kube-scheduler.key -subj "/CN=system:kube-scheduler" -out kube-scheduler.csr

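openssl x509 -req -in kube-scheduler.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 10000 -extensions v3_req_client -extfile openssl.cnf -out kube-scheduler.crt

sa.pub sa.key

# sa.key is an EC (secp521r1) key. The original text first ran "openssl genrsa -out sa.key 2048", but that RSA key was immediately overwritten by the command below, so it is omitted here

openssl ecparam -name secp521r1 -genkey -noout -out sa.key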

openssl ec -in sa.key -outform PEM -pubout -out sa.pub

openssl req -new -sha256 -key sa.key -subj "/CN=system:kube-controller-manager" -out sa.csr

openssl x509 -req -in sa.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 10000 -extensions v3_req_client -extfile openssl.cnf -out sa.crt

admin

openssl genrsa -out admin.key 2048

openssl req -new -key admin.key -subj "/CN=kubernetes-admin/O=system:masters" -out admin.csr

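openssl x509 -req -in admin.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 10000 -extensions v3_req_client -extfile openssl.cnf -out admin.crt

Clean up the csr and srl files. (As long as the key is unchanged, a regenerated csr is the same every time, so the csr files can be deleted. If you plan to reissue certificates from the CA later to add IPs, you can keep them instead.)

find . -name "*.csr" -o -name "*.srl"|xargs rm -f

The certificate layout is as follows (this listing was captured before the cleanup, so the .csr and .srl files still appear)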

[root@k8s-master1 pki]# tree

.

├── admin.crt

├── admin.csr

├── admin.key

├── apiserver.crt

├── apiserver.csr

├── apiserver-etcd-client.crt

├── apiserver-etcd-client.csr

├── apiserver-etcd-client.key

├── apiserver.key

├── apiserver-kubelet-client.crt

├── apiserver-kubelet-client.csr

├── apiserver-kubelet-client.key

├── ca.crt

├── ca.key

├── ca.srl

├── etcd

│ ├── ca.crt

│ ├── ca.key

│ ├── ca.srl

│ ├── healthcheck-client.crt

│ ├── healthcheck-client.csr

│ ├── healthcheck-client.key

│ ├── peer.crt

│ ├── peer.csr

│ ├── peer.key

│ ├── server.crt

│ ├── server.csr

│ └── server.key

├── front-proxy-ca.crt

├── front-proxy-ca.key

├── front-proxy-ca.srl

├── front-proxy-client.crt

├── front-proxy-client.csr

├── front-proxy-client.key

├── kube-scheduler.crt

├── kube-scheduler.csr

├── kube-scheduler.key

├── openssl.cnf

├── sa.crt

├── sa.csr

├── sa.key

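└── sa.pub

Use the certificates to generate each component's kubeconfig

The kubectl parameters mean the following

- --certificate-authority: the root certificate used for verification;

- --client-certificate, --client-key: the component's generated certificate and private key, used when connecting to kube-apiserver

- --embed-certs=true: embed the contents of the CA certificate and the component certificate in the generated kubeconfig file (without it, only the certificate file paths are written)

- ${KUBE_APISERVER}: here the apiserver address is the haproxy IP plus port 8443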
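The same four kubectl config steps are repeated for every component with only the user, certificate, and file name changing. As a sketch of how the parameters fit together, they could be wrapped in one function. The names make_kubeconfig, KUBECTL, PKI_DIR, and CONF_DIR are assumptions made for this example, not part of the original article; only the kubectl config subcommands and flags are the ones used in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the four kubectl config steps.
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the commands.
KUBECTL="${KUBECTL:-kubectl}"
PKI_DIR="${PKI_DIR:-/etc/kubernetes/pki}"
CONF_DIR="${CONF_DIR:-/etc/kubernetes}"

# make_kubeconfig <user> <cert-basename> <kubeconfig-file> <apiserver-url> [cluster-name]
make_kubeconfig() {
  local user="$1" cert="$2" config="${CONF_DIR}/$3" server="$4"
  local cluster="${5:-kubernetes}"
  # 1. cluster entry: CA certificate and API server address
  "$KUBECTL" config set-cluster "$cluster" \
    --certificate-authority="${PKI_DIR}/ca.crt" \
    --embed-certs=true \
    --server="$server" \
    --kubeconfig="$config" &&
  # 2. user entry: client certificate and key
  "$KUBECTL" config set-credentials "$user" \
    --client-certificate="${PKI_DIR}/${cert}.crt" \
    --client-key="${PKI_DIR}/${cert}.key" \
    --embed-certs=true \
    --kubeconfig="$config" &&
  # 3. context tying the user to the cluster
  "$KUBECTL" config set-context "${user}@${cluster}" \
    --cluster="$cluster" \
    --user="$user" \
    --kubeconfig="$config" &&
  # 4. make that context the default
  "$KUBECTL" config use-context "${user}@${cluster}" --kubeconfig="$config"
}
```

Running KUBECTL=echo make_kubeconfig kubernetes-admin admin admin.kubeconfig https://10.4.82.141:8443 prints the four commands without touching any file, which is a convenient way to check the arguments first.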

Define the apiserver variable used by the commands below

export KUBE_APISERVER=https://10.4.82.141:8443

# The IP here is our VIP

kube-controller-manager

CLUSTER_NAME="kubernetes"

KUBE_USER="system:kube-controller-manager"

KUBE_CERT="sa"

KUBE_CONFIG="controller-manager.kubeconfig"

# Set the cluster parameters
kubectl config set-cluster ${CLUSTER_NAME} \
--certificate-authority=/etc/kubernetes/pki/ca.crt \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Set the client credentials
kubectl config set-credentials ${KUBE_USER} \
--client-certificate=/etc/kubernetes/pki/${KUBE_CERT}.crt \
--client-key=/etc/kubernetes/pki/${KUBE_CERT}.key \
--embed-certs=true \
--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Set the context parameters
kubectl config set-context ${KUBE_USER}@${CLUSTER_NAME} \
--cluster=${CLUSTER_NAME} \
--user=${KUBE_USER} \
--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Select the context to use
kubectl config use-context ${KUBE_USER}@${CLUSTER_NAME} --kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# View the generated configuration file
kubectl config view --kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

kube-scheduler

CLUSTER_NAME="kubernetes"

KUBE_USER="system:kube-scheduler"

KUBE_CERT="kube-scheduler"

KUBE_CONFIG="scheduler.kubeconfig"

# Set the cluster parameters
kubectl config set-cluster ${CLUSTER_NAME} \
--certificate-authority=/etc/kubernetes/pki/ca.crt \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Set the client credentials
kubectl config set-credentials ${KUBE_USER} \
--client-certificate=/etc/kubernetes/pki/${KUBE_CERT}.crt \
--client-key=/etc/kubernetes/pki/${KUBE_CERT}.key \
--embed-certs=true \
--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Set the context parameters
kubectl config set-context ${KUBE_USER}@${CLUSTER_NAME} \
--cluster=${CLUSTER_NAME} \
--user=${KUBE_USER} \
--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Select the context to use
kubectl config use-context ${KUBE_USER}@${CLUSTER_NAME} --kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# View the generated configuration file
kubectl config view --kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

admin (kubectl)

CLUSTER_NAME="kubernetes"

KUBE_USER="kubernetes-admin"

KUBE_CERT="admin"

KUBE_CONFIG="admin.kubeconfig"

# Set the cluster parameters
kubectl config set-cluster ${CLUSTER_NAME} \
--certificate-authority=/etc/kubernetes/pki/ca.crt \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Set the client credentials
kubectl config set-credentials ${KUBE_USER} \
--client-certificate=/etc/kubernetes/pki/${KUBE_CERT}.crt \
--client-key=/etc/kubernetes/pki/${KUBE_CERT}.key \
--embed-certs=true \
--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Set the context parameters
kubectl config set-context ${KUBE_USER}@${CLUSTER_NAME} \
--cluster=${CLUSTER_NAME} \
--user=${KUBE_USER} \
--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Select the context to use
kubectl config use-context ${KUBE_USER}@${CLUSTER_NAME} --kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# View the generated configuration file
kubectl config view --kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

Distribute the certificates

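Distribute the K8s configuration and certificates to the other master nodes

for NODE in k8s-master2 k8s-master1; do

echo "--- $NODE ---"

scp -r /etc/kubernetes $NODE:/etc

done

3.4 Configure etcd

etcd binaries

- etcd: the key/value store that holds all cluster state. Kubernetes components read and write resource state in etcd through the API server

- We run etcd on the masters; the apiserver is pointed at the etcd cluster through its --etcd-servers flag

All etcd releases are listed at the URL below

https://github.com/etcd-io/etcd/releases

Download the etcd binaries on k8s-master1

etcd version: v3.2.24

Download etcd

Method 1: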

export ETCD_version=v3.2.24

wget https://github.com/etcd-io/etcd/releases/download/${ETCD_version}/etcd-${ETCD_version}-linux-amd64.tar.gz

tar -zxvf etcd-${ETCD_version}-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin etcd-${ETCD_version}-linux-amd64/etcd{,ctl}

Method 2:

docker pull registry.cn-beijing.aliyuncs.com/abcdocker/etcd:v3.2.24

or

docker pull quay.io/coreos/etcd:v3.2.24

# Use either the official image or the one I provide (run only one of the two docker run commands below)

docker run --rm -d --name abcdocker-etcd quay.io/coreos/etcd:v3.2.24

docker run --rm -d --name abcdocker-etcd registry.cn-beijing.aliyuncs.com/abcdocker/etcd:v3.2.24

sleep 10

docker cp abcdocker-etcd:/usr/local/bin/etcd /usr/local/bin

docker cp abcdocker-etcd:/usr/local/bin/etcdctl /usr/local/bin

On k8s-master1, distribute the etcd binaries to the other masters

for NODE in "k8s-master2"; do

echo "--- $NODE ---"

scp /usr/local/bin/etcd* $NODE:/usr/local/bin/

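done

On k8s-master1, create the etcd configuration file and distribute the related files

The final path of the configuration file on each host is /etc/etcd/etcd.config.yml (it is written to /opt first and distributed later); see the official sample at https://github.com/etcd-io/etcd/blob/master/etcd.conf.yml.sample

cat > /opt/etcd.config.yml <<EOF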

name: '{HOSTNAME}'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://{PUBLIC_IP}:2380'

listen-client-urls: 'https://{PUBLIC_IP}:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://{PUBLIC_IP}:2380'

advertise-client-urls: 'https://{PUBLIC_IP}:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master1=https://10.4.82.138:2380,k8s-master2=https://10.4.82.139:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:
  ca-file: '/etc/kubernetes/pki/etcd/ca.crt'
  cert-file: '/etc/kubernetes/pki/etcd/server.crt'
  key-file: '/etc/kubernetes/pki/etcd/server.key'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/ca.crt'
  auto-tls: true

peer-transport-security:
  ca-file: '/etc/kubernetes/pki/etcd/ca.crt'
  cert-file: '/etc/kubernetes/pki/etcd/peer.crt'
  key-file: '/etc/kubernetes/pki/etcd/peer.key'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/ca.crt'
  auto-tls: true

debug: false

log-package-levels:

log-output: default

force-new-cluster: false

EOF

# Edit the hosts and IP addresses in initial-cluster. We only run etcd on master1 and master2; add any other members separated by commas

Create the etcd systemd unit file

cat > /opt/etcd.service <<EOF

[Unit]

Description=Etcd Service

Documentation=https://coreos.com/etcd/docs/latest/

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

EOF

Distribute the systemd unit and configuration files

cd /opt/

for NODE in k8s-master1 k8s-master2; do

echo "--- $NODE ---"

ssh $NODE "mkdir -p /etc/etcd /var/lib/etcd"

scp /opt/etcd.service $NODE:/usr/lib/systemd/system/etcd.service

scp /opt/etcd.config.yml $NODE:/etc/etcd/etcd.config.yml

done

# After etcd.config.yml has been copied to master1 and master2, the placeholders in it still need to be replaced on each host

ssh k8s-master1 "sed -i 's/{HOSTNAME}/k8s-master1/g' /etc/etcd/etcd.config.yml" &&

ssh k8s-master1 "sed -i 's/{PUBLIC_IP}/10.4.82.138/g' /etc/etcd/etcd.config.yml" &&

ssh k8s-master2 "sed -i 's/{HOSTNAME}/k8s-master2/g' /etc/etcd/etcd.config.yml" &&

ssh k8s-master2 "sed -i 's/{PUBLIC_IP}/10.4.82.139/g' /etc/etcd/etcd.config.yml"
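The placeholder substitution can also be done as a filter, so that the template in /opt stays untouched and each host receives an already rendered file. This is a minimal sketch; the function name render_etcd_config is made up for the example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fill the {HOSTNAME} and {PUBLIC_IP} placeholders.
# Reads the template on stdin and writes the rendered text to stdout.
render_etcd_config() {
  local hostname="$1" ip="$2"
  sed -e "s/{HOSTNAME}/${hostname}/g" -e "s/{PUBLIC_IP}/${ip}/g"
}
```

One possible use is render_etcd_config k8s-master2 10.4.82.139 < /opt/etcd.config.yml | ssh k8s-master2 'cat > /etc/etcd/etcd.config.yml', which would replace the scp and sed steps for that host.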

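# Every etcd host needs this substitution; my etcd hosts are master1 and master2

Start all etcd members from k8s-master1

On first start, each etcd process waits for the other members to join the cluster, so systemctl start etcd may hang for a while. This is normal

Once all members are started, use the etcdctl commands further below to confirm that the cluster is healthy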

for NODE in k8s-master1 k8s-master2; do

echo "--- $NODE ---"

ssh $NODE "systemctl daemon-reload"

ssh $NODE "systemctl enable --now etcd" &

done

wait

Check that the process and ports are up

[root@k8s-master1 master]# ps -ef|grep etcd

root 14744 1 3 18:42 ? 00:00:00 /usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml

root 14754 12464 0 18:42 pts/0 00:00:00 grep --color=auto etcd

[root@k8s-master1 master]# lsof -i:2379

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

etcd 14744 root 6u IPv4 1951765 0t0 TCP localhost:2379 (LISTEN)

etcd 14744 root 7u IPv4 1951766 0t0 TCP k8s-master1:2379 (LISTEN)

etcd 14744 root 18u IPv4 1951791 0t0 TCP k8s-master1:53826->k8s-master1:2379 (ESTABLISHED)

etcd 14744 root 19u IPv4 1951792 0t0 TCP localhost:54924->localhost:2379 (ESTABLISHED)

etcd 14744 root 20u IPv4 1951793 0t0 TCP localhost:2379->localhost:54924 (ESTABLISHED)

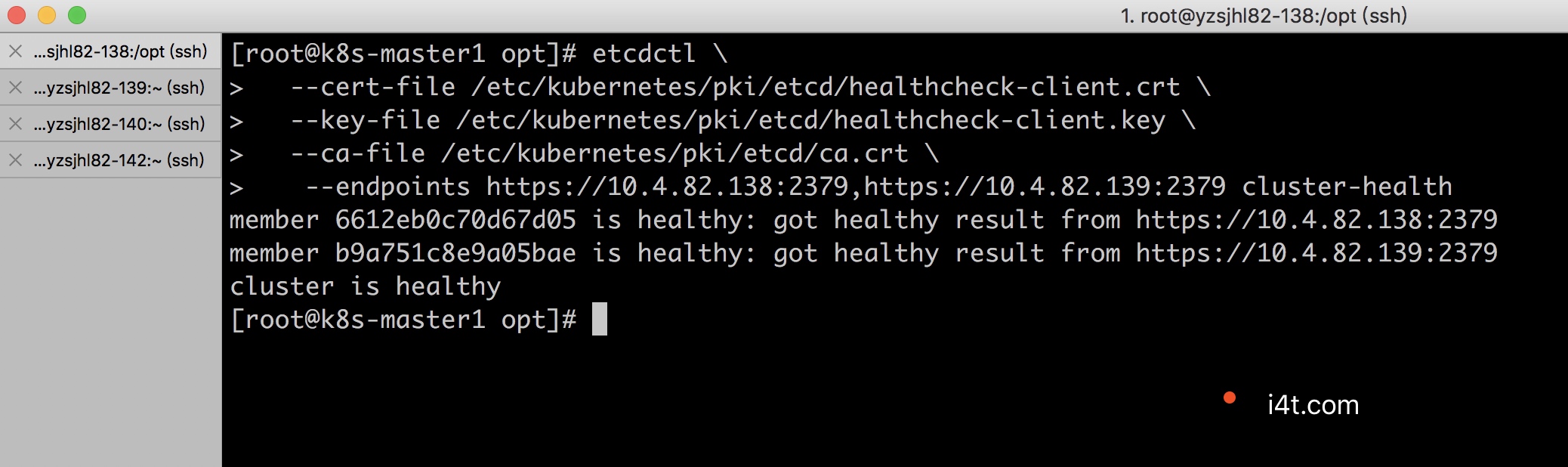

etcd 14744 root 21u IPv4 1951795 0t0 TCP k8s-master1:2379->k8s-master1:53826 (ESTABLISHED)

Run the command below on k8s-master1 to verify the etcd cluster health. The second command after it uses the v3 API to query the keys in the cluster

etcdctl \

--cert-file /etc/kubernetes/pki/etcd/healthcheck-client.crt \

--key-file /etc/kubernetes/pki/etcd/healthcheck-client.key \

--ca-file /etc/kubernetes/pki/etcd/ca.crt \

--endpoints https://10.4.82.138:2379,https://10.4.82.139:2379 cluster-health

# Fill in the etcd addresses and ports here

Query the cluster's keys with the v3 API

ETCDCTL_API=3 \

etcdctl \

--cert /etc/kubernetes/pki/etcd/healthcheck-client.crt \

--key /etc/kubernetes/pki/etcd/healthcheck-client.key \

--cacert /etc/kubernetes/pki/etcd/ca.crt \

--endpoints https://IP:PORT get / --prefix --keys-only

For more etcdctl operations, see the etcdctl command documentation on the official site.
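The comma-separated endpoint list is needed in several places (the etcdctl --endpoints flag here and the apiserver's --etcd-servers flag later). A small helper could build it from the member IPs. This is an illustrative sketch only, and etcd_endpoints is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: join etcd member IPs into a comma-separated HTTPS endpoint list.
# $1 = port, remaining arguments = member IPs.
etcd_endpoints() {
  local port="$1"; shift
  local ip list=""
  for ip in "$@"; do
    # Prepend a comma only when the list already has an entry.
    list="${list:+${list},}https://${ip}:${port}"
  done
  echo "$list"
}
```

With the two masters in this guide, etcd_endpoints 2379 10.4.82.138 10.4.82.139 yields the client endpoint string, and the same call with port 2380 yields the peer addresses.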

3.5 Kubernetes Master Install

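Next we deploy the services that run on the masters

First, an overview of what each component deployed on the masters does

- Kubelet

1. Manages the container lifecycle: it periodically fetches the desired state for its node (network, storage, and other configuration) from the API server and drives the relevant plugins (CRI, CNI, and so on) to reach that state.

2. Closes the read-only port, serves HTTPS on secure port 10250, authenticates and authorizes requests, and rejects anonymous and unauthorized access

3. Uses a kubeconfig to reach the apiserver's secure port

- Kube-Apiserver

1. Exposes CRUD operations on Kubernetes resources as REST APIs, along with authorization, authentication, admission control, and API registration

2. Closes the insecure port and serves HTTPS on secure port 6443

3. Strict authentication and authorization policies (RBAC, token)

4. Enables bootstrap token authentication to support kubelet TLS bootstrapping

5. Uses HTTPS to reach kubelet and etcd, so the traffic is encrypted

- Kube-controller-manager

1. Through the core control loop, watches resources via the Kubernetes API to maintain cluster state. These resources are managed by different controllers, such as the Replication Controller and the Namespace Controller, which handle features like autoscaling and rolling updates

2. Closes the insecure port and serves HTTPS on the secure port (10257 by default in this release; 10252 is the insecure port)

3. Uses a kubeconfig to reach the apiserver's secure port

- Kube-scheduler

Assigns one or more containers to nodes according to the scheduling policy so that the container runtime can run them. Scheduling is influenced by QoS requirements, hard and soft constraints, affinity, and similar factors

- HAProxy

Load-balances across the API servers, forwarding the haproxy port to port 6443 on every apiserver

nginx can be used instead

- Keepalived

Provides the virtual IP (VIP) and keeps it on an available master so that every component can reach a highly available master. Combined with haproxy (or nginx), traffic reaches port 6443 of an apiserver on the masters

Deployment notes

1. Fill in values for your own environment, or use the same ones as mine

2. The NIC is assumed to be named eth0 everywhere; if yours differs, adjust the configuration below or rename the CentOS 7 interface to eth0

3. If the cluster DNS or domain changes, kubelet-conf.yml must be updated

HA (haproxy + keepalived): skip HA if you only have a single master

First, install haproxy and keepalived on all masters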

for NODE in k8s-master1 k8s-master2; do

echo "--- $NODE---"

ssh $NODE 'yum install haproxy keepalived -y' &

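done

# The installs above run in the background; wait for them to finish

wait

After installation, remember to check the result (on every master)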

for NODE in k8s-master1 k8s-master2;do

echo "--- $NODE ---"

ssh $NODE "rpm -qa|grep haproxy"

ssh $NODE "rpm -qa|grep keepalived"

done

On k8s-master1, edit the configuration files and distribute them to the other masters

- Edit the haproxy configuration

cat > /opt/haproxy.cfg <<EOF

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

listen stats

bind *:8006

mode http

stats enable

stats hide-version

stats uri /stats

stats refresh 30s

stats realm Haproxy\ Statistics

stats auth admin:admin

frontend k8s-api

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-api

backend k8s-api

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-api-1 10.4.82.138:6443 check

server k8s-api-2 10.4.82.139:6443 check

EOF

# Add our master nodes as the final server lines; the port defaults to 6443

- Edit the keepalived configuration

cat > /opt/keepalived.conf <<EOF

vrrp_script haproxy-check {

script "/bin/bash /etc/keepalived/check_haproxy.sh"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance haproxy-vip {

state BACKUP

priority 101

interface eth0

virtual_router_id 47

advert_int 3

unicast_peer {

10.4.82.138

10.4.82.139

}

virtual_ipaddress {

10.4.82.141

}

track_script {

haproxy-check

}

}

EOF

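# unicast_peer lists the master node IPs

# virtual_ipaddress is the VIP; change it to suit your network

# interface is the name of the physical NIC

Add the keepalived health check script

# The heredoc delimiter is quoted ('EOF') so that $* and $VIRTUAL_IP are written to the file literally instead of being expanded (to empty strings) while the file is created

cat > /opt/check_haproxy.sh <<'EOF'
#!/bin/bash
VIRTUAL_IP=10.4.82.141
errorExit() {
echo "*** $*" 1>&2
exit 1
}
if ip addr | grep -q "$VIRTUAL_IP" ; then
curl -s --max-time 2 --insecure https://${VIRTUAL_IP}:8443/ -o /dev/null || errorExit "Error GET https://${VIRTUAL_IP}:8443/"
fi
EOF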
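When a script is generated through a heredoc, whether the delimiter is quoted decides if $-expressions are expanded at the moment the file is written or kept for the generated script to evaluate later. The short demonstration below is not part of the original procedure; the two function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Demonstration only: how heredoc quoting affects a generated script.
# VIRTUAL_IP is deliberately empty here, as it would be in a shell that never set it.
VIRTUAL_IP=""

# Unquoted delimiter: $VIRTUAL_IP is expanded now, leaving "grep -q " with no pattern.
unquoted_heredoc() {
cat <<EOF
grep -q $VIRTUAL_IP
EOF
}

# Quoted delimiter: the text is copied literally, so the generated script
# still contains $VIRTUAL_IP and resolves it when it runs.
quoted_heredoc() {
cat <<'EOF'
grep -q $VIRTUAL_IP
EOF
}
```

Comparing the two outputs shows why a health check written with an unquoted delimiter can silently lose its variables.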

## Remember to change the VIP address to match your environment

Distribute the keepalived and haproxy files to all masters

# Distribute the files

for NODE in k8s-master1 k8s-master2; do

echo "--- $NODE ---"

scp -r /opt/haproxy.cfg $NODE:/etc/haproxy/

scp -r /opt/keepalived.conf $NODE:/etc/keepalived/

scp -r /opt/check_haproxy.sh $NODE:/etc/keepalived/

ssh $NODE 'systemctl enable --now haproxy keepalived'

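done

Ping the VIP to check that it is reachable; wait four or five seconds first so that keepalived and haproxy can come up

ping 10.4.82.141

Here .141 is our floating IP (VIP)

If the VIP does not come up, keepalived has not started properly. Restart keepalived on each node, or check that the NIC name and IPs in /etc/keepalived/keepalived.conf are correct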

for NODE in k8s-master1 k8s-master2; do

echo "--- $NODE ---"

ssh $NODE 'systemctl restart haproxy keepalived'

done

Configure the master components

- kube-apiserver unit file

Edit the apiserver unit file

vim /opt/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--authorization-mode=Node,RBAC \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeClaimResize,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota,Priority,PodPreset \

--advertise-address={{ NODE_IP }} \

--bind-address={{ NODE_IP }} \

--insecure-port=0 \

--secure-port=6443 \

--allow-privileged=true \

--apiserver-count=2 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/audit.log \

--enable-swagger-ui=true \

--storage-backend=etcd3 \

--etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt \

--etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt \

--etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key \

--etcd-servers=https://10.4.82.138:2379,https://10.4.82.139:2379 \

--event-ttl=1h \

--enable-bootstrap-token-auth \

--client-ca-file=/etc/kubernetes/pki/ca.crt \

--kubelet-https \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--runtime-config=api/all,settings.k8s.io/v1alpha1=true \

--service-cluster-ip-range=10.96.0.0/12 \

--service-node-port-range=30000-32767 \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--tls-cert-file=/etc/kubernetes/pki/apiserver.crt \

--tls-private-key-file=/etc/kubernetes/pki/apiserver.key \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt \

--requestheader-username-headers=X-Remote-User \

--requestheader-group-headers=X-Remote-Group \

--requestheader-allowed-names=front-proxy-client \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key \

--feature-gates=PodShareProcessNamespace=true \

--v=2

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

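# Notes on the parameters to adjust

--etcd-servers: the IPs of the etcd nodes, comma-separated

--apiserver-count: best set to the number of master nodes. All kube-apiserver instances serve requests at the same time (there is no leader election among them); the count is used to keep the endpoints of the kubernetes Service in sync

--advertise-address: change the IP to the IP of the current node

--bind-address: change the IP to the IP of the current node

- kube-controller-manager.service unit file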

cat >> /opt/kube-controller-manager.service <<EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--allocate-node-cidrs=true \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--authentication-kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--authorization-kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--client-ca-file=/etc/kubernetes/pki/ca.crt \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt \

--cluster-signing-key-file=/etc/kubernetes/pki/ca.key \

--bind-address=127.0.0.1 \

--leader-elect=true \

--cluster-cidr=10.244.0.0/16 \

--service-cluster-ip-range=10.96.0.0/12 \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt \

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \

--root-ca-file=/etc/kubernetes/pki/ca.crt \

--use-service-account-credentials=true \

--controllers=*,bootstrapsigner,tokencleaner \

--experimental-cluster-signing-duration=86700h \

--feature-gates=RotateKubeletClientCertificate=true \

--v=2

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

- kube-scheduler.service unit file

cat >> /opt/kube-scheduler.service <<EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-scheduler \

--leader-elect=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig \

--address=127.0.0.1 \

--v=2

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

Distribute the files

for NODE in 10.4.82.138 10.4.82.139; do

echo "--- $NODE ---"

ssh $NODE 'mkdir -p /etc/kubernetes/manifests /var/lib/kubelet /var/log/kubernetes'

scp /opt/kube-*.service $NODE:/usr/lib/systemd/system/

# Inject the node IP

ssh $NODE "sed -ri '/bind-address/s#=[^\]+#=$NODE #' /usr/lib/systemd/system/kube-apiserver.service && sed -ri '/--advertise-address/s#=[^\]+#=$NODE #' /usr/lib/systemd/system/kube-apiserver.service"

done

# The for loop must list IP addresses, not hostnames, because the sed step writes $NODE into the unit file as the address
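As a hedged illustration of what the sed step in the loop does, the sketch below applies the same kind of substitution to sample text on stdin instead of editing the unit file over ssh. The function name `render_node_ip` is made up for this example, and it assumes a sed that supports `-E`.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the value of --bind-address and
# --advertise-address on stdin, mirroring the sed used in the loop.
render_node_ip() {
  node_ip="$1"
  # "=[^\]+" matches from "=" up to (not including) the trailing backslash,
  # so the line continuation at the end of the line is kept.
  sed -E "/--(bind|advertise)-address/s#=[^\\]+#=${node_ip} #"
}

# Dry run against two lines shaped like the kube-apiserver unit file.
printf '%s\n' '--advertise-address={{ NODE_IP }} \' '--secure-port=6443 \' |
  render_node_ip 10.4.82.138
```

Only the address line is rewritten; the port line passes through unchanged. This is also why the loop has to iterate over IPs: whatever is in $NODE ends up as the flag value.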

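The for loop copies the following files

kube-apiserver.service

kube-controller-manager.service

kube-scheduler.service

On k8s-master1, start the control-plane services (kube-apiserver, kube-controller-manager, kube-scheduler) on all master machines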

for NODE in k8s-master1 k8s-master2; do

echo "--- $NODE---"

ssh $NODE 'systemctl enable --now kube-apiserver kube-controller-manager kube-scheduler;

mkdir -p ~/.kube/

cp /etc/kubernetes/admin.kubeconfig ~/.kube/config;

kubectl completion bash > /etc/bash_completion.d/kubectl'

done

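# Without a kubeconfig, kubectl falls back to port 8080, whereas this cluster is reached on port 8443; if admin.kubeconfig is not copied to ~/.kube/config, kubectl commands fail with a connection error on port 8080

Verify the components

When this is done, verify with a simple command on any master node:

# Wait a moment here for the API server and the other services to finish starting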

[root@k8s-master1 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health": "true"}

etcd-1 Healthy {"health": "true"}

Configure Bootstrap

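This installation enables TLS authentication, so the kubelet on every node needs a certificate signed by the kube-apiserver CA before it can talk to kube-apiserver. Signing a certificate by hand for each node is tedious. Instead, the kubelet first connects to kube-apiserver as a predefined low-privilege user and then requests a signed certificate from it; when the bootstrap token matches, the node's kubelet certificate is signed and issued dynamically. For details, see TLS Bootstrapping and Authenticating with Bootstrap Tokens.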
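Bootstrap tokens have the form `<token-id>.<token-secret>`, with a 6-character id and a 16-character secret drawn from `[a-z0-9]`. The sketch below is only a format check and is not part of the original procedure; the function name `is_bootstrap_token` and the sample secret are assumptions made for this example.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if $1 looks like a bootstrap token,
# i.e. 6 characters, a dot, then 16 characters, all from [a-z0-9].
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Example with a made-up token value.
if is_bootstrap_token "8c5a8c.0123456789abcdef"; then
  echo "token format ok"
fi
```

A check like this can catch a truncated or mistyped token before it is written into a secret or a kubeconfig.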

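Note

The following steps set up automatic certificate issuance; skip them if you do not need it (nodes joining the k8s cluster need certificates issued through the apiserver)

The steps below can be run on any one master

First create a BOOTSTRAP_TOKEN on k8s-master1 and build the bootstrap kubeconfig file, then create the TLS bootstrap secret on k8s-master1 to be used for automatic signing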

TOKEN_PUB=$(openssl rand -hex 3)

TOKEN_SECRET=$(openssl rand -hex 8)

BOOTSTRAP_TOKEN="${TOKEN_PUB}.${TOKEN_SECRET}"

kubectl -n kube-system create secret generic bootstrap-token-${TOKEN_PUB} \

--type 'bootstrap.kubernetes.io/token' \

--from-literal description="cluster bootstrap token" \

--from-literal token-id=${TOKEN_PUB} \

--from-literal token-secret=${TOKEN_SECRET} \

--from-literal usage-bootstrap-authentication=true \

--from-literal usage-bootstrap-signing=true

Create the bootstrap kubeconfig file

KUBE_APISERVER=https://10.4.82.141:8443

CLUSTER_NAME="kubernetes"

KUBE_USER="kubelet-bootstrap"

KUBE_CONFIG="bootstrap.kubeconfig"

# Set the cluster parameters

kubectl config set-cluster ${CLUSTER_NAME} \

--certificate-authority=/etc/kubernetes/pki/ca.crt \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Set the context parameters

kubectl config set-context ${KUBE_USER}@${CLUSTER_NAME} \

--cluster=${CLUSTER_NAME} \

--user=${KUBE_USER} \

--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Set the client credentials

kubectl config set-credentials ${KUBE_USER} \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# Set the current context

kubectl config use-context ${KUBE_USER}@${CLUSTER_NAME} --kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

# View the generated config file

kubectl config view --kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

Authorize kubelet to create CSRs

kubectl create clusterrolebinding kubeadm:kubelet-bootstrap \

--clusterrole system:node-bootstrapper --group system:bootstrappers

Approve CSR requests

Approve all CSRs from the system:bootstrappers group

cat <<EOF | kubectl apply -f -

# Approve all CSRs for the group "system:bootstrappers"

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io

EOF

Allow kubelet to renew its own certificate

cat <<EOF | kubectl apply -f -

# Approve renewal CSRs for the group "system:nodes"

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: auto-approve-renewals-for-nodes
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io

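EOF

Note

The steps above set up automatic certificate issuance; skip them if you do not need it (nodes joining the k8s cluster need certificates issued through the apiserver)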

3.6 Kubernetes ALL Node

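This part adds the nodes to the k8s cluster. Before starting, copy the files that will be needed from k8s-master1 to all other nodes

For kubelet options, upstream recommends putting most parameters in a YAML file and pointing to it with --config; see https://godoc.org/k8s.io/kubernetes/pkg/kubelet/apis/config#KubeletConfiguration

1. Create the certificate directory on all nodes

2. Copy the CA certificate and bootstrap.kubeconfig (kubelet needs the settings in it) to the nodes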

for NODE in k8s-master1 k8s-master2 k8s-node1 k8s-node2; do

echo "--- $NODE ---"

ssh $NODE "mkdir -p /etc/kubernetes/pki /etc/kubernetes/manifests /var/lib/kubelet/"

for FILE in /etc/kubernetes/pki/ca.crt /etc/kubernetes/bootstrap.kubeconfig; do

scp ${FILE} $NODE:${FILE}

done

done

Generate kubelet.service (unit file) and kubelet-conf.yml (config file)

1. Generate the kubelet.service unit file

cat >> /opt/kubelet.service <<EOF

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

ExecStart=/usr/local/bin/kubelet \

--bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--config=/etc/kubernetes/kubelet-conf.yml \

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1 \

--allow-privileged=true \

--network-plugin=cni \

--cni-conf-dir=/etc/cni/net.d \

--cni-bin-dir=/opt/cni/bin \

--cert-dir=/etc/kubernetes/pki \

--v=2

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

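# --pod-infra-container-image: the base (pause) image for Pods

# --bootstrap-kubeconfig: the file copied in the step above

# --kubeconfig: the connection settings for the apiserver

# --config: path of the config file

2. Generate the kubelet-conf.yml config file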

cat >> /opt/kubelet-conf.yml <<EOF

address: 0.0.0.0
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
configMapAndSecretChangeDetectionStrategy: Watch
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuCFSQuotaPeriod: 100ms
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kind: KubeletConfiguration
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeLeaseDurationSeconds: 40
nodeStatusReportFrequency: 1m0s
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
port: 10250
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s

EOF

Copy the kubelet unit file and config

# Use IPs when distributing, because the sed commands below insert $NODE directly as the address

for NODE in 10.4.82.138 10.4.82.139 10.4.82.140 10.4.82.142; do

echo "--- $NODE ---"

# Copy the unit file

scp /opt/kubelet.service $NODE:/lib/systemd/system/kubelet.service

# Copy the config file

scp /opt/kubelet-conf.yml $NODE:/etc/kubernetes/kubelet-conf.yml

# Substitute the addresses in the config

ssh $NODE "sed -ri '/0.0.0.0/s#\S+\$#$NODE#' /etc/kubernetes/kubelet-conf.yml"

ssh $NODE "sed -ri '/127.0.0.1/s#\S+\$#$NODE#' /etc/kubernetes/kubelet-conf.yml"

done

###########

# The sed commands simply replace the original IPs with the node's own IP

[root@k8s-master1 kubernetes]# grep -rn "10.4.82.138" kubelet-conf.yml

1:address: 10.4.82.138

44:healthzBindAddress: 10.4.82.138

Start kubelet on all nodes from the k8s-master1 node

# Either hostnames or IPs work here

for NODE in 10.4.82.138 10.4.82.139 10.4.82.140 10.4.82.142; do

echo "--- $NODE ---"

ssh $NODE 'systemctl enable --now kubelet.service'

done

Verify the cluster

When this is done, verify with a simple command on any master node

[root@k8s-master1 kubernetes]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 NotReady <none> 19s v1.13.5

k8s-master2 NotReady <none> 17s v1.13.5

k8s-node1 NotReady <none> 17s v1.13.5

k8s-node2 NotReady <none> 17s v1.13.5
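In this walkthrough the nodes report NotReady at this point and only turn Ready after the network add-ons in section 4 are installed. As a small sketch (not part of the original steps), the filter below lists the nodes whose STATUS column is not exactly Ready, reading text in the `kubectl get node` format from stdin. The function name `not_ready_nodes` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: print the names of nodes whose STATUS is not exactly
# "Ready". Expects `kubectl get node` style text on stdin, header line first.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Dry run on sample text; on a live cluster one could pipe
# `kubectl get node` into the function instead.
printf '%s\n' \
  'NAME STATUS ROLES AGE VERSION' \
  'k8s-master1 NotReady <none> 19s v1.13.5' \
  'k8s-node1 Ready <none> 17s v1.13.5' |
  not_ready_nodes
```

Because the comparison is exact, a combined status such as a cordoned node would also be listed; that may or may not be what is wanted.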

The certificates are issued automatically at the same time

[root@k8s-master1 kubernetes]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-0PvaysNOBlR86YaXTgkjKwoRFVXVVVxCUYR_X-_SboM 69s system:bootstrap:8c5a8c Approved,Issued

node-csr-4lKI3gwJ5Mv4Lh96rJn--mF8mmAr9dh5RC0r2iogYlo 67s system:bootstrap:8c5a8c Approved,Issued

node-csr-aXKiI5FgkYq0vL5IHytfrY5VB7UEfxr-AnL1DkprmWo 67s system:bootstrap:8c5a8c Approved,Issued

node-csr-pBZ3_Qd-wnkmISuipHkyE6zDYhI0CQ6P94LVi0V0nGw 67s system:bootstrap:8c5a8c Approved,Issued

# Approve a certificate manually

kubectl certificate approve csr-l9d25

# csr-l9d25 is the name of the CSR

Or run kubectl get csr | grep Pending | awk '{print $1}' | xargs kubectl certificate approve

3.7 Kubernetes Proxy Install

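Kube-proxy concepts

1. A Service is in many cases only a concept; kube-proxy is what actually implements it

2. A kube-proxy process runs on every Node

3. For every TCP Kubernetes Service, kube-proxy sets up a socket server on the local Node to accept requests and forwards them evenly to a port on one of the backend Pods. Round Robin is the default load-balancing algorithm for this

4. While running, kube-proxy dynamically creates Service-related iptables rules. These rules redirect ClusterIP and NodePort traffic to the proxy port of the corresponding service in the kube-proxy process

5. kube-proxy queries and watches the API Server for changes to Services and Endpoints, creates a "service proxy object" for each Service, and keeps it in sync automatically. A service proxy object is a data structure inside the kube-proxy program; it includes a socket server that listens for requests to the service on a randomly chosen free local port. kube-proxy also creates an internal load balancer (LoadBalancer)

6. kube-proxy processes the changed Services one by one

a. If no cluster IP is set, nothing is done; otherwise it fetches all port definitions of the Service

b. It allocates a service proxy object for each Service port and creates the related iptables rules for the Service

c. It updates the forwarding address list of the Service in the load balancer component

7. At startup, and whenever it observes a Service or Endpoint change, kube-proxy adds four rule chains to the NAT table of the local iptables

a. KUBE-PORTALS-CONTAINER: access a service from a container through the Cluster IP and port.

b. KUBE-PORTALS-HOST: access a service from the host through the Cluster IP and port.

c. KUBE-NODEPORT-CONTAINER: access a service from a container through the Node IP and port.

d. KUBE-NODEPORT-HOST: access a service from the host through the Node IP and port.

kube-proxy is the key component that implements Services. It runs on every node, watches the API Server for changes to Service and Endpoint objects, and applies iptables rules accordingly to forward traffic. Here we need to set up a DaemonSet to run it and create the certificates it requires.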
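The round-robin distribution described in the list above can be pictured with a toy selector. This is an illustration only, not kube-proxy code; the function name `pick_backend` and the Pod endpoints are made up for this example.

```shell
#!/bin/sh
# Toy round-robin selector (illustration only).
# Usage: pick_backend REQUEST_NUMBER BACKEND...
pick_backend() {
  request_no="$1"
  shift
  # Request n goes to backend number (n mod number-of-backends).
  shift $(( request_no % $# ))
  printf '%s\n' "$1"
}

# Four consecutive requests spread over three made-up Pod endpoints.
for n in 0 1 2 3; do
  pick_backend "$n" 10.244.1.5:80 10.244.2.7:80 10.244.3.9:80
done
```

The fourth request wraps around to the first endpoint, which is the even spreading that item 3 refers to.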

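a. Binary deployment

Create a service account for kube-proxy

A Service Account provides an identity for processes in a Pod and for external users. Accounts in every Kubernetes cluster fall into two categories: service accounts, which are managed by Kubernetes, and user accounts

kubectl -n kube-system create serviceaccount kube-proxy

Bind the kube-proxy serviceaccount to the clusterrole system:node-proxier to grant it access under RBAC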

kubectl create clusterrolebinding kubeadm:kube-proxy \

--clusterrole system:node-proxier \

--serviceaccount kube-system:kube-proxy

Create the kubeconfig for kube-proxy

CLUSTER_NAME="kubernetes"

KUBE_CONFIG="kube-proxy.kubeconfig"

SECRET=$(kubectl -n kube-system get sa/kube-proxy \

--output=jsonpath='{.secrets[0].name}')

JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET \

--output=jsonpath='{.data.token}' | base64 -d)

kubectl config set-cluster ${CLUSTER_NAME} \

--certificate-authority=/etc/kubernetes/pki/ca.crt \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

kubectl config set-context ${CLUSTER_NAME} \

--cluster=${CLUSTER_NAME} \

--user=${CLUSTER_NAME} \

--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

kubectl config set-credentials ${CLUSTER_NAME} \

--token=${JWT_TOKEN} \

--kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

kubectl config use-context ${CLUSTER_NAME} --kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

kubectl config view --kubeconfig=/etc/kubernetes/${KUBE_CONFIG}

From k8s-master1, distribute the kube-proxy files to all nodes

for NODE in k8s-master2 k8s-node1 k8s-node2; do

echo "--- $NODE ---"

scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig

done

Create the kube-proxy unit file and config file

cat >> /opt/kube-proxy.conf <<EOF

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: "10.244.0.0/16"
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: true
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 0s
  scheduler: ""
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
resourceContainer: /kube-proxy
udpIdleTimeout: 250ms

EOF

# Generate the unit file

cat >> /opt/kube-proxy.service <<EOF

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-proxy \

--config=/etc/kubernetes/kube-proxy.conf \

--v=2

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

Copy the kube-proxy config and unit file to all nodes

for NODE in 10.4.82.138 10.4.82.139 10.4.82.140 10.4.82.142;do

echo "--- $NODE ---"

scp /opt/kube-proxy.conf $NODE:/etc/kubernetes/kube-proxy.conf

scp /opt/kube-proxy.service $NODE:/usr/lib/systemd/system/kube-proxy.service

ssh $NODE "sed -ri '/0.0.0.0/s#\S+\$#$NODE#' /etc/kubernetes/kube-proxy.conf"

done

# The sed command replaces bindAddress and healthzBindAddress

Start the kube-proxy service on all nodes

for NODE in 10.4.82.138 10.4.82.139 10.4.82.140 10.4.82.142; do

echo "--- $NODE ---"

ssh $NODE 'systemctl enable --now kube-proxy'

done

Check the proxy rules with ipvsadm

[root@k8s-master1 k8s-manual-files]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 10.4.82.138:6443 Masq 1 0 0

-> 10.4.82.139:6443 Masq 1 0 0

Confirm that ipvs mode is in use

curl localhost:10249/proxyMode

ipvs

b. DaemonSet deployment

To be written later

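4. Kubernetes Cluster Networking

Kubernetes networking differs from Docker networking. In Kubernetes there are four problems that need to be solved

- Highly coupled container-to-container communication: solved through localhost inside a Pod

- Pod-to-Pod communication: solved by implementing the network model

- Pod-to-Service communication: solved by the DNS service together with kube-proxy

- External-to-Service communication: likewise solved by the DNS service together with kube-proxy

Kubernetes also places the following basic requirements on any network implementation

- All containers can communicate without NAT

- All nodes can communicate without NAT

Kubernetes already has many network models implemented as Network Plugins, so you can choose the one whose features meet your needs. Network plugins in Kubernetes come in two forms

- CNI plugins: networks implemented against the appc/CNI specification. A CNI plugin is responsible for inserting a network interface into the container network namespace and making any necessary changes on the host. It should then assign an IP to the interface and set up routes consistent with the IP Address Management section by invoking the appropriate IPAM plugin. See the CNI Specification for details.

- Kubenet plugin: implements a basic cbr0 using the bridge and host-local CNI plugins. It is commonly used for Kubernetes cluster networking on public cloud services.

Network deployment

Choose one of the following deployment methods

4.1 Flannel deployment

flannel uses vxlan to create a Pod network through which all nodes can reach each other. It uses UDP port 8472, which must be opened (for example on public clouds such as AWS).

When flannel first starts, it obtains the Pod network range from etcd, allocates an unused /24 subnet to the local node, and then creates the flannel.1 interface (the name may differ, for example flannel1)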
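To make the /24 allocation concrete: with the Pod network 10.244.0.0/16 used in this guide, carving out one /24 per node allows 2^(24-16) = 256 node subnets. The sketch below only does that arithmetic; `subnet_count` is a name made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: how many subnets of prefix length $2 fit into a
# network of prefix length $1 (plain numbers, with $2 >= $1).
subnet_count() {
  echo $(( 1 << ($2 - $1) ))
}

subnet_count 16 24   # a cluster /16 split into per-node /24 subnets
```

If the cluster could ever grow beyond that number of nodes, a wider Pod network would have to be chosen before installation.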

This installation requires every node to pull image version v0.11.0

for NODE in 10.4.82.138 10.4.82.139 10.4.82.140 10.4.82.142;do

echo "--- $NODE ---"

ssh $NODE "docker pull quay.io/coreos/flannel:v0.11.0-amd64"

done

## If the network is poor, use the method below

for NODE in 10.4.82.138 10.4.82.139 10.4.82.140 10.4.82.142;do

echo "--- $NODE ---"

ssh $NODE "wget -P /opt/ http://down.i4t.com/flannel_v0.11.tar"

ssh $NODE "docker load -i /opt/flannel_v0.11.tar"

done

Once all nodes have pulled the image, edit the yaml file

wget http://down.i4t.com/kube-flannel.yml

sed -ri "s#\{\{ interface \}\}#eth0#" kube-flannel.yml

kubectl apply -f kube-flannel.yml

# Setting the network interface and applying the file are done on a master node

$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-amd64-bjfdf 1/1 Running 0 28s

kube-flannel-ds-amd64-tdzbr 1/1 Running 0 28s

kube-flannel-ds-amd64-wxkgb 1/1 Running 0 28s

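kube-flannel-ds-amd64-xnks7 1/1 Running 0 28s

4.2 Calico deployment

Calico

Calico integrates with cloud-native platforms (Docker, Mesos, OpenStack and others). It does not use a vSwitch; instead it runs a vRouter on every Kubernetes node and relies on the existing L3 forwarding of the Linux kernel. When data-center complexity grows, Calico can also make use of a BGP route reflector

Calico provides Kubernetes YAML files for quickly deploying the network to all nodes as containers, so the YAML files only need to be applied on a master

This installation still uses Calico 3.1

We need to download the calico.yml file and pull the images on all nodes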

wget -P /opt/ http://down.i4t.com/calico.yml

wget -P /opt/ http://down.i4t.com/rbac-kdd.yml

wget -P /opt/ http://down.i4t.com/calicoctl.yml

for NODE in 10.4.82.138 10.4.82.139 10.4.82.140 10.4.82.142;do

echo "--- $NODE ---"

ssh $NODE "docker pull quay.io/calico/typha:v0.7.4"

ssh $NODE "docker pull quay.io/calico/node:v3.1.3"

ssh $NODE "docker pull quay.io/calico/cni:v3.1.3"

done

### If the network is poor, use the method I provide below

for NODE in 10.4.82.138 10.4.82.139 10.4.82.140 10.4.82.142;do

echo "--- $NODE ---"

ssh $NODE "wget -P /opt/ http://down.i4t.com/calico.tar"

ssh $NODE "docker load -i /opt/calico.tar"

done

# Replace the network interface; eth0 is used by default

sed -ri "s#\{\{ interface \}\}#eth0#" /opt/calico.yml

Apply the yaml files

kubectl apply -f /opt/calico.yml

kubectl apply -f /opt/rbac-kdd.yml

kubectl apply -f /opt/calicoctl.yml

Check that the services are healthy

kubectl get pod -n kube-system --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-node-94hpv 2/2 Running 0 2m33s

kube-system calico-node-bzvj5 2/2 Running 0 2m33s

kube-system calico-node-kltt6 2/2 Running 0 2m33s

kube-system calico-node-r96k8 2/2 Running 0 2m33s

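kube-system calicoctl-54567cf646-7xrw5 1/1 Running 0 2m32s

Run commands in the calicoctl pod through kubectl exec to check that everything works

Since calicoctl 1.0, everything calicoctl manages is a resource; the ip pool, profile, policy and so on of earlier versions are all resources. Resources are defined in YAML or JSON, created and applied with calicoctl create or apply, and viewed with calicoctl get

1. Find the calicoctl pod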

kubectl -n kube-system get po -l k8s-app=calicoctl

NAME READY STATUS RESTARTS AGE

calicoctl-54567cf646-7xrw5 1/1 Running 0 6m22s

2. Check that it works

kubectl -n kube-system exec calicoctl-54567cf646-7xrw5 -- calicoctl get profiles -o wide

NAME LABELS

kns.default map[]

kns.kube-public map[]

kns.kube-system map[]

kubectl -n kube-system exec calicoctl-54567cf646-7xrw5 -- calicoctl get node -o wide

NAME ASN IPV4 IPV6

k8s-master1 (unknown) 10.4.82.138/24

k8s-master2 (unknown) 10.4.82.139/24

k8s-node1 (unknown) 10.4.82.140/24

k8s-node2 (unknown) 10.4.82.142/24

The network is now installed. The small k8s cluster is usable at this point and the Node status is Ready

4.3 CoreDNS

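Since 1.11, CoreDNS has replaced Kube DNS as the cluster's service discovery component. Pods need to communicate with each other, and to do so they need to know each other's IP. That can be obtained through the Kubernetes API, but Pod IPs change over the Pod lifecycle, so this approach is inflexible and also adds load on the API Server. Kubernetes therefore provides a DNS service for lookups, so that a Pod can use a Service name as a domain name to look up the IP address. Users no longer need to care about the actual Pod IP, and DNS updates its resource records as Pods change

CoreDNS is an open-source DNS solution maintained by the CNCF. Its predecessor is SkyDNS. It builds its server framework on part of Caddy, which makes for a fast and flexible DNS server. Every CoreDNS feature can be treated as plugin middleware, such as Log, Cache and Kubernetes, and records can even be stored in Redis or Etcd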
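As a small sketch of the naming scheme that makes Service lookups by name possible: a Service is resolvable as `<service>.<namespace>.svc.<cluster domain>`, where the cluster domain in this guide is the `clusterDomain: cluster.local` value from kubelet-conf.yml. The helper name `service_fqdn` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: build the in-cluster DNS name of a Service.
# $1 = service name, $2 = namespace, $3 = cluster domain
# (defaults to cluster.local, the clusterDomain used in this guide).
service_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

service_fqdn kubernetes default
service_fqdn kube-dns kube-system
```

These are the same names that the nslookup check in section 4.5 returns for the kubernetes Service and for the DNS server itself.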

Same steps as before: pull the image on all nodes

for NODE in 10.4.82.138 10.4.82.139 10.4.82.140 10.4.82.142;do

echo "--- $NODE ---"

ssh $NODE "docker pull coredns/coredns:1.4.0"

done

### If the network is poor, fetch the image I provide instead

for NODE in 10.4.82.138 10.4.82.139 10.4.82.140 10.4.82.142;do

echo "--- $NODE ---"

ssh $NODE "wget -P /opt/ http://down.i4t.com/coredns_v1.4.tar"

ssh $NODE "docker load -i /opt/coredns_v1.4.tar"

done

# After pulling the image, download the yaml file and apply it

wget http://down.i4t.com/coredns.yml

kubectl apply -f coredns.yml

Once applied, the pods start successfully (Running is the normal state)

kubectl get pod -n kube-system -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

coredns-d7964c8db-vgl5l 1/1 Running 0 21s

coredns-d7964c8db-wvz5k 1/1 Running 0 21s

Checking the nodes now shows that they are back to normal

kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready <none> 105m v1.13.5

k8s-master2 Ready <none> 105m v1.13.5

k8s-node1 Ready <none> 105m v1.13.5

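k8s-node2 Ready <none> 105m v1.13.5

CoreDNS is now installed; you can skip straight to 4.5 to verify

4.4 KubeDNS

Kube DNS is an important add-on for communication between Pods inside a Kubernetes cluster. It lets Pods connect to Services by domain name, and it resolves addresses by watching for Service and Endpoint changes

If you do not want to use CoreDNS, delete it first and make sure its pods and svc no longer exist before installing kubeDNS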

kubectl delete -f /opt/coredns.yml

kubectl -n kube-system get pod,svc -l k8s-app=kube-dns

No resources found.

Create KubeDNS

wget -P /opt/ http://down.i4t.com/kubedns.yml

kubectl apply -f /opt/kubedns.yml

After creation, check the pod status

kubectl -n kube-system get pod,svc -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

pod/kube-dns-57f56f74cb-gtn99 3/3 Running 0 107s

pod/kube-dns-57f56f74cb-zdj92 3/3 Running 0 107s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 108s

Installation complete; you can skip straight to 4.5 to verify
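The DNS Service IP used throughout this guide, 10.96.0.10, is the clusterDNS value in kubelet-conf.yml and has to fall inside the --service-cluster-ip-range of 10.96.0.0/12. The sketch below checks that kind of membership with shell arithmetic; the helper names `ip_to_int` and `in_cidr` are made up for this example, and it handles IPv4 only.

```shell
#!/bin/sh
# Hypothetical helpers for checking that an IPv4 address lies inside a CIDR.

# Convert the dotted-quad address in $1 to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $1 is inside network $2 (for example 10.96.0.0/12).
in_cidr() {
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "${2%/*}") & mask )) ]
}

in_cidr 10.96.0.10 10.96.0.0/12 && echo "DNS IP is inside the service range"
```

The same check shows that the Pod network 10.244.0.0/16 does not overlap the service range, since an address such as 10.244.0.5 fails it.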

4.5 Verification

Note: newer busybox versions have an nslookup bug, so avoid them and use the version shown here!

Create a yaml file to test that everything works

cat<<EOF | kubectl apply -f -

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: busybox:1.28.3
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always

EOF

After it is created, check it

kubectl get pod

NAME READY STATUS RESTARTS AGE

busybox 1/1 Running 0 4s

Use nslookup to check that an address is returned

kubectl exec -ti busybox -- nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

A reminder: do not use a busybox image that is too new, or you are likely to run into problems

https://my.oschina.net/zlhblogs/blog/298076

Original article: https://k.i4t.com/kubernetes1.13_install.html
