1. A Ceph cluster: assume one is already up and running. There is plenty of documentation online on how to set one up.

2. Integrating Ceph with Kubernetes

2.1 Disable RBD features

On the Ceph release used here, a new RBD image gets five features by default: layering, exclusive-lock, object-map, fast-diff and deep-flatten.

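At the time of writing, the kernel RBD client on these nodes supports only layering, and an image with any of the other features cannot be mapped. Change the default configuration accordingly.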

On every Ceph node, add this line to /etc/ceph/ceph.conf:

    rbd_default_features = 1

Images created after this change will have only this one feature.
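rbd_default_features is a bitmask of feature bits. The sketch below assumes the standard Ceph bit values: layering=1, striping=2, exclusive-lock=4, object-map=8, fast-diff=16, deep-flatten=32 and journaling=64. The hypothetical helper rbd_feature_mask adds up the bits for a list of feature names. The result shows why 1 means "layering only", and why the five default features listed above add up to 61.

```shell
# Hypothetical helper (not part of Ceph): print the rbd_default_features
# bitmask for a list of RBD feature names, using the standard feature bits.
rbd_feature_mask() {
    mask=0
    for feature in "$@"; do
        case "$feature" in
            layering)       bit=1 ;;
            striping)       bit=2 ;;
            exclusive-lock) bit=4 ;;
            object-map)     bit=8 ;;
            fast-diff)      bit=16 ;;
            deep-flatten)   bit=32 ;;
            journaling)     bit=64 ;;
            *) echo "unknown feature: $feature" >&2; return 1 ;;
        esac
        mask=$((mask | bit))   # OR the feature's bit into the mask
    done
    echo "$mask"
}

# layering only: the value written to ceph.conf above (prints 1)
rbd_feature_mask layering
# the five features listed in 2.1 (prints 61)
rbd_feature_mask layering exclusive-lock object-map fast-diff deep-flatten
```

Images created before the change keep their old features. Where rbd feature disable is available, it can switch the extra features off per image (see rbd help feature disable on your release). The option rbd create --image-feature layering sets the features for a single image without touching ceph.conf.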

Verify:

    # ceph --show-config | grep rbd | grep features
    rbd_default_features = 1

2.2 Create the ceph-secret Kubernetes Secret. The Kubernetes volume plugin uses this Secret to access the Ceph cluster:

Get the key of client.admin and base64-encode it:

    # ceph auth get-key client.admin
    AQBRIaFYqWT8AhAAUtmJgeNFW/o1ylUzssQQhA==

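    # echo -n "AQBRIaFYqWT8AhAAUtmJgeNFW/o1ylUzssQQhA==" | base64
    QVFCUklhRllxV1Q4QWhBQVV0bUpnZU5GVy9vMXlsVXpzc1FRaEE9PQ==

Use echo -n here. A plain echo appends a newline, and that newline ends up inside the encoded key.

Create ceph-secret.yaml. Set the key field under data to the encoded value obtained above:

    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-secret
    data:
      key: QVFCUklhRllxV1Q4QWhBQVV0bUpnZU5GVy9vMXlsVXpzc1FRaEE9PQ==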
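The encoding step and the manifest can be scripted together. This is a minimal sketch using a hypothetical helper, make_ceph_secret_yaml. In it, printf '%s' does the job of echo -n, so no newline is encoded into the key. It assumes GNU base64, whose default 76-column line wrapping does not affect a 40-character Ceph key.

```shell
# Hypothetical helper: print a ceph-secret manifest for the given Ceph key.
# printf '%s' writes the key without a trailing newline before encoding.
make_ceph_secret_yaml() {
    ceph_key="$1"
    encoded=$(printf '%s' "$ceph_key" | base64)
    cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
data:
  key: $encoded
EOF
}

# On a Ceph node this would be:
#   make_ceph_secret_yaml "$(ceph auth get-key client.admin)" > ceph-secret.yaml
make_ceph_secret_yaml "AQBRIaFYqWT8AhAAUtmJgeNFW/o1ylUzssQQhA=="
```

As an alternative, kubectl create secret generic ceph-secret --from-literal=key="$(ceph auth get-key client.admin)" creates an equivalent Opaque secret and does the base64 encoding itself.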

Create ceph-secret:

    # kubectl create -f ceph-secret.yaml
    secret "ceph-secret" created
    # kubectl get secret
    NAME                  TYPE                                  DATA      AGE
    ceph-secret           Opaque                                1         2d
    default-token-5vt3n   kubernetes.io/service-account-token   3         106d

3. Kubernetes Persistent Volume and Persistent Volume Claim

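Concepts: a PV (PersistentVolume) is a storage resource in the cluster. A PVC (PersistentVolumeClaim) is a request for storage, which Kubernetes binds to an available PV that satisfies it.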

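Note: wherever the steps below refer to a name, the names must match exactly. This covers the RBD image name in the PV, the secret name in secretRef, and the claim name in the pod.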
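A quick sanity check before creating anything is to compare the PVC's metadata.name with the claimName in the pod manifest. The helpers first_value and check_claim_name below are hypothetical. Their line-based sed matching is an assumption that only holds for simple manifests like the ones in this post; it is not a YAML parser.

```shell
# Hypothetical helper: print the value of the first "<key>: <value>" line in
# file $2 whose key is exactly $1 (list items like "- name:" are skipped).
first_value() {
    sed -n "s/^[[:space:]]*$1:[[:space:]]*//p" "$2" | head -n 1
}

# Hypothetical check: the pod's claimName ($2) must equal the PVC's name ($1).
check_claim_name() {
    pvc_name=$(first_value name "$1")
    claim_ref=$(first_value claimName "$2")
    if [ "$pvc_name" = "$claim_ref" ]; then
        echo "ok: pod uses claim $claim_ref"
    else
        echo "mismatch: PVC is '$pvc_name' but pod asks for '$claim_ref'" >&2
        return 1
    fi
}

# Usage: check_claim_name jdk-pvc.yaml ceph-busyboxpod.yaml
```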

3.1 Create the disk image (using a JDK stored in Ceph as the example)

    # rbd create jdk-image -s 1G
    # rbd info jdk-image
    rbd image 'jdk-image':
            size 1024 MB in 256 objects
            order 22 (4096 kB objects)
            block_name_prefix: rbd_data.37642ae8944a
            format: 2
            features: layering
            flags:
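To confirm that a new image can be mapped by a layering-only kernel client, check the features line of rbd info. The helper only_layering below is hypothetical and assumes the rbd info text layout shown above.

```shell
# Hypothetical helper: read `rbd info` output on stdin and succeed only when
# the features line lists exactly "layering" and nothing else.
only_layering() {
    # keep the text after "features:", then drop all whitespace
    features=$(sed -n 's/^[[:space:]]*features:[[:space:]]*//p' | tr -d '[:space:]')
    [ "$features" = "layering" ]
}

# Usage on a Ceph node:
#   rbd info jdk-image | only_layering && echo "jdk-image: layering only"
```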

3.2 Create the PV (reusing the ceph-secret created earlier)

Create jdk-pv.yaml:

monitors: the Ceph monitor (mon) addresses. List every mon you have.

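    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: jdk-pv
    spec:
      # Used only to match PVCs; the RBD image itself is 1G and is not resized.
      capacity:
        storage: 2Gi
      accessModes:
        - ReadWriteOnce
      rbd:
        monitors:
          - 10.10.10.1:6789
        pool: rbd
        image: jdk-image
        user: admin
        secretRef:
          name: ceph-secret
        fsType: xfs
        readOnly: false
      # The Kubernetes docs list Recycle as supported only for NFS and HostPath;
      # for RBD, Retain is the usual choice.
      persistentVolumeReclaimPolicy: Recycle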
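When there are several monitors, the monitors list can be generated from a comma-separated list. This sketch uses a hypothetical helper, monitors_yaml, whose output is indented to sit under rbd: in the PV spec. The address 10.10.10.2:6789 in the usage line is a made-up second monitor.

```shell
# Hypothetical helper: turn "host:port,host:port,..." into the YAML
# "monitors:" list used under rbd: in the PV spec.
monitors_yaml() {
    echo "    monitors:"
    printf '%s\n' "$1" | tr ',' '\n' | while read -r mon; do
        # skip empty entries, e.g. from a trailing comma
        [ -n "$mon" ] && echo "      - $mon"
    done
}

# Usage: monitors_yaml 10.10.10.1:6789,10.10.10.2:6789
```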

Create it:

    # kubectl create -f jdk-pv.yaml
    persistentvolume "jdk-pv" created
    # kubectl get pv
    NAME      CAPACITY   ACCESSMODES   RECLAIMPOLICY   STATUS      CLAIM                REASON    AGE
    ceph-pv   1Gi        RWO           Recycle         Bound       default/ceph-claim             1d
    jdk-pv    2Gi        RWO           Recycle         Available                                  1m
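To script a wait for a PV to become Available, pull the STATUS column out of kubectl get pv. This is a sketch with a hypothetical pv_status helper that assumes the column layout shown above. Alternatively, kubectl get pv jdk-pv -o jsonpath='{.status.phase}' returns the same phase without depending on column positions.

```shell
# Hypothetical helper: print the STATUS (5th column) of PV $1 from the
# plain-text `kubectl get pv` output on stdin. The empty CLAIM and REASON
# columns of an unbound PV come after STATUS, so the index does not shift.
pv_status() {
    awk -v pv="$1" '$1 == pv { print $5 }'
}

# Usage: kubectl get pv | pv_status jdk-pv
```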

3.3 Create the PVC

Create jdk-pvc.yaml:

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: jdk-claim
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi

Create it:

    # kubectl create -f jdk-pvc.yaml
    persistentvolumeclaim "jdk-claim" created
    # kubectl get pvc
    NAME         STATUS    VOLUME    CAPACITY   ACCESSMODES   AGE
    ceph-claim   Bound     ceph-pv   1Gi        RWO           2d
    jdk-claim    Bound     jdk-pv    2Gi        RWO           39s
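Why did a 1Gi claim end up with the 2Gi jdk-pv? ceph-pv was already Bound, so jdk-pv was the only Available RWO volume large enough. The sketch below is a simplified model of that matching, not the actual controller code. Among Available PVs that offer the requested access mode and at least the requested size, it picks the smallest. pick_pv and its input format are hypothetical, with sizes in whole Gi.

```shell
# Simplified, hypothetical model of PVC-to-PV matching.
# stdin: one PV per line as "name sizeGi modes status", e.g. "jdk-pv 2 RWO Available"
# $1: requested size in Gi, $2: requested access mode (e.g. RWO)
# Prints the smallest Available PV that fits; exits non-zero if none does.
pick_pv() {
    awk -v gi="$1" -v mode="$2" '
        $4 == "Available" && $2 >= gi && (("," $3 ",") ~ ("," mode ",")) {
            if (best == "" || $2 < best_gi) { best = $1; best_gi = $2 }
        }
        END { if (best != "") print best; else exit 1 }'
}

# Usage, with the PVs from the listing above:
#   printf 'ceph-pv 1 RWO Bound\njdk-pv 2 RWO Available\n' | pick_pv 1 RWO
```

The claim then reports the full capacity of the PV it bound to, which is why jdk-claim shows 2Gi.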

3.4 Create a pod that mounts the Ceph RBD volume:

Create ceph-busyboxpod.yaml:

    apiVersion: v1
    kind: Pod
    metadata:
      name: ceph-busybox
    spec:
      containers:
        - name: ceph-busybox
          image: busybox
          command: ["sleep", "600000"]
          volumeMounts:
            - name: ceph-vol1
              mountPath: /usr/share/busybox
              readOnly: false
      volumes:
        - name: ceph-vol1
          persistentVolumeClaim:
            claimName: jdk-claim

Create it:

    # kubectl create -f ceph-busyboxpod.yaml
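Once the pod is Running, one way to check that the volume really comes from RBD is to look at the container's mount table. This sketch uses a hypothetical helper, mounted_from_rbd. It assumes the device mapped by the kubelet shows up as /dev/rbdN in /proc/mounts inside the container.

```shell
# Hypothetical helper: read /proc/mounts-style lines on stdin and succeed if
# mount point $1 is backed by a kernel RBD device (/dev/rbd0, /dev/rbd1, ...).
mounted_from_rbd() {
    awk -v mp="$1" '$2 == mp && $1 ~ /^\/dev\/rbd[0-9]+$/ { found = 1 }
                    END { exit !found }'
}

# Usage:
#   kubectl exec ceph-busybox -- cat /proc/mounts | mounted_from_rbd /usr/share/busybox
```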

A few notes on persistence with Ceph RBD:

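1. Reliability depends on the Ceph cluster itself.

2. A read-write RBD volume can be attached to only one node at a time; it cannot be shared across nodes.

3. Only a single consumer can mount the volume read-write, so there are no simultaneous writers. In read-only mode, multiple pods can mount it at once.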
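These access-mode limits can be written down as a small lookup. The sketch follows the Kubernetes volumes documentation for RBD: ReadWriteOnce and ReadOnlyMany are supported, ReadWriteMany is not. rbd_supports_mode is a hypothetical helper, and modes other than these three are reported as unknown rather than classified.

```shell
# Hypothetical lookup of RBD access-mode support (per the Kubernetes docs):
# returns 0 if supported, 1 if not, 2 for modes not covered here.
rbd_supports_mode() {
    case "$1" in
        ReadWriteOnce|RWO|ReadOnlyMany|ROX) return 0 ;;
        ReadWriteMany|RWX) return 1 ;;
        *) echo "unknown access mode: $1" >&2; return 2 ;;
    esac
}

# Usage: rbd_supports_mode ReadWriteMany || echo "RBD cannot be shared read-write"
```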

Official documentation:

https://kubernetes.io/docs/user-guide/volumes/#rbd

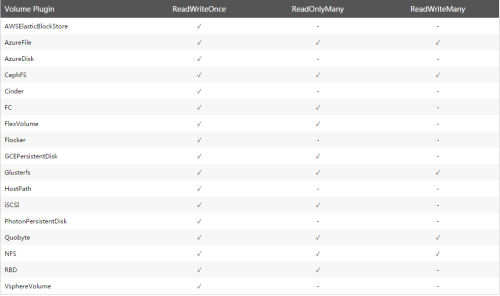

Appendix: the official Kubernetes documentation on volume access modes