Before starting this lab, you must already have deployed a single-master k8s cluster.

Blog post covering the single-node deployment:

https://blog.51cto.com/14449528/2469980

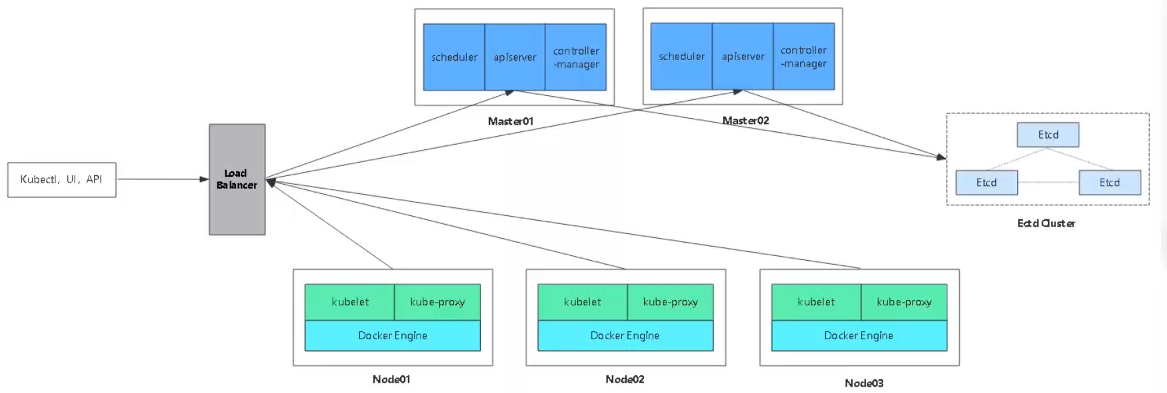

Multi-master cluster architecture diagram:

Deploying master2

1. First, stop the firewall service on master2

[root@master2 ~]# systemctl stop firewalld.service

[root@master2 ~]# setenforce 0

2. On master1, copy the kubernetes directory and the server component unit files to master2

[root@master1 k8s]# scp -r /opt/kubernetes/ root@192.168.18.140:/opt

[root@master1 k8s]# scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.18.140:/usr/lib/systemd/system/

3. Edit the configuration file on master2

[root@master2 ~]# cd /opt/kubernetes/cfg/

[root@master2 cfg]# vim kube-apiserver

5 --bind-address=192.168.18.140 \

7 --advertise-address=192.168.18.140 \
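If you prefer not to edit the file by hand, the two address flags shown above could also be rewritten with sed. This is only a sketch: the function name `set_apiserver_addr` is made up for illustration, and it assumes GNU sed and that each flag is written as `--flag=IP`, as in the lines above.

```shell
# set_apiserver_addr is a hypothetical helper, not part of Kubernetes.
# It rewrites --bind-address and --advertise-address in the given file
# so that both point at the given IP. Other flags are left untouched.
set_apiserver_addr() {
    local cfg="$1" ip="$2"
    sed -i -E "s/--(bind|advertise)-address=[0-9.]+/--\1-address=${ip}/g" "$cfg"
}
```

On master2 this would be called as `set_apiserver_addr /opt/kubernetes/cfg/kube-apiserver 192.168.18.140`.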

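# The IP addresses on lines 5 and 7 must be changed to master2's address

4. Copy the existing etcd certificates from master1 for master2 to use

(Note: master2 must have the etcd certificates, otherwise the apiserver service cannot start)

[root@master1 k8s]# scp -r /opt/etcd/ root@192.168.18.140:/opt/

root@192.168.18.140's password: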

etcd 100% 516 535.5KB/s 00:00

etcd 100% 18MB 90.6MB/s 00:00

etcdctl 100% 15MB 80.5MB/s 00:00

ca-key.pem 100% 1675 1.4MB/s 00:00

ca.pem 100% 1265 411.6KB/s 00:00

server-key.pem 100% 1679 2.0MB/s 00:00

server.pem 100% 1338 429.6KB/s 00:00

5. Start the three component services on master2

[root@master2 cfg]# systemctl start kube-apiserver.service ## start the service

[root@master2 cfg]# systemctl enable kube-apiserver.service ## enable the service at boot

[root@master2 cfg]# systemctl start kube-controller-manager.service

[root@master2 cfg]# systemctl enable kube-controller-manager.service

[root@master2 cfg]# systemctl start kube-scheduler.service

[root@master2 cfg]# systemctl enable kube-scheduler.service

6. Modify the environment variables

[root@master2 cfg]# vim /etc/profile

export PATH=$PATH:/opt/kubernetes/bin/ ## add the kubernetes binaries to PATH

[root@master2 cfg]# source /etc/profile ## reload the profile

[root@master2 cfg]# kubectl get node ## view the cluster node information

NAME STATUS ROLES AGE VERSION

192.168.18.129 Ready <none> 21h v1.12.3

192.168.18.130 Ready <none> 22h v1.12.3

# At this point you can see that node1 and node2 have joined

------ master2 deployment is now complete ------
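As a quick sanity check of the `kubectl get node` output above, the number of Ready nodes can be counted with awk. A minimal sketch: `count_ready_nodes` is a hypothetical helper name, and it assumes the column layout shown above, with STATUS in the second column.

```shell
# count_ready_nodes is a hypothetical helper.
# It reads `kubectl get node` output on stdin, skips the header line,
# and prints how many nodes have a STATUS of exactly "Ready".
count_ready_nodes() {
    awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage on master2:
#   kubectl get node | count_ready_nodes
```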

Nginx load balancer deployment

Perform the same steps on lb01 and lb02

Install the nginx service, and copy the nginx.sh and keepalived.conf scripts to the home directory

[root@localhost ~]# ls

anaconda-ks.cfg keepalived.conf 公共 視頻 文檔 音樂

initial-setup-ks.cfg nginx.sh 模板 圖片 下載 桌面

[root@lb1 ~]# systemctl stop firewalld.service

[root@lb1 ~]# setenforce 0

[root@lb1 ~]# vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

## Reload the yum repository list

[root@lb1 ~]# yum list

## Install the nginx service

[root@lb1 ~]# yum install nginx -y

[root@lb1 ~]# vim /etc/nginx/nginx.conf

## Insert the stream module below line 12

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.18.128:6443; # IP address of master1

server 192.168.18.140:6443; # IP address of master2

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}
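The stream block above lists one `server` line per apiserver, and nginx spreads incoming connections across them (round-robin by default). If more masters are added later, the block could be generated rather than typed by hand. The following is a sketch under that assumption: `gen_stream_block` is a hypothetical helper, not part of nginx or of the nginx.sh script mentioned earlier.

```shell
# gen_stream_block is a hypothetical helper.
# It prints a stream block equivalent to the one above, with one
# "server IP:6443;" line for every IP passed as an argument.
gen_stream_block() {
    local ip
    echo 'stream {'
    echo "    log_format main '\$remote_addr \$upstream_addr - [\$time_local] \$status \$upstream_bytes_sent';"
    echo '    access_log /var/log/nginx/k8s-access.log main;'
    echo '    upstream k8s-apiserver {'
    for ip in "$@"; do
        echo "        server ${ip}:6443;"
    done
    echo '    }'
    echo '    server {'
    echo '        listen 6443;'
    echo '        proxy_pass k8s-apiserver;'
    echo '    }'
    echo '}'
}

# Usage: print the block for master1 and master2
#   gen_stream_block 192.168.18.128 192.168.18.140
```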

## Check the syntax

[root@lb1 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

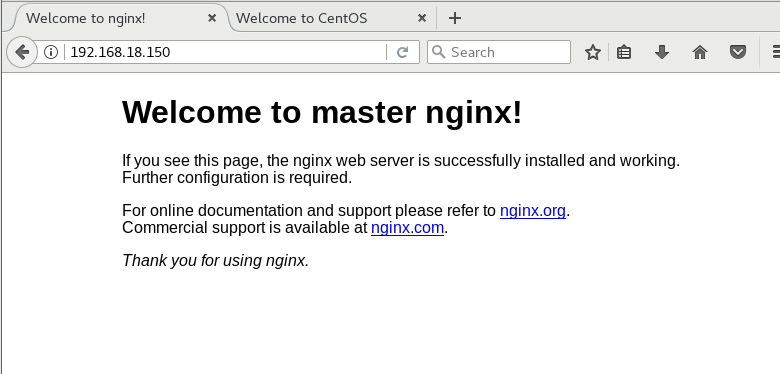

## Modify the home page so the two load balancers can be told apart

[root@lb1 ~]# cd /usr/share/nginx/html/

[root@lb1 html]# ls

50x.html index.html

[root@lb1 html]# vim index.html

14 <h1>Welcome to master nginx!</h1> # add "master" on line 14 to tell the two pages apart

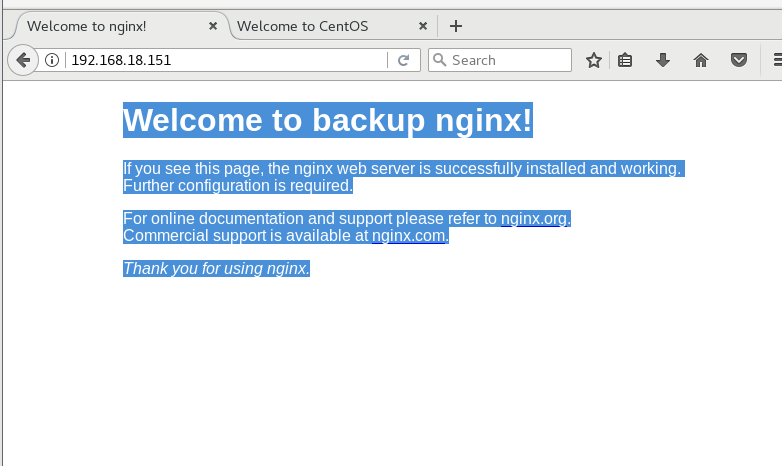

[root@lb2 ~]# cd /usr/share/nginx/html/

[root@lb2 html]# ls

50x.html index.html

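[root@lb2 html]# vim index.html

14 <h1>Welcome to backup nginx!</h1> # add "backup" on line 14 to tell the two pages apart

## Start the service

[root@lb1 ~]# systemctl start nginx

[root@lb2 ~]# systemctl start nginx

Verify in a browser: opening 192.168.18.150 should show the master nginx home page

Verify in a browser: opening 192.168.18.151 should show the backup nginx home page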

keepalived installation and deployment

Perform the same steps on lb01 and lb02

1. Install keepalived

[root@lb1 html]# yum install keepalived -y

2. Modify the configuration file

[root@lb1 ~]# ls

anaconda-ks.cfg keepalived.conf 公共 視頻 文檔 音樂

initial-setup-ks.cfg nginx.sh 模板 圖片 下載 桌面

[root@lb1 ~]# cp keepalived.conf /etc/keepalived/keepalived.conf

cp: overwrite "/etc/keepalived/keepalived.conf"? yes

[root@lb1 ~]# vim /etc/keepalived/keepalived.conf

# lb01 is the Master; its configuration is as follows:

! Configuration File for keepalived

global_defs {

# Notification recipient addresses

notification_email {

[email protected]

[email protected]

[email protected]

}

# Notification sender address

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51 # VRRP router ID; each instance has a unique one

priority 100 # priority; set to 90 on the backup server

advert_int 1 # VRRP advertisement (heartbeat) interval; default is 1 second

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.18.100/24

}

track_script {

check_nginx

}

}

# lb02 is the Backup; its configuration is as follows:

! Configuration File for keepalived

global_defs {

# Notification recipient addresses

notification_email {

[email protected]

[email protected]

[email protected]

}

# Notification sender address

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51 # VRRP router ID; each instance has a unique one

priority 90 # priority; the backup server is set to 90

advert_int 1 # VRRP advertisement (heartbeat) interval; default is 1 second

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.18.100/24

}

track_script {

check_nginx

}

}
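The two configurations above differ only in `state` and `priority`; the `virtual_router_id` is the same on both, which is what places lb01 and lb02 in the same VRRP instance, and the node with the higher priority holds the virtual address. To compare the files on the two machines quickly, the relevant fields can be extracted with awk. This is a sketch only, and `vrrp_summary` is a hypothetical helper name.

```shell
# vrrp_summary is a hypothetical helper.
# It prints "state priority virtual_router_id" from a keepalived.conf,
# assuming a single vrrp_instance block as in the files above.
vrrp_summary() {
    awk '$1 == "state"             { s = $2 }
         $1 == "priority"          { p = $2 }
         $1 == "virtual_router_id" { v = $2 }
         END { print s, p, v }' "$1"
}

# Usage on either load balancer:
#   vrrp_summary /etc/keepalived/keepalived.conf
```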

3. Create the health-check script

[root@lb1 ~]# vim /etc/nginx/check_nginx.sh

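#!/bin/bash

# Count nginx processes, excluding the grep itself and this script's own PID

count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

4. Make the script executable and start the service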

[root@lb1 ~]# chmod +x /etc/nginx/check_nginx.sh

[root@lb1 ~]# systemctl start keepalived

5. View the address information

Address information on lb01

[root@lb1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:ba:e6:18 brd ff:ff:ff:ff:ff:ff

inet 192.168.18.150/24 brd 192.168.18.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.18.100/24 scope global secondary ens33 ## the floating address (VIP) is on lb01

valid_lft forever preferred_lft forever

inet6 fe80::6ec5:6d7:1b18:466e/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::2a3:b621:ca01:463e/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::d4e2:ef9e:6820:145a/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN qlen 1000

link/ether 52:54:00:14:39:99 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN qlen 1000

link/ether 52:54:00:14:39:99 brd ff:ff:ff:ff:ff:ff

Address information on lb02

[root@lb2 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:1d:ec:b0 brd ff:ff:ff:ff:ff:ff

inet 192.168.18.151/24 brd 192.168.18.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::6ec5:6d7:1b18:466e/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::2a3:b621:ca01:463e/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::d4e2:ef9e:6820:145a/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN qlen 1000

link/ether 52:54:00:14:39:99 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN qlen 1000

link/ether 52:54:00:14:39:99 brd ff:ff:ff:ff:ff:ff
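To tell at a glance which load balancer currently holds the floating address, the `ip a` output can be filtered for it instead of being read by eye. A minimal sketch, assuming the VIP 192.168.18.100 and interface ens33 from the configuration above; `holds_vip` is a hypothetical helper name.

```shell
# holds_vip is a hypothetical helper.
# It reads `ip a`-style output on stdin and succeeds (exit status 0)
# only if the given address appears on an "inet" line.
holds_vip() {
    local vip="$1"
    awk -v vip="$vip" '
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit !found }'
}

# Usage on lb01 or lb02:
#   ip -4 addr show ens33 | holds_vip 192.168.18.100 && echo "VIP is on this host"
```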

6. Test failover

Make lb01 fail and verify that the floating address moves

[root@lb1 ~]# pkill nginx

[root@lb1 ~]# systemctl status nginx

● nginx.service - nginx - high performance web server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; disabled; vendor preset: disabled)

Active: failed (Result: exit-code) since Sat 2020-02-08 16:54:45 CST; 11s ago

Docs: http://nginx.org/en/docs/

Process: 13156 ExecStop=/bin/kill -s TERM $MAINPID (code=exited, status=1/FAILURE)

Main PID: 6930 (code=exited, status=0/SUCCESS)

[root@localhost ~]# systemctl status keepalived.service # keepalived has stopped as well, which shows that /etc/nginx/check_nginx.sh took effect

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: inactive (dead)
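keepalived stopped here because check_nginx.sh counted zero nginx processes. The counting step can be tried in isolation by feeding it `ps -ef`-style text. The sketch below mirrors the pipeline from the script; the function name `count_nginx_procs` and the idea of passing the PID as an argument are assumptions made for illustration.

```shell
# count_nginx_procs is a hypothetical helper mirroring check_nginx.sh.
# It reads `ps -ef`-style lines on stdin and prints how many mention
# nginx, ignoring the grep process itself and any line containing the
# PID given as the first argument.
count_nginx_procs() {
    local self_pid="$1"
    # grep -c exits non-zero when the count is 0, so mask that status.
    grep nginx | grep -Ecv "grep|${self_pid}" || true
}

# Usage:
#   ps -ef | count_nginx_procs "$$"
```

Because the PID is matched as a plain substring, a short PID can also exclude unrelated lines that happen to contain the same digits.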

View the addresses on lb01:

[root@lb1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:ba:e6:18 brd ff:ff:ff:ff:ff:ff

inet 192.168.18.150/24 brd 192.168.18.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::6ec5:6d7:1b18:466e/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::2a3:b621:ca01:463e/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::d4e2:ef9e:6820:145a/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN qlen 1000

link/ether 52:54:00:14:39:99 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN qlen 1000

link/ether 52:54:00:14:39:99 brd ff:ff:ff:ff:ff:ff

View the addresses on lb02:

[root@lb2 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:1d:ec:b0 brd ff:ff:ff:ff:ff:ff

inet 192.168.18.151/24 brd 192.168.18.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.18.100/24 scope global secondary ens33 # the floating address has moved to lb02

valid_lft forever preferred_lft forever

inet6 fe80::6ec5:6d7:1b18:466e/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::2a3:b621:ca01:463e/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::d4e2:ef9e:6820:145a/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN qlen 1000

link/ether 52:54:00:14:39:99 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN qlen 1000

link/ether 52:54:00:14:39:99 brd ff:ff:ff:ff:ff:ff

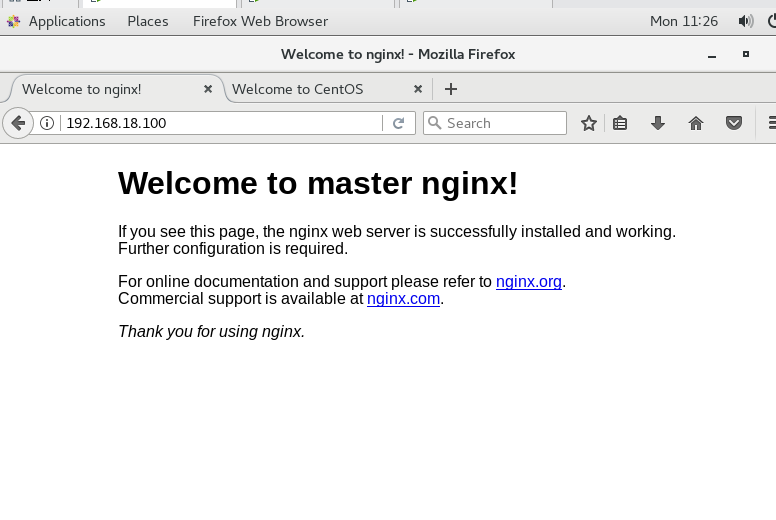

To recover, start the nginx service and then the keepalived service on lb01

[root@localhost ~]# systemctl start nginx

[root@localhost ~]# systemctl start keepalived.service

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:ba:e6:18 brd ff:ff:ff:ff:ff:ff

inet 192.168.18.150/24 brd 192.168.18.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.18.100/24 scope global secondary ens33 # the floating address has moved back to lb01

valid_lft forever preferred_lft forever

inet6 fe80::6ec5:6d7:1b18:466e/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::2a3:b621:ca01:463e/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::d4e2:ef9e:6820:145a/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN qlen 1000

link/ether 52:54:00:14:39:99 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN qlen 1000

link/ether 52:54:00:14:39:99 brd ff:ff:ff:ff:ff:ff

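Because the floating address is now on lb01, the nginx home page displayed when visiting the floating address should be the one containing "master"

Binding the nodes to the VIP address

1. Modify the node configuration files so they all point to the VIP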

[root@localhost ~]# vim /opt/kubernetes/cfg/bootstrap.kubeconfig

[root@localhost ~]# vim /opt/kubernetes/cfg/kubelet.kubeconfig

[root@localhost ~]# vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

# Change the server address in all three files to the VIP

server: https://192.168.18.100:6443
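Editing three files by hand on every node is repetitive, so the same change could be applied with sed. This is a sketch, not the author's method: `set_kubeconfig_server` is a hypothetical helper, and it assumes GNU sed and that each kubeconfig contains a `server: https://...` line like the one above.

```shell
# set_kubeconfig_server is a hypothetical helper.
# Usage: set_kubeconfig_server VIP FILE...
# It rewrites the "server:" URL in each file to https://VIP:6443,
# keeping the original indentation of the line.
set_kubeconfig_server() {
    local vip="$1"; shift
    local f
    for f in "$@"; do
        sed -i -E "s#^([[:space:]]*server:[[:space:]]*)https://[^[:space:]]+#\1https://${vip}:6443#" "$f"
    done
}

# Usage on a node:
#   cd /opt/kubernetes/cfg
#   set_kubeconfig_server 192.168.18.100 bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig
```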

2. After the replacement, verify the change and restart the services

[root@node1 ~]# cd /opt/kubernetes/cfg/

[root@node1 cfg]# grep 100 *

bootstrap.kubeconfig: server: https://192.168.18.100:6443

kubelet.kubeconfig: server: https://192.168.18.100:6443

kube-proxy.kubeconfig: server: https://192.168.18.100:6443

[root@node1 cfg]# systemctl restart kubelet.service

[root@node1 cfg]# systemctl restart kube-proxy.service

3. On lb01, view nginx's k8s log

[root@lb1 ~]# tail /var/log/nginx/k8s-access.log

192.168.18.130 192.168.18.128:6443 - [07/Feb/2020:14:18:54 +0800] 200 1119

192.168.18.130 192.168.18.140:6443 - [07/Feb/2020:14:18:54 +0800] 200 1119

192.168.18.129 192.168.18.128:6443 - [07/Feb/2020:14:18:57 +0800] 200 1120

192.168.18.129 192.168.18.140:6443 - [07/Feb/2020:14:18:57 +0800] 200 1120

4. On master1

# Test creating a pod

[root@master1 ~]# kubectl run nginx --image=nginx

kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.

deployment.apps/nginx created

# Check the status

[root@master1 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-7hdfj 0/1 ContainerCreating 0 32s

# The status is ContainerCreating: the container is still being created

[root@master1 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-7hdfj 1/1 Running 0 73s

# The status is now Running: creation has finished and the pod is running
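Rather than rerunning `kubectl get pods` by hand until the status changes, the STATUS column can be extracted and polled. A minimal sketch, assuming the column layout shown above; `pod_status` is a hypothetical helper name.

```shell
# pod_status is a hypothetical helper.
# It reads `kubectl get pods` output on stdin and prints the STATUS
# column (third field) of the pod whose NAME matches the argument.
pod_status() {
    awk -v name="$1" '$1 == name { print $3 }'
}

# Usage on master1 (polls every 2 seconds until the pod is Running):
#   until [ "$(kubectl get pods | pod_status nginx-dbddb74b8-7hdfj)" = "Running" ]; do
#       sleep 2
#   done
```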

# Note: a problem with viewing logs

[root@master1 ~]# kubectl logs nginx-dbddb74b8-7hdfj

Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy) ( pods/log nginx-dbddb74b8-7hdfj)

# The logs cannot be viewed yet; permission must be granted first

# Bind the cluster's anonymous user to the cluster-admin role

[root@master1 ~]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created

[root@master1 ~]# kubectl logs nginx-dbddb74b8-7hdfj # no error this time

# View the pod network

[root@master1 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-dbddb74b8-7hdfj 1/1 Running 0 20m 172.17.32.2 192.168.18.129 <none>

5. The pod can be accessed directly from node1, which is on the matching subnet

[root@node1 ~]# curl 172.17.32.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# What you see here is the nginx page served from the container. The request generates a log entry, so we can go back to master1 and view the log

[root@master1 ~]# kubectl logs nginx-dbddb74b8-7hdfj

172.17.32.1 - - [07/Feb/2020:06:52:53 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"

# The log now shows the request from node1, which arrived via the gateway (172.17.32.1)