Lab environment: RHEL 6.5, with iptables and SELinux disabled

Lab hosts: 192.168.2.251 (luci node)

192.168.2.137 and 192.168.2.138 (ricci nodes)

All three hosts must have yum repositories configured that include the High Availability channels:

[base]

name=Instructor Server Repository

baseurl=http://192.168.2.251/pub/rhel6.5

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

# HighAvailability rhel6.5

[HighAvailability]

name=Instructor HighAvailability Repository

baseurl=http://192.168.2.251/pub/rhel6.5/HighAvailability

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

# LoadBalancer packages

[LoadBalancer]

name=Instructor LoadBalancer Repository

baseurl=http://192.168.2.251/pub/rhel6.5/LoadBalancer

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

# ResilientStorage

[ResilientStorage]

name=Instructor ResilientStorage Repository

baseurl=http://192.168.2.251/pub/rhel6.5/ResilientStorage

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

# ScalableFileSystem

[ScalableFileSystem]

name=Instructor ScalableFileSystem Repository

baseurl=http://192.168.2.251/pub/rhel6.5/ScalableFileSystem

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
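The same repository file has to exist on all three hosts. The following is a minimal sketch that writes it from a script. The function name and the target file name (for example /etc/yum.repos.d/rhel-ha.repo) are hypothetical. The base URL is the lab's. Every channel gets the same gpgcheck/gpgkey settings.

```shell
# Hypothetical helper: write the repo definitions above to the file given as $1.
# $2 optionally overrides the base URL used in this lab.
write_rhcs_repo() {
    local out=$1 base=${2:-http://192.168.2.251/pub/rhel6.5} ch
    local key=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
    {
        # [base] points at the top of the installation tree
        printf '[base]\nname=Instructor Server Repository\nbaseurl=%s\ngpgcheck=1\ngpgkey=%s\n\n' \
            "$base" "$key"
        # The add-on channels live in subdirectories of the same tree
        for ch in HighAvailability LoadBalancer ResilientStorage ScalableFileSystem; do
            printf '[%s]\nname=Instructor %s Repository\nbaseurl=%s/%s\ngpgcheck=1\ngpgkey=%s\n\n' \
                "$ch" "$ch" "$base" "$ch" "$key"
        done
    } > "$out"
}
```

Usage, as root on each host: `write_rhcs_repo /etc/yum.repos.d/rhel-ha.repo && yum clean all && yum repolist`.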

In addition, the clocks of all three hosts must be synchronized.

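Set up name resolution (for example in /etc/hosts). On 192.168.2.251, add entries for itself and the other two hosts. On 137 and 138, add entries for themselves and for each other.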
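A small idempotent helper makes it safe to re-run the /etc/hosts setup on each machine. This is a sketch only. The post does not name the hosts, so any host names you pass in (such as node137.example.com) are hypothetical.

```shell
# Append "IP<TAB>NAME" to a hosts file unless that IP is already the first
# field of some line, so running it twice does not create duplicates.
add_host_entry() {
    local ip=$1 name=$2 file=${3:-/etc/hosts}
    if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
        printf '%s\t%s\n' "$ip" "$name" >> "$file"
    fi
}
```

Usage on 251, with made-up names: `add_host_entry 192.168.2.137 node137.example.com`, then the same call for .138 and .251.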

On 137 and 138, install ricci with yum install ricci -y. On 251, install luci with yum install luci -y.

After installation, on 137 and 138, set a password for the ricci user, start ricci, and enable it at boot:

#passwd ricci

#/etc/init.d/ricci start

#chkconfig ricci on

On 251, enable luci at boot:

#chkconfig luci on
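The ricci steps can also be driven from 251 over SSH. The sketch below assumes several things: root SSH access to the nodes, and a password supplied in a hypothetical RICCI_PASSWORD environment variable. It also relies on `passwd --stdin`, a RHEL-specific passwd option, to read that password from SSH's standard input.

```shell
# Hypothetical helper run from 251: install, set up and start ricci on one node.
setup_ricci_node() {
    local node=$1
    [ -n "$RICCI_PASSWORD" ] || { echo "RICCI_PASSWORD is not set" >&2; return 1; }
    # -n keeps ssh from consuming this script's stdin
    ssh -n "root@$node" 'yum install -y ricci' || return 1
    # The password travels over the SSH channel, not on a command line
    printf '%s\n' "$RICCI_PASSWORD" | ssh "root@$node" 'passwd --stdin ricci' || return 1
    ssh -n "root@$node" '/etc/init.d/ricci start && chkconfig ricci on'
}
```

Usage: `for n in 192.168.2.137 192.168.2.138; do setup_ricci_node "$n"; done`.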

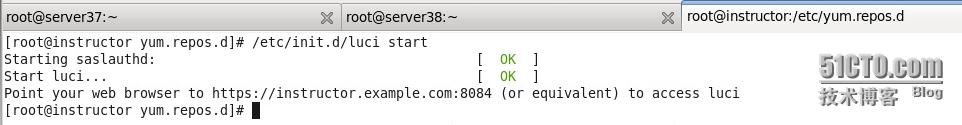

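Start luci on 251 (/etc/init.d/luci start). Its output, shown above, includes the URL of the web interface.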

Then open that URL in Firefox. By default luci serves its interface over HTTPS on port 8084.

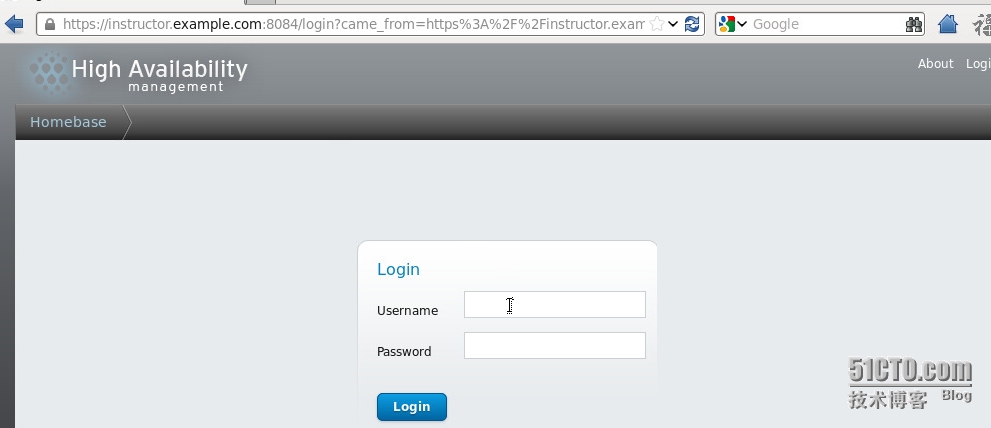

Log in to the web configuration interface with the root user and its password.

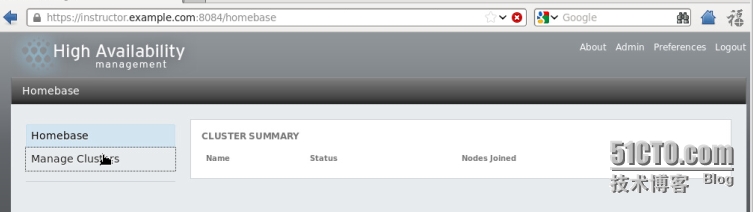

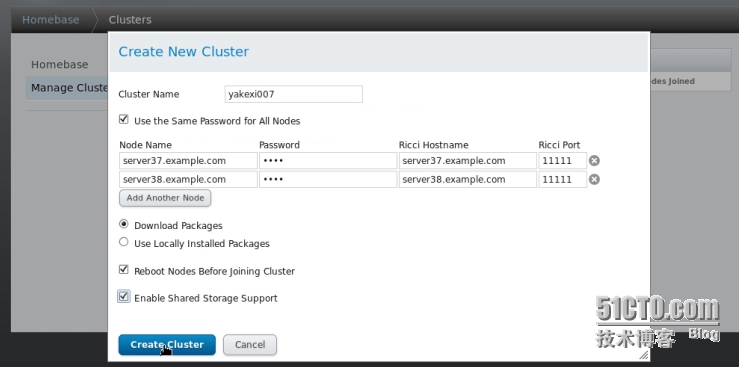

Create the cluster

While the cluster is being created, luci automatically installs the required packages on the nodes, and both nodes reboot.

Once creation has finished, make sure the following services are running:

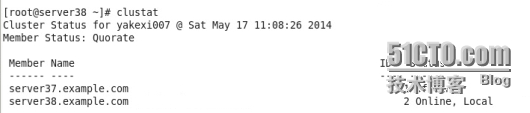

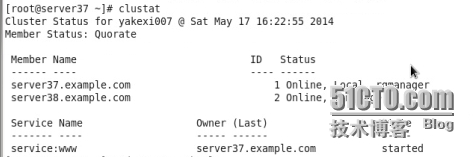
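The screenshots list the services to check. This minimal sketch checks the same thing from a shell. Its default list (cman, rgmanager, ricci, modclusterd) is an assumption based on a typical RHEL 6 HA node; replace it with the services shown above.

```shell
# Print OK/STOPPED for each service and return non-zero if any is stopped.
# The default service list is an assumption; pass your own names as arguments.
check_cluster_services() {
    local svc failed=0
    for svc in ${*:-cman rgmanager ricci modclusterd}; do
        if service "$svc" status >/dev/null 2>&1; then
            printf '%-8s%s\n' OK "$svc"
        else
            printf '%-8s%s\n' STOPPED "$svc"
            failed=1
        fi
    done
    return $failed
}
```

Run it on both 137 and 138. Use `chkconfig --list <service>` to confirm each one is also enabled at boot.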

On the command line, use clustat to check the membership status of the two nodes:

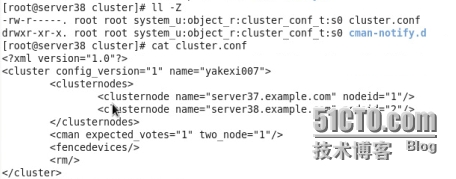

On both 137 and 138, cluster.conf and the cman-notify.d directory are generated automatically under /etc/cluster.
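To check which nodes the generated cluster.conf declares, a quick sed sketch is often enough. It assumes each `<clusternode .../>` element sits on a line of its own. Use a real XML tool if the file is laid out differently. Once cman is running, `cman_tool nodes` shows live membership.

```shell
# Print the name attribute of every <clusternode> element, one per line.
# Assumes one <clusternode> element per line; <clusternodes> lines do not match.
list_cluster_nodes() {
    sed -n 's/.*<clusternode[^>]*name="\([^"]*\)".*/\1/p' "${1:-/etc/cluster/cluster.conf}"
}
```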

Add a fence (isolation) device

On 251, install the fence-virtd, fence-virtd-libvirt and fence-virtd-multicast packages with yum, then run the interactive configuration:

#fence_virtd -c

Module search path [/usr/lib64/fence-virt]:

Available backends:

libvirt 0.1

Available listeners:

multicast 1.0

Listener modules are responsible for accepting requests

from fencing clients.

Listener module [multicast]:

The multicast listener module is designed for use environments

where the guests and hosts may communicate over a network using

multicast.

The multicast address is the address that a client will use to

send fencing requests to fence_virtd.

Multicast IP Address [225.0.0.12]:

Using ipv4 as family.

Multicast IP Port [1229]:

Setting a preferred interface causes fence_virtd to listen only

on that interface. Normally, it listens on all interfaces.

In environments where the virtual machines are using the host

machine as a gateway, this *must* be set (typically to virbr0).

Set to 'none' for no interface.

Interface [none]: br0

The key file is the shared key information which is used to

authenticate fencing requests. The contents of this file must

be distributed to each physical host and virtual machine within

a cluster.

Key File [/etc/cluster/fence_xvm.key]:

Backend modules are responsible for routing requests to

the appropriate hypervisor or management layer.

Backend module [libvirt]:

The libvirt backend module is designed for single desktops or

servers. Do not use in environments where virtual machines

may be migrated between hosts.

Libvirt URI [qemu:///system]:

Configuration complete.

=== Begin Configuration ===

backends {

libvirt {

uri = "qemu:///system";

}

}

listeners {

multicast {

key_file = "/etc/cluster/fence_xvm.key";

interface = "br0";

port = "1229";

address = "225.0.0.12";

family = "ipv4";

}

}

fence_virtd {

backend = "libvirt";

listener = "multicast";

module_path = "/usr/lib64/fence-virt";

}

=== End Configuration ===

Replace /etc/fence_virt.conf with the above [y/N]? y

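The only non-default answers are the ones typed in above: br0 for the interface, and y to replace /etc/fence_virt.conf. At every other prompt, just press Enter to accept the default.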

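Then generate the key file in /etc/cluster on 251 with the following command. If the directory does not exist, create it first with mkdir -p /etc/cluster.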

#dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1

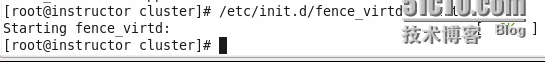

Copy the generated fence_xvm.key with scp to /etc/cluster on 137 and 138, then start fence_virtd on 251 (/etc/init.d/fence_virtd start):

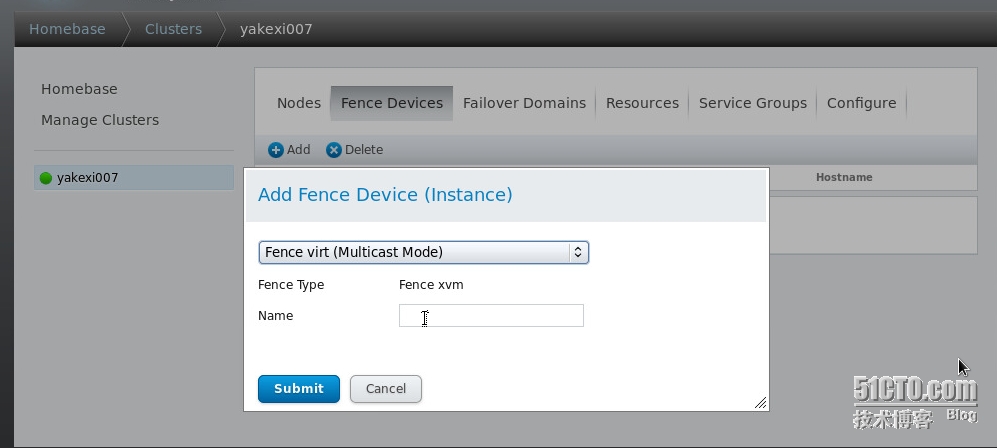
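Key generation and distribution can be combined in one re-runnable step. This is a hypothetical sketch for 251. It assumes root scp access to the nodes and creates the key only if it is missing, so an existing key is never overwritten.

```shell
# Hypothetical helper: ensure a 128-byte fence_xvm key exists at $1,
# then copy it to /etc/cluster/ on every node listed after it.
distribute_fence_key() {
    local key=$1 node
    shift
    if [ ! -s "$key" ]; then
        mkdir -p "$(dirname "$key")" || return 1
        # Same dd invocation as above: 128 random bytes
        dd if=/dev/urandom of="$key" bs=128 count=1 2>/dev/null || return 1
    fi
    for node in "$@"; do
        scp "$key" "root@$node:/etc/cluster/" || return 1
    done
}
```

Usage: `distribute_fence_key /etc/cluster/fence_xvm.key 192.168.2.137 192.168.2.138 && /etc/init.d/fence_virtd start && chkconfig fence_virtd on`.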

In the web interface, add a device under Fence Devices:

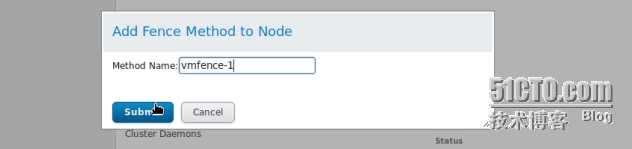

Then open each cluster node and add a fence method:

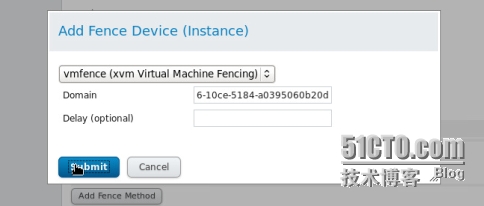

Then add a fence instance.

The Domain field in the screenshot is the libvirt UUID of that node's virtual machine.

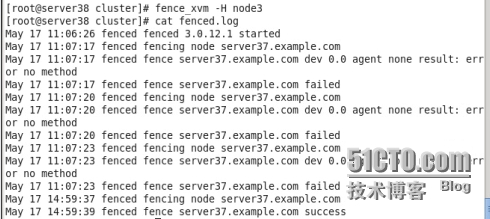
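The UUIDs for the Domain field can be read on the KVM host (251) with `virsh domuuid`. This is a sketch only: the libvirt domain names of the two node VMs are not given in the post, so pass whatever `virsh list --all` shows.

```shell
# Hypothetical helper: print "<domain> <uuid>" for each libvirt domain name given.
print_domain_uuids() {
    local dom uuid
    for dom in "$@"; do
        uuid=$(virsh domuuid "$dom") || return 1
        printf '%s %s\n' "$dom" "$uuid"
    done
}
```

From a cluster node, `fence_xvm -o list` should show the same domains once the key and fence_virtd are in place.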

Test the setup by fencing one of the nodes (for example with fence_node), then check the fence log under /var/log/cluster/ (cat fenced.log):

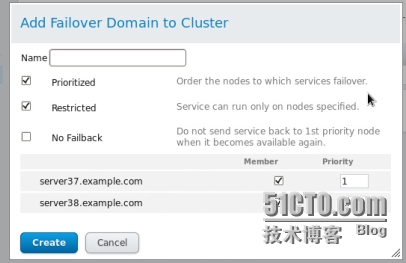
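The fence test can be wrapped so that the result and the log tail show up together. This is a minimal sketch to run on the surviving node. It uses `fence_node` from the cman stack, and it assumes the log is /var/log/cluster/fenced.log; pass another path if yours differs.

```shell
# Hypothetical test helper: fence the named node, report the result,
# then show the last lines of the fence log if it is readable.
fence_test() {
    local victim=$1 log=${2:-/var/log/cluster/fenced.log}
    if fence_node "$victim"; then
        echo "fence_node reported success for $victim"
    else
        echo "fence_node failed for $victim" >&2
        return 1
    fi
    if [ -r "$log" ]; then
        tail -n 20 "$log"
    fi
}
```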

Add a failover domain. The name can be anything you like.

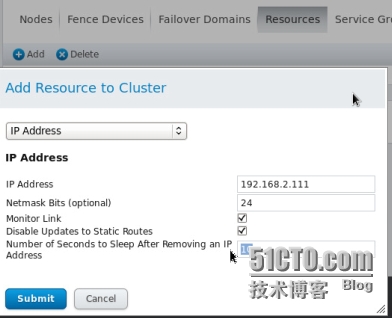

Add resources

Floating IP (virtual IP address)

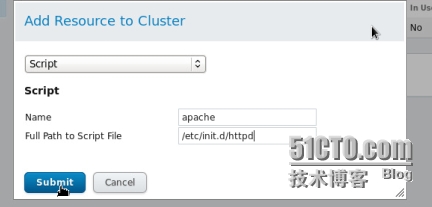

Add a script resource

Install httpd on both 137 and 138.

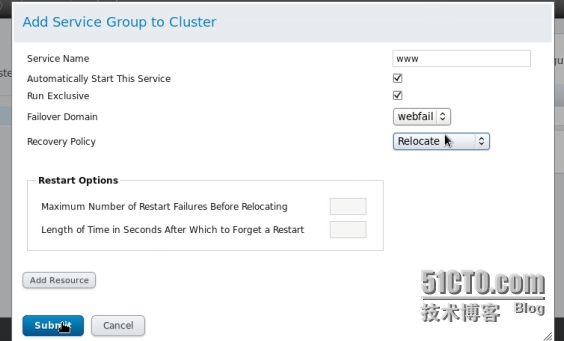

Add a service group

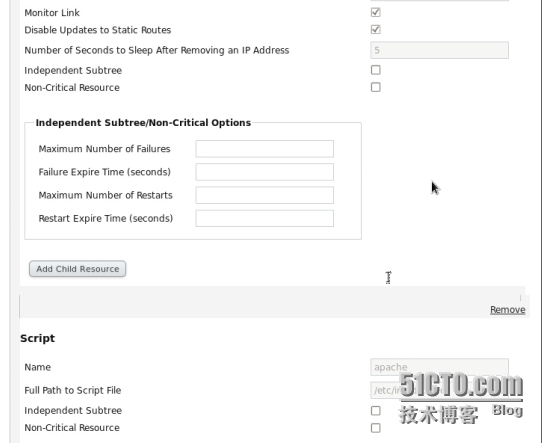

Add all of the resources to the group.

Then start the group you just added. Whichever node the group runs on will have its httpd started automatically.

On 137, write a test file in /var/www/html/:

#echo `hostname` > index.html

Then open the floating IP bound earlier, 192.168.2.111, in a browser, and you should see the test file you just wrote.

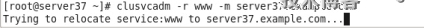
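The browser check can be scripted as well. Because the test page contains the output of `hostname`, comparing the body with a node's host name shows which node answered. This sketch assumes curl is installed on the machine you test from.

```shell
# Fetch http://<vip>/ and compare the body with the expected host name.
check_vip() {
    local vip=$1 expected=$2 body
    body=$(curl -s --max-time 5 "http://$vip/") || return 1
    if [ "$body" = "$expected" ]; then
        echo "$vip is served by $expected"
    else
        echo "$vip returned '$body', expected '$expected'" >&2
        return 1
    fi
}
```

Usage: `check_vip 192.168.2.111 "<host name of 137>"`.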

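Use clusvcadm -e www and clusvcadm -d www to enable and disable the www service group.

To migrate (relocate) the service group to the other node, use clusvcadm -r www -m <node name>.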
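A relocation followed by a status check fits in two lines. This is a minimal sketch: `clusvcadm -r` relocates a running service, `-m` names the target member, and `clustat -s` shows one service's state. The member name you pass must match the node name in cluster.conf.

```shell
# Relocate a service group to another member, then show its new state.
relocate_service() {
    local svc=$1 member=$2
    clusvcadm -r "$svc" -m "$member" || return 1
    clustat -s "$svc"
}
```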

Add shared storage (iSCSI)

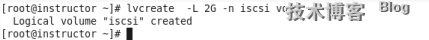

On 251, create an LVM logical volume to be shared.

On 251, install the iSCSI target software:

#yum install scsi-target-utils.x86_64 -y

On 137 and 138, install the iSCSI initiator:

#yum install iscsi-initiator-utils -y

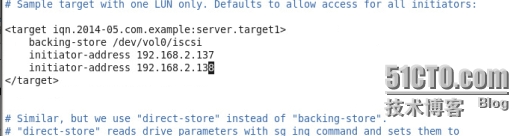

Then edit /etc/tgt/targets.conf to export the logical volume.

Then start tgtd:

#/etc/init.d/tgtd start

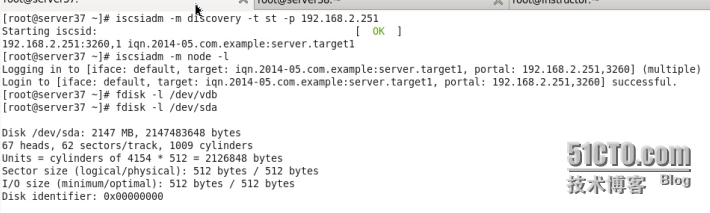
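The targets.conf entry itself is short. This hypothetical helper appends one target block in the format scsi-target-utils reads. The IQN and the LV path in the usage line are made-up examples, because the post does not show them. The initiator-address lines restrict access to the two cluster nodes.

```shell
# Hypothetical helper: append a <target> block exporting one device to the
# listed initiator IPs. Arguments: conf file, IQN, backing device, IPs...
write_tgt_target() {
    local conf=$1 iqn=$2 dev=$3 ip
    shift 3
    {
        printf '<target %s>\n' "$iqn"
        printf '    backing-store %s\n' "$dev"
        # Only these initiator addresses may log in
        for ip in "$@"; do
            printf '    initiator-address %s\n' "$ip"
        done
        printf '</target>\n'
    } >> "$conf"
}
```

Usage (example names): `write_tgt_target /etc/tgt/targets.conf iqn.2014-01.com.example:server251.disk1 /dev/vg0/iscsi 192.168.2.137 192.168.2.138`. After restarting tgtd, `tgt-admin --show` lists what is exported.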

On 137 and 138, use iscsiadm to discover and log in to the shared disk:

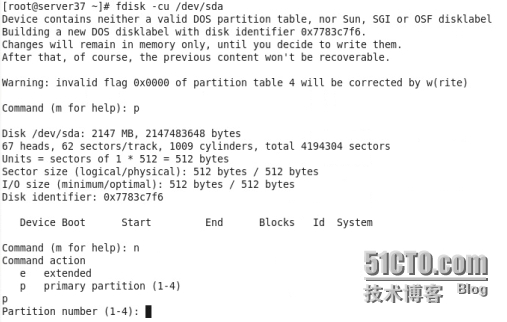
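Discovery and login are two iscsiadm calls. This minimal sketch runs them against 251 by default. After login, the shared disk appears as a new SCSI disk (/dev/sda in this post), which `fdisk -l` can confirm.

```shell
# Discover the targets offered by a portal, then log in to all of them.
iscsi_attach() {
    local portal=${1:-192.168.2.251}
    iscsiadm -m discovery -t sendtargets -p "$portal" || return 1
    iscsiadm -m node -p "$portal" -l
}
```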

Create a primary partition on this disk, working from one node only.

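When partitioning is done, format it with mkfs.ext4 /dev/sda1. ext4 is not a cluster file system, so it must be mounted on only one node at a time. Once it is a service resource, rgmanager handles this.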

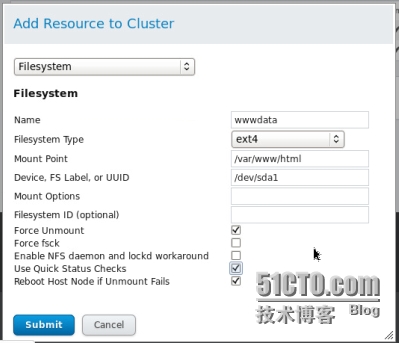

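In the web interface, add a Filesystem resource for this partition.

As before, add it to the service group, then stop the service group: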

#clusvcadm -d www

Now mount the device on /var/www/html first.

Start the service:

#clusvcadm -e www

Check with clustat that the service started successfully:

#clustat

Shared cluster file system: GFS2

First stop the service group:

#clusvcadm -d www

Then delete the wwwdata resource in the web interface.

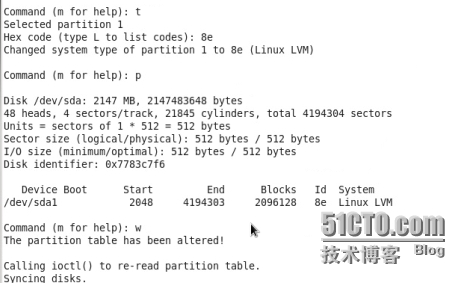

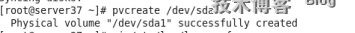

On the shared disk, create a partition with type ID 8e (Linux LVM).

Then turn it into an LVM volume.

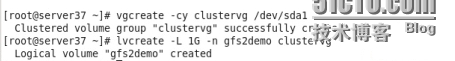
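The LVM steps can be run from one node as follows. This is a sketch only: the partition, VG and LV names and the size are hypothetical examples. `vgcreate -cy` marks the volume group as clustered, which requires clvmd running on both nodes and cluster locking enabled in lvm.conf.

```shell
# Hypothetical helper, run on ONE node: PV -> clustered VG -> LV.
# Arguments: partition, VG name, LV name, size (e.g. 2G).
make_clustered_lv() {
    local part=$1 vg=$2 lv=$3 size=$4
    pvcreate "$part" || return 1
    vgcreate -cy "$vg" "$part" || return 1
    lvcreate -L "$size" -n "$lv" "$vg"
}
```

Usage (example names): `make_clustered_lv /dev/sda2 clustervg demo 2G`. Then run `lvs` on the other node to confirm it sees the new LV.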

Configure /etc/lvm/lvm.conf:

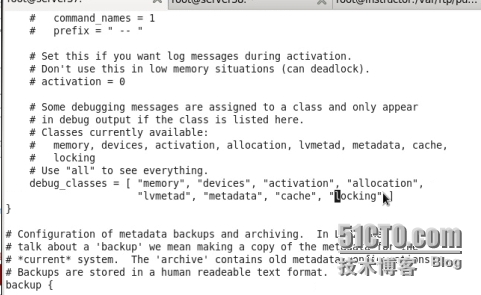
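For clustered volume groups, the setting that matters in lvm.conf is cluster-wide locking (`locking_type = 3`). On RHEL 6, `lvmconf --enable-cluster` from the lvm2-cluster package sets it. This sketch only checks that an uncommented setting is present.

```shell
# Succeed if the given lvm.conf has an active "locking_type = 3" line.
has_cluster_locking() {
    grep -Eq '^[[:space:]]*locking_type[[:space:]]*=[[:space:]]*3([[:space:]]|$)' \
        "${1:-/etc/lvm/lvm.conf}"
}
```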

Format the GFS2 volume and mount it.

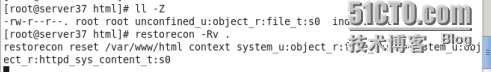
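The mkfs.gfs2 options are easy to get wrong, so here is a small hypothetical wrapper. `-p lock_dlm` selects cluster locking. `-t` must be `<cluster name>:<fs name>`, where the cluster name matches cluster.conf. `-j` creates one journal per node that will mount the file system (2 here). The device, cluster and file system names in the usage line are examples only.

```shell
# Hypothetical wrapper: create GFS2 on a clustered LV.
# Arguments: device, cluster name, fs name, journal count (default 2).
make_gfs2() {
    local dev=$1 cluster=$2 fsname=$3 journals=${4:-2}
    mkfs.gfs2 -p lock_dlm -t "$cluster:$fsname" -j "$journals" "$dev"
}
```

Usage (example names): `make_gfs2 /dev/clustervg/demo mycluster web`, then `mount -t gfs2 /dev/clustervg/demo /var/www/html` on each node.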

Set the SELinux security context:

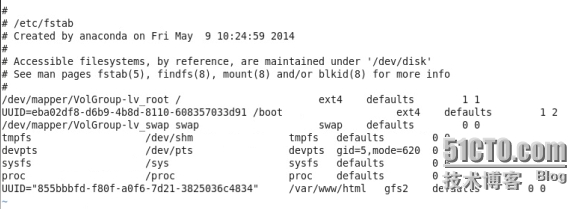

Add this volume to /etc/fstab:

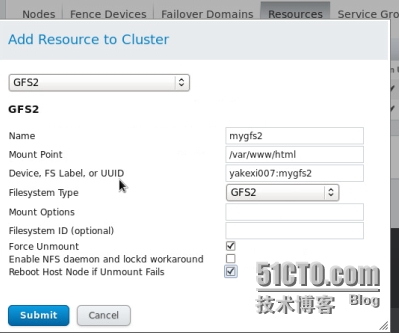

In the web interface, add a GFS2 (Global File System 2) resource and add it to the service group.

That essentially completes the RHCS configuration!