I. Test Environment Preparation

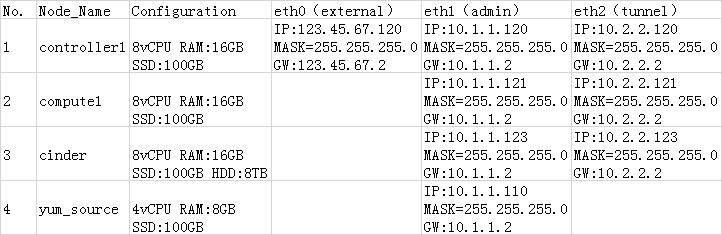

- Host node preparation and network planning

My physical node is a tower server with 40 CPU cores, 64 GB of RAM, an 800 GB SSD and a 4 TB HDD.

Operating system: Windows 7 x64

Virtualization software: VMware Workstation 11

- System environment preparation

-- Minimal installation of CentOS 7.2 (CentOS-7-x86_64-Minimal-1511.iso)

-- Disable the firewall

systemctl stop firewalld.service

systemctl disable firewalld.service

-- Disable SELinux

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

- Set the hostname on each of the three nodes (controller1, compute1 and cinder respectively)

hostnamectl set-hostname controller1

hostnamectl set-hostname compute1

hostnamectl set-hostname cinder

Then configure the /etc/hosts file on every node:

10.1.1.120 controller1

10.1.1.121 compute1

10.1.1.122 cinder

- Install the required packages

yum -y install ntp vim-enhanced wget bash-completion net-tools

- Synchronize the system time with NTP

ntpdate cn.pool.ntp.org

To keep the time synchronized automatically, edit ntp.conf (vim /etc/ntp.conf) and add the server line:

server cn.pool.ntp.org

- Set up a local OpenStack yum repository

See my separate article on building a local yum repository for OpenStack Ocata.
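The SELinux and /etc/hosts edits above are easy to apply twice by accident, for example when the preparation is re-run on a node. The sketch below shows idempotent versions of both. The function names are hypothetical, and the file paths are parameters so the functions can be tried on copies before being pointed at /etc/selinux/config and /etc/hosts; setenforce 0 is still needed for the running system.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: idempotent versions of the SELinux and /etc/hosts edits.
set -euo pipefail

# Turn "SELINUX=enforcing" (or "permissive") into "SELINUX=disabled".
# A second run finds nothing to change.
disable_selinux_config() {
    local cfg=$1
    sed -i -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$cfg"
}

# Append the three node entries, skipping any hostname that is already mapped.
ensure_hosts() {
    local hosts_file=$1 entry name
    for entry in "10.1.1.120 controller1" "10.1.1.121 compute1" "10.1.1.122 cinder"; do
        name=${entry##* }   # the hostname is the last word of the entry
        if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
            echo "$entry" >> "$hosts_file"
        fi
    done
}
```

On a real node the calls would be disable_selinux_config /etc/selinux/config and ensure_hosts /etc/hosts.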

II. Install MariaDB

- Install MariaDB

yum install -y mariadb-server mariadb

- Configure MariaDB

Create /etc/my.cnf.d/mariadb-openstack.cnf (vim /etc/my.cnf.d/mariadb-openstack.cnf) with the following content:

[mysqld]

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

init-connect = 'SET NAMES utf8'

character-set-server = utf8

bind-address = 10.1.1.120

- Start the database and enable MariaDB at boot

systemctl start mariadb.service

systemctl enable mariadb.service

systemctl status mariadb.service

systemctl list-unit-files | grep mariadb.service

- Initialize MariaDB and set the root password

mysql_secure_installation
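Every service installed later in this guide (Keystone, Glance, Nova, Neutron, Cinder) repeats the same CREATE DATABASE statement plus two GRANT statements, one for localhost and one for '%'. A small generator keeps those statements consistent. This is a sketch with a hypothetical service_db_sql helper; it assumes its output is piped into mysql -uroot -p on controller1, and it adds IF NOT EXISTS so a re-run does not fail on an existing database.

```shell
#!/usr/bin/env bash
set -euo pipefail

# usage: service_db_sql <db_user> <password> <database>...
# Prints SQL only; nothing is executed against MariaDB.
service_db_sql() {
    local user=$1 pass=$2 db host
    shift 2
    for db in "$@"; do
        echo "CREATE DATABASE IF NOT EXISTS ${db};"
        for host in localhost '%'; do
            echo "GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'${host}' IDENTIFIED BY '${pass}';"
        done
    done
    echo "FLUSH PRIVILEGES;"
}
```

For example, service_db_sql nova xuml26 nova nova_api nova_cell0 | mysql -uroot -p would cover the three Nova databases in one go.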

III. Install RabbitMQ

- Install RabbitMQ

yum install -y erlang rabbitmq-server

- Start RabbitMQ and enable it at boot

systemctl start rabbitmq-server.service

systemctl enable rabbitmq-server.service

systemctl status rabbitmq-server.service

systemctl list-unit-files | grep rabbitmq-server.service

- Create the RabbitMQ user openstack

rabbitmqctl add_user openstack xuml26

- Grant permissions to the openstack user

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

rabbitmqctl set_user_tags openstack administrator

rabbitmqctl list_users

- Verify that RabbitMQ is listening on port 5672

netstat -ntlp | grep 5672

- List the RabbitMQ plugins

/usr/lib/rabbitmq/bin/rabbitmq-plugins list

- Enable the required RabbitMQ plugins

/usr/lib/rabbitmq/bin/rabbitmq-plugins enable rabbitmq_management mochiweb webmachine rabbitmq_web_dispatch amqp_client rabbitmq_management_agent

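After enabling the plugins, restart the RabbitMQ service:

systemctl restart rabbitmq-server

Then open http://123.45.67.120:15672 in a browser and log in as openstack/xuml26. The default guest/guest account also exists, but RabbitMQ accepts it only from localhost, so it works only in a browser running on the server itself.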

This interface gives a clear, direct view of RabbitMQ's status and load.
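The check netstat -ntlp | grep 5672 used above can give a false positive: the pattern also matches 15672 (the management UI) and 25672 (Erlang distribution). A stricter test compares only the port field of the local-address column. This is a sketch with a hypothetical port_listening helper; it assumes the standard netstat -ntlp column order (local address in column 4, state in column 6).

```shell
#!/usr/bin/env bash
set -euo pipefail

# usage: netstat -ntlp | port_listening 5672
# Exit status 0 if some LISTEN socket's local port is exactly the given port.
port_listening() {
    local port=$1
    awk -v p="$port" '
        $6 == "LISTEN" {
            # the port is the text after the last ":" (works for IPv4 and IPv6)
            n = split($4, parts, ":")
            if (parts[n] == p) found = 1
        }
        END { exit (found ? 0 : 1) }
    '
}
```

The same helper works for the other ports checked in this guide, such as 11211 for memcached.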

IV. Install and Configure Keystone

1. Create the keystone database

mysql -uroot -p

CREATE DATABASE keystone;

2. Create the keystone database user and grant privileges

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'xuml26';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'xuml26';

3. Install Keystone and memcached

yum -y install openstack-keystone httpd mod_wsgi python-openstackclient memcached python-memcached openstack-utils

4. Start memcached and enable it at boot

systemctl start memcached.service

systemctl enable memcached.service

systemctl status memcached.service

systemctl list-unit-files | grep memcached.service

netstat -ntlp | grep 11211

5. Configure /etc/keystone/keystone.conf

cp /etc/keystone/keystone.conf /etc/keystone/keystone.conf.bak

>/etc/keystone/keystone.conf

openstack-config --set /etc/keystone/keystone.conf DEFAULT transport_url rabbit://openstack:xuml26@controller1

openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:xuml26@controller1/keystone

openstack-config --set /etc/keystone/keystone.conf cache backend oslo_cache.memcache_pool

openstack-config --set /etc/keystone/keystone.conf cache enabled true

openstack-config --set /etc/keystone/keystone.conf cache memcache_servers controller1:11211

openstack-config --set /etc/keystone/keystone.conf memcache servers controller1:11211

openstack-config --set /etc/keystone/keystone.conf token expiration 3600

openstack-config --set /etc/keystone/keystone.conf token provider fernet

6. Configure httpd.conf

sed -i "s/#ServerName www.example.com:80/ServerName controller1/" /etc/httpd/conf/httpd.conf

Configure memcached:

sed -i 's/OPTIONS*.*/OPTIONS="-l 127.0.0.1,::1,10.1.1.120"/' /etc/sysconfig/memcached

7. Link Keystone into httpd

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

8. Synchronize the database

su -s /bin/sh -c "keystone-manage db_sync" keystone

9. Initialize the Fernet key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

10. Start httpd and enable it at boot

systemctl start httpd.service

systemctl enable httpd.service

systemctl status httpd.service

11. Create the admin user and role

keystone-manage bootstrap --bootstrap-password xuml26 --bootstrap-username admin --bootstrap-project-name admin --bootstrap-role-name admin --bootstrap-service-name keystone --bootstrap-region-id RegionOne --bootstrap-admin-url http://controller1:35357/v3 --bootstrap-internal-url http://controller1:35357/v3 --bootstrap-public-url http://controller1:5000/v3

Verify:

openstack project list --os-username admin --os-project-name admin --os-user-domain-id default --os-project-domain-id default --os-identity-api-version 3 --os-auth-url http://controller1:5000 --os-password xuml26
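If this verification fails with a token error, one thing worth checking is whether step 9 actually populated the Fernet key repository: keystone-manage fernet_setup creates at least the keys 0 and 1 in the repository. The sketch below uses a hypothetical check_fernet_repo helper, assumes the default repository path /etc/keystone/fernet-keys, and only counts the numbered key files; it does not validate their contents or ownership.

```shell
#!/usr/bin/env bash
set -euo pipefail

# usage: check_fernet_repo [key_repository]
# Hypothetical check: the repository exists and holds at least two numbered keys.
check_fernet_repo() {
    local dir=${1:-/etc/keystone/fernet-keys} count
    if [ ! -d "$dir" ]; then
        echo "missing key repository: $dir" >&2
        return 1
    fi
    count=$(find "$dir" -maxdepth 1 -type f -name '[0-9]*' | wc -l | tr -d ' ')
    if [ "$count" -lt 2 ]; then
        echo "expected at least 2 keys in $dir, found $count" >&2
        return 1
    fi
    echo "fernet keys: $count"
}
```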

12. Create the admin environment variables file:

Create /root/admin-openrc (vim /root/admin-openrc) with the following content:

export OS_USER_DOMAIN_ID=default

export OS_PROJECT_DOMAIN_ID=default

export OS_USERNAME=admin

export OS_PROJECT_NAME=admin

export OS_PASSWORD=xuml26

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

export OS_AUTH_URL=http://controller1:35357/v3

13. Create the service project

source /root/admin-openrc

openstack project create --domain default --description "Service Project" service

14. Create the demo project

openstack project create --domain default --description "Demo Project" demo

15. Create the demo user

openstack user create --domain default demo --password xuml26

16. Create the user role and assign it to the demo user

openstack role create user

openstack role add --project demo --user demo user

17. Verify Keystone

unset OS_TOKEN OS_URL

openstack --os-auth-url http://controller1:35357/v3 --os-project-domain-name default --os-user-domain-name default --os-project-name admin --os-username admin token issue --os-password xuml26

openstack --os-auth-url http://controller1:5000/v3 --os-project-domain-name default --os-user-domain-name default --os-project-name demo --os-username demo token issue --os-password xuml26
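The admin-openrc file from step 12 can be generalized so that the demo user gets its own credentials file as well. This is a sketch with a hypothetical write_openrc helper; it writes the same variables as /root/admin-openrc and assumes a demo file that points at the public endpoint on port 5000, mirroring the second token issue command above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# usage: write_openrc <path> <user> <project> <password> <auth_url>
write_openrc() {
    local path=$1 user=$2 project=$3 password=$4 auth_url=$5
    cat > "$path" <<EOF
export OS_USER_DOMAIN_ID=default
export OS_PROJECT_DOMAIN_ID=default
export OS_USERNAME=${user}
export OS_PROJECT_NAME=${project}
export OS_PASSWORD=${password}
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
export OS_AUTH_URL=${auth_url}
EOF
    # The file contains a password, so keep it readable by its owner only.
    chmod 600 "$path"
}
```

For example, write_openrc /root/demo-openrc demo demo xuml26 http://controller1:5000/v3, followed by source /root/demo-openrc and openstack token issue.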

V. Install and Configure Glance

1. Create the glance database

CREATE DATABASE glance;

2. Create the database user and grant privileges

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'xuml26';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'xuml26';

3. Create the glance user and give it the admin role

source /root/admin-openrc

openstack user create --domain default glance --password xuml26

openstack role add --project service --user glance admin

4. Create the image service

openstack service create --name glance --description "OpenStack Image service" image

5. Create the Glance endpoints

openstack endpoint create --region RegionOne image public http://controller1:9292

openstack endpoint create --region RegionOne image internal http://controller1:9292

openstack endpoint create --region RegionOne image admin http://controller1:9292

6. Install the Glance packages

yum -y install openstack-glance

7. Edit the Glance configuration file /etc/glance/glance-api.conf

cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf.bak

>/etc/glance/glance-api.conf

openstack-config --set /etc/glance/glance-api.conf DEFAULT transport_url rabbit://openstack:xuml26@controller1

openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql://glance:xuml26@controller1/glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_uri http://controller1:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_url http://controller1:35357

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers controller1:11211

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken password xuml26

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

openstack-config --set /etc/glance/glance-api.conf glance_store stores file,http

openstack-config --set /etc/glance/glance-api.conf glance_store default_store file

openstack-config --set /etc/glance/glance-api.conf glance_store filesystem_store_datadir /var/lib/glance/images/

8. Edit the Glance configuration file /etc/glance/glance-registry.conf:

cp /etc/glance/glance-registry.conf /etc/glance/glance-registry.conf.bak

>/etc/glance/glance-registry.conf

openstack-config --set /etc/glance/glance-registry.conf DEFAULT transport_url rabbit://openstack:xuml26@controller1

openstack-config --set /etc/glance/glance-registry.conf database connection mysql+pymysql://glance:xuml26@controller1/glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_uri http://controller1:5000

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_url http://controller1:35357

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken memcached_servers controller1:11211

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken password xuml26

openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone

9. Synchronize the Glance database

su -s /bin/sh -c "glance-manage db_sync" glance

10. Start Glance and enable it at boot

systemctl enable openstack-glance-api.service openstack-glance-registry.service

systemctl start openstack-glance-api.service openstack-glance-registry.service

systemctl status openstack-glance-api.service openstack-glance-registry.service

systemctl list-unit-files | grep openstack-glance-api.service

systemctl list-unit-files | grep openstack-glance-registry.service

12. Download a test image

wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

13. Upload the image to Glance

source /root/admin-openrc
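The upload command below declares --disk-format qcow2, so it may be worth confirming first that wget really fetched a qcow2 file and not, say, an HTML error page. This sketch uses a hypothetical is_qcow2 helper that compares the file's first four bytes with the qcow2 magic number "QFI\xfb"; it checks only the header, not the integrity of the whole image.

```shell
#!/usr/bin/env bash
set -euo pipefail

# usage: is_qcow2 <file>
# A qcow2 image starts with the 4-byte magic "QFI\xfb" (hex 51 46 49 fb).
is_qcow2() {
    local magic
    magic=$(head -c 4 "$1" | od -An -tx1 | tr -d ' \n')
    [ "$magic" = "514649fb" ]
}
```

For example, is_qcow2 cirros-0.3.4-x86_64-disk.img || echo "unexpected image format" >&2. If qemu-img is installed, qemu-img info reports the detected format as well.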

glance image-create --name "cirros-0.3.4-x86_64" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --visibility public --progress

List the images:

glance image-list

VI. Install and Configure Nova

1. Create the Nova databases

CREATE DATABASE nova;

CREATE DATABASE nova_api;

CREATE DATABASE nova_cell0;

2. Create the database user and grant privileges

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'xuml26';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'xuml26';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'xuml26';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'xuml26';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'xuml26';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'xuml26';

GRANT ALL PRIVILEGES ON *.* TO 'root'@'controller1' IDENTIFIED BY 'xuml26';

flush privileges;

3. Create the nova user and give it the admin role

source /root/admin-openrc

openstack user create --domain default nova --password xuml26

openstack role add --project service --user nova admin

4. Create the compute service

openstack service create --name nova --description "OpenStack Compute" compute

5. Create the Nova endpoints

openstack endpoint create --region RegionOne compute public http://controller1:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne compute internal http://controller1:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne compute admin http://controller1:8774/v2.1/%\(tenant_id\)s

6. Install the Nova packages

yum install -y openstack-nova-api openstack-nova-conductor openstack-nova-cert openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler

7. Configure the Nova configuration file /etc/nova/nova.conf

cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

>/etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.1.1.120

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:xuml26@controller1

openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:xuml26@controller1/nova

openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:xuml26@controller1/nova_api

openstack-config --set /etc/nova/nova.conf scheduler discover_hosts_in_cells_interval -1

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller1:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller1:35357

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller1:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password xuml26

openstack-config --set /etc/nova/nova.conf keystone_authtoken service_token_roles_required True

openstack-config --set /etc/nova/nova.conf vnc vncserver_listen 10.1.1.120

openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address 10.1.1.120

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller1:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

8. Set up cells

Synchronize the Nova databases:

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage db sync" nova

Map cell0 to the nova_cell0 database created earlier:

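nova-manage cell_v2 map_cell0 --database_connection mysql+pymysql://root:xuml26@controller1/nova_cell0

Create a regular cell named cell1; this cell will contain the compute nodes. Its database is the main nova database, because nova_cell0 is reserved for cell0:

nova-manage cell_v2 create_cell --verbose --name cell1 --database_connection mysql+pymysql://root:xuml26@controller1/nova --transport-url rabbit://openstack:xuml26@controller1:5672/

Check whether the deployment is healthy. An error is expected at this point, because there is no compute node yet and Placement has not been deployed: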

nova-status upgrade check

Create and map cell0, and map the existing compute hosts and instances into cells:

nova-manage cell_v2 simple_cell_setup

List the cells that have been created:

nova-manage cell_v2 list_cells --verbose

9. Install Placement

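Starting with Ocata, Nova scheduling relies on the Placement service, so Placement must be installed and configured; otherwise instances cannot be created.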
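nova-status upgrade check runs twice in this section, and until Placement exists it is expected to report a failure. To see at a glance which checks did not pass, its table output can be filtered. This sketch assumes the Ocata output layout of alternating "| Check: ... |" and "| Result: ... |" rows, and failed_upgrade_checks is a hypothetical helper name; if the layout differs in your version, adjust the patterns.

```shell
#!/usr/bin/env bash
set -euo pipefail

# usage: nova-status upgrade check | failed_upgrade_checks
# Prints "<check name>: <result>" for every result other than Success.
failed_upgrade_checks() {
    awk -F'|' '
        # strip "<label>:" and surrounding blanks from a table cell
        function cell(s, label) {
            sub(".*" label ":[ \t]*", "", s)
            sub(/[ \t]+$/, "", s)
            return s
        }
        /^\| *Check:/  { name = cell($2, "Check") }
        /^\| *Result:/ {
            res = cell($2, "Result")
            if (res != "Success") { print name ": " res; bad = 1 }
        }
        END { exit (bad ? 1 : 0) }
    '
}
```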

yum install -y openstack-nova-placement-api

Create the placement user and the placement service:

openstack user create --domain default placement --password xuml26

openstack role add --project service --user placement admin

openstack service create --name placement --description "OpenStack Placement" placement

Create the Placement endpoints:

openstack endpoint create --region RegionOne placement public http://controller1:8778

openstack endpoint create --region RegionOne placement admin http://controller1:8778

openstack endpoint create --region RegionOne placement internal http://controller1:8778

Add the Placement settings to nova.conf:

openstack-config --set /etc/nova/nova.conf placement auth_url http://controller1:35357

openstack-config --set /etc/nova/nova.conf placement memcached_servers controller1:11211

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement project_domain_name default

openstack-config --set /etc/nova/nova.conf placement user_domain_name default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password xuml26

openstack-config --set /etc/nova/nova.conf placement os_region_name RegionOne

Modify the 00-nova-placement-api.conf file. If this step is skipped, creating an instance fails because access to the Placement resources is forbidden.

cd /etc/httpd/conf.d/

cp 00-nova-placement-api.conf 00-nova-placement-api.conf.bak

>00-nova-placement-api.conf

Open 00-nova-placement-api.conf (vim 00-nova-placement-api.conf) and add the following content:

Listen 8778

<VirtualHost *:8778>

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

WSGIDaemonProcess nova-placement-api processes=3 threads=1 user=nova group=nova

WSGIScriptAlias / /usr/bin/nova-placement-api

<Directory "/">

Order allow,deny

Allow from all

Require all granted

</Directory>

<IfVersion >= 2.4>

ErrorLogFormat "%M"

</IfVersion>

ErrorLog /var/log/nova/nova-placement-api.log

</VirtualHost>

Alias /nova-placement-api /usr/bin/nova-placement-api

<Location /nova-placement-api>

SetHandler wsgi-script

Options +ExecCGI

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

</Location>

Restart httpd:

systemctl restart httpd

Check whether the configuration works:

nova-status upgrade check

10. Start the Nova services:

systemctl start openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

Enable the Nova services at boot:

systemctl enable openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

Check the Nova services:

systemctl status openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl list-unit-files | grep openstack-nova

11. Verify the Nova services

unset OS_TOKEN OS_URL

source /root/admin-openrc

nova service-list

List the endpoints and check that the output is correct:

openstack endpoint list

VII. Install and Configure Neutron

1. Create the neutron database

CREATE DATABASE neutron;

2. Create the database user and grant privileges

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'xuml26';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'xuml26';

3. Create the neutron user and give it the admin role

source /root/admin-openrc

openstack user create --domain default neutron --password xuml26

openstack role add --project service --user neutron admin

4. Create the network service

openstack service create --name neutron --description "OpenStack Networking" network

5. Create the endpoints

openstack endpoint create --region RegionOne network public http://controller1:9696

openstack endpoint create --region RegionOne network internal http://controller1:9696

openstack endpoint create --region RegionOne network admin http://controller1:9696

6. Install the Neutron packages

yum -y install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

7. Configure the Neutron configuration file /etc/neutron/neutron.conf

cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak

>/etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins router

openstack-config --set /etc/neutron/neutron.conf DEFAULT allow_overlapping_ips True

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:xuml26@controller1

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes True

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes True

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller1:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller1:35357

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller1:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password xuml26

openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:xuml26@controller1/neutron

openstack-config --set /etc/neutron/neutron.conf nova auth_url http://controller1:35357

openstack-config --set /etc/neutron/neutron.conf nova auth_type password

openstack-config --set /etc/neutron/neutron.conf nova project_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova user_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova region_name RegionOne

openstack-config --set /etc/neutron/neutron.conf nova project_name service

openstack-config --set /etc/neutron/neutron.conf nova username nova

openstack-config --set /etc/neutron/neutron.conf nova password xuml26

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

8. Configure /etc/neutron/plugins/ml2/ml2_conf.ini

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan,vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge,l2population

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 path_mtu 1500

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_vxlan vni_ranges 1:1000

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset True

9. Configure /etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini DEFAULT debug false

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eno16777736

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan True

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip 10.2.2.120

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan l2_population True

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini agent prevent_arp_spoofing True

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group True

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Note: eno16777736 must be the external-facing NIC. If it is not, the VMs will be cut off from the outside network.
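Because a wrong interface name in physical_interface_mappings isolates the VMs without an obvious error, it can help to confirm that the named NIC exists on the node before starting the agent. This sketch uses a hypothetical check_interface_mappings helper, assumes the "physnet:interface" format used above, and looks the interface up under /sys/class/net (overridable through a hypothetical SYS_NET variable for testing).

```shell
#!/usr/bin/env bash
set -euo pipefail

# Directory that lists the node's network interfaces; override for testing.
SYS_NET=${SYS_NET:-/sys/class/net}

# usage: check_interface_mappings "provider:eno16777736[,physnet2:eth1]"
check_interface_mappings() {
    local mappings=$1 pair iface rc=0
    local IFS=','
    for pair in $mappings; do
        iface=${pair#*:}   # keep the part after "physnet:"
        if [ -e "${SYS_NET}/${iface}" ]; then
            echo "ok: ${pair}"
        else
            echo "missing interface: ${iface}" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

On the controller, check_interface_mappings provider:eno16777736 should print an "ok:" line before neutron-linuxbridge-agent is started.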

10. Configure /etc/neutron/l3_agent.ini

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT interface_driver neutron.agent.linux.interface.BridgeInterfaceDriver

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT external_network_bridge

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT debug false

11. Configure /etc/neutron/dhcp_agent.ini

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver neutron.agent.linux.interface.BridgeInterfaceDriver

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata True

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT verbose True

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT debug false

12. Reconfigure /etc/nova/nova.conf so that compute nodes can use Neutron networking

openstack-config --set /etc/nova/nova.conf neutron url http://controller1:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller1:35357

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_id default

openstack-config --set /etc/nova/nova.conf neutron user_domain_id default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password xuml26

openstack-config --set /etc/nova/nova.conf neutron service_metadata_proxy True

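openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret xuml26

13. Write dhcp-option-force=26,1450 to /etc/neutron/dnsmasq-neutron.conf (DHCP option 26 sets the instance MTU to 1450, leaving room for the 50-byte VXLAN overhead)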

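echo "dhcp-option-force=26,1450" >/etc/neutron/dnsmasq-neutron.conf

The DHCP agent only reads this file if dhcp_agent.ini points to it:

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dnsmasq_config_file /etc/neutron/dnsmasq-neutron.conf

14. Configure /etc/neutron/metadata_agent.ini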

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_ip controller1

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret xuml26

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_workers 4

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT verbose True

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT debug false

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_protocol http

15. Create a symbolic link

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

16. Synchronize the database

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

17. Restart the Nova API service, because nova.conf was just changed

systemctl restart openstack-nova-api.service

systemctl status openstack-nova-api.service

18. Restart the Neutron services and enable them at boot

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl restart neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl status neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

19. Start neutron-l3-agent.service and enable it at boot

systemctl enable neutron-l3-agent.service

systemctl restart neutron-l3-agent.service

systemctl status neutron-l3-agent.service

20. Run the verification

source /root/admin-openrc

neutron ext-list

neutron agent-list

22. Check the network services

neutron agent-list

Check that every agent shows the smiley ":-)" in the alive column.
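Checking the smileys by eye works for a handful of agents; for scripting, the table can be filtered instead. This sketch uses a hypothetical dead_agents helper that locates the alive column from the header row and prints every agent row that does not show ":-)". It assumes the ASCII table layout printed by the neutron client.

```shell
#!/usr/bin/env bash
set -euo pipefail

# usage: neutron agent-list | dead_agents
# Exit status: 0 all alive, 1 some agent not alive, 2 no "alive" column found.
dead_agents() {
    awk -F'|' '
        function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
        !/^\|/ { next }                  # skip +-----+ border lines
        col == 0 {                       # the first | row is the header
            for (i = 2; i < NF; i++) if (trim($i) == "alive") col = i
            if (col == 0) { missing = 1; exit }
            next
        }
        trim($col) != ":-)" { print; bad = 1 }
        END {
            if (missing) exit 2
            exit (bad ? 1 : 0)
        }
    '
}
```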

VIII. Install the Dashboard

1. Install the dashboard packages

yum install openstack-dashboard -y

2. Edit the configuration file /etc/openstack-dashboard/local_settings

vim /etc/openstack-dashboard/local_settings

Change the value of OPENSTACK_HOST on line 171 to controller1.
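The same change can be scripted instead of edited by hand. The line number can differ between package versions, while the setting name does not. This sketch uses a hypothetical set_openstack_host helper and assumes the setting appears as an uncommented OPENSTACK_HOST = "..." line, as in the packaged local_settings; it fails if no such line was found.

```shell
#!/usr/bin/env bash
set -euo pipefail

# usage: set_openstack_host <local_settings file> <host>
set_openstack_host() {
    local file=$1 host=$2
    sed -i -E "s/^OPENSTACK_HOST = \".*\"\$/OPENSTACK_HOST = \"${host}\"/" "$file"
    # Fail loudly if the pattern did not match any line.
    grep -qxF "OPENSTACK_HOST = \"${host}\"" "$file"
}
```

For example, set_openstack_host /etc/openstack-dashboard/local_settings controller1.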

3. Start the dashboard service and enable it at boot

systemctl restart httpd.service memcached.service

systemctl status httpd.service memcached.service

The controller node is now complete. Open http://123.45.67.120/dashboard/ in Firefox to reach the OpenStack web interface.
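With the controller finished, a single sweep over its systemd units can confirm that nothing failed to start. This is a sketch with a hypothetical check_units helper built on systemctl is-active --quiet; the unit list is assumed from the services started in this guide so far, and the .service suffix is omitted because systemctl adds it by default.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Controller units started in sections II to VIII of this guide.
CONTROLLER_UNITS=(
    mariadb rabbitmq-server memcached httpd
    openstack-glance-api openstack-glance-registry
    openstack-nova-api openstack-nova-cert openstack-nova-consoleauth
    openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy
    neutron-server neutron-linuxbridge-agent neutron-dhcp-agent
    neutron-metadata-agent neutron-l3-agent
)

# usage: check_units unit...
# Prints one status line per unit; returns 1 if any unit is not active.
check_units() {
    local unit failed=0
    for unit in "$@"; do
        if systemctl is-active --quiet "$unit"; then
            echo "active:   $unit"
        else
            echo "INACTIVE: $unit"
            failed=1
        fi
    done
    return "$failed"
}
```

Usage: check_units "${CONTROLLER_UNITS[@]}". Any INACTIVE line points at the unit whose systemctl status output deserves a closer look.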

IX. Install and Configure Cinder

1. Create the database and database user, and grant privileges

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'xuml26';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'xuml26';

2. Create the cinder user and give it the admin role

source /root/admin-openrc

openstack user create --domain default cinder --password xuml26

openstack role add --project service --user cinder admin

3. Create the volume services

openstack service create --name cinder --description "OpenStack Block Storage" volume

openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

4. Create the endpoints

openstack endpoint create --region RegionOne volume public http://controller1:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne volume internal http://controller1:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne volume admin http://controller1:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev2 public http://controller1:8776/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev2 internal http://controller1:8776/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev2 admin http://controller1:8776/v2/%\(tenant_id\)s

5. Install the Cinder packages

yum -y install openstack-cinder

6. Configure the Cinder configuration file

cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak

>/etc/cinder/cinder.conf

openstack-config --set /etc/cinder/cinder.conf DEFAULT transport_url rabbit://openstack:xuml26@controller1

openstack-config --set /etc/cinder/cinder.conf DEFAULT my_ip 10.1.1.120

openstack-config --set /etc/cinder/cinder.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/cinder/cinder.conf database connection mysql+pymysql://cinder:xuml26@controller1/cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_uri http://controller1:5000

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_url http://controller1:35357

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken memcached_servers controller1:11211

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_type password

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_name service

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken username cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken password xuml26

openstack-config --set /etc/cinder/cinder.conf oslo_concurrency lock_path /var/lib/cinder/tmp

7. Synchronize the database

su -s /bin/sh -c "cinder-manage db sync" cinder

8. Start the Cinder services on the controller and enable them at boot

systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl status openstack-cinder-api.service openstack-cinder-scheduler.service

X. Cinder Node Deployment

1. Install LVM on the Cinder node

yum -y install lvm2

2. Start the service and enable it at boot

systemctl start lvm2-lvmetad.service

systemctl enable lvm2-lvmetad.service

systemctl status lvm2-lvmetad.service

3. Create the LVM volume group

fdisk -l

pvcreate /dev/sdb

vgcreate cinder-volumes /dev/sdb

- Edit lvm.conf on the Cinder node

Open /etc/lvm/lvm.conf (vim /etc/lvm/lvm.conf) and add the following line in the devices section (around line 130):

filter = [ "a/sda/", "a/sdb/", "r/.*/"]
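The filter has to accept every disk that holds LVM physical volumes on this node and reject everything else with the trailing "r/.*/" rule. sda is in the list because, if the operating system disk itself uses LVM (as a default CentOS install does), it must stay visible too. A small generator makes it harder to drop the reject rule when more disks are added later; lvm_filter is a hypothetical helper name.

```shell
#!/usr/bin/env bash
set -euo pipefail

# usage: lvm_filter sda sdb ...
# Prints an lvm.conf filter line accepting the given disks and rejecting the rest.
lvm_filter() {
    local dev out='filter = ['
    for dev in "$@"; do
        out+=" \"a/${dev}/\","
    done
    out+=' "r/.*/"]'
    echo "$out"
}
```

lvm_filter sda sdb prints exactly the filter line shown above.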

Restart lvm2:

systemctl restart lvm2-lvmetad.service

13. Install openstack-cinder and targetcli

yum -y install openstack-cinder openstack-utils targetcli python-keystone ntpdate

14. Configure the Cinder configuration file

cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak

>/etc/cinder/cinder.conf

openstack-config --set /etc/cinder/cinder.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/cinder/cinder.conf DEFAULT my_ip 10.1.1.122

openstack-config --set /etc/cinder/cinder.conf DEFAULT enabled_backends lvm

openstack-config --set /etc/cinder/cinder.conf DEFAULT glance_api_servers http://controller1:9292

openstack-config --set /etc/cinder/cinder.conf DEFAULT glance_api_version 2

openstack-config --set /etc/cinder/cinder.conf DEFAULT enable_v1_api True

openstack-config --set /etc/cinder/cinder.conf DEFAULT enable_v2_api True

openstack-config --set /etc/cinder/cinder.conf DEFAULT enable_v3_api True

openstack-config --set /etc/cinder/cinder.conf DEFAULT storage_availability_zone nova

openstack-config --set /etc/cinder/cinder.conf DEFAULT default_availability_zone nova

openstack-config --set /etc/cinder/cinder.conf DEFAULT os_region_name RegionOne

openstack-config --set /etc/cinder/cinder.conf DEFAULT api_paste_config /etc/cinder/api-paste.ini

openstack-config --set /etc/cinder/cinder.conf DEFAULT transport_url rabbit://openstack:xuml26@controller1

openstack-config --set /etc/cinder/cinder.conf database connection mysql+pymysql://cinder:xuml26@controller1/cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_uri http://controller1:5000

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_url http://controller1:35357

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken memcached_servers controller1:11211

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_type password

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_name service

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken username cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken password xuml26

openstack-config --set /etc/cinder/cinder.conf lvm volume_driver cinder.volume.drivers.lvm.LVMVolumeDriver

openstack-config --set /etc/cinder/cinder.conf lvm volume_group cinder-volumes

openstack-config --set /etc/cinder/cinder.conf lvm iscsi_protocol iscsi

openstack-config --set /etc/cinder/cinder.conf lvm iscsi_helper lioadm

openstack-config --set /etc/cinder/cinder.conf oslo_concurrency lock_path /var/lib/cinder/tmp

15. Start openstack-cinder-volume and target and enable them at boot

systemctl start openstack-cinder-volume.service target.service
systemctl enable openstack-cinder-volume.service target.service
systemctl status openstack-cinder-volume.service target.service
16. Verify that the Cinder services are running properly

source /root/admin-openrc
cinder service-list
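`cinder service-list` prints an ASCII table whose State column should read `up` for both cinder-scheduler and cinder-volume. For scripted checks, a small awk filter can print any row that is not `up` and return a non-zero exit status. This is a minimal sketch, assuming the usual `|`-separated table layout and a header cell named exactly `State`; the function name `report_not_up` is hypothetical.

```bash
#!/usr/bin/env bash
# Reads an OpenStack client ASCII table on stdin, prints every data row whose
# "State" column is not "up", and returns non-zero if such a row exists
# (or if no "State" header cell was found at all).
report_not_up() {
  awk -F'|' '
    function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
    /^\+/ { next }                  # skip +-----+ border lines
    col == 0 {                      # header search: until a cell named State is found
      for (i = 1; i <= NF; i++) if (trim($i) == "State") col = i
      next
    }
    NF > 1 && trim($col) != "up" { print; bad++ }
    END { exit (col == 0 || bad > 0) }
  '
}
```

For example: `cinder service-list | report_not_up && echo "all Cinder services are up"`. Because the column is located by its header rather than by position, the same filter should also work on other client tables that have a State column, such as `openstack compute service list`.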

XI. Compute Node Deployment

Install the required dependency packages

yum -y install openstack-selinux python-openstackclient yum-plugin-priorities openstack-nova-compute openstack-utils ntpdate
- Configure nova.conf

cp /etc/nova/nova.conf /etc/nova/nova.conf.bak
>/etc/nova/nova.conf
openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone
openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.1.1.121
openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True
openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver
openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:xuml26@controller1
openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller1:5000
openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller1:35357
openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller1:11211
openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password
openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default
openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default
openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service
openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova
openstack-config --set /etc/nova/nova.conf keystone_authtoken password xuml26
openstack-config --set /etc/nova/nova.conf placement auth_uri http://controller1:5000
openstack-config --set /etc/nova/nova.conf placement auth_url http://controller1:35357
openstack-config --set /etc/nova/nova.conf placement memcached_servers controller1:11211
openstack-config --set /etc/nova/nova.conf placement auth_type password
openstack-config --set /etc/nova/nova.conf placement project_domain_name default
openstack-config --set /etc/nova/nova.conf placement user_domain_name default
openstack-config --set /etc/nova/nova.conf placement project_name service
openstack-config --set /etc/nova/nova.conf placement username placement
openstack-config --set /etc/nova/nova.conf placement password xuml26
openstack-config --set /etc/nova/nova.conf placement os_region_name RegionOne
openstack-config --set /etc/nova/nova.conf vnc enabled True
openstack-config --set /etc/nova/nova.conf vnc keymap en-us
openstack-config --set /etc/nova/nova.conf vnc vncserver_listen 0.0.0.0
openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address 10.1.1.121
openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://123.45.67.120:6080/vnc_auto.html
openstack-config --set /etc/nova/nova.conf glance api_servers http://controller1:9292
openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp
openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu
- Enable libvirtd.service and openstack-nova-compute.service at boot and start them

systemctl enable libvirtd.service openstack-nova-compute.service
systemctl restart libvirtd.service openstack-nova-compute.service
systemctl status libvirtd.service openstack-nova-compute.service
- Verify on the controller

source /root/admin-openrc
openstack compute service list
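The nova.conf above sets `[libvirt] virt_type qemu`, presumably because compute1 runs as a VMware Workstation guest, where hardware virtualization is usually not exposed. The official install guide's rule is to look for the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flag in /proc/cpuinfo: if neither is present, configure libvirt to use QEMU instead of KVM. Below is a minimal sketch of that check. The function name `choose_virt_type` is hypothetical, and having the flag does not by itself guarantee that the kvm kernel module is loaded.

```bash
#!/usr/bin/env bash
# Prints "kvm" if the vmx (Intel VT-x) or svm (AMD-V) CPU flag is present,
# otherwise "qemu". The cpuinfo path defaults to /proc/cpuinfo.
choose_virt_type() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  # -w: match vmx/svm only as whole words in the flags line
  if grep -Eqw 'vmx|svm' "$cpuinfo"; then
    echo kvm
  else
    echo qemu
  fi
}
```

On compute1, you could write the result back with `openstack-config --set /etc/nova/nova.conf libvirt virt_type "$(choose_virt_type)"` and then run `systemctl restart openstack-nova-compute.service`.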

XII. Install Neutron

- Install the required packages

yum -y install openstack-neutron-linuxbridge ebtables ipset
- Configure neutron.conf

cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak
>/etc/neutron/neutron.conf
openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone
openstack-config --set /etc/neutron/neutron.conf DEFAULT advertise_mtu True
openstack-config --set /etc/neutron/neutron.conf DEFAULT dhcp_agents_per_network 2
openstack-config --set /etc/neutron/neutron.conf DEFAULT control_exchange neutron
openstack-config --set /etc/neutron/neutron.conf DEFAULT nova_url http://controller1:8774/v2
openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:xuml26@controller1
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller1:5000
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller1:35357
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller1:11211
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron
openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password xuml26
openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp
- Configure /etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan True
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip 10.2.2.121
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan l2_population True
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group True
openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
- Configure nova.conf

openstack-config --set /etc/nova/nova.conf neutron url http://controller1:9696
openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller1:35357
openstack-config --set /etc/nova/nova.conf neutron auth_type password
openstack-config --set /etc/nova/nova.conf neutron project_domain_name default
openstack-config --set /etc/nova/nova.conf neutron user_domain_name default
openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne
openstack-config --set /etc/nova/nova.conf neutron project_name service
openstack-config --set /etc/nova/nova.conf neutron username neutron
openstack-config --set /etc/nova/nova.conf neutron password xuml26
- Restart the related services and enable them at boot

systemctl restart libvirtd.service openstack-nova-compute.service
systemctl enable neutron-linuxbridge-agent.service
systemctl start neutron-linuxbridge-agent.service
systemctl status libvirtd.service openstack-nova-compute.service neutron-linuxbridge-agent.service
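`systemctl status` is meant to be read by eye. To check the three units from this section non-interactively, use `systemctl is-active --quiet` and `systemctl is-enabled --quiet`, which report the state only through their exit status. A minimal sketch follows. The function name `check_units` and the `SYSTEMCTL` override variable are hypothetical; the override exists only so the function can be tested without systemd.

```bash
#!/usr/bin/env bash
# Print every unit that is not active or not enabled; return 1 if any are found.
# SYSTEMCTL is a hypothetical override so the function can be tested without systemd.
check_units() {
  local cmd="${SYSTEMCTL:-systemctl}" unit rc=0
  for unit in "$@"; do
    # with --quiet, is-active / is-enabled signal the result via exit status only
    if ! "$cmd" is-active --quiet "$unit"; then
      echo "$unit: not active"
      rc=1
    fi
    if ! "$cmd" is-enabled --quiet "$unit"; then
      echo "$unit: not enabled"
      rc=1
    fi
  done
  return "$rc"
}
```

On compute1: `check_units libvirtd.service openstack-nova-compute.service neutron-linuxbridge-agent.service`. An empty output and exit status 0 mean all three units are running and will start at boot.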

XIII. Integrate the Compute Node with Cinder

1. For the compute node to use Cinder, configure the Nova configuration file:

openstack-config --set /etc/nova/nova.conf cinder os_region_name RegionOne

systemctl restart openstack-nova-compute.service
2. Restart the Nova API service on the controller

systemctl restart openstack-nova-api.service
XIV. Run the following on the controller:

nova-manage cell_v2 simple_cell_setup

source /root/admin-openrc

neutron agent-list

nova-manage cell_v2 discover_hosts
Check that the newly added compute1 node is listed:
nova host-list
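nova-compute on compute1 may need a short while to register with the controller, and `nova-manage cell_v2 discover_hosts` only maps compute hosts that have already registered. Instead of re-running the check by hand, a small retry wrapper can poll until compute1 appears. A minimal sketch; the `retry` function and its attempt/delay arguments are hypothetical.

```bash
#!/usr/bin/env bash
# retry ATTEMPTS DELAY CMD [ARGS...]
# Runs CMD up to ATTEMPTS times, sleeping DELAY seconds between failed attempts.
# Returns 0 as soon as CMD succeeds, 1 if every attempt fails.
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    # no point sleeping after the last attempt
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, with admin-openrc already sourced: `retry 10 6 sh -c 'nova host-list | grep -qw compute1' && nova-manage cell_v2 discover_hosts`. The pipeline is wrapped in `sh -c` because `retry` runs a single command with its arguments, not a shell pipeline.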