RHEL6.6-x86-64

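Software repositories:

EPEL repository

Local yum repository

RHCS installation and configuration

192.168.1.5: install luci. This host has two hard disks, of which /dev/sdb provides the shared storage.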

Cluster nodes

192.168.1.6: install ricci (node1.mingxiao.info, node1)

192.168.1.7: install ricci (node2.mingxiao.info, node2)

192.168.1.8: install ricci (node3.mingxiao.info, node3)

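Prerequisites:

1> 192.168.1.5 has mutual SSH trust (passwordless login) with node1, node2 and node3

2> Time is synchronized

3> The node hostnames are node1.mingxiao.info, node2.mingxiao.info and node3.mingxiao.info

Log in to host 192.168.1.5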

vim /etc/hosts

    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.1.6 node1.mingxiao.info node1
    192.168.1.7 node2.mingxiao.info node2
    192.168.1.8 node3.mingxiao.info node3

# for I in {1..3}; do scp /etc/hosts node$I:/etc;done
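The node entries in /etc/hosts follow a regular pattern, so they can be generated rather than typed by hand. The sketch below assumes the addressing used in this walkthrough (node N lives at 192.168.1.(5+N) and is named nodeN.mingxiao.info); `gen_hosts_entries` is a hypothetical helper name, not part of any package.

```shell
#!/bin/sh
# gen_hosts_entries [COUNT] [DOMAIN]
# Print one /etc/hosts line per cluster node.
# Assumption: node N has the address 192.168.1.(5+N), as in this walkthrough.
gen_hosts_entries() {
    count=${1:-3}                 # number of cluster nodes
    domain=${2:-mingxiao.info}    # DNS domain of the nodes
    i=1
    while [ "$i" -le "$count" ]; do
        printf '192.168.1.%d node%d.%s node%d\n' "$((5 + i))" "$i" "$domain" "$i"
        i=$((i + 1))
    done
}

# Print the three node lines; review them before adding them to /etc/hosts.
gen_hosts_entries 3
```

The output can be appended to /etc/hosts on 192.168.1.5 and then copied to the nodes with the scp loop.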

Time synchronization

# for I in {1..3}; do ssh node$I 'ntpdate time.windows.com';done

Mutual trust between the nodes.

.....

# yum -y install luci

# for I in {1..3}; do ssh node$I 'yum -y install ricci'; done

# for I in {1..3}; do ssh node$I 'echo xiaoming | passwd --stdin ricci'; done

# for I in {1..3}; do ssh node$I 'service ricci start;chkconfig ricci on'; done

# for I in {1..3}; do ssh node$I 'yum -y install httpd'; done
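The same `for I in {1..3}; do ssh node$I ...; done` loop is repeated for every step, so it can be wrapped in a small function. This is only a sketch: `on_nodes`, its `-n` (dry run) flag and the `NODE_COUNT` variable are hypothetical names, and it assumes the passwordless SSH trust from the prerequisites.

```shell
#!/bin/sh
# on_nodes [-n] COMMAND
# Run COMMAND on node1..nodeN over ssh (N defaults to 3, override with NODE_COUNT).
# With -n, only print the ssh command lines instead of running them.
on_nodes() {
    dry_run=0
    if [ "$1" = "-n" ]; then
        dry_run=1
        shift
    fi
    cmd=$1
    i=1
    while [ "$i" -le "${NODE_COUNT:-3}" ]; do
        if [ "$dry_run" -eq 1 ]; then
            printf "ssh node%d '%s'\n" "$i" "$cmd"
        else
            # Report a failing node but keep going with the remaining ones.
            ssh "node$i" "$cmd" || echo "node$i: command failed" >&2
        fi
        i=$((i + 1))
    done
}

# Preview what would be executed, without contacting any node.
on_nodes -n 'service ricci start; chkconfig ricci on'
```

Dropping `-n` runs the command for real, for example `on_nodes 'yum -y install ricci'`.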

# service luci start

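Open https://192.168.1.5:8084 in a browser to reach the web management interface, and log in as the root user with the root password.

Create the cluster. In the Password field, enter the ricci user's password for each node.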

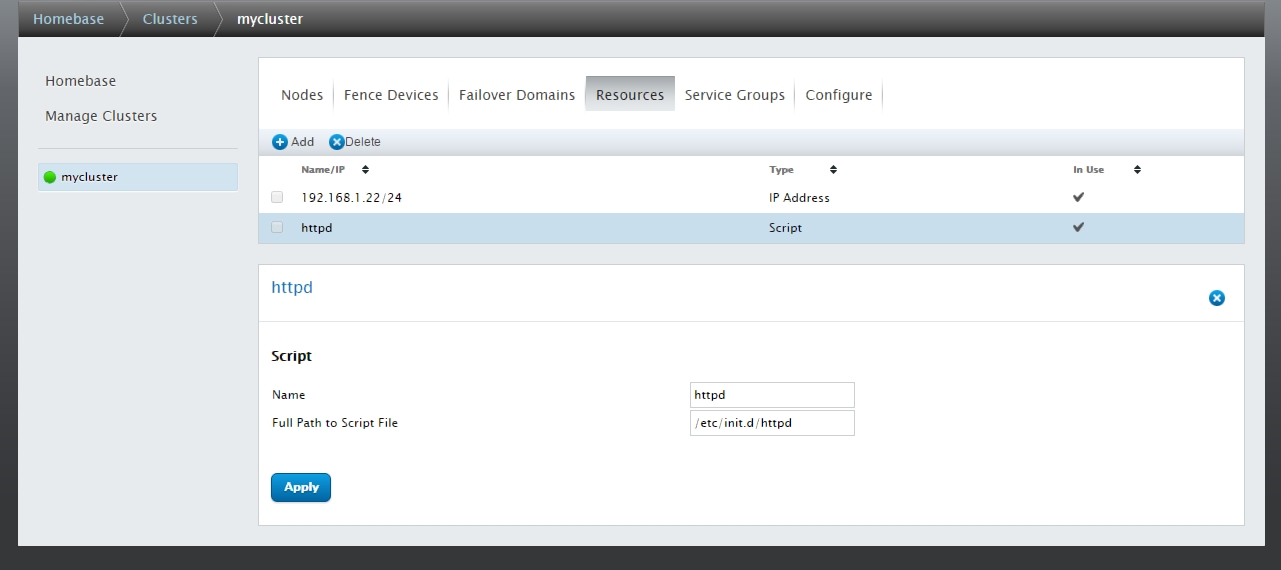

Add resources. Two are added here: one VIP and one httpd. Once they are added, the interface looks like the figure below.

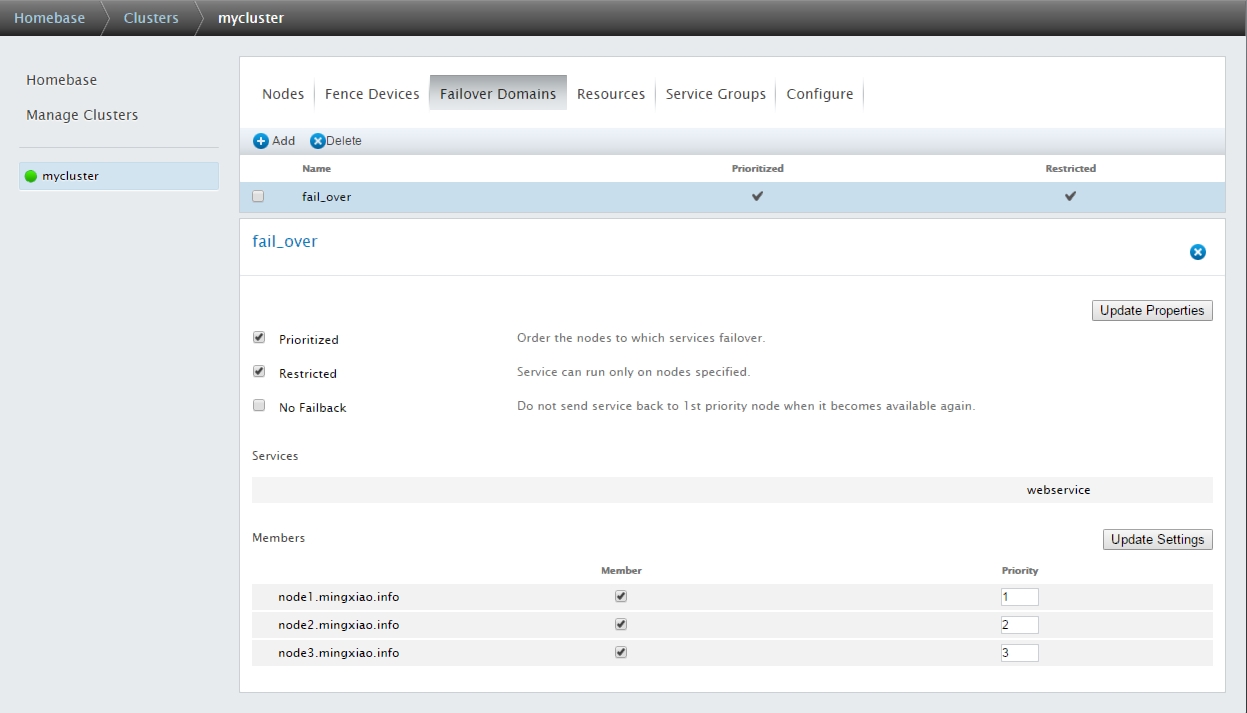

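Add a failover domain, as shown in the figure below:

Since there is no fence device, none is added.

Next, configure iSCSI to provide the shared storage:

Log in to 192.168.1.5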

# yum -y install scsi-target-utils

# for I in {1..3}; do ssh node$I 'yum -y install iscsi-initiator-utils'; done

vim /etc/tgt/targets.conf and add the following:

    <target iqn.2015-05.ingo.mingxiao:ipsan.sdb>
        <backing-store /dev/sdb>
            vendor_id Xiaoming
            lun 1
        </backing-store>
        initiator-address 192.168.1.0/24
        incominguser iscsiuser xiaoming
    </target>

# service tgtd start

# tgtadm --lld iscsi --mode target --op show    # should show the following information

    Target 1: iqn.2015-05.ingo.mingxiao:ipsan.sdb
        System information:
            Driver: iscsi
            State: ready
        LUN information:
            LUN: 0
                Type: controller
                SCSI ID: IET 00010000
                SCSI SN: beaf10
                Size: 0 MB, Block size: 1
                Online: Yes
                Removable media: No
                Prevent removal: No
                Readonly: No
                Backing store type: null
                Backing store path: None
                Backing store flags:
            LUN: 1
                Type: disk
                SCSI ID: IET 00010001
                SCSI SN: beaf11
                Size: 21475 MB, Block size: 512
                Online: Yes
                Removable media: No
                Prevent removal: No
                Readonly: No
                Backing store type: rdwr
                Backing store path: /dev/sdb
                Backing store flags:
        Account information:
            iscsiuser
        ACL information:
            192.168.1.0/24
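The part of this output that matters most is that LUN 1 is backed by /dev/sdb. As a rough sketch, the check can be scripted by filtering the output with awk; `list_backing_stores` is a hypothetical helper that relies only on the `LUN:` and `Backing store path:` labels visible in the output above.

```shell
#!/bin/sh
# list_backing_stores
# Read `tgtadm --lld iscsi --mode target --op show` output on stdin and print
# "LUN <number> <path>" for every LUN that has a real backing store.
list_backing_stores() {
    awk '
        $1 == "LUN:" { lun = $2 }                      # remember the current LUN number
        /Backing store path:/ && $NF != "None" {       # skip the controller LUN
            print "LUN", lun, $NF
        }
    '
}

# Demonstration on a shortened copy of the output shown above.
list_backing_stores <<'EOF'
    LUN information:
        LUN: 0
            Backing store path: None
        LUN: 1
            Backing store path: /dev/sdb
EOF
```

On the target host the real output would be piped in instead: `tgtadm --lld iscsi --mode target --op show | list_backing_stores`.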

# for I in {1..3}; do ssh node$I 'echo "InitiatorName=`iscsi-iname -p iqn.2015-05.info.mingxiao`" > /etc/iscsi/initiatorname.iscsi'; done

vim /etc/iscsi/iscsid.conf    # on node1, node2 and node3, enable the following three lines

    node.session.auth.authmethod = CHAP
    node.session.auth.username = iscsiuser
    node.session.auth.password = xiaoming
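Editing iscsid.conf by hand on three nodes is easy to get wrong, so the three CHAP settings could also be applied with sed. The following is a minimal sketch, not a tested deployment script: `enable_chap` is a hypothetical helper, and it assumes the user name and password contain none of the characters `|`, `&` or `\`, which sed would interpret.

```shell
#!/bin/sh
# enable_chap FILE USER PASSWORD
# Set the three CHAP session settings in an iscsid.conf-style file, whether the
# lines are still commented out or already active. Other settings, such as
# node.session.auth.username_in, are left untouched.
enable_chap() {
    file=$1
    user=$2
    pass=$3
    tmp=$(mktemp) || return 1
    sed \
        -e "s|^#* *node\.session\.auth\.authmethod *=.*|node.session.auth.authmethod = CHAP|" \
        -e "s|^#* *node\.session\.auth\.username *=.*|node.session.auth.username = $user|" \
        -e "s|^#* *node\.session\.auth\.password *=.*|node.session.auth.password = $pass|" \
        "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file's owner and mode
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Example (run on each node):
#   enable_chap /etc/iscsi/iscsid.conf iscsiuser xiaoming
```

The user name and password must match the `incominguser` line in /etc/tgt/targets.conf on the target.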

# for I in {1..3}; do ssh node$I 'iscsiadm -m discovery -t st -p 192.168.1.5';done

    [ OK ] iscsid: [ OK ]
    192.168.1.5:3260,1 iqn.2015-05.ingo.mingxiao:ipsan.sdb
    [ OK ] iscsid: [ OK ]
    192.168.1.5:3260,1 iqn.2015-05.ingo.mingxiao:ipsan.sdb
    [ OK ] iscsid: [ OK ]
    192.168.1.5:3260,1 iqn.2015-05.ingo.mingxiao:ipsan.sdb

# for I in {1..3}; do ssh node$I 'iscsiadm -m node -T iqn.2015-05.ingo.mingxiao:ipsan.sdb -p 192.168.1.5 -l';done

    Logging in to [iface: default, target: iqn.2015-05.ingo.mingxiao:ipsan.sdb, portal: 192.168.1.5,3260] (multiple)
    Login to [iface: default, target: iqn.2015-05.ingo.mingxiao:ipsan.sdb, portal: 192.168.1.5,3260] successful.
    Logging in to [iface: default, target: iqn.2015-05.ingo.mingxiao:ipsan.sdb, portal: 192.168.1.5,3260] (multiple)
    Login to [iface: default, target: iqn.2015-05.ingo.mingxiao:ipsan.sdb, portal: 192.168.1.5,3260] successful.
    Logging in to [iface: default, target: iqn.2015-05.ingo.mingxiao:ipsan.sdb, portal: 192.168.1.5,3260] (multiple)
    Login to [iface: default, target: iqn.2015-05.ingo.mingxiao:ipsan.sdb, portal: 192.168.1.5,3260] successful.

Install the following two rpm packages on each of node1, node2 and node3. Both can be found on rpmfind.net.

# rpm -ivh lvm2-cluster-2.02.111-2.el6.x86_64.rpm gfs2-utils-3.0.12.1-68.el6.x86_64.rpm

    warning: lvm2-cluster-2.02.111-2.el6.x86_64.rpm: Header V3 RSA/SHA1 Signature, key ID c105b9de: NOKEY
    Preparing...        ########################################### [100%]
       1:gfs2-utils     ########################################### [ 50%]
       2:lvm2-cluster   ########################################### [100%]

Log in to node1

# pvcreate /dev/sdb

# vgcreate clustervg /dev/sdb

# lvcreate -L 5G -n clusterlv clustervg

# mkfs.gfs2 -j 2 -p lock_dlm -t mycluster:sdb /dev/clustervg/clusterlv
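In the mkfs.gfs2 command, `-t` takes a lock table name of the form clustername:fsname, where the cluster name part has to match the name of the cluster (mycluster here), and `-j` sets the number of journals. GFS2 needs one journal for each node that mounts the file system at the same time. The sketch below only prints a command line so it can be reviewed before running; `gfs2_mkfs_cmd` is a hypothetical helper, and the journal count of 3 is an assumption for the case where all three nodes mount the file system concurrently (the walkthrough itself uses 2).

```shell
#!/bin/sh
# gfs2_mkfs_cmd CLUSTER FSNAME JOURNALS DEVICE
# Print (do not run) a mkfs.gfs2 command line using the DLM lock protocol.
gfs2_mkfs_cmd() {
    cluster=$1     # must match the cluster name shown by clustat
    fsname=$2      # file system name, unique within the cluster
    journals=$3    # one journal per node mounting at the same time
    device=$4      # clustered logical volume to format
    printf 'mkfs.gfs2 -j %d -p lock_dlm -t %s:%s %s\n' \
        "$journals" "$cluster" "$fsname" "$device"
}

# Assumed variant with one journal per node of the three-node cluster.
gfs2_mkfs_cmd mycluster sdb 3 /dev/clustervg/clusterlv
```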

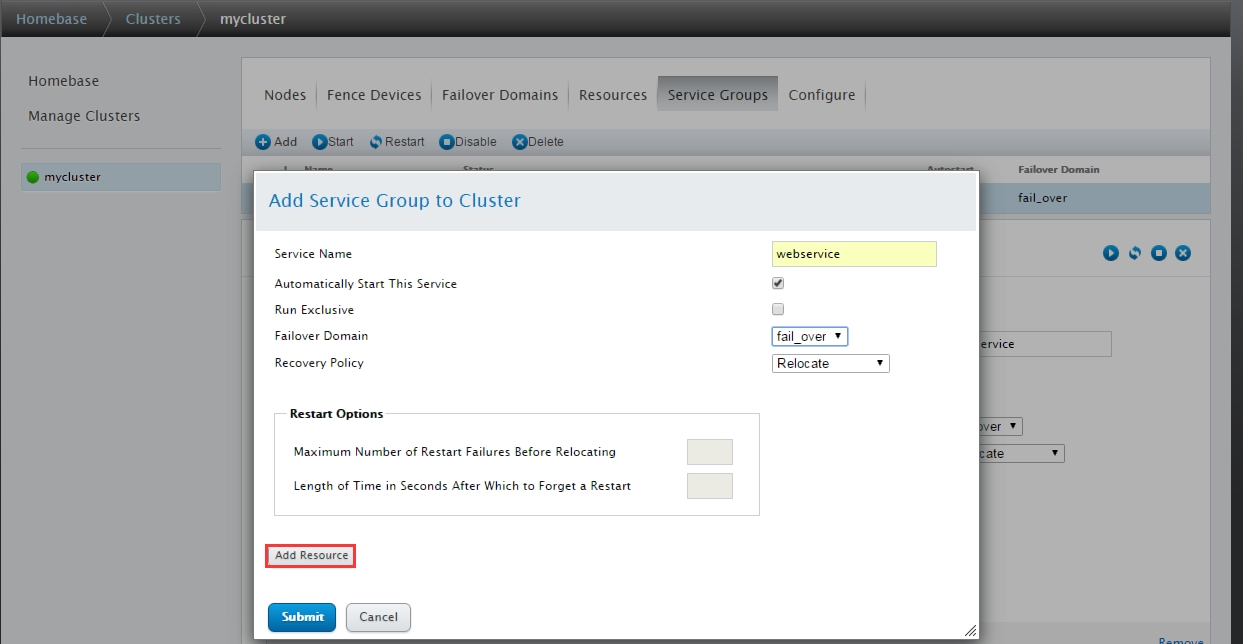

Add a service named webservice

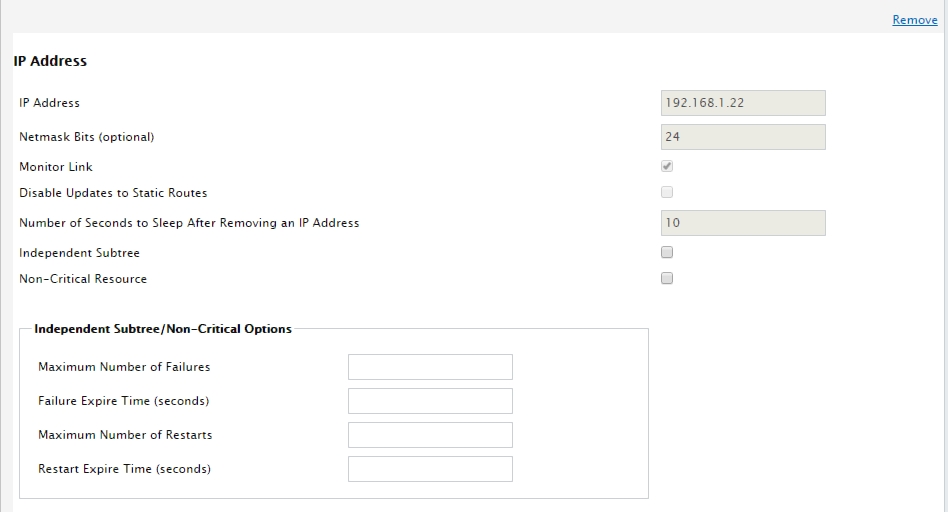

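Add the VIP resource created earlier

Create a new GFS2 resource and add it

Add the httpd resource created earlier

Then start the service.

Check the status: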

# clustat

    Cluster Status for mycluster @ Thu May 7 00:07:21 2015
    Member Status: Quorate

     Member Name                  ID   Status
     ------ ----                  ---- ------
     node1.mingxiao.info          1    Online, Local, rgmanager
     node2.mingxiao.info          2    Online, rgmanager
     node3.mingxiao.info          3    Online, rgmanager

     Service Name                 Owner (Last)              State
     ------- ----                 ----- ------              -----
     service:webservice           node1.mingxiao.info       started
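For monitoring or a quick failover test, the owner and state of the service can be pulled out of the clustat output. This is a small sketch that assumes the three-column service line shown above (name, owner, state); `service_state` is a hypothetical helper name.

```shell
#!/bin/sh
# service_state NAME
# Read clustat output on stdin and print "<owner> <state>" for service:NAME.
# Prints nothing if the service is not listed.
service_state() {
    awk -v svc="service:$1" '$1 == svc { print $2, $3 }'
}

# Demonstration on the service line from the output above.
echo ' service:webservice   node1.mingxiao.info   started' | service_state webservice
```

On a cluster node this would be used as `clustat | service_state webservice`. Running it before and after stopping the owning node shows whether the service moved to another member.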