Apache in front of Tomcats: three ways to connect them

(1) apache:

mod_proxy

mod_proxy_http: does the proxying

mod_proxy_balancer: does the load balancing

tomcat:

http connector: the HTTP connector

(2) apache:

mod_proxy

mod_proxy_ajp: the AJP module

mod_proxy_balancer

tomcat:

ajp connector: the AJP connector

(3) apache:

mod_jk

tomcat:

ajp connector

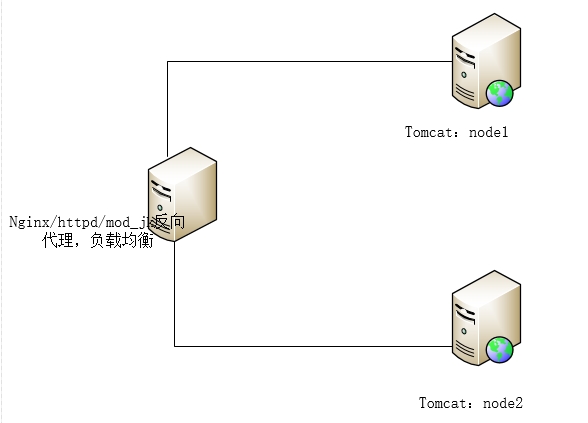

Option 1: use nginx to reverse-proxy user requests to Tomcat (load balancing and session binding)

Configure the hosts file:

192.168.20.1 node1.lee.com node1

192.168.20.2 node2.lee.com node2

192.168.20.8 node4.lee.com node4

192.168.20.7 node3.lee.com node3

Front-end nginx configuration for load balancing:

1. Define the upstream servers in the http context:

upstream tcsrvs {

ip_hash;    # session binding: requests from the same client IP always go to the same server

server node1.lee.com:8080;

server node2.lee.com:8080;

}

2. Reference it in the server block:

location / {

root /usr/share/nginx/html;

}

location ~* \.(jsp|do)$ {

proxy_pass http://tcsrvs;

}
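A rough model of why ip_hash gives session binding: nginx hashes only the first three octets of the client's IPv4 address and maps the hash onto the server list, so a given client (and every client in the same /24 network) always lands on the same Tomcat. The Java below is an illustrative sketch assuming equal weights and all servers up; the constants mirror nginx's ip_hash module as I recall it, and the class name IpHashSketch is hypothetical.

```java
import java.util.List;

// Hypothetical model of nginx ip_hash (equal weights, no servers marked down).
// The constants 89/113/6271 follow nginx's ip_hash module as recalled; treat
// them as illustrative, not authoritative.
class IpHashSketch {

    static int pick(String clientIp, List<String> backends) {
        String[] octets = clientIp.split("\\.");
        int hash = 89;
        // Only the first three octets are hashed, so every client in the
        // same /24 network maps to the same backend.
        for (int i = 0; i < 3; i++) {
            hash = (hash * 113 + Integer.parseInt(octets[i])) % 6271;
        }
        return hash % backends.size();
    }

    public static void main(String[] args) {
        List<String> backends = List.of("node1.lee.com:8080", "node2.lee.com:8080");
        for (String ip : List.of("192.168.20.100", "192.168.20.5", "10.0.0.1")) {
            System.out.println(ip + " -> " + backends.get(pick(ip, backends)));
        }
    }
}
```

A consequence worth knowing: clients behind one NAT gateway share an address prefix, so they all reach the same Tomcat, which can skew the load.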

server.xml on the two back-end Tomcats:

Only the first machine's configuration is shown; for the second, simply change every node1 to node2.

<Engine name="Catalina" defaultHost="node1.lee.com">

<Host name="node1.lee.com" appBase="/data/webapps/" unpackWARs="true" autoDeploy="true">

<Context path="" docBase="/data/webapps" reloadable="true">

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="/data/logs"

prefix="web1_access_log" suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Context>

</Host>

</Engine>

The /data/webapps/index.jsp file:

<%@ page language="java" %>

<%@ page import="java.util.*" %>

<html>

<head>

<title>JSP Test Page</title>

</head>

<body>

<% out.println("Hello, world."); %>

</body>

</html>

Option 2: use httpd to reverse-proxy user requests to Tomcat

Front-end httpd reverse-proxy configuration:

<proxy balancer://lbcluster1>

BalancerMember http://172.16.100.68:8080 loadfactor=10 route=TomcatA

BalancerMember http://172.16.100.69:8080 loadfactor=10 route=TomcatB

</proxy>

<VirtualHost *:80>

ServerName web1.lee.com

ProxyVia On

ProxyRequests Off

ProxyPreserveHost On

<Proxy *>

Order Deny,Allow

Allow from all

</Proxy>

ProxyPass /status !

ProxyPass / balancer://lbcluster1/

ProxyPassReverse / balancer://lbcluster1/

<Location />

Order Deny,Allow

Allow from all

</Location>

</VirtualHost>
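The loadfactor values above feed mod_proxy_balancer's default byrequests method. The sketch below models the request-counting algorithm described in the mod_lbmethod_byrequests documentation: every member earns credit equal to its loadfactor on each request, the member with the most credit wins, and the winner pays back the group total. It is not Apache's C code, and the class name ByRequestsSketch is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical model of the byrequests ("request counting") method.
class ByRequestsSketch {
    private final Map<String, Integer> lbfactor = new LinkedHashMap<>();
    private final Map<String, Integer> lbstatus = new LinkedHashMap<>();

    void addMember(String route, int factor) {
        lbfactor.put(route, factor);
        lbstatus.put(route, 0);
    }

    String next() {
        String candidate = null;
        int total = 0;
        for (Map.Entry<String, Integer> e : lbfactor.entrySet()) {
            String route = e.getKey();
            // Every member earns credit proportional to its loadfactor...
            int status = lbstatus.get(route) + e.getValue();
            lbstatus.put(route, status);
            total += e.getValue();
            if (candidate == null || status > lbstatus.get(candidate)) {
                candidate = route;
            }
        }
        // ...and the chosen member pays back the credit of the whole group.
        lbstatus.put(candidate, lbstatus.get(candidate) - total);
        return candidate;
    }

    public static void main(String[] args) {
        ByRequestsSketch lb = new ByRequestsSketch();
        lb.addMember("TomcatA", 10);
        lb.addMember("TomcatB", 10);
        for (int i = 0; i < 4; i++) {
            System.out.println(lb.next());
        }
    }
}
```

With two members at loadfactor=10, main prints TomcatA, TomcatB, TomcatA, TomcatB; with factors 3 and 1, three of every four requests go to the first member.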

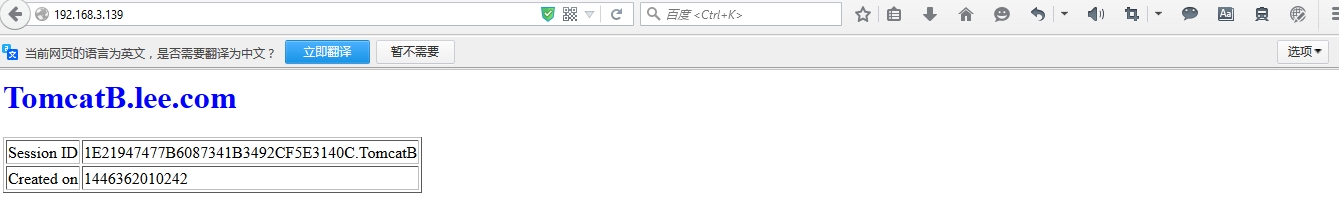

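Back-end Tomcat configuration: this is node1; on node2 set jvmRoute="TomcatB" and change the test page accordingly.

<Engine name="Catalina" defaultHost="node1.lee.com" jvmRoute="TomcatA"> <!-- jvmRoute gives this instance an identifier the front-end httpd can use to recognize it -->

Edit the test page: /data/webapps/index.jsp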

<%@ page language="java" %>

<html>

<head><title>TomcatA</title></head>

<body>

<h1><font color="red">TomcatA.lee.com</font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td> <% session.setAttribute("lee.com","lee.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

Test:

Result: even when requests are scheduled to the same host the session changes, let alone when they go to different hosts.
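To repeat this test from a script instead of a browser, a small client can fetch the test page several times with one cookie jar and collect the Session IDs it reports. This is a hypothetical helper (class SessionProbe, default URL http://web1.lee.com/index.jsp), assuming Java 11+ for java.net.http; the regex assumes the table layout of the index.jsp test page.

```java
import java.net.CookieManager;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.LinkedHashSet;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical test client: behaves like a browser that keeps its cookies
// and reports which session IDs the back ends handed out.
class SessionProbe {
    // Matches the "Session ID" row emitted by the index.jsp test page.
    private static final Pattern SESSION_CELL =
            Pattern.compile("Session ID</td>\\s*<td>([^<]+)</td>");

    static String extractSessionId(String html) {
        Matcher m = SESSION_CELL.matcher(html);
        return m.find() ? m.group(1).trim() : null;
    }

    public static void main(String[] args) throws Exception {
        String url = args.length > 0 ? args[0] : "http://web1.lee.com/index.jsp";
        HttpClient client = HttpClient.newBuilder()
                .cookieHandler(new CookieManager()) // resend JSESSIONID/ROUTEID like a browser
                .build();
        Set<String> ids = new LinkedHashSet<>();
        for (int i = 0; i < 10; i++) {
            HttpRequest req = HttpRequest.newBuilder(URI.create(url)).GET().build();
            String body = client.send(req, HttpResponse.BodyHandlers.ofString()).body();
            ids.add(extractSessionId(body));
        }
        // With working session binding only one distinct ID should appear.
        System.out.println("distinct session IDs: " + ids);
    }
}
```

Running it before and after the sticky-session change shows the difference directly: several IDs before, a single one after.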

Fix: change these two places to use sticky sessions:

Header add Set-Cookie "ROUTEID=.%{BALANCER_WORKER_ROUTE}e; path=/" env=BALANCER_ROUTE_CHANGED

<proxy balancer://lbcluster1>

BalancerMember http://192.168.20.1:8080 loadfactor=10 route=TomcatA

BalancerMember http://192.168.20.2:8080 loadfactor=10 route=TomcatB

ProxySet stickysession=ROUTEID

</proxy>

After testing, the session binding works.
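Why the cookie value starts with a dot: mod_proxy_balancer reads the route from the part of the sticky cookie after the '.', so ROUTEID=.TomcatA selects the member with route=TomcatA, and a Tomcat JSESSIONID such as <id>.TomcatA (produced by jvmRoute) carries the route the same way. The sketch below models that lookup, assuming the first '.' is the separator; StickyRoute and its methods are hypothetical names, and the session ID 5A8B2C is made up.

```java
import java.util.Map;

// Hypothetical model of sticky-route lookup; not mod_proxy_balancer's code.
class StickyRoute {

    // Text after the first '.', or null if there is no route part.
    static String routeOf(String cookieValue) {
        if (cookieValue == null) return null;
        int dot = cookieValue.indexOf('.');
        return (dot >= 0 && dot + 1 < cookieValue.length()) ? cookieValue.substring(dot + 1) : null;
    }

    // Member URL whose route matches; null means "fall back to normal balancing".
    static String memberFor(String cookieValue, Map<String, String> routeToMember) {
        String route = routeOf(cookieValue);
        return route == null ? null : routeToMember.get(route);
    }

    public static void main(String[] args) {
        Map<String, String> members = Map.of(
                "TomcatA", "http://192.168.20.1:8080",
                "TomcatB", "http://192.168.20.2:8080");
        System.out.println(memberFor(".TomcatB", members));       // ROUTEID-style value
        System.out.println(memberFor("5A8B2C.TomcatA", members)); // JSESSIONID with jvmRoute
        System.out.println(memberFor("5A8B2C", members));         // no route part
    }
}
```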

To connect over AJP instead, only two changes are needed:

#Header add Set-Cookie "ROUTEID=.%{BALANCER_WORKER_ROUTE}e; path=/" env=BALANCER_ROUTE_CHANGED

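The line above is commented out because with the AJP protocol the single ProxySet stickysession=ROUTEID directive is enough for binding. (Caveat: with the Header line disabled no ROUTEID cookie is set any more, so the route can only come from the JSESSIONID cookie, which Tomcat suffixes with .TomcatA or .TomcatB because of jvmRoute; the mod_proxy_balancer documentation uses stickysession=JSESSIONID|jsessionid for that case.)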

<proxy balancer://lbcluster1>

BalancerMember ajp://172.16.100.68:8009 loadfactor=10 route=TomcatA

BalancerMember ajp://172.16.100.69:8009 loadfactor=10 route=TomcatB

ProxySet stickysession=ROUTEID

</proxy>

Option 3: use mod_jk as the reverse proxy; with mod_jk the connection to the back ends can only use the AJP protocol

tar xf tomcat-connectors-1.2.40-src.tar.gz

cd tomcat-connectors-1.2.40-src/native

Prepare the build environment:

yum install httpd-devel gcc glibc-devel

yum groupinstall "Development tools"

Building mod_jk depends on apxs:

[root@node3 rpm]# which apxs

/usr/sbin/apxs

In the native directory, configure, then build and install the module:

./configure --with-apxs=/usr/sbin/apxs

make && make install

Load the mod_jk module in httpd.conf:

LoadModule jk_module modules/mod_jk.so

Check that it loaded:

[root@node3 conf]# httpd -M | grep jk

Syntax OK

jk_module (shared)

Configure the jk_module properties in httpd.conf (the worker name TomcatA must match the jvmRoute TomcatA defined in the back-end Tomcat's Engine):

JkWorkersFile /etc/httpd/conf.d/workers.properties

JkLogFile logs/mod_jk.log

JkLogLevel debug

JkMount /* TomcatA

JkMount /status/ stat1

Create /etc/httpd/conf.d/workers.properties:

worker.list=TomcatA,stat1

worker.TomcatA.port=8009

worker.TomcatA.host=192.168.20.1

worker.TomcatA.type=ajp13

worker.TomcatA.lbfactor=1

worker.stat1.type = status

Visit the status page that ships with mod_jk (it also offers management functions):

Switch to load balancing with session binding. Modify httpd.conf:

JkWorkersFile /etc/httpd/conf.d/workers.properties

JkLogFile logs/mod_jk.log

JkLogLevel debug

JkMount /* lbcluster1

JkMount /jkstatus/ stat1

Modify /etc/httpd/conf.d/workers.properties (for sticky_session to work, the worker names TomcatA and TomcatB must equal the jvmRoute values of the two Tomcats):

worker.list = lbcluster1,stat1

worker.TomcatA.type = ajp13

worker.TomcatA.host = 192.168.20.1

worker.TomcatA.port = 8009

worker.TomcatA.lbfactor = 5

worker.TomcatB.type = ajp13

worker.TomcatB.host = 192.168.20.2

worker.TomcatB.port = 8009

worker.TomcatB.lbfactor = 5

worker.lbcluster1.type = lb

worker.lbcluster1.sticky_session = 1

worker.lbcluster1.balance_workers = TomcatA, TomcatB

worker.stat1.type = status

The test succeeds.
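Before restarting httpd it can help to sanity-check workers.properties. The sketch below is a hypothetical checker (WorkersCheck is not part of mod_jk): it loads the file with java.util.Properties, follows worker.list and each lb worker's balance_workers, and reports workers without a type and ajp13 workers missing host or port.

```java
import java.io.IOException;
import java.io.Reader;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical sanity check for a mod_jk workers.properties file.
class WorkersCheck {

    // "TomcatA, TomcatB" -> [TomcatA, TomcatB]
    static List<String> split(String csv) {
        List<String> out = new ArrayList<>();
        if (csv == null) return out;
        for (String s : csv.split(",")) {
            if (!s.trim().isEmpty()) out.add(s.trim());
        }
        return out;
    }

    static List<String> problems(Reader in) throws IOException {
        Properties p = new Properties();
        p.load(in);
        List<String> errors = new ArrayList<>();
        List<String> toCheck = new ArrayList<>(split(p.getProperty("worker.list")));
        for (int i = 0; i < toCheck.size(); i++) {
            String w = toCheck.get(i);
            String type = p.getProperty("worker." + w + ".type");
            if (type == null) {
                errors.add(w + ": missing type");
            } else if (type.trim().equals("ajp13")) {
                if (p.getProperty("worker." + w + ".host") == null) errors.add(w + ": missing host");
                if (p.getProperty("worker." + w + ".port") == null) errors.add(w + ": missing port");
            } else if (type.trim().equals("lb")) {
                // Members of a load-balancer worker are checked as well.
                for (String member : split(p.getProperty("worker." + w + ".balance_workers"))) {
                    if (!toCheck.contains(member)) toCheck.add(member);
                }
            }
        }
        return errors;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java WorkersCheck /etc/httpd/conf.d/workers.properties
        try (Reader in = Files.newBufferedReader(Paths.get(args[0]))) {
            System.out.println(problems(in));
        }
    }
}
```

For the load-balancing configuration above it prints [], meaning every referenced worker is defined.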

Introduction: using the balancer-manager page of mod_proxy_balancer

<proxy balancer://lbcluster1>

BalancerMember http://192.168.20.1:8080 loadfactor=10 route=TomcatA

BalancerMember http://192.168.20.2:8080 loadfactor=10 route=TomcatB

ProxySet stickysession=ROUTEID

</proxy>

<VirtualHost *:80>

ServerName web1.lee.com

ProxyVia On

ProxyRequests Off

ProxyPreserveHost On

<Location /balancer-manager>

SetHandler balancer-manager

ProxyPass !

Order Deny,Allow

Allow from all

</Location>

<Proxy *>

Order Deny,Allow

Allow from all

</Proxy>

ProxyPass /status !

ProxyPass / balancer://lbcluster1/

ProxyPassReverse / balancer://lbcluster1/

<Location />

Order Deny,Allow

Allow from all

</Location>

</VirtualHost>

Test:

Session-replication cluster with DeltaManager

In server.xml, add the following inside the Host context:

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8">

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.0.1.7"

port="45564"

frequency="500"

dropTime="3000"/>

<!-- On node2, change address to 192.168.20.2 -->

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="192.168.20.1"

port="4000"

autoBind="100"

selectorTimeout="5000"

maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=""/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"

tempDir="/tmp/war-temp/"

deployDir="/tmp/war-deploy/"

watchDir="/tmp/war-listen/"

watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>
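channelSendOptions="8" is a bit mask. The decoder below spells out the flags; the values mirror the SEND_OPTIONS_* constants of org.apache.catalina.tribes.Channel as I recall them (1 = byte message, 2 = use ACK, 4 = synchronized ACK, 8 = asynchronous), so check the Channel javadoc of your Tomcat version. The class name SendOptions is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical decoder for a Tribes channelSendOptions value.
class SendOptions {
    // Flag values as recalled from org.apache.catalina.tribes.Channel.
    static final int BYTE_MESSAGE = 0x0001;
    static final int USE_ACK = 0x0002;
    static final int SYNCHRONIZED_ACK = 0x0004;
    static final int ASYNCHRONOUS = 0x0008;

    static List<String> decode(int options) {
        String[] names = {"BYTE_MESSAGE", "USE_ACK", "SYNCHRONIZED_ACK", "ASYNCHRONOUS"};
        int[] bits = {BYTE_MESSAGE, USE_ACK, SYNCHRONIZED_ACK, ASYNCHRONOUS};
        List<String> out = new ArrayList<>();
        for (int i = 0; i < bits.length; i++) {
            if ((options & bits[i]) != 0) out.add(names[i]);
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println("8 -> " + decode(8)); // the value used in the Cluster element
        System.out.println("6 -> " + decode(6));
    }
}
```

It prints 8 -> [ASYNCHRONOUS] and 6 -> [USE_ACK, SYNCHRONIZED_ACK]; the second combination waits for the peer's acknowledgement, trading throughput for stronger delivery guarantees.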

Configure our particular application to use the cluster feature defined above:

[root@node1 conf]# cp web.xml /data/webapps/WEB-INF/

[root@node1 conf]# vim /data/webapps/WEB-INF/web.xml

Add <distributable/> inside the <web-app> element

[root@node1 conf]# scp /data/webapps/WEB-INF/web.xml node2:/data/webapps/WEB-INF/

web.xml 100% 163KB 162.7KB/s 00:00
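<distributable/> only helps if everything the application stores in the session can be serialized, because DeltaManager ships attributes to the other members using Java serialization. A quick check is to push a value through ObjectOutputStream; ReplicableCheck is a hypothetical name for this sketch.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.util.Date;

// Hypothetical check: can this value be serialized, and therefore replicated?
class ReplicableCheck {

    static boolean serializes(Object value) {
        try (ObjectOutputStream out = new ObjectOutputStream(new ByteArrayOutputStream())) {
            out.writeObject(value);
            return true;
        } catch (IOException e) { // NotSerializableException is an IOException
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(serializes("lee.com"));    // the attribute the test page sets
        System.out.println(serializes(new Date()));
        System.out.println(serializes(new Object())); // java.lang.Object is not Serializable
    }
}
```

The String attribute set by the test page passes; an object whose class does not implement Serializable (or holds such a field) would not replicate.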

Check the log; a member has joined the cluster:

tail -100 /usr/local/tomcat/logs/catalina.out

01-Nov-2015 00:09:04.215 INFO [Membership-MemberAdded.] org.apache.catalina.ha.tcp.SimpleTcpCluster.memberAdded Replication member added:org.apache.catalina.tribes.membership.MemberImpl[tcp://{192, 168, 20, 2}:4000,{192, 168, 20, 2},4000, alive=1036, securePort=-1, UDP Port=-1, id={-40 -58 -73 -47 -114 -18 76 74 -81 -66 125 -30 -36 -78 -87 -23 }, payload={}, command={}, domain={}, ]

Testing shows that even though the load balancer switches hosts, the session stays the same.

Likewise, connecting to the back ends through mod_jk or over AJP also works, and so does using nginx as the reverse proxy; I won't repeat the details here.

Using msm (memcached-session-manager) to build a session server:

With the help of memcached:

yum install memcached

[root@node3 ~]# cat /etc/sysconfig/memcached

PORT="11211"

USER="memcached"

MAXCONN="1024"

CACHESIZE="64"

OPTIONS=""

Provide five Java libraries:

[root@node1 msm-1.8.3]# ls

memcached-session-manager-1.8.3.jar msm-javolution-serializer-1.8.3.jar memcached-session-manager-tc8-1.8.3.jar spymemcached-2.10.2.jar javolution-5.5.1.jar

Put them in the /usr/local/tomcat/lib directory on both Tomcat servers:

[root@node1 ~]# scp -r msm-1.8.3/ node2:/usr/local/tomcat/lib

The authenticity of host 'node2 (192.168.20.2)' can't be established.

RSA key fingerprint is d5:69:d0:fc:ce:90:14:14:6d:4c:52:82:53:a5:ed:0b.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node2,192.168.20.2' (RSA) to the list of known hosts.

root@node2's password:

spymemcached-2.10.2.jar 100% 429KB 428.8KB/s 00:00

memcached-session-manager-tc8-1.8.3.jar 100% 10KB 10.2KB/s 00:00

msm-javolution-serializer-1.8.3.jar 100% 69KB 69.4KB/s 00:00

memcached-session-manager-1.8.3.jar 100% 144KB 143.6KB/s 00:00

javolution-5.5.1.jar 100% 144KB 143.6KB/s 00:00

Note: scp -r msm-1.8.3/ copies the directory itself, so the jars end up in /usr/local/tomcat/lib/msm-1.8.3/. Tomcat's default common.loader only loads lib/*.jar, not subdirectories, so make sure the jars sit directly in lib (for example scp msm-1.8.3/*.jar node2:/usr/local/tomcat/lib/).

Edit server.xml and define the Context inside the Host context:

<Context path="" docBase="/data/webapps" reloadable="true">

<Manager className="de.javakaffee.web.msm.MemcachedBackupSessionManager"

memcachedNodes="n1:192.168.20.7:11211,n2:192.168.20.8:11211"

failoverNodes="n1"

requestUriIgnorePattern=".*\.(ico|png|gif|jpg|css|js)$"

transcoderFactoryClass="de.javakaffee.web.msm.serializer.javolution.JavolutionTranscoderFactory"

/>

<Valve className="org.apache.catalina.valves.RemoteAddrValve" deny="172\.16\.100\.100"/>

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="/data/logs"

prefix="web1_access_log" suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Context>
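For reference, the sketch below reads the two msm attributes the way this setup relies on them: memcachedNodes holds id:host:port entries, the ids listed in failoverNodes (here n1) are only used when the other nodes are unavailable, and msm appends the chosen node id to the session ID (for example <id>-n2, followed by .<jvmRoute> when one is set). These formats are assumptions based on my reading of the msm documentation; MsmNodes is a hypothetical helper, not part of msm, and the sample session ID is made up.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for reading msm settings; formats are assumptions.
class MsmNodes {

    // "n1:192.168.20.7:11211,n2:192.168.20.8:11211" -> {n1=192.168.20.7:11211, n2=...}
    static Map<String, String> parseNodes(String memcachedNodes) {
        Map<String, String> nodes = new LinkedHashMap<>();
        for (String entry : memcachedNodes.trim().split("[\\s,]+")) {
            String[] parts = entry.split(":");
            nodes.put(parts[0], parts[1] + ":" + parts[2]);
        }
        return nodes;
    }

    // Nodes that normally hold sessions: everything not listed as a failover node.
    static List<String> primaryNodes(String memcachedNodes, String failoverNodes) {
        List<String> failover = Arrays.asList(failoverNodes.trim().split("[\\s,]+"));
        List<String> primary = new ArrayList<>();
        for (String id : parseNodes(memcachedNodes).keySet()) {
            if (!failover.contains(id)) {
                primary.add(id);
            }
        }
        return primary;
    }

    // "<id>-n2.TomcatA" -> "n2": drop the jvmRoute suffix, then take the text after the last '-'.
    static String nodeIdOf(String sessionId) {
        int dot = sessionId.indexOf('.');
        String withoutRoute = dot >= 0 ? sessionId.substring(0, dot) : sessionId;
        int dash = withoutRoute.lastIndexOf('-');
        return dash >= 0 ? withoutRoute.substring(dash + 1) : null;
    }

    public static void main(String[] args) {
        String nodes = "n1:192.168.20.7:11211,n2:192.168.20.8:11211";
        System.out.println(parseNodes(nodes));
        System.out.println(primaryNodes(nodes, "n1"));
        System.out.println(nodeIdOf("602F7397FBE4D9932E59A9D0E52FE178-n2.TomcatA"));
    }
}
```

With the memcachedNodes and failoverNodes values above, primaryNodes returns [n2], so under these assumptions sessions are normally stored on 192.168.20.8 and only fall back to 192.168.20.7 when n2 is unavailable.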