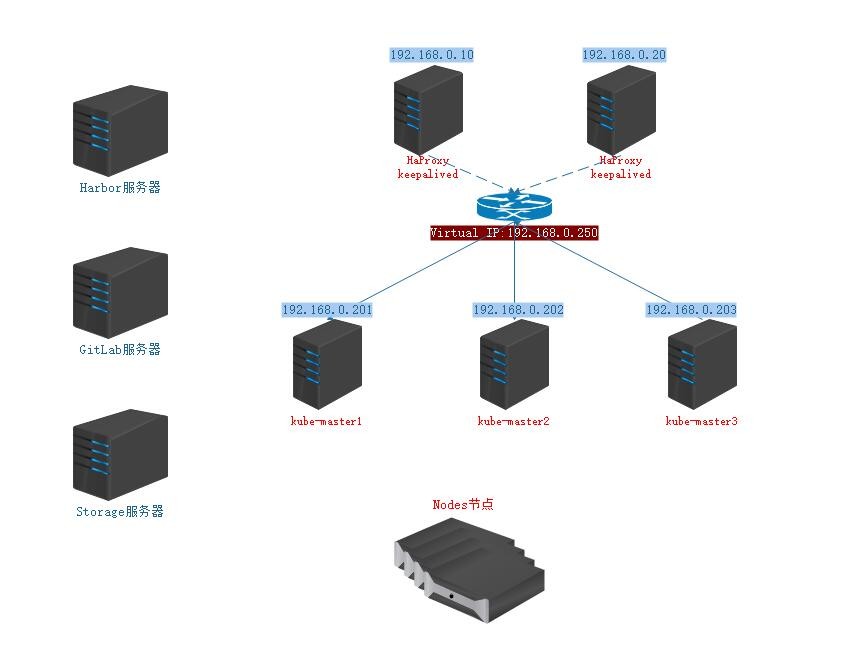

1. Architecture and Environment Design

1.1. Architecture Design

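- Deploy HAProxy as the endpoint through which the Kubernetes API is accessed

- Use Keepalived to expose that endpoint as a virtual IP, running it on several nodes for redundancy

- Deploy a highly available Kubernetes cluster with kubeadm, using the Keepalived virtual IP as the control-plane endpoint

- Use Prometheus for cluster monitoring, Grafana for dashboards, and Alertmanager for alerting

- Build the CI/CD system with Jenkins + GitLab + Harbor

- Use a dedicated domain for communication inside the Kubernetes cluster, and run a DNS server on the internal network to resolve it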

![Kubernetes series, part 2: Kubernetes architecture design and deployment]()

1.2. Environment Design

| Hostname | IP | Role |
|---|---|---|
| kube-master-01.sk8s.io | 192.168.0.201 | k8s master, haproxy + keepalived (virtual IP: 192.168.0.254) |
| kube-master-02.sk8s.io | 192.168.0.202 | k8s master, haproxy + keepalived (virtual IP: 192.168.0.254) |
| kube-master-03.sk8s.io | 192.168.0.203 | k8s master, DNS, Storage, GitLab, Harbor |
| kube-node-01.sk8s.io | 192.168.0.204 | node |
| kube-node-02.sk8s.io | 192.168.0.205 | node |

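2. Operating System Initialization

2.1. Disable SELinux

```bash
# Switch to permissive mode for the current boot
[root@localhost ~]# setenforce 0
# Disable SELinux permanently. On CentOS 7, /etc/sysconfig/selinux is a symlink to
# /etc/selinux/config, and "sed -i" on the symlink would replace it with a regular file,
# so only the real file is edited
[root@localhost ~]# sed -i 's#^SELINUX=.*#SELINUX=disabled#' /etc/selinux/config
```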
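After the next reboot, `getenforce` should print `Disabled`. To check the persisted setting without rebooting, a minimal sketch like the one below reads the `SELINUX=` value from the config file. It assumes bash and GNU grep; the function name `selinux_config_mode` is hypothetical and not part of the original setup.

```bash
#!/usr/bin/env bash
# Print the SELINUX= value persisted in an SELinux config file.
# Hypothetical helper; defaults to /etc/selinux/config.
selinux_config_mode() {
    local cfg="${1:-/etc/selinux/config}"
    # Match only SELINUX= (not SELINUXTYPE=) and keep the last assignment.
    grep -E '^SELINUX=' "$cfg" | tail -n 1 | cut -d= -f2-
}
```

On a host where the `sed` above has run, `selinux_config_mode` should print `disabled`.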

2.2. Disable Unneeded Services

```bash
# Takes effect from the next boot (the host is rebooted in 2.4)
[root@localhost ~]# systemctl disable firewalld postfix auditd kdump NetworkManager
```

2.3. Upgrade the Kernel

```bash
# Install the long-term-support kernel (kernel-lt) from ELRepo
[root@localhost ~]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
[root@localhost ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
[root@localhost ~]# yum -y --disablerepo=\* --enablerepo=elrepo-kernel install kernel-lt.x86_64 kernel-lt-devel.x86_64 kernel-lt-headers.x86_64
# Replace the stock kernel-tools packages with the kernel-lt versions
[root@localhost ~]# yum -y remove kernel-tools-libs.x86_64 kernel-tools.x86_64
[root@localhost ~]# yum -y --disablerepo=\* --enablerepo=elrepo-kernel install kernel-lt-tools.x86_64
# 'EOF' is quoted so that $(...) is written literally and evaluated later by grub2-mkconfig
[root@localhost ~]# cat <<'EOF' > /etc/default/grub
GRUB_TIMEOUT=5
GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"
GRUB_DEFAULT=0
GRUB_DISABLE_SUBMENU=true
GRUB_CMDLINE_LINUX="crashkernel=auto console=ttyS0 console=tty0 panic=5"
GRUB_DISABLE_RECOVERY="true"
GRUB_TERMINAL="serial console"
GRUB_TERMINAL_OUTPUT="serial console"
GRUB_SERIAL_COMMAND="serial --speed=9600 --unit=0 --word=8 --parity=no --stop=1"
EOF
# GRUB_DEFAULT=0 boots the first menu entry, which should be kernel-lt
# because grub2-mkconfig lists the highest kernel version first
[root@localhost ~]# grub2-mkconfig -o /boot/grub2/grub.cfg
```
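Because `GRUB_DEFAULT=0` picks the first menu entry, it is worth confirming that entry 0 really is the kernel-lt kernel before rebooting. The sketch below wraps the common awk one-liner for listing GRUB2 menu entries. It assumes each entry is written as `menuentry '<title>' ...` on one line, which holds with `GRUB_DISABLE_SUBMENU=true`; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# List GRUB2 menu entries as "<index> : <title>".
# Hypothetical helper; defaults to the BIOS grub.cfg path used above.
list_grub_entries() {
    local cfg="${1:-/boot/grub2/grub.cfg}"
    # Split on single quotes: $1 is "menuentry ", $2 is the entry title.
    awk -F"'" '$1 == "menuentry " { print i++ " : " $2 }' "$cfg"
}
```

Run `list_grub_entries` after `grub2-mkconfig`; after the reboot, `uname -r` shows the kernel that is actually running.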

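2.4. Use Consistent NIC Names (eth0, eth1, ...)

```bash
# net.ifnames=0 disables predictable interface names and ipv6.disable=1 turns off IPv6;
# rd.lvm.lv assumes the default CentOS LVM volume names.
# Note: this replaces the whole GRUB_CMDLINE_LINUX line written in 2.3,
# including its console= and panic= options
[root@localhost ~]# grub_config='GRUB_CMDLINE_LINUX="crashkernel=auto ipv6.disable=1 net.ifnames=0 rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet"'
[root@localhost ~]# sed -i "s#GRUB_CMDLINE_LINUX.*#${grub_config}#" /etc/default/grub
[root@localhost ~]# grub2-mkconfig -o /boot/grub2/grub.cfg
# ATTR{address} is the NIC's MAC address and NAME is the new interface name
[root@localhost ~]# cat /etc/udev/rules.d/70-persistent-net.rules
SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="ec:xx:yy:cc:b6:xx", NAME="eth0"
SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="ec:xx:yy:cc:b6:xx", NAME="eth1"
# Before rebooting, rename and update the interface config files under
# /etc/sysconfig/network-scripts/ to match (e.g. ifcfg-eth0 with DEVICE=eth0)
[root@localhost ~]# reboot
```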
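Writing the udev rules by hand is error-prone when a host has several NICs. A minimal sketch that prints one rule per `MAC NAME` pair is shown below; the MAC addresses can be read from `/sys/class/net/<iface>/address` before the reboot. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Read "MAC NAME" pairs from stdin and print one persistent-naming udev rule per pair.
# Hypothetical helper for /etc/udev/rules.d/70-persistent-net.rules.
gen_udev_net_rules() {
    local mac name
    while read -r mac name; do
        [ -z "$mac" ] && continue   # skip blank lines
        # sysfs reports MACs in lowercase and udev matching is case-sensitive
        mac=$(printf '%s' "$mac" | tr 'A-F' 'a-f')
        printf 'SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="%s", NAME="%s"\n' "$mac" "$name"
    done
}
```

For example, `printf 'ec:aa:bb:cc:b6:01 eth0\n' | gen_udev_net_rules >> /etc/udev/rules.d/70-persistent-net.rules`, where the MAC is a placeholder.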

2.5. Other Settings

```bash
[root@localhost ~]# yum -y install vim net-tools lrzsz lbzip2 bzip2 ntpdate curl wget psmisc
[root@localhost ~]# timedatectl set-timezone Asia/Shanghai
# Public DNS resolvers (AliDNS and 114DNS)
[root@localhost ~]# echo "nameserver 223.5.5.5" > /etc/resolv.conf
[root@localhost ~]# echo "nameserver 114.114.114.114" >> /etc/resolv.conf
[root@localhost ~]# echo 'LANG="en_US.UTF-8"' > /etc/locale.conf
[root@localhost ~]# echo 'export LANG="en_US.UTF-8"' >> /etc/profile.d/custom.sh
# Raise the per-user process and open-file limits
[root@localhost ~]# cat >> /etc/security/limits.conf <<EOF
* soft nproc 65530
* hard nproc 65530
* soft nofile 65530
* hard nofile 65530
EOF
# kubelet refuses to run with swap enabled by default: turn swap off now and at boot
[root@localhost ~]# swapoff -a
[root@localhost ~]# sed -i '/\sswap\s/s/^/#/' /etc/fstab
# The net.bridge.* keys only exist once br_netfilter is loaded; also load it at boot
[root@localhost ~]# modprobe br_netfilter
[root@localhost ~]# echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
[root@localhost ~]# cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
net.ipv4.tcp_tw_recycle=0
vm.swappiness=0
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
fs.file-max=52706963
fs.nr_open=52706963
net.ipv4.tcp_sack = 0
EOF
[root@localhost ~]# sysctl -p /etc/sysctl.d/k8s.conf
```
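`sysctl -p` complains about unknown keys, but it is still useful to confirm later (for example after a reboot) that every value in `k8s.conf` is live. The sketch below compares each `key = value` line with the matching file under `/proc/sys`. It assumes single-token values, which is true for every key in this file; the function name is hypothetical, and the optional second argument only exists so the check can be tried against a scratch directory.

```bash
#!/usr/bin/env bash
# Compare each "key = value" line of a sysctl drop-in with the live value under /proc/sys.
# Prints MISSING/DIFF lines and returns 1 if anything does not match.
# Hypothetical helper; assumes every value is a single token.
check_sysctl_file() {
    local file="$1" root="${2:-/proc/sys}" key want have path rc=0
    while IFS='=' read -r key want; do
        key=$(printf '%s' "$key" | tr -d '[:space:]')
        want=$(printf '%s' "$want" | tr -d '[:space:]')
        # Skip blank lines and comments (sysctl.conf allows '#' and ';')
        case "$key" in ''|\#*|\;*) continue ;; esac
        path="$root/$(printf '%s' "$key" | tr . /)"
        if [ ! -r "$path" ]; then
            echo "MISSING $key"; rc=1; continue
        fi
        have=$(tr -d '[:space:]' < "$path")
        if [ "$have" != "$want" ]; then
            echo "DIFF $key: want $want, have $have"; rc=1
        fi
    done < "$file"
    return "$rc"
}
```

On a configured host, `check_sysctl_file /etc/sysctl.d/k8s.conf && echo OK` prints only `OK`.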

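2.6. Configure hosts.allow / hosts.deny

```bash
# Allow sshd only from 192.168.0.0/24; hosts.allow is checked before hosts.deny
[root@localhost ~]# echo "sshd:192.168.0." > /etc/hosts.allow
[root@localhost ~]# echo "sshd:ALL" > /etc/hosts.deny
```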

2.7. SSH Configuration

```bash
# Create an admin user and generate an SSH key pair. Download the private key and do not
# keep it on the server; copy the public key to ~/.ssh/authorized_keys on the other servers
[root@localhost ~]# useradd huyuan
[root@localhost ~]# echo "sycx123" | passwd --stdin huyuan
[root@localhost ~]# su - huyuan
[huyuan@localhost ~]$ ssh-keygen -b 4096
[huyuan@localhost ~]$ mv ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
# Back to root
[huyuan@localhost ~]$ exit
# Disable reverse DNS lookups to speed up SSH logins
[root@localhost ~]# sed -i 's/^#UseDNS.*/UseDNS no/' /etc/ssh/sshd_config
# Disable password authentication
[root@localhost ~]# sed -i 's/^PasswordAuthentication.*/PasswordAuthentication no/' /etc/ssh/sshd_config
# Disable root login
[root@localhost ~]# sed -i 's/#PermitRootLogin.*/PermitRootLogin no/' /etc/ssh/sshd_config
# Only allow huyuan to log in; separate multiple users with spaces
[root@localhost ~]# echo "AllowUsers huyuan" >> /etc/ssh/sshd_config
# Validate the config before restarting; keep the current session open until a new login works
[root@localhost ~]# sshd -t && systemctl restart sshd
```
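Appending with `echo ... >>` only works if no earlier line sets the same keyword: sshd_config(5) states that for each keyword the first obtained value is used. `sshd -T` prints the effective configuration on the host itself. As a lightweight offline check, the sketch below prints the first uncommented value of a keyword. It does not handle `Match` blocks or `Include` directives, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Print the effective (first uncommented) value of an sshd_config keyword.
# Keywords are case-insensitive; Match blocks and Include are not handled.
# Hypothetical helper.
sshd_effective() {
    local key="$1" file="${2:-/etc/ssh/sshd_config}"
    awk -v k="$key" '
        /^[[:space:]]*#/ { next }              # skip comment lines
        tolower($1) == tolower(k) {            # first matching keyword wins
            $1 = ""; sub(/^[[:space:]]+/, ""); print; exit
        }' "$file"
}
```

For example, `sshd_effective PermitRootLogin` should print `no` after the edits above.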

2.8. Set the Same root Password on All Hosts

```bash
[root@localhost ~]# echo "xxxxx" | passwd --stdin root
```

2.9. Set Hostnames

```bash
# Run on every host with its own name from the table in 1.2 (kube-master-01 shown here);
# the /etc/hosts entries are the same on all hosts
[root@localhost ~]# hostnamectl set-hostname kube-master-01.sk8s.io
[root@localhost ~]# echo "192.168.0.201 kube-master-01.sk8s.io" >> /etc/hosts
[root@localhost ~]# echo "192.168.0.202 kube-master-02.sk8s.io" >> /etc/hosts
[root@localhost ~]# echo "192.168.0.203 kube-master-03.sk8s.io" >> /etc/hosts
[root@localhost ~]# echo "192.168.0.204 kube-node-01.sk8s.io" >> /etc/hosts
[root@localhost ~]# echo "192.168.0.205 kube-node-02.sk8s.io" >> /etc/hosts
```
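Running the `echo ... >> /etc/hosts` lines twice duplicates the entries. A minimal idempotent sketch that builds the entries from the host table in 1.2 and appends only IPs not already present is shown below. Adding the short hostname as an alias is an assumption beyond the original setup, and both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Turn "IP FQDN" lines (the table in 1.2) into /etc/hosts entries.
# Assumption: the short hostname is added as an alias.
gen_hosts_entries() {
    local ip fqdn
    while read -r ip fqdn; do
        [ -z "$ip" ] && continue
        printf '%s %s %s\n' "$ip" "$fqdn" "${fqdn%%.*}"
    done
}

# Append entries read from stdin unless their IP is already in the hosts file.
append_missing_hosts() {
    local hosts="${1:-/etc/hosts}" line ip
    while read -r line; do
        ip=${line%% *}
        awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$hosts" \
            || echo "$line" >> "$hosts"
    done
}
```

For example, `printf '192.168.0.201 kube-master-01.sk8s.io\n192.168.0.204 kube-node-01.sk8s.io\n' | gen_hosts_entries | append_missing_hosts` can be re-run safely.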

3. Initialize the Kubernetes Cluster

3.1. Install and Configure Docker (All Nodes)

```bash
[root@kube-master-01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2
[root@kube-master-01 ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
[root@kube-master-01 ~]# yum -y install docker-ce-18.09.6 docker-ce-cli-18.09.6
# Use the systemd cgroup driver (as recommended for kubelet); "graph" (the older name of
# "data-root") moves Docker's data directory to /opt/docker
[root@kube-master-01 ~]# mkdir -p /etc/docker
[root@kube-master-01 ~]# cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://c7i79lkw.mirror.aliyuncs.com"],
  "insecure-registries": ["122.228.208.72:9000"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "graph": "/opt/docker",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
[root@kube-master-01 ~]# systemctl enable docker
[root@kube-master-01 ~]# systemctl start docker
```

3.2. Configure HAProxy as the API Server Proxy

```bash
# Install and configure on kube-master-01 and kube-master-02
[root@kube-master-01 ~]# yum -y install haproxy
[root@kube-master-01 ~]# cat > /etc/haproxy/haproxy.cfg << EOF
global
    log 127.0.0.1 local2 notice
    chroot /var/lib/haproxy
    stats socket /var/run/haproxy.sock mode 660 level admin
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    nbproc 1

defaults
    log global
    timeout connect 5000
    timeout client 10m
    timeout server 10m

listen admin_stats
    bind 0.0.0.0:8000
    mode http
    stats refresh 30s
    stats uri /status
    stats realm welcome login\ Haproxy
    stats auth admin:tuitui99
    stats hide-version
    stats admin if TRUE

# Listen on 8443 rather than 6443, because kube-apiserver already uses 6443 on these hosts
listen kube-master
    bind 0.0.0.0:8443
    mode tcp
    option tcplog
    balance source
    server 192.168.0.201 192.168.0.201:6443 check inter 2000 fall 2 rise 2 weight 1
    server 192.168.0.202 192.168.0.202:6443 check inter 2000 fall 2 rise 2 weight 1
    server 192.168.0.203 192.168.0.203:6443 check inter 2000 fall 2 rise 2 weight 1
EOF
[root@kube-master-01 ~]# systemctl enable haproxy
[root@kube-master-01 ~]# systemctl start haproxy
```
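`haproxy -c -f /etc/haproxy/haproxy.cfg` checks the configuration file, and the stats page configured above is served at `http://<host>:8000/status`. The backends stay DOWN until the API servers exist, but the 8443 listener itself should already accept connections. A minimal probe using bash's `/dev/tcp` pseudo-device is sketched below; it assumes bash and coreutils `timeout`, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Succeed if a TCP connection to HOST:PORT opens within 2 seconds.
# Relies on bash's /dev/tcp redirection, so it must run under bash (not sh).
# Hypothetical helper.
tcp_port_open() {
    local host="$1" port="$2"
    timeout 2 bash -c ": < /dev/tcp/$host/$port" 2>/dev/null
}
```

For example, `tcp_port_open 192.168.0.201 8443 && echo "haproxy is listening"`; once Keepalived is running, the same check against `192.168.0.254 8443` exercises the virtual IP.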

3.3. Configure Keepalived for HAProxy Failover (Master/Backup)

```bash
# Install and configure on kube-master-01 and kube-master-02 (this file is for kube-master-01)
[root@kube-master-01 ~]# yum -y install keepalived
[root@kube-master-01 ~]# cp /etc/keepalived/keepalived.conf{,.bak}
[root@kube-master-01 ~]# cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    # Unique identifier of this node (192.168.0.201)
    router_id master-192.168.0.201
    # User that runs the notify_master / notify_backup / notify_fault scripts
    script_user root
}

vrrp_script check-haproxy {
    # Check that an haproxy process exists. keepalived may run the script without a shell,
    # in which case a redirection such as "&>/dev/null" would be passed to killall as an
    # extra argument, so none is used
    script "/bin/killall -0 haproxy"
    interval 5
    # Lower this node's priority by 30 while the check fails
    weight -30
    user root
}

vrrp_instance k8s {
    state MASTER
    # Priority: 120 on the master, 100 on the backup
    priority 120
    dont_track_primary
    interface eth0
    virtual_router_id 80
    advert_int 3
    track_script {
        check-haproxy
    }
    authentication {
        auth_type PASS
        auth_pass tuitui99
    }
    virtual_ipaddress {
        # Virtual IP
        192.168.0.254
    }
    # For the notify script, see https://blog.51cto.com/hongchen99/2298896
    notify_master "/bin/python /etc/keepalived/notify_keepalived.py master"
    notify_backup "/bin/python /etc/keepalived/notify_keepalived.py backup"
    notify_fault "/bin/python /etc/keepalived/notify_keepalived.py fault"
}
EOF
# notify_keepalived.py has to be created first (see the link above)
[root@kube-master-01 ~]# chmod +x /etc/keepalived/notify_keepalived.py
[root@kube-master-01 ~]# systemctl enable keepalived
[root@kube-master-01 ~]# systemctl start keepalived
```
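On kube-master-02 the same file is used with `state BACKUP`, `priority 100` and its own `router_id`. With `weight -30`, a failing haproxy check drops the master from 120 to 90, below the backup's 100, so the virtual IP moves to kube-master-02; `ip addr show eth0` shows which node currently holds 192.168.0.254. A minimal sketch that derives the backup file from the master's with sed is shown below. The function name, and the `router_id` value for kube-master-02 (following the master's naming pattern), are assumptions.

```bash
#!/usr/bin/env bash
# Read the MASTER keepalived.conf on stdin and print the BACKUP variant:
# only router_id, state and priority are changed.
# Hypothetical helper; $1 is the new router_id (must not contain "/").
make_backup_conf() {
    local router_id="$1"
    sed -e "s/^\([[:space:]]*router_id\).*/\1 ${router_id}/" \
        -e 's/^\([[:space:]]*state\)[[:space:]]\{1,\}MASTER/\1 BACKUP/' \
        -e 's/^\([[:space:]]*priority\)[[:space:]]\{1,\}120/\1 100/'
}
```

For example, on kube-master-02: `make_backup_conf master-192.168.0.202 < keepalived.conf.master > /etc/keepalived/keepalived.conf`, where `keepalived.conf.master` is a copy of the file above.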

3.4. Configure HAProxy and Keepalived Logging

```bash
# HAProxy logging (facility local2, as set in haproxy.cfg)
[root@kube-master-01 ~]# echo "local2.* /var/log/haproxy.log" >> /etc/rsyslog.conf
# Keepalived logging: -S 0 sends its logs to syslog facility local0
[root@kube-master-01 ~]# cp /etc/sysconfig/keepalived{,.bak}
[root@kube-master-01 ~]# echo 'KEEPALIVED_OPTIONS="-D -d -S 0"' > /etc/sysconfig/keepalived
[root@kube-master-01 ~]# echo "local0.* /var/log/keepalived.log" >> /etc/rsyslog.conf
# HAProxy sends its logs over UDP, so rsyslog's UDP listener must be enabled:
# uncomment these two lines in /etc/rsyslog.conf
[root@kube-master-01 ~]# grep -E 'imudp|UDPServerRun' /etc/rsyslog.conf
$ModLoad imudp
$UDPServerRun 514
[root@kube-master-01 ~]# systemctl restart rsyslog
[root@kube-master-01 ~]# systemctl restart haproxy
[root@kube-master-01 ~]# systemctl restart keepalived
```
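Instead of editing `/etc/rsyslog.conf` by hand, the two UDP input lines can be uncommented with sed. The sketch assumes the stock CentOS 7 file, where they appear as `#$ModLoad imudp` and `#$UDPServerRun 514`; the function name is hypothetical. Running it more than once is harmless.

```bash
#!/usr/bin/env bash
# Uncomment rsyslog's UDP input lines in place (idempotent).
# Hypothetical helper; defaults to /etc/rsyslog.conf.
enable_rsyslog_udp() {
    local conf="${1:-/etc/rsyslog.conf}"
    sed -i -e 's/^#\(\$ModLoad imudp\)/\1/' \
           -e 's/^#\(\$UDPServerRun 514\)/\1/' "$conf"
}
```

Run `enable_rsyslog_udp` before `systemctl restart rsyslog`.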

3.5. Install kubelet, kubeadm and kubectl (All Nodes)

```bash
[root@kube-master-01 ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
# Pin all three packages to the cluster version (v1.14.0)
[root@kube-master-01 ~]# yum install -y kubelet-1.14.0 kubeadm-1.14.0 kubectl-1.14.0
# kubelet keeps restarting until kubeadm init/join gives it a configuration; this is expected
[root@kube-master-01 ~]# systemctl enable kubelet
```

3.6. Initialize the Kubernetes Cluster

```bash
# Load the IPVS kernel modules needed by kube-proxy in ipvs mode (on every node)
[root@kube-master-01 ~]# cat > /etc/sysconfig/modules/ipvs.modules << EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
# On kernels 4.19 and later this module is named nf_conntrack
modprobe -- nf_conntrack_ipv4
EOF
[root@kube-master-01 ~]# chmod +x /etc/sysconfig/modules/ipvs.modules
[root@kube-master-01 ~]# sh /etc/sysconfig/modules/ipvs.modules
[root@kube-master-01 ~]# lsmod | grep ip_vs
# Install ipvsadm to inspect and manage IPVS
[root@kube-master-01 ~]# yum -y install ipvsadm
# Write the kubeadm configuration file
[root@kube-master-01 ~]# cat > kubeadm-init.yaml << EOF
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.14.0
# 192.168.0.254 is the virtual IP and 8443 the port HAProxy listens on
controlPlaneEndpoint: "192.168.0.254:8443"
# Registry to pull the control-plane images from
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
apiServer:
  certSANs:
  - "kube-master-01.sk8s.io"
  - "kube-master-02.sk8s.io"
  - "kube-master-03.sk8s.io"
  - "192.168.0.201"
  - "192.168.0.202"
  - "192.168.0.203"
  - "192.168.0.254"
  - "127.0.0.1"
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
EOF
# Initialize the cluster; --experimental-upload-certs uploads the control-plane certificates
# so that the other masters can fetch them when they join
[root@kube-master-01 ~]# kubeadm init --config=kubeadm-init.yaml --experimental-upload-certs
# Create a non-root user to manage the cluster
[root@kube-master-01 ~]# groupadd -g 5000 kubelet
[root@kube-master-01 ~]# useradd -c "kubernetes-admin-user" -G docker -u 5000 -g 5000 kubelet
[root@kube-master-01 ~]# echo "kubelet" | passwd --stdin kubelet
# Give that user the cluster admin kubeconfig
[root@kube-master-01 ~]# mkdir /home/kubelet/.kube
[root@kube-master-01 ~]# cp -i /etc/kubernetes/admin.conf /home/kubelet/.kube/config
[root@kube-master-01 ~]# chown -R kubelet:kubelet /home/kubelet/.kube
```
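`lsmod | grep ip_vs` shows what is loaded but not what is missing. The sketch below reads `lsmod` output and prints each expected module that is absent, so an empty result means the IPVS prerequisites are in place. The default list mirrors `ipvs.modules` above (pass `nf_conntrack` instead of `nf_conntrack_ipv4` on 4.19+ kernels); the function name is hypothetical. After `kubeadm init`, `ipvsadm -Ln` should list virtual servers for the cluster Services.

```bash
#!/usr/bin/env bash
# Read `lsmod` output on stdin and print every expected module that is not loaded.
# Arguments override the default module list. Hypothetical helper.
missing_ipvs_modules() {
    local mods="${*:-ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4}" loaded m
    # Skip the "Module Size Used by" header and keep only module names.
    loaded=$(awk 'NR > 1 { print $1 }')
    for m in $mods; do
        printf '%s\n' "$loaded" | grep -qxF "$m" || echo "$m"
    done
}
```

Usage: `lsmod | missing_ipvs_modules` on each node; no output means nothing is missing.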

3.7. Update CoreDNS

```bash
[root@kube-master-01 ~]# su - kubelet
# Run 3 CoreDNS replicas, preferably on different hosts; the Deployment reuses the
# "coredns" ConfigMap and ServiceAccount created by kubeadm
[kubelet@kube-master-01 ~]$ cat > coredns.yaml << EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: kube-dns
  name: coredns
  namespace: kube-system
spec:
  replicas: 3
  selector:
    matchLabels:
      k8s-app: kube-dns
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values:
                  - kube-dns
              topologyKey: kubernetes.io/hostname
      containers:
      - args:
        - -conf
        - /etc/coredns/Corefile
        image: coredns/coredns:1.5.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        name: coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /etc/coredns
          name: config-volume
          readOnly: true
      dnsPolicy: Default
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      serviceAccount: coredns
      serviceAccountName: coredns
      terminationGracePeriodSeconds: 30
      tolerations:
      - key: CriticalAddonsOnly
        operator: Exists
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
      - effect: NoSchedule
        key: node.kubernetes.io/not-ready
      volumes:
      - configMap:
          defaultMode: 420
          items:
          - key: Corefile
            path: Corefile
          name: coredns
        name: config-volume
EOF
[kubelet@kube-master-01 ~]$ kubectl apply -f coredns.yaml
```
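The anti-affinity rule is only *preferred*, so the scheduler may still place two replicas on one host. With five schedulable nodes (the Deployment tolerates the master taint), the three replicas should normally be spread out. A minimal sketch that checks this from a list of node names is shown below; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Read one node name per line on stdin; fail if any node runs more than one replica.
# Hypothetical helper.
replicas_spread_ok() {
    local dups
    dups=$(sort | uniq -d)
    if [ -n "$dups" ]; then
        echo "nodes with more than one replica: $dups" >&2
        return 1
    fi
}
```

Usage: `kubectl -n kube-system get pods -l k8s-app=kube-dns -o custom-columns=NODE:.spec.nodeName --no-headers | replicas_spread_ok`.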

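3.8. Join the Other Nodes to the Cluster

```bash
# Run as root. The token, CA certificate hash and certificate key come from the
# "kubeadm init" output on kube-master-01; the hash is the same for masters and nodes
# Join the other masters (kube-master-02, kube-master-03)
[root@kube-master-02 ~]# kubeadm join 192.168.0.254:8443 --token h4n7uy.5qibssxu27vveko5 \
    --discovery-token-ca-cert-hash sha256:a27738a4457d57ee611dd1c0281aeaabd32bc834797fe307980b95755b052e41 \
    --experimental-control-plane --certificate-key eb37e5810fe300a42c5b610117ad57acf682a92da928cf94435a135aa338bc12
# Join the worker nodes (kube-node-01, kube-node-02)
[root@kube-node-01 ~]# kubeadm join 192.168.0.254:8443 --token h4n7uy.5qibssxu27vveko5 \
    --discovery-token-ca-cert-hash sha256:a27738a4457d57ee611dd1c0281aeaabd32bc834797fe307980b95755b052e41
```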
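The bootstrap token printed by `kubeadm init` expires after 24 hours by default, and the certificates uploaded with `--experimental-upload-certs` are deleted after two hours. For later joins, `kubeadm token create --print-join-command` prints a fresh worker join command, and on v1.14 `kubeadm init phase upload-certs --experimental-upload-certs` should re-upload the certificates and print a new certificate key. The CA hash does not change; it can be recomputed from the CA certificate with the openssl pipeline given in the kubeadm documentation, wrapped here in a hypothetical function.

```bash
#!/usr/bin/env bash
# Print the value for --discovery-token-ca-cert-hash (without the "sha256:" prefix):
# the SHA-256 of the CA certificate's DER-encoded public key.
# Hypothetical helper; defaults to the kubeadm CA path.
ca_cert_hash() {
    local crt="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -noout -in "$crt" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}
```

On kube-master-01, `echo "sha256:$(ca_cert_hash)"` prints the value to pass to `kubeadm join`.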