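Handwritten Digit Recognition with a Neural Network (MNIST)

Preface

This post applies the neural network we implemented earlier to handwritten digit recognition, using the classic MNIST dataset.

Download link: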

http://www.iro.umontreal.ca/~lisa/deep/data/mnist/mnist.pkl.gz

Official site: http://yann.lecun.com/exdb/mnist/

Loading the data

Here we simply use a loader provided by someone else.

```python
# -*- coding: utf-8 -*-
# @Time    : 2017/11/24 10:27 AM
# @Author  : SkullFang
# @File    : mnist_loader.py
# @Software: PyCharm
"""
mnist_loader
~~~~~~~~~~~~

A library to load the MNIST image data.  For details of the data
structures that are returned, see the doc strings for ``load_data``
and ``load_data_wrapper``.  In practice, ``load_data_wrapper`` is the
function usually called by our neural network code.
"""

#### Libraries
# Standard library
import gzip
import pickle

# Third-party libraries
import numpy as np


def load_data():
    """Return the MNIST data as a tuple containing the training data,
    the validation data, and the test data.

    The ``training_data`` is returned as a tuple with two entries.
    The first entry contains the actual training images.  This is a
    numpy ndarray with 50,000 entries.  Each entry is, in turn, a
    numpy ndarray with 784 values, representing the 28 * 28 = 784
    pixels in a single MNIST image.

    The second entry in the ``training_data`` tuple is a numpy ndarray
    containing 50,000 entries.  Those entries are just the digit
    values (0...9) for the corresponding images contained in the first
    entry of the tuple.

    The ``validation_data`` and ``test_data`` are similar, except
    each contains only 10,000 images.

    This is a nice data format, but for use in neural networks it's
    helpful to modify the format of the ``training_data`` a little.
    That's done in the wrapper function ``load_data_wrapper()``, see
    below.
    """
    # The pickle was written by Python 2, so Python 3 must decode it as latin1
    with gzip.open('../data/mnist.pkl.gz', 'rb') as f:
        training_data, validation_data, test_data = pickle.load(
            f, encoding='latin1')
    return (training_data, validation_data, test_data)


def load_data_wrapper():
    """Return a tuple containing ``(training_data, validation_data,
    test_data)``.  Based on ``load_data``, but the format is more
    convenient for use in our implementation of neural networks.

    In particular, ``training_data`` is a list containing 50,000
    2-tuples ``(x, y)``.  ``x`` is a 784-dimensional numpy.ndarray
    containing the input image.  ``y`` is a 10-dimensional
    numpy.ndarray representing the unit vector corresponding to the
    correct digit for ``x``.

    ``validation_data`` and ``test_data`` are lists containing 10,000
    2-tuples ``(x, y)``.  In each case, ``x`` is a 784-dimensional
    numpy.ndarray containing the input image, and ``y`` is the
    corresponding classification, i.e., the digit values (integers)
    corresponding to ``x``.

    Obviously, this means we're using slightly different formats for
    the training data and the validation / test data.  These formats
    turn out to be the most convenient for use in our neural network
    code."""
    tr_d, va_d, te_d = load_data()
    training_inputs = [np.reshape(x, (784, 1)) for x in tr_d[0]]
    training_results = [vectorized_result(y) for y in tr_d[1]]
    # list(...) because zip returns a one-shot iterator in Python 3
    training_data = list(zip(training_inputs, training_results))
    validation_inputs = [np.reshape(x, (784, 1)) for x in va_d[0]]
    validation_data = list(zip(validation_inputs, va_d[1]))
    test_inputs = [np.reshape(x, (784, 1)) for x in te_d[0]]
    test_data = list(zip(test_inputs, te_d[1]))
    return (training_data, validation_data, test_data)


def vectorized_result(j):
    """Return a 10-dimensional unit vector with a 1.0 in the jth
    position and zeroes elsewhere.  This is used to convert a digit
    (0...9) into a corresponding desired output from the neural
    network."""
    e = np.zeros((10, 1))
    e[j] = 1.0
    return e


def load_data_wrapper2():
    """Like ``load_data_wrapper``, but return the training inputs and the
    one-hot training labels as two separate lists:
    ``(training_inputs, training_results, validation_data, test_data)``.
    ``validation_data`` and ``test_data`` have the same format as in
    ``load_data_wrapper``."""
    tr_d, va_d, te_d = load_data()
    training_inputs = [np.reshape(x, (784, 1)) for x in tr_d[0]]
    training_results = [vectorized_result(y) for y in tr_d[1]]
    validation_inputs = [np.reshape(x, (784, 1)) for x in va_d[0]]
    validation_data = list(zip(validation_inputs, va_d[1]))
    test_inputs = [np.reshape(x, (784, 1)) for x in te_d[0]]
    test_data = list(zip(test_inputs, te_d[1]))
    return (training_inputs, training_results, validation_data, test_data)
```

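Dataset analysis

The loader above already documents the data format clearly, so after importing it we simply call it:

```python
import mnist_loader

training_data, validation_data, test_data = mnist_loader.load_data_wrapper()
```

Input data

Each sample consists of a 28 × 28 image and a label.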

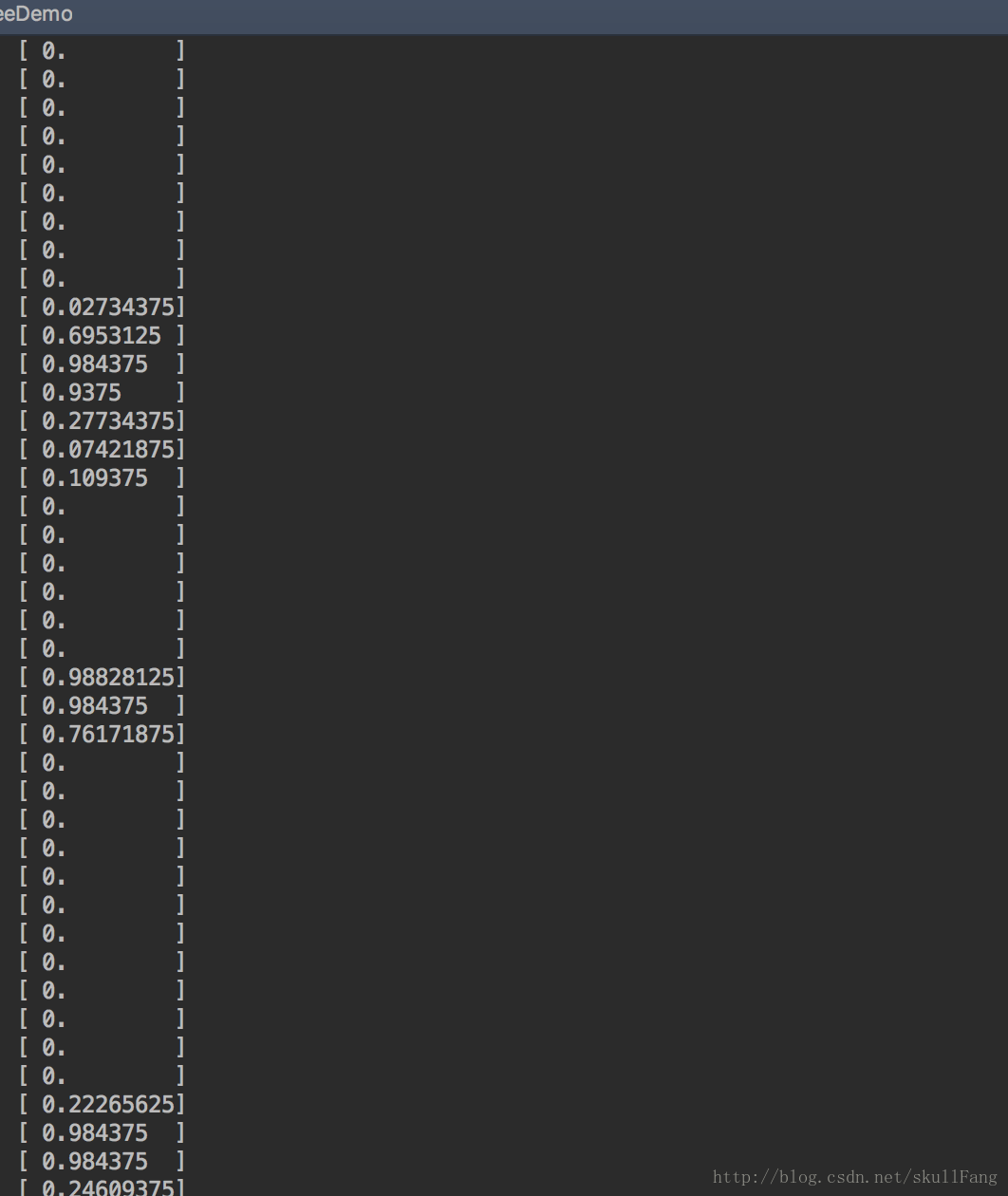

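First, look at the input x.

This is a fragment of the input. It is easy to see that every pixel is one input, giving 28 × 28 = 784 inputs in total.

So our **input layer** must have exactly **784** neurons, one neuron per input.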
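To make the one-neuron-per-pixel mapping concrete, here is a minimal sketch that flattens a 28 × 28 image row by row into the (784, 1) column vector the network receives. The random `image` array is a hypothetical stand-in for a real digit; mnist.pkl.gz already stores each image as a flat vector of 784 values, so the loader itself only needs the final `np.reshape(x, (784, 1))`.

```python
import numpy as np

# Hypothetical 28x28 grayscale image with pixel intensities in [0, 1]
rng = np.random.default_rng(0)
image = rng.random((28, 28))

# One input neuron per pixel: flatten row by row into a (784, 1) column vector
x = np.reshape(image, (784, 1))

print(x.shape)  # (784, 1)
# Pixel (row r, column c) lands at index r * 28 + c of the input vector
r, c = 5, 17
print(x[r * 28 + c, 0] == image[r, c])  # True
```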

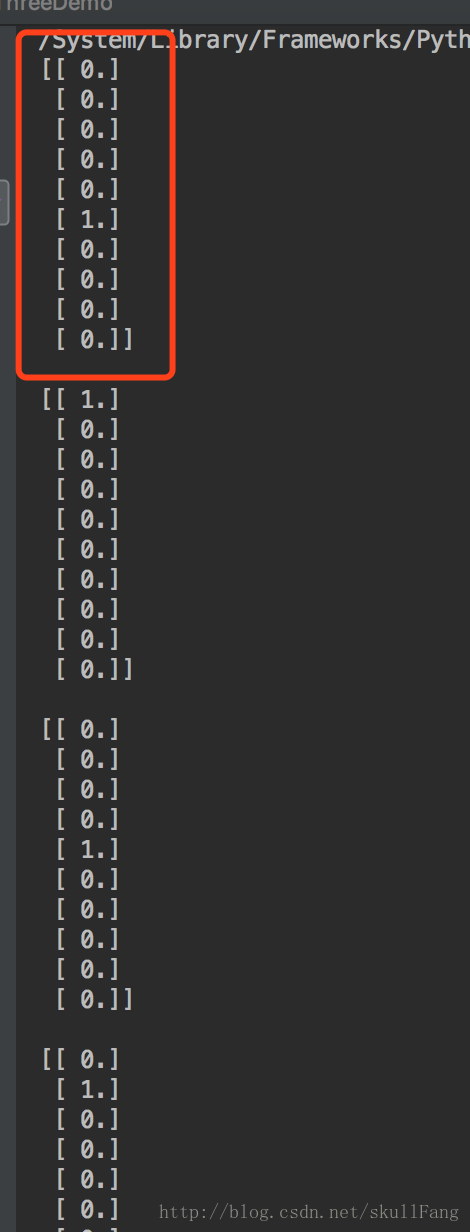

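Next, look at the label y.

This group is one label. It is a 10-dimensional vector containing exactly one 1: the ten dimensions stand for the digits 0, 1, 2, 3, 4, 5, 6, 7, 8 and 9, and the position of the 1 tells us which digit the image shows. For example, the label in the figure above should correspond to 5.

So our output layer should have **10 neurons**, one output per digit, and the neuron with the largest output gives the most likely prediction.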
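The encoding and decoding of labels can be sketched in a few lines. `vectorized_result` is copied from the loader above; the `output` values are hypothetical activations of the 10 output neurons for one image, chosen only for illustration.

```python
import numpy as np


def vectorized_result(j):
    """One-hot (10, 1) column vector with 1.0 at position j, as in mnist_loader."""
    e = np.zeros((10, 1))
    e[j] = 1.0
    return e


y = vectorized_result(5)
print(y.ravel())  # [0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]

# Hypothetical activations of the 10 output neurons for one image
output = np.array([0.02, 0.01, 0.05, 0.10, 0.03,
                   0.81, 0.04, 0.02, 0.06, 0.01]).reshape(10, 1)
# The predicted digit is the index of the largest activation
prediction = int(np.argmax(output))
print(prediction)  # 5
```

Taking `np.argmax` of the output is exactly what the network's `evaluate` method does when counting correct predictions.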

**The hidden layers can be designed freely...**

<h1>随机梯度下降</h1>

在真的训练模型的时候需要说一下随机梯度下降的算法。为什么要介绍这个呢?

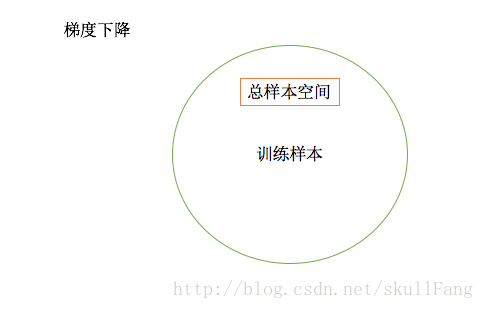

如果我们直接用梯度下降的话。因为每次训练要算出所有维的w,b然后进行计算更新,这个计算量是十分庞大的。如果维度太大,并且一定要使用sigmod激励函数的话。还有可能导致。

```
RuntimeWarning: overflow encountered in exp
  return 1.0/(1.0+np.exp(-z))
```

这是一个比较严重的问题。于是就有了随机梯度下降,其实随机梯度下降思路很简单。

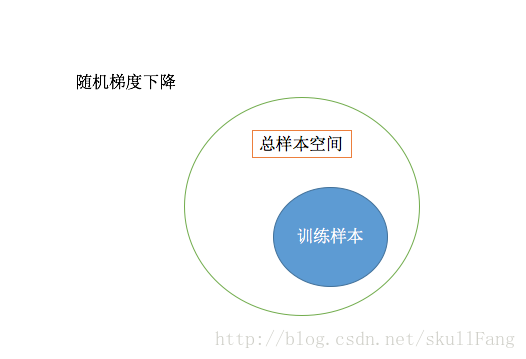

梯度下降每次会计算出所有的训练样本从而更新w和b。而随机梯度下降会每次从训练样本中抽取部分进行训练更新w,b。如图。

随机梯度下降

如图随机梯度下降在每一轮学习的时候只会随机挑选总样本空间的一部分进行训练。这一部分就是一个batch size。
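The per-epoch splitting step can be sketched as follows, using the same slicing as the `SGD` method later in this post: shuffle, then cut the data into consecutive slices of `mini_batch_size`. The index list is a stand-in for the real `(x, y)` pairs, and n = 50,000 and a batch size of 10 are assumptions matching the MNIST training split and the value used later. With these numbers one epoch performs 5,000 weight updates instead of one.

```python
import random

n = 50000              # training-set size (the MNIST training split)
mini_batch_size = 10   # the batch size used later in this post

# Stand-in for training_data: only the sample indices matter here
training_data = list(range(n))

random.seed(0)
random.shuffle(training_data)  # a new random order at the start of each epoch
mini_batches = [training_data[k:k + mini_batch_size]
                for k in range(0, n, mini_batch_size)]

print(len(mini_batches))     # 5000 weight updates in one epoch
print(len(mini_batches[0]))  # 10 samples per update
# Every sample is still visited exactly once per epoch
print(sorted(i for batch in mini_batches for i in batch) == list(range(n)))  # True
```

If n were not a multiple of `mini_batch_size`, the last slice would simply be shorter, which is one reason to divide by `len(mini_batch)` rather than by `mini_batch_size` when updating.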

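There is nothing fundamentally new in stochastic gradient descent: it simply **trades precision for efficiency**, since the gradient computed on a mini-batch is only a noisy estimate of the gradient over the whole training set.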
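To see what trading precision for efficiency means, here is a toy sketch on hypothetical 1-D linear regression data (not MNIST, and not the network from this post). Each mini-batch gradient touches only 10 samples and scatters around the true value, while the average over all the disjoint, equal-sized mini-batches of one shuffle equals the full-batch gradient.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical 1-D regression data (not MNIST): y = 3x + noise
n = 50000
x = rng.normal(size=n)
y = 3.0 * x + rng.normal(scale=0.5, size=n)
w = 0.0  # current value of the single parameter


def grad(xb, yb, w):
    """Gradient w.r.t. w of the cost 0.5 * mean((w*x - y)**2) on a batch."""
    return np.mean((w * xb - yb) * xb)


# Full-batch gradient: every update touches all n samples
full = grad(x, y, w)

# Mini-batch gradients: shuffle once, then 5,000 disjoint batches of 10
batches = rng.permutation(n).reshape(-1, 10)
mini = np.array([grad(x[b], y[b], w) for b in batches])

print("full-batch gradient:     ", full)
print("mean of mini-batch grads:", mini.mean())  # equal up to rounding
print("spread of single batches:", mini.std())   # each estimate is noisy
```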

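<h1>Code</h1>

Building on the previous implementation, we add a mini_batch_size parameter to the SGD function. In addition, each update divides eta by the length of the mini-batch: the gradients are summed over the mini-batch rather than over the whole training set, so dividing by its length turns that sum into an average.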
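In formula form, the step performed by `update_mini_batch` for a mini-batch of m = len(mini_batch) samples is shown below. Here η is eta, C_x denotes the cost of a single training sample x, the sums run over the m samples of the mini-batch, and they correspond to the accumulated `nabla_w` and `nabla_b` in the code:

$$
w \rightarrow w' = w - \frac{\eta}{m} \sum_{x} \nabla_w C_x,
\qquad
b \rightarrow b' = b - \frac{\eta}{m} \sum_{x} \nabla_b C_x
$$

Because of the 1/m factor, the size of a step does not grow with the batch size.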


```python
# -*- coding: utf-8 -*-
import random

import numpy as np


class Network(object):

    def __init__(self, sizes):
        # Number of layers in the network (including the input layer)
        self.num_layers = len(sizes)
        # Number of neurons in each layer
        self.sizes = sizes
        # Initialize the biases of every layer except the input layer
        self.biases = [np.random.randn(y, 1) for y in sizes[1:]]
        # Initialize the weights between consecutive layers
        self.weights = [np.random.randn(y, x)
                        for x, y in zip(sizes[:-1], sizes[1:])]

    def feedforward(self, a):
        """Forward pass: compute the network's output for input ``a``."""
        for b, w in zip(self.biases, self.weights):
            a = sigmoid(np.dot(w, a) + b)
        return a

    # Stochastic gradient descent
    def SGD(self, training_data, epochs, mini_batch_size, eta,
            test_data=None):
        """Train the network with mini-batch stochastic gradient descent.

        :param training_data: list of (x, y) training pairs
        :param epochs: number of passes over the training data
        :param mini_batch_size: number of samples in each mini-batch
        :param eta: learning rate
        :param test_data: optional list of (x, y) pairs evaluated after each epoch
        """
        if test_data:
            n_test = len(test_data)
        # Total number of training samples
        n = len(training_data)
        # Train for the given number of epochs
        for j in range(epochs):
            # Shuffle the training data
            random.shuffle(training_data)
            # Split the data set into consecutive mini-batches of mini_batch_size
            mini_batches = [training_data[k:k + mini_batch_size]
                            for k in range(0, n, mini_batch_size)]
            # Each update now uses one mini-batch instead of the whole set
            for mini_batch in mini_batches:
                self.update_mini_batch(mini_batch, eta)
            if test_data:
                print("Epoch {0}: {1} / {2}".format(
                    j, self.evaluate(test_data), n_test))
            print("Epoch {0} complete".format(j))

    def update_mini_batch(self, mini_batch, eta):
        """Update the weights and biases using one mini-batch."""
        # Accumulated partial derivatives for every layer
        nabla_b = [np.zeros(b.shape) for b in self.biases]
        nabla_w = [np.zeros(w.shape) for w in self.weights]
        # Backpropagate every sample in the mini-batch
        for x, y in mini_batch:
            delta_nabla_b, delta_nabla_w = self.update(x, y)
            # Add this sample's partial derivatives to the totals
            nabla_b = [nb + dnb for nb, dnb in zip(nabla_b, delta_nabla_b)]
            nabla_w = [nw + dnw for nw, dnw in zip(nabla_w, delta_nabla_w)]
        # Update once the whole mini-batch is done, dividing by len(mini_batch):
        # w_{n+1} = w_n - eta / len(mini_batch) * nabla_w
        self.weights = [w - (eta / len(mini_batch)) * nw
                        for w, nw in zip(self.weights, nabla_w)]
        self.biases = [b - (eta / len(mini_batch)) * nb
                       for b, nb in zip(self.biases, nabla_b)]

    # Forward pass and backpropagation
    def update(self, x, y):
        """Return (nabla_b, nabla_w), the partial derivatives for one sample."""
        nabla_b = [np.zeros(b.shape) for b in self.biases]
        nabla_w = [np.zeros(w.shape) for w in self.weights]
        activation = x
        # Activations a = sigmoid(z) of every layer
        activations = [x]
        # Weighted inputs z = wx + b of every layer
        zs = []
        # Forward pass
        for b, w in zip(self.biases, self.weights):
            z = np.dot(w, activation) + b
            zs.append(z)
            activation = sigmoid(z)
            activations.append(activation)
        # Backward pass: error of the output layer
        delta = self.cost_derivative(activations[-1], y) * sigmoid_prime(zs[-1])
        # Derivatives w.r.t. the output layer's biases and weights
        nabla_b[-1] = delta
        nabla_w[-1] = np.dot(delta, activations[-2].transpose())
        # From the second-to-last layer back to the first hidden layer
        for l in range(2, self.num_layers):
            z = zs[-l]
            sp = sigmoid_prime(z)
            # Error of the current layer
            delta = np.dot(self.weights[-l + 1].transpose(), delta) * sp
            # Derivatives w.r.t. the current layer's biases and weights
            nabla_b[-l] = delta
            nabla_w[-l] = np.dot(delta, activations[-l - 1].transpose())
        return (nabla_b, nabla_w)

    def evaluate(self, test_data):
        """Return the number of test inputs classified correctly."""
        test_results = [(np.argmax(self.feedforward(x)), y)
                        for (x, y) in test_data]
        return sum(int(x == y) for (x, y) in test_results)

    def cost_derivative(self, output_activation, y):
        """Difference between the network output and the true value."""
        return (output_activation - y)


def sigmoid(z):
    """Sigmoid activation function."""
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_prime(z):
    """Derivative of the sigmoid function."""
    return sigmoid(z) * (1 - sigmoid(z))


if __name__ == '__main__':
    from ProjectOne import mnist_loader
    training_data, validation_data, test_data = mnist_loader.load_data_wrapper()
    net = Network([784, 30, 20, 10])
    net.SGD(training_data, 30, 10, 0.5, test_data=test_data)
```
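A bug in backpropagation often still lets the network train, only worse, so a cheap sanity check is useful. The sketch below re-implements the forward/backward pass of `Network.update` as a standalone function (so the block runs on its own) and compares it with central finite differences on a small hypothetical network. The sizes [4, 3, 3, 2], the seed and `eps` are arbitrary choices; the quadratic cost C = ½‖a − y‖² is assumed because its derivative is exactly the `output_activation - y` returned by `cost_derivative`.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_prime(z):
    return sigmoid(z) * (1 - sigmoid(z))


def cost(weights, biases, x, y):
    """Quadratic cost C = 0.5 * ||a - y||^2; its derivative w.r.t. a is (a - y)."""
    a = x
    for b, w in zip(biases, weights):
        a = sigmoid(np.dot(w, a) + b)
    return 0.5 * np.sum((a - y) ** 2)


def backprop(weights, biases, x, y):
    """Standalone copy of the forward/backward pass in Network.update."""
    activation, activations, zs = x, [x], []
    for b, w in zip(biases, weights):
        z = np.dot(w, activation) + b
        zs.append(z)
        activation = sigmoid(z)
        activations.append(activation)
    nabla_b = [np.zeros(b.shape) for b in biases]
    nabla_w = [np.zeros(w.shape) for w in weights]
    # Output-layer error, then propagate it backwards layer by layer
    delta = (activations[-1] - y) * sigmoid_prime(zs[-1])
    nabla_b[-1] = delta
    nabla_w[-1] = np.dot(delta, activations[-2].T)
    for l in range(2, len(weights) + 1):
        delta = np.dot(weights[-l + 1].T, delta) * sigmoid_prime(zs[-l])
        nabla_b[-l] = delta
        nabla_w[-l] = np.dot(delta, activations[-l - 1].T)
    return nabla_b, nabla_w


# Hypothetical tiny network with the same layout idea as [784, 30, 20, 10]
rng = np.random.default_rng(1)
sizes = [4, 3, 3, 2]
biases = [rng.standard_normal((n, 1)) for n in sizes[1:]]
weights = [rng.standard_normal((n, m)) for m, n in zip(sizes[:-1], sizes[1:])]
x = rng.standard_normal((4, 1))
y = np.array([[1.0], [0.0]])

nabla_b, nabla_w = backprop(weights, biases, x, y)

# Compare every analytic derivative with a central finite difference
eps = 1e-6
max_err = 0.0
for params, grads in ((weights, nabla_w), (biases, nabla_b)):
    for p, g in zip(params, grads):
        for idx in np.ndindex(p.shape):
            old = p[idx]
            p[idx] = old + eps
            c_plus = cost(weights, biases, x, y)
            p[idx] = old - eps
            c_minus = cost(weights, biases, x, y)
            p[idx] = old  # restore the parameter
            numeric = (c_plus - c_minus) / (2 * eps)
            max_err = max(max_err, abs(numeric - g[idx]))

print("max |backprop - numerical| =", max_err)
```

A maximum difference many orders of magnitude below the gradients themselves indicates that the backpropagation formulas are consistent with the cost.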

My network has three layers if the input layer is not counted: two hidden layers and one output layer.

```python
net = Network([784, 30, 20, 10])
net.SGD(training_data, 30, 10, 0.5, test_data=test_data)
```

That is, 30 epochs, a mini-batch size of 10 and a learning rate eta = 0.5.
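As a quick check on this architecture, the number of trainable parameters follows directly from the shapes created in `Network.__init__`: a weight matrix between each pair of adjacent layers and one bias per non-input neuron. Note that `num_layers = len(sizes)` is 4 here, because the code counts the input layer even though the sentence above does not.

```python
sizes = [784, 30, 20, 10]

# One weight matrix of shape (next, prev) between each pair of adjacent layers
n_weights = sum(x * y for x, y in zip(sizes[:-1], sizes[1:]))
# One bias per neuron, except in the input layer
n_biases = sum(sizes[1:])

print(n_weights)             # 24320
print(n_biases)              # 60
print(n_weights + n_biases)  # 24380
```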

The training result is not very good: test accuracy rises quickly during the first few epochs and then levels off at around 93.7%.

```
Epoch 0: 8274 / 10000
Epoch 0 complete
Epoch 1: 8759 / 10000
Epoch 1 complete
Epoch 2: 8956 / 10000
Epoch 2 complete
Epoch 3: 9041 / 10000
Epoch 3 complete
Epoch 4: 9094 / 10000
Epoch 4 complete
Epoch 5: 9140 / 10000
Epoch 5 complete
Epoch 6: 9187 / 10000
Epoch 6 complete
Epoch 7: 9208 / 10000
Epoch 7 complete
Epoch 8: 9235 / 10000
Epoch 8 complete
Epoch 9: 9246 / 10000
Epoch 9 complete
Epoch 10: 9247 / 10000
Epoch 10 complete
Epoch 11: 9280 / 10000
Epoch 11 complete
Epoch 12: 9271 / 10000
Epoch 12 complete
Epoch 13: 9290 / 10000
Epoch 13 complete
Epoch 14: 9293 / 10000
Epoch 14 complete
Epoch 15: 9313 / 10000
Epoch 15 complete
Epoch 16: 9320 / 10000
Epoch 16 complete
Epoch 17: 9316 / 10000
Epoch 17 complete
Epoch 18: 9328 / 10000
Epoch 18 complete
Epoch 19: 9312 / 10000
Epoch 19 complete
Epoch 20: 9332 / 10000
Epoch 20 complete
Epoch 21: 9337 / 10000
Epoch 21 complete
Epoch 22: 9366 / 10000
Epoch 22 complete
Epoch 23: 9354 / 10000
Epoch 23 complete
Epoch 24: 9358 / 10000
Epoch 24 complete
Epoch 25: 9364 / 10000
Epoch 25 complete
Epoch 26: 9369 / 10000
Epoch 26 complete
Epoch 27: 9370 / 10000
Epoch 27 complete
Epoch 28: 9376 / 10000
Epoch 28 complete
Epoch 29: 9374 / 10000
Epoch 29 complete
```
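To see where the gains come from, the sketch below summarizes the log. The correct-prediction counts are copied from the output above; nothing else is assumed.

```python
# Correct predictions out of 10,000 test images, epochs 0-29, copied from the log above
correct = [8274, 8759, 8956, 9041, 9094, 9140, 9187, 9208, 9235, 9246,
           9247, 9280, 9271, 9290, 9293, 9313, 9320, 9316, 9328, 9312,
           9332, 9337, 9366, 9354, 9358, 9364, 9369, 9370, 9376, 9374]
n_test = 10000

best_epoch = max(range(len(correct)), key=lambda j: correct[j])
print("best:  epoch {0}, {1:.2%}".format(best_epoch, correct[best_epoch] / n_test))
print("final: {0:.2%}".format(correct[-1] / n_test))
# Gain from epoch 0 to 9 versus gain from epoch 20 to 29
print(correct[9] - correct[0], correct[-1] - correct[20])  # 972 42
```

Almost all of the improvement happens in the first ten epochs; afterwards each extra epoch adds only a handful of correct predictions.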

There is still plenty of room for improvement.