I. Docker-related topics

II. Introduction to Kubernetes

III. Installing k8s with kubeadm

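I. Docker-related topics

1. Docker toolset

The Docker "three musketeers": Compose, Swarm, Machine

docker compose: single-host container orchestration, defined declaratively

docker swarm: pools the resources of all Docker hosts for cluster management

docker machine: initializes the Docker environment on a host, with cross-platform support

mesos + marathon:

mesos: a framework for pooling, allocating, and scheduling host resources

marathon: a private PaaS built on Mesos

kubernetes: covered in section II

2. Compose

Docker Compose: https://docs.docker.com/compose/overview/

Compose resources are defined in YAML: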

    version: '3'
    services:
      web:
        build: .
        ports:
          - "5000:5000"
        volumes:
          - .:/code
          - logvolume01:/var/log
        links:
          - redis
      redis:
        image: redis
    volumes:
      logvolume01: {}

3. CI, CD & CD

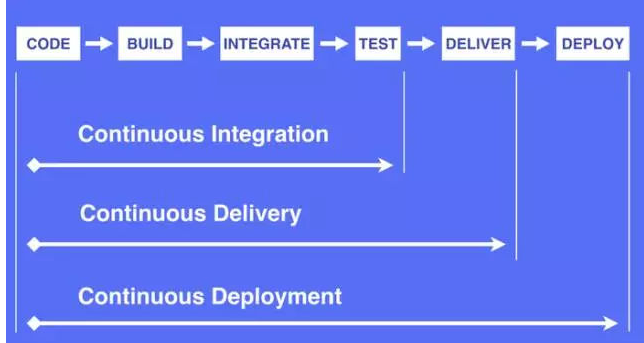

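CI: Continuous Integration // developers commit code -> tests run -> on success the change is merged into the mainline of the code repository

CD: Continuous Delivery // the application is released (for example as a canary release); the goal is to minimize the friction inherent in the team's deployment or release process

CD: Continuous Deployment // a higher degree of automation: whenever the code changes significantly, it is built and deployed automatically

Figure 1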

II. Introduction to Kubernetes

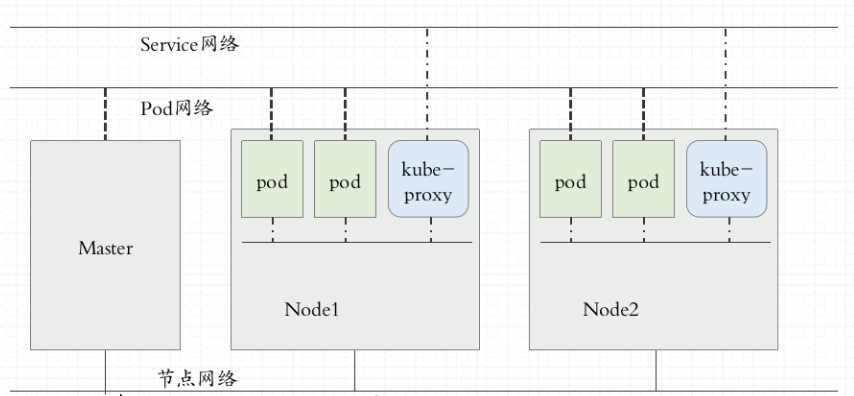

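Kubernetes pools the resources of multiple hosts and provides unified resource management and scheduling.

k8s is modeled on Borg, Google's internal cluster manager; CoreOS has since been acquired by Red Hat.

Official documentation: https://kubernetes.io/zh/docs/

1. k8s features:

1) Automatic bin packing, self-healing, horizontal scaling, service discovery and load balancing, automated rollouts and rollbacks

2) Secret and configuration management

Previously, configuration was changed by editing native config files; it is better to drive it through environment variables // an entrypoint script substitutes the environment variables passed by the user into the config file

Configuration is loaded dynamically when the image starts

To change a setting // push it with ansible, or keep the application's configuration on a configuration server and load it at startup (only the configuration server then needs to change)

3) Storage orchestration, batch execution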
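The entrypoint approach mentioned under item 2 can be sketched roughly as follows. This is a minimal illustration, not a prescribed implementation: the template text, the variable names `APP_PORT` and `DB_HOST`, and their defaults are all hypothetical.

```python
import os
from string import Template

# Hypothetical config template; the keys and variable names are illustrative only.
CONFIG_TEMPLATE = Template(
    "listen_port = ${APP_PORT}\n"
    "db_host = ${DB_HOST}\n"
)

# Hypothetical defaults used when the user passes no environment variable.
DEFAULTS = {"APP_PORT": "8080", "DB_HOST": "localhost"}


def render_config(environ):
    """Fill the template from environment variables, falling back to defaults."""
    values = {key: environ.get(key, default) for key, default in DEFAULTS.items()}
    return CONFIG_TEMPLATE.substitute(values)


if __name__ == "__main__":
    # A real entrypoint would write this text to the config file
    # and then start the application process.
    print(render_config(os.environ), end="")
```

Running the container with a different `DB_HOST` value would then change the rendered file without rebuilding the image.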

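2. Cluster models:

Centralized // one master plus multiple slaves; in k8s: usually three masters (HA), all others are nodes

Decentralized // any shard can accept a request and route it to other nodes

Controllers: monitor container state -> are themselves managed by the controller manager -> the controller manager is made redundant (HA)

Controller manager: monitors the health of every controller; the controller manager itself runs redundantly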

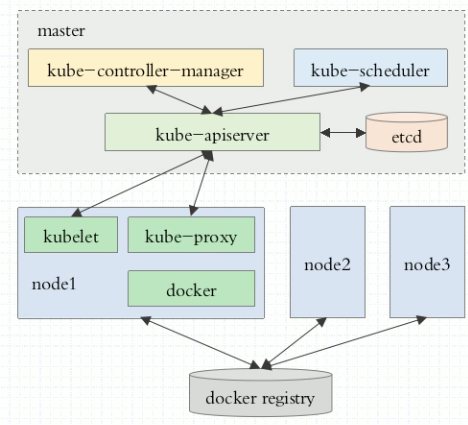

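3. Components

- Master: the cluster control node; three masters (HA) are generally recommended

API Server: the entry point for create, read, update, and delete operations in the cluster

Scheduler: schedules Pods

Controller-Manager: the automation control center for all resource objects (the "chief steward")

- Node: (also called Minion) the actual worker node

kubelet: creates and starts Pods, and works closely with the Master to manage the cluster (registers itself with the master and periodically reports its own status)

kube-proxy: implements the communication and load-balancing mechanism of Kubernetes Services

docker: the Docker engine

- Pod: a Pod is a shell around containers, wrapping them; it is the smallest schedulable unit in k8s

Containers run inside a pod; one pod can hold multiple containers;

Containers in a pod share the network namespace // the net, uts, and ipc namespaces are shared; user, mnt, and pid are isolated

A volume belongs to the pod rather than to any single container; all containers in a pod can only run on the same node

A pod usually holds a single container; when several are needed, one is typically the main container and the others assist it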

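To make Pods easier to manage, they can be tagged with labels (label, key/value format); a label selector picks objects by label

Label:

Attributes attached to all kinds of resource objects (Pods, Nodes, Services, RCs, and so on)

Label Selector: expresses complex selection conditions, similar to a SQL WHERE clause

The kube-controller process uses the Label Selector defined on an RC to monitor the number of Pod replicas

kube-proxy uses a Service's label selector to choose the corresponding Pods

Replication Controller: declares a desired state, consisting of

The expected number of Pod replicas

A label selector that filters the target Pods

A Pod template used to create new Pods when the number of Pods falls below the expected count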
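The three parts of a Replication Controller listed above (replica count, label selector, Pod template) can be sketched as a small reconcile function. This is a toy model under stated assumptions: pods are plain dictionaries, the selector is equality-based only, and the names `matches` and `reconcile` are made up for illustration. It does not reflect how the real controller is implemented.

```python
import itertools


def matches(labels, selector):
    """Equality-based selector: every key/value in the selector must be in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def reconcile(pods, selector, replicas, template):
    """Return a pod list in which exactly `replicas` pods match the selector."""
    selected = [p for p in pods if matches(p["labels"], selector)]
    unmanaged = [p for p in pods if not matches(p["labels"], selector)]

    # Too many matching pods: keep only the desired number.
    kept = selected[:replicas]

    # Too few matching pods: create new ones from the template.
    used_names = {p["name"] for p in pods}
    counter = itertools.count()
    while len(kept) < replicas:
        name = f"{template['name']}-{next(counter)}"
        if name in used_names:
            continue
        used_names.add(name)
        kept.append({"name": name, "labels": dict(template["labels"])})

    return unmanaged + kept


if __name__ == "__main__":
    pods = [
        {"name": "web-0", "labels": {"app": "web"}},
        {"name": "db-0", "labels": {"app": "db"}},
    ]
    template = {"name": "web", "labels": {"app": "web"}}
    for pod in reconcile(pods, {"app": "web"}, 3, template):
        print(pod["name"], pod["labels"])
```

Pods that do not match the selector are left untouched, which is why labels (and not names) decide what a controller manages.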

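From Kubernetes v1.2 onward, RC was upgraded and renamed Replica Set (the next-generation RC)

Deployment: solves the Pod orchestration problem

Uses Replica Sets internally

Manages sets of stateless Pods

Very close to RC in functionality

Horizontal Pod Autoscaler (HPA):

HPA: automatically scales the number of Pods out and in according to traffic, load, and similar metrics

StatefulSet:

The Pod management objects RC, Deployment, DaemonSet, and Job all target stateless services.

Characteristics of stateful services:

1) Each node has a fixed identity, and cluster members need to discover and communicate with each other, e.g. MySQL, zk

2) The cluster size is fixed and cannot be changed arbitrarily

3) Every node is stateful and usually persists data to permanent storage

4) If a disk fails, that node cannot run normally and the cluster's functionality is degraded

A StatefulSet is essentially the same as a Deployment/RC, but has the following properties:

Every Pod in a StatefulSet has a stable, unique network identity used to discover the other members of the cluster; for example, in a StatefulSet named mq the first Pod is mq-0, the second mq-1, and the third mq-2

The start and stop order of the Pods in a StatefulSet is controlled: when the n-th Pod is operated on, the previous n-1 Pods are already running

Every Pod in a StatefulSet uses stable persistent storage, implemented through PV/PVC; deleting a Pod does not delete the StatefulSet's volumes by default

It must also be combined with a Headless Service; the DNS name format is ${podname}.${headless service name}

Service: a service, also referred to as a microservice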

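Frontend application -> [label selector] ---> {[Pod1(label=bk1)],[Pod2(label=bk1)],[],[]...}

kube-proxy receives requests addressed to a Service and forwards them to the backend Pod instances, implementing load balancing and session affinity internally

k8s builds on this by using a Cluster IP to solve the TCP network communication problem

kubectl get endpoints // lists the Pod addresses and ports behind each Service

Job

Controls a group of Pods that carry out a batch-style task; each container runs only once, and when all Pod replicas controlled by the Job have finished, the Job itself is finished

Since version 1.5, k8s provides CronJob for scheduled, repeated execution

Persistent Volume:

Volume: a shared directory in a Pod that multiple containers can access; distributed file systems such as Ceph and GlusterFS are supported

Persistent Volume (PV) & Persistent Volume Claim (PVC): network storage, with support for read/write access isolation

Namespace:

Provides resource isolation for multi-tenancy; the default namespace is default

kubectl get namespaces

Annotation:

Annotations resemble Labels and are defined as key/value pairs; Labels follow stricter naming rules and define metadata of the object, while Annotations are arbitrary additional information defined by the user

configMap

Configuration key/value data stored in the etcd database, exposed through an API so that k8s components or client applications can perform CRUD operations on it

- Other related concepts

etcd: stores the Master's data; similar to zookeeper; needs HA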

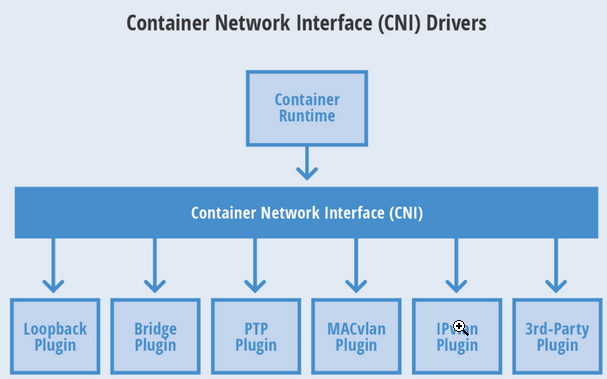

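CNI (Container Network Interface) is a container networking standard led by Google and CoreOS

Figure 4:

This specification connects two components: the container management system and the network plugin. They communicate through JSON-format files to provide networking for containers. The actual work is done by the plugins, including creating the container's network namespace, placing the network interface into that namespace, and assigning an IP address to the interface.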
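To make the JSON exchange described above more concrete, the sketch below builds a network configuration of the kind a runtime hands to a CNI plugin. It assumes the reference `bridge` plugin with `host-local` IPAM; the network name, bridge name, and subnet are illustrative values, not taken from this cluster.

```python
import json


def bridge_network_config(name, subnet):
    """Build a CNI network configuration for the reference bridge plugin."""
    return {
        "cniVersion": "0.3.1",
        "name": name,
        "type": "bridge",        # which plugin binary the runtime should invoke
        "bridge": "cni0",        # illustrative bridge device name
        "isGateway": True,
        "ipMasq": True,
        "ipam": {
            "type": "host-local",  # IPAM plugin that assigns Pod IPs from the subnet
            "subnet": subnet,
        },
    }


if __name__ == "__main__":
    # Illustrative name and subnet; a real cluster would use its own values.
    print(json.dumps(bridge_network_config("demo-net", "10.22.0.0/16"), indent=2))
```

The `type` field is what ties the configuration to a specific plugin, which is how flannel, calico, and others plug into the same interface.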

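flannel: supports network configuration but not network policy; simple

calico: network configuration and network policy; more complex

canal: combines flannel and calico

Docker, Inc. provides the CNM standard. At present CNM can only be used with docker, whereas CNI covers a wider range of scenarios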

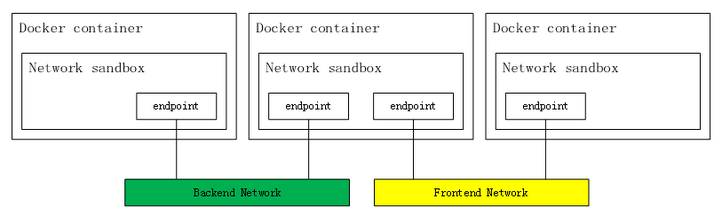

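Figure 6

Worth a look if you are interested

* K8s networking

Pod level

Communication between containers inside a Pod: lo

Communication between Pods: bridging, overlay network

Service level

Node level

Figure 2

Other components: https://github.com/kubernetes/

III. Installing k8s with kubeadm

Figure 3: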

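Installation method 1: run the components as processes managed by systemctl (fairly tedious)

Installation method 2: use kubeadm; docker and kubelet are installed on every node, and the k8s control-plane components also run as Pods

controller-manager, scheduler, apiserver, etcd

kube-proxy and flannel also run as Pods; kubelet itself runs as a system service on every node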

1. Lab environment:

============================

Host IP          Hostname  Spec

192.168.170.135  master    (2c2g)

192.168.170.137  node1     (2c2g)

192.168.170.139  node2     (2c2g)

============================

2. Basic environment preparation (required on all nodes)

1) hostnamectl set-hostname $hostname // set the hostname

2) Configure hosts

[root@master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.170.135 master

192.168.170.137 node1

192.168.170.139 node2

3) Disable the firewall and SELinux

systemctl stop firewalld; systemctl disable firewalld

setenforce 0; sed -i 's@^SELINUX=.*@SELINUX=disabled@' /etc/selinux/config

4) Disable swap

swapoff -a; sed -i 's/.*swap.*/#&/' /etc/fstab

5) Configure yum

Kubernetes repo:

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Docker repo:

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

6) Install the tools

yum install docker

yum -y install kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable docker // on node1 and node2 as well; kubelet communicates with the rest of the cluster and manages the lifecycle of the Pods and containers on its own node

3. Configure the master

kubeadm config print init-defaults > /etc/kubernetes/init.default.yaml

cp init.default.yaml init-config.yaml // then edit init-config.yaml

Example:

[root@master kubernetes]# cat init-config.yaml

    apiVersion: kubeadm.k8s.io/v1beta1
    kind: ClusterConfiguration
    imageRepository: registry.aliyuncs.com/google_containers
    kubernetesVersion: v1.14.0
    networking:
      podSubnet: "192.168.3.0/24"

advertiseAddress: IP of the master machine

image-repository: image registry; changing it to registry.aliyuncs.com/google_containers is recommended

service-cidr: address range for Services

pod-network-cidr: the Pod network range

Other parameters can be set as needed
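When choosing the Pod and Service ranges above, it can help to confirm that they do not overlap each other or the host network. The sketch below is one way to check this with Python's standard `ipaddress` module. The host range `192.168.170.0/24` is an assumption inferred from the lab IPs, and `10.96.0.0/12` is the kubeadm default Service subnet; the helper name `find_overlaps` is made up for illustration.

```python
import ipaddress


def find_overlaps(networks):
    """Return sorted pairs of names whose CIDR ranges overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    names = sorted(parsed)
    return [
        (first, second)
        for index, first in enumerate(names)
        for second in names[index + 1:]
        if parsed[first].overlaps(parsed[second])
    ]


if __name__ == "__main__":
    lab_networks = {
        "hosts": "192.168.170.0/24",   # assumed from the lab IPs in this walkthrough
        "pods": "192.168.3.0/24",      # podSubnet from init-config.yaml
        "services": "10.96.0.0/12",    # kubeadm default Service subnet
    }
    overlaps = find_overlaps(lab_networks)
    print("overlapping ranges:", overlaps if overlaps else "none")
```

An empty result means the three ranges are disjoint, so Pod, Service, and host addresses cannot collide.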

Pull the images

[root@master kubernetes]# kubeadm config images pull --config=/etc/kubernetes/init-config.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.14.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.14.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.14.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.14.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.3.10

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.3.1


[root@master kubernetes]# kubeadm init --config=init-config.yaml

[init] Using Kubernetes version: v1.14.0

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Hostname]: hostname "i" could not be reached

[WARNING Hostname]: hostname "i": lookup i on 192.168.170.2:53: server misbehaving

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR DirAvailable--var-lib-etcd]: /var/lib/etcd is not empty

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

[root@master kubernetes]# rm -rf /var/lib/etcd/

[root@master kubernetes]# kubeadm init --config=init-config.yaml

[init] Using Kubernetes version: v1.14.0

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Hostname]: hostname "i" could not be reached

[WARNING Hostname]: hostname "i": lookup i on 192.168.170.2:53: server misbehaving

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [i localhost] and IPs [192.168.170.135 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [i localhost] and IPs [192.168.170.135 127.0.0.1 ::1]

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [i kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.170.135]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 29.511659 seconds

[upload-config] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.14" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --experimental-upload-certs

[mark-control-plane] Marking the node i as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node i as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: ls1ey1.uf8m218idns3bjs8

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.170.135:6443 --token ls1ey1.uf8m218idns3bjs8 \

--discovery-token-ca-cert-hash sha256:ef81ea9df5425aeb92ac34c08bb8a8646f82f50445cccdb6eff1e6c84aa00101

4. Configure the nodes

[root@node1 kubernetes]# kubeadm config print init-defaults &> ./init.default.yaml

[root@node1 kubernetes]# cp init.default.yaml init-config.yaml

[root@node1 kubernetes]# cat init-config.yaml // the configuration after editing

    apiVersion: kubeadm.k8s.io/v1beta1
    kind: JoinConfiguration
    discovery:
      bootstrapToken:
        apiServerEndpoint: 192.168.170.135:6443
        token: ls1ey1.uf8m218idns3bjs8
        unsafeSkipCAVerification: true
      tlsBootstrapToken: ls1ey1.uf8m218idns3bjs8

[root@node1 kubernetes]# kubeadm join --config=init-config.yaml

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Perform the same steps on both node1 and node2

5. Install the network plugin

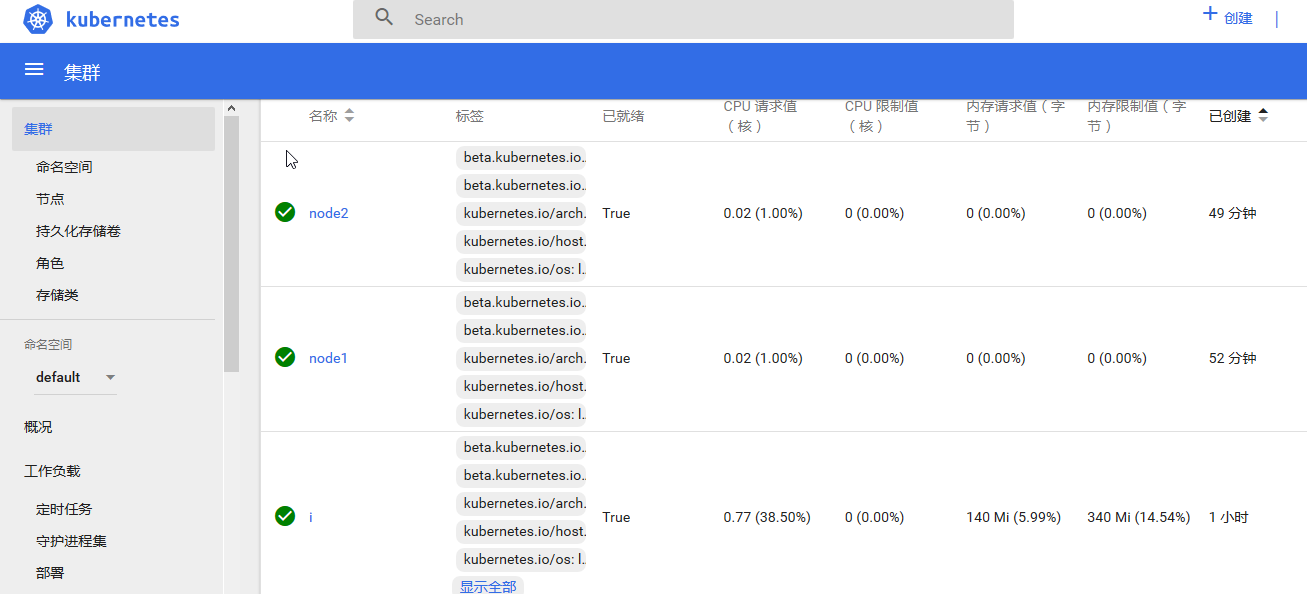

[root@master kubernetes]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

i NotReady master 13m v1.14.3

node1 NotReady <none> 4m46s v1.14.3

node2 NotReady <none> 74s v1.14.3

[root@master kubernetes]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

[root@master kubernetes]# kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version |base64 |tr -d '\n')"

serviceaccount/weave-net created

clusterrole.rbac.authorization.k8s.io/weave-net created

clusterrolebinding.rbac.authorization.k8s.io/weave-net created

role.rbac.authorization.k8s.io/weave-net created

rolebinding.rbac.authorization.k8s.io/weave-net created

daemonset.extensions/weave-net created

[root@master kubernetes]# kubectl get nodes --all-namespaces

NAME STATUS ROLES AGE VERSION

i Ready master 24m v1.14.3

node1 Ready <none> 15m v1.14.3

node2 Ready <none> 11m v1.14.3

[root@master kubernetes]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-8686dcc4fd-cvd7k 1/1 Running 0 24m

kube-system coredns-8686dcc4fd-ntb22 1/1 Running 0 24m

kube-system etcd-i 1/1 Running 0 23m

kube-system kube-apiserver-i 1/1 Running 0 23m

kube-system kube-controller-manager-i 1/1 Running 0 23m

kube-system kube-proxy-fvd2t 1/1 Running 0 15m

kube-system kube-proxy-jcfvp 1/1 Running 0 24m

kube-system kube-proxy-jr6lj 1/1 Running 0 12m

kube-system kube-scheduler-i 1/1 Running 0 23m

kube-system weave-net-bjmt2 2/2 Running 0 104s

kube-system weave-net-kwg5l 2/2 Running 0 104s

kube-system weave-net-v54m4 2/2 Running 0 104s

[root@master kubernetes]# kubectl --namespace=kube-system describe pod etcd-i // if a pod is in a bad state, this shows the cause

kubeadm reset restores the host to its original state; afterwards run kubeadm init again to reinstall

Container distribution:

master: 8 pause containers, apiserver, controller, scheduler, kube-proxy, etcd, weave-npc, weave-kube, coredns

node1 and node2: kube-proxy, kube-proxy (pause), weave-npc, weave-kube, weave-kube (pause)

6. Install the dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

[root@master kubernetes]# sed -i 's/k8s.gcr.io/loveone/g' kubernetes-dashboard.yaml

[root@master kubernetes]# sed -i "160a \ \ \ \ \ \ nodePort: 30001" kubernetes-dashboard.yaml

[root@master kubernetes]# sed -i "161a \ \ type:\ NodePort" kubernetes-dashboard.yaml

[root@master kubernetes]# kubectl create -f kubernetes-dashboard.yaml

[root@master kubernetes]# kubectl get deployment kubernetes-dashboard -n kube-system

[root@master kubernetes]# kubectl get pods -n kube-system -o wide

[root@master kubernetes]# kubectl get services -n kube-system

[root@master kubernetes]# netstat -ntlp|grep 30001

Get the token: log in with the token

[root@master kubernetes]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Figure 5

7. Create a container to test

[root@master kubernetes]# kubectl create deployment nginx --image=nginx

[root@master kubernetes]# kubectl expose deployment nginx --port=80 --type=NodePort

[root@master kubernetes]# kubectl get pod,svc

[root@master kubernetes]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-65f88748fd-9tgtv 1/1 Running 0 4m19s

**Problem 1:**

[root@master kubernetes]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

centos latest 49f7960eb7e4 12 months ago 200MB

swarm latest ff454b4a0e84 12 months ago 12.7MB

[root@master kubernetes]# docker rmi -f ff454b4a0e84

Error: No such image: ff454b4a0e84

Solution:

systemctl stop docker; rm -rf /var/lib/docker; systemctl start docker // note: this deletes all local images and containers

Reference links:

Kubernetes Chinese community: https://www.kubernetes.org.cn/doc-16

Kubernetes official site: https://kubernetes.io/zh/docs/

Kubernetes Git: https://github.com/kubernetes/kubernetes

Installing k8s with kubeadm: https://www.kubernetes.org.cn/5462.html

Kubernetes downloads: https://github.com/kubernetes/kubernetes/releases