I. Install Docker

II. Install kubelet

III. Install kube-proxy

IV. Verify cluster availability

V. Bootstrap explained

I. Install Docker

[root@master1 yaml]# docker version

Client:

Version: 17.06.0-ce

API version: 1.30

Go version: go1.8.3

Git commit: 02c1d87

Built: Fri Jun 23 21:20:36 2017

OS/Arch: linux/amd64

The Docker installation procedure itself is not covered here.

1. Create the service unit

In practice, back up the existing service file before changing it.

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m shell -a "systemctl daemon-reload;systemctl stop docker"

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m shell -a "rm -rf /usr/lib/systemd/system/docker.service /etc/docker/daemon.json" # remove the default unit file and daemon configuration

[root@master1 service]# vim docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=http://docs.docker.io

[Service]

WorkingDirectory=/data/k8s/docker

Environment="PATH=/opt/k8s/bin:/bin:/sbin:/usr/bin:/usr/sbin"

EnvironmentFile=-/run/flannel/docker

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

Restart=on-failure

RestartSec=5

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m copy -a "src=./docker.service dest=/etc/systemd/system"

Note: $DOCKER_NETWORK_OPTIONS injects the environment variables written by flannel into dockerd's startup arguments.
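The sketch below makes that expansion concrete. It imitates how systemd turns `$DOCKER_NETWORK_OPTIONS` from the `EnvironmentFile` into separate dockerd arguments. It assumes `/run/flannel/docker` holds simple `KEY="value"` lines, typically written by flannel's `mk-docker-opts.sh`, though that is an assumption about this setup. The helper name `dockerd_cmdline` and the sample file contents are hypothetical. The bip and MTU values are borrowed from the `ip addr` output later in this section.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the dockerd command line systemd would build from an
# EnvironmentFile such as /run/flannel/docker.
# Assumes simple KEY="value" lines; systemd's own parser is stricter than bash.
dockerd_cmdline() {
  local env_file=$1
  (
    # Load the variables in a subshell so they do not leak into the caller.
    . "$env_file"
    # Left unquoted on purpose: like systemd's unbraced $VAR form in ExecStart,
    # the value is split on whitespace into separate arguments.
    echo /usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
  )
}

# Illustrative file contents (assumed, not copied from a real host).
sample=$(mktemp)
cat > "$sample" <<'EOF'
DOCKER_OPT_BIP="--bip=172.30.56.1/21"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_NETWORK_OPTIONS=" --bip=172.30.56.1/21 --mtu=1450"
EOF
dockerd_cmdline "$sample"   # /usr/bin/dockerd --bip=172.30.56.1/21 --mtu=1450
rm -f "$sample"
```

The `EnvironmentFile` line is prefixed with `-`, so systemd ignores a missing file. In that case dockerd starts with an empty `$DOCKER_NETWORK_OPTIONS`, meaning without the flannel subnet. That is one reason to check docker0 after startup.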

2. Create the Docker daemon configuration file

[root@master1 service]# vim docker-daemon.json

{

"registry-mirrors": ["192.168.192.234:888"],

"max-concurrent-downloads": 20,

"live-restore": true,

"max-concurrent-uploads": 10,

"debug": true,

"data-root": "/data/k8s/docker/data",

"exec-root": "/data/k8s/docker/exec",

"log-opts": {

"max-size": "100m",

"max-file": "5"

}

}

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m copy -a "src=./docker-daemon.json dest=/etc/docker/daemon.json"

3. Start and check

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m shell -a "systemctl daemon-reload;systemctl restart docker;systemctl status docker"

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m shell -a "systemctl status docker" |grep -i active # make sure every host shows active (running)

[root@master1 service]# ansible master -i /root/udp/hosts.ini -m shell -a "systemctl enable docker"

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m shell -a "ip addr show docker0 ; ip addr show flannel.1"

success => 192.168.192.225 => rc=0 =>

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1450 qdisc noqueue state DOWN

link/ether 02:42:28:1d:d2:69 brd ff:ff:ff:ff:ff:ff

inet 172.30.56.1/21 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN

link/ether 96:6c:a6:5a:01:21 brd ff:ff:ff:ff:ff:ff

inet 172.30.56.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m shell -a "docker info" # check that the settings took effect, and make sure docker0 and flannel.1 are in the same subnet
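The subnet comparison can also be scripted. The sketch below uses pure bash arithmetic, and the helper names are hypothetical. It checks whether flannel.1's address falls inside docker0's CIDR, using the values from the `ip addr` output above.

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IPv4 address $1 lies inside CIDR $2 (e.g. 172.30.56.0 in 172.30.56.1/21).
ip_in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Values from the output above: docker0 is 172.30.56.1/21, flannel.1 is 172.30.56.0.
if ip_in_cidr 172.30.56.0 172.30.56.1/21; then
  echo "flannel.1 and docker0 share a subnet"
else
  echo "subnet mismatch: check /run/flannel/docker and restart docker"
fi
```

To check a live node, feed it the real addresses:

`ip_in_cidr "$(ip -4 -o addr show flannel.1 | awk '{print $4}' | cut -d/ -f1)" "$(ip -4 -o addr show docker0 | awk '{print $4}')"`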

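Registry setup reference: https://blog.51cto.com/hmtk520/2422947 (in this test the registry was set up at 192.168.192.234)

II. Install kubelet

kubelet runs on every node. It registers the node with kube-apiserver and manages pods according to the requests it receives from kube-apiserver. If the masters also act as nodes, kubelet, flannel, docker, kube-proxy and the other node components must be deployed on the masters as well.

1. Create the kubelet bootstrap kubeconfig files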

[root@master1 service]# NODE_NAMES=(master1 master2 master3 node1 node2)

[root@master1 service]# source /opt/k8s/bin/environment.sh

for node_name in ${NODE_NAMES[@]}

do

export BOOTSTRAP_TOKEN=$(kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:${node_name} --kubeconfig ~/.kube/config) # create the token

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/cert/ca.pem --embed-certs=true --server=https://127.0.0.1:8443 --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig # set the cluster parameters

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig # set the client authentication parameters

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig # set the context parameters

kubectl config use-context default --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig # set the default context

done

[root@master1 service]# ls |grep bootstrap

kubelet-bootstrap-master1.kubeconfig

kubelet-bootstrap-master2.kubeconfig

kubelet-bootstrap-master3.kubeconfig

kubelet-bootstrap-node1.kubeconfig

kubelet-bootstrap-node2.kubeconfig

[root@master1 service]# kubeadm token list --kubeconfig ~/.kube/config

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

5vk286.d0r0fjv1hnfmnpmp 23h 2019-07-22T13:01:00+08:00 authentication,signing kubelet-bootstrap-token system:bootstrappers:node1

6b3u55.99wxedj52ldsl2d7 23h 2019-07-22T13:00:59+08:00 authentication,signing kubelet-bootstrap-token system:bootstrappers:master2

h6cex2.g3fc48703ob47x0o 23h 2019-07-22T13:00:59+08:00 authentication,signing kubelet-bootstrap-token system:bootstrappers:master1

losmls.877d58c6rd9g5qvh 23h 2019-07-22T13:01:00+08:00 authentication,signing kubelet-bootstrap-token system:bootstrappers:master3

xn0i2f.lvdf3vy5aw3d4tl0 23h 2019-07-22T13:01:01+08:00 authentication,signing kubelet-bootstrap-token system:bootstrappers:node2

Each kubeconfig contains the bootstrap token and related information. After authentication succeeds, kube-controller-manager issues the client and server certificates for the kubelet.

The token is valid for 1 day. After it expires it can no longer be used to bootstrap a kubelet, and kube-controller-manager's tokencleaner deletes it.

When kube-apiserver receives a kubelet's bootstrap token, it sets the request's user to system:bootstrap:<Token ID> and its group to system:bootstrappers. A ClusterRoleBinding will be created for this group later.

[root@master1 yaml]# kubectl explain secret.type # Secrets come in several types; the common ones are:

kubernetes.io/service-account-token

bootstrap.kubernetes.io/token

Opaque

[root@master1 service]# kubectl get secrets -n kube-system|grep bootstrap-token # list the Secrets associated with the tokens

bootstrap-token-5vk286 bootstrap.kubernetes.io/token 7 6m30s

bootstrap-token-6b3u55 bootstrap.kubernetes.io/token 7 6m31s

bootstrap-token-h6cex2 bootstrap.kubernetes.io/token 7 6m31s

bootstrap-token-losmls bootstrap.kubernetes.io/token 7 6m30s

bootstrap-token-xn0i2f bootstrap.kubernetes.io/token 7 6m29s
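A small bash sketch can show how a token, the user kube-apiserver assigns to it, and its Secret are related. The helper `bootstrap_token_info` is hypothetical. The part of the token before the dot is the token ID. It appears both in the user name system:bootstrap:<Token ID> and in the Secret name bootstrap-token-<Token ID> listed above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a bootstrap token (<token-id>.<token-secret>) to the
# user name kube-apiserver assigns and to the name of its Secret in kube-system.
bootstrap_token_info() {
  local token=$1
  # token-id: 6 chars, token-secret: 16 chars, both from [a-z0-9]
  if [[ ! $token =~ ^([a-z0-9]{6})\.([a-z0-9]{16})$ ]]; then
    echo "invalid bootstrap token: $token" >&2
    return 1
  fi
  local id=${BASH_REMATCH[1]}
  echo "user=system:bootstrap:${id} secret=bootstrap-token-${id}"
}

# First token from the kubeadm token list output above.
bootstrap_token_info 5vk286.d0r0fjv1hnfmnpmp   # user=system:bootstrap:5vk286 secret=bootstrap-token-5vk286
```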

[root@master1 yaml]# NODE_NAMES=(master1 master2 master3 node1 node2)

[root@master1 service]# for node_name in ${NODE_NAMES[@]} ; do scp kubelet-bootstrap-${node_name}.kubeconfig root@${node_name}:/etc/kubernetes/kubelet-bootstrap.kubeconfig ;done

2. kubelet configuration file

[root@master1 yaml]# vim kubelet-config.yaml.template

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: "##NODE_IP##"

staticPodPath: ""

syncFrequency: 1m

fileCheckFrequency: 20s

httpCheckFrequency: 20s

staticPodURL: ""

port: 10250

readOnlyPort: 0

rotateCertificates: true

serverTLSBootstrap: true

authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/etc/kubernetes/cert/ca.pem"
authorization:
  mode: Webhook

registryPullQPS: 0

registryBurst: 20

eventRecordQPS: 0

eventBurst: 20

enableDebuggingHandlers: true

enableContentionProfiling: true

healthzPort: 10248

healthzBindAddress: "##NODE_IP##"

clusterDomain: "cluster.local"

clusterDNS:
  - "10.244.0.2"

nodeStatusUpdateFrequency: 10s

nodeStatusReportFrequency: 1m

imageMinimumGCAge: 2m

imageGCHighThresholdPercent: 85

imageGCLowThresholdPercent: 80

volumeStatsAggPeriod: 1m

kubeletCgroups: ""

systemCgroups: ""

cgroupRoot: ""

cgroupsPerQOS: true

cgroupDriver: cgroupfs

runtimeRequestTimeout: 10m

hairpinMode: promiscuous-bridge

maxPods: 220

podCIDR: "172.30.0.0/16"

podPidsLimit: -1

resolvConf: /etc/resolv.conf

maxOpenFiles: 1000000

kubeAPIQPS: 1000

kubeAPIBurst: 2000

serializeImagePulls: false

evictionHard:
  memory.available: "100Mi"
  nodefs.available: "10%"
  nodefs.inodesFree: "5%"
  imagefs.available: "15%"

evictionSoft: {}

enableControllerAttachDetach: true

failSwapOn: true

containerLogMaxSize: 20Mi

containerLogMaxFiles: 10

systemReserved: {}

kubeReserved: {}

systemReservedCgroup: ""

kubeReservedCgroup: ""

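enforceNodeAllocatable: ["pods"]

Parameter notes:

address: the IP address on which the kubelet's secure server (HTTPS, port 10250) listens;

readOnlyPort=0: disables the read-only port (10255 by default);

authentication.anonymous.enabled: set to false so anonymous access to port 10250 is not allowed;

authentication.x509.clientCAFile: the CA certificate that signs client certificates; enables HTTPS client-certificate authentication;

authentication.webhook.enabled=true: enables HTTPS bearer token authentication. Requests (from kube-apiserver or other clients) that pass neither x509 certificate nor webhook authentication are rejected with Unauthorized;

authorization.mode=Webhook: the kubelet uses the SubjectAccessReview API to ask kube-apiserver whether a given user or group is allowed to operate on a resource (RBAC);

featureGates.RotateKubeletClientCertificate, featureGates.RotateKubeletServerCertificate: rotate certificates automatically; the certificate validity period is set by kube-controller-manager's --experimental-cluster-signing-duration parameter. The template above expresses this through rotateCertificates and serverTLSBootstrap rather than featureGates.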

[root@master1 yaml]# NODE_IPS=(192.168.192.222 192.168.192.223 192.168.192.224 192.168.192.225 192.168.192.226)

[root@master1 yaml]# NODE_NAMES=(master1 master2 master3 node1 node2)

[root@master1 yaml]# for i in `seq 0 4`;do sed "s@##NODE_IP##@${NODE_IPS[$i]}@g" ./kubelet-config.yaml.template > ./kubelet-config.${NODE_NAMES[$i]};done
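The sed loops in this article silently leave a placeholder in place if its name is mistyped. A safer alternative is a small helper (the name `render_template` is hypothetical) that substitutes any number of `##KEY##` placeholders. It fails if any placeholder is left over, so the same helper could render every per-node template in this article.

```bash
#!/usr/bin/env bash
# Hypothetical helper: replace ##KEY## placeholders in a template and refuse to
# emit output if any placeholder is left, so a typo never reaches a node.
render_template() {
  local template=$1
  shift
  if [ $# -eq 0 ]; then
    echo "usage: render_template FILE KEY=VALUE..." >&2
    return 1
  fi
  local kv expr=()
  for kv in "$@"; do
    # @ is the sed delimiter, as in the loop above; values must not contain @.
    expr+=(-e "s@##${kv%%=*}##@${kv#*=}@g")
  done
  local out
  out=$(sed "${expr[@]}" "$template") || return 1
  if grep -q '##[A-Z_][A-Z_]*##' <<< "$out"; then
    echo "unreplaced placeholder in $template" >&2
    return 1
  fi
  printf '%s\n' "$out"
}
```

Example: `render_template ./kubelet-config.yaml.template NODE_IP=192.168.192.222 > ./kubelet-config.master1`. The kube-proxy template takes both `NODE_IP=...` and `NODE_NAME=...`.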

[root@master1 yaml]# for i in `seq 0 4`;do scp ./kubelet-config.${NODE_NAMES[$i]} root@${NODE_IPS[$i]}:/etc/kubernetes/kubelet-config.yaml; done

3. Service configuration

[root@master1 service]# vim kubelet.service.template

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/data/k8s/k8s/kubelet

ExecStart=/opt/k8s/bin/kubelet \

--allow-privileged=true \

--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \

--cert-dir=/etc/kubernetes/cert \

--cni-conf-dir=/etc/cni/net.d \

--container-runtime=docker \

--container-runtime-endpoint=unix:///var/run/dockershim.sock \

--root-dir=/data/k8s/k8s/kubelet \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--config=/etc/kubernetes/kubelet-config.yaml \

--hostname-override=##NODE_NAME## \

--pod-infra-container-image=192.168.192.234:888/pause:latest \

--image-pull-progress-deadline=15m \

--volume-plugin-dir=/data/k8s/k8s/kubelet/kubelet-plugins/volume/exec/ \

--logtostderr=true \

--v=2

Restart=always

RestartSec=5

StartLimitInterval=0

[Install]

WantedBy=multi-user.target

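--bootstrap-kubeconfig: points to the bootstrap kubeconfig file. The kubelet uses the user name and token in this file to send the TLS Bootstrapping request to kube-apiserver.

--cert-dir: the directory where the kubelet stores its certificate and private key after Kubernetes signs the certificate. They are then referenced from the file given by --kubeconfig.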

[root@master1 service]# NODE_NAMES=(master1 master2 master3 node1 node2)

[root@master1 service]# for i in ${NODE_NAMES[@]} ; do sed "s@##NODE_NAME##@$i@g" ./kubelet.service.template > ./kubelet.service.$i ;done

[root@master1 service]# for i in ${NODE_NAMES[@]} ;do scp ./kubelet.service.$i root@$i:/etc/systemd/system/kubelet.service; done

4. Start the service and check

[root@master1 docker]# ansible all -i /root/udp/hosts.ini -m shell -a "mkdir /data/k8s/k8s/kubelet/kubelet-plugins/volume/exec/ -pv "

[root@master1 docker]# ansible all -i /root/udp/hosts.ini -m shell -a "systemctl daemon-reload;systemctl restart kubelet"

[root@master1 docker]# ansible all -i /root/udp/hosts.ini -m shell -a "systemctl status kubelet"

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m shell -a "systemctl enable kubelet"

5. Check: two nodes fail to join the cluster

[root@master2 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 Ready <none> 179m v1.14.3

master2 Ready <none> 4h10m v1.14.3

master3 Ready <none> 4h10m v1.14.3

On node1, systemctl status kubelet reports errors:

Jul 21 23:06:08 node1 kubelet[21844]: E0721 23:06:08.081728 21844 reflector.go:126] k8s.io/kubernetes/pkg/kubelet/kubelet.go:442: Failed to list *v1.Service: Get https://127.0.0.1:8443/api/v1/services?limit=500&resourceVersion=0: dial tcp 127.0.0.1:8443: connect: connection refused

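Jul 21 23:06:08 node1 kubelet[21844]: E0721 23:06:08.113704 21844 kubelet.go:2244] node "node1" not found

The bootstrap kubeconfig points at https://127.0.0.1:8443, which is served by the local kube-nginx proxy. kube-nginx was installed only on the masters, so the connection is refused on node1 and node2. Solutions:

Option 1: install kube-nginx on node1 and node2 as well.

Option 2: on node1 and node2, change the server in /etc/kubernetes/kubelet-bootstrap.kubeconfig to port 6443 of master1, master2 or master3.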

This has been corrected in https://blog.51cto.com/hmtk520/2423306.
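For option 2, the server entry has to be rewritten on each affected node. Where kubectl is installed, `kubectl config set-cluster kubernetes --server=... --kubeconfig=...` (the command used earlier to create the file) does this cleanly. On a node without kubectl, a sed-based sketch works instead. The helper below is hypothetical and assumes GNU sed and the standard kubeconfig layout.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rewrite every "server:" entry of a kubeconfig in place.
# Assumes GNU sed (-i without a backup suffix).
set_kubeconfig_server() {
  local file=$1 url=$2
  # '#' is the sed delimiter because the URL contains '/'.
  sed -i -E "s#^([[:space:]]*server:[[:space:]]*).*#\1${url}#" "$file"
}
```

Example on node1: `set_kubeconfig_server /etc/kubernetes/kubelet-bootstrap.kubeconfig https://192.168.192.222:6443 && systemctl restart kubelet`. Here 192.168.192.222 is master1.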

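6. Bootstrap Token Auth and granting permissions

1) When the kubelet starts, it checks whether the file given by --kubeconfig (which holds the user's certificate information) exists. If it does not, the kubelet uses the kubeconfig given by --bootstrap-kubeconfig to send a certificate signing request (CSR) to kube-apiserver.

When kube-apiserver receives the CSR, it authenticates the token in the request. Once authentication succeeds, it sets the request's user to system:bootstrap:<Token ID> and its group to system:bootstrappers. This process is called Bootstrap Token Auth.

By default this user and group have no permission to create CSRs, so the kubelet fails to start.

The fix is to create a ClusterRoleBinding that binds the group system:bootstrappers to the ClusterRole system:node-bootstrapper: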

[root@master1 ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

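2) After starting, the kubelet uses --bootstrap-kubeconfig to send a CSR to kube-apiserver. Once the CSR is approved, kube-controller-manager issues the TLS client certificate. The kubelet then writes that certificate, its private key and the --kubeconfig file.

Note: kube-controller-manager issues certificates for TLS Bootstrap only if it is configured with the --cluster-signing-cert-file and --cluster-signing-key-file parameters.

3) Automatically approving CSRs

Create three ClusterRoleBindings, used respectively to automatically approve client certificates, renew client certificates and renew server certificates:

[root@master1 service]# kubectl get csr # many are in Pending state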

[root@master1 yaml]# vim csr-crb.yaml

# Approve all CSRs for the group "system:bootstrappers"
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io
---
# To let a node of the group "system:nodes" renew its own credentials
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-client-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io
---
# A ClusterRole which instructs the CSR approver to approve a node requesting a
# serving cert matching its client cert.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: approve-node-server-renewal-csr
rules:
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests/selfnodeserver"]
  verbs: ["create"]
---
# To let a node of the group "system:nodes" renew its own server credentials
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-server-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: approve-node-server-renewal-csr
  apiGroup: rbac.authorization.k8s.io

[root@master1 yaml]# kubectl apply -f csr-crb.yaml

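auto-approve-csrs-for-group: automatically approves a node's first CSR. Note that for the first CSR the requesting group is system:bootstrappers;

node-client-cert-renewal: automatically approves renewal of a node's expiring client certificate; the group of the generated certificate is system:nodes;

node-server-cert-renewal: automatically approves renewal of a node's expiring server certificate; the group of the generated certificate is system:nodes;

7. Check and verify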

[root@master1 yaml]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 Ready <none> 18h v1.14.3

master2 Ready <none> 19h v1.14.3

master3 Ready <none> 19h v1.14.3

node1 Ready <none> 15h v1.14.3

node2 Ready <none> 15h v1.14.3

kube-controller-manager has generated a kubeconfig file and a key pair for each node:

[root@master1 yaml]# ansible all -i /root/udp/hosts.ini -m shell -a "ls /etc/kubernetes/kubelet.kubeconfig"

[root@master1 yaml]# ansible all -i /root/udp/hosts.ini -m shell -a "ls -l /etc/kubernetes/cert/ |grep kubelet"

[root@master1 yaml]# kubectl get csr |grep Pending # find CSRs in Pending state

[root@master1 yaml]# kubectl certificate approve csr-zzhxb # approve the certificate manually
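When many CSRs are pending, approving them one at a time is tedious. The sketch below extracts the names of Pending CSRs from `kubectl get csr` output. The helper `pending_csrs` is hypothetical and assumes the v1.14 column layout NAME AGE REQUESTOR CONDITION.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get csr" output on stdin and print the
# names of CSRs whose CONDITION (last column) is Pending.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Usage: `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve`. Check the REQUESTOR column first, because this approves every pending request, including any you did not expect.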

[root@node1 ~]# ls -l /etc/kubernetes/cert/kubelet-*

-rw------- 1 root root 1269 Jul 21 23:16 /etc/kubernetes/cert/kubelet-client-2019-07-21-23-16-26.pem

lrwxrwxrwx 1 root root 59 Jul 21 23:16 /etc/kubernetes/cert/kubelet-client-current.pem -> /etc/kubernetes/cert/kubelet-client-2019-07-21-23-16-26.pem

-rw------- 1 root root 1305 Jul 22 14:49 /etc/kubernetes/cert/kubelet-server-2019-07-22-14-49-22.pem

lrwxrwxrwx 1 root root 59 Jul 22 14:49 /etc/kubernetes/cert/kubelet-server-current.pem -> /etc/kubernetes/cert/kubelet-server-2019-07-22-14-49-22.pem

Ports the kubelet listens on:

[root@node1 ~]# netstat -tunlp |grep kubelet

tcp 0 0 192.168.192.225:10248 0.0.0.0:* LISTEN 8931/kubelet # 10248: healthz HTTP endpoint

tcp 0 0 127.0.0.1:19529 0.0.0.0:* LISTEN 8931/kubelet

tcp 0 0 192.168.192.225:10250 0.0.0.0:* LISTEN 8931/kubelet # 10250: HTTPS endpoint; every request to it must be authenticated and authorized (even /healthz)

8. kubelet API authentication and authorization

The kubelet listens for HTTPS requests on port 10250 and serves these endpoints:

/pods、/runningpods

/metrics、/metrics/cadvisor、/metrics/probes

/spec

/stats、/stats/container

/logs

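/run/, /exec/, /attach/, /portForward/, /containerLogs/

Reference: https://github.com/kubernetes/kubernetes/blob/master/pkg/kubelet/server/server.go#L434:3

Anonymous authentication is disabled and webhook authorization is enabled, so every request to the HTTPS API on port 10250 must be authenticated and authorized.

The predefined ClusterRole system:kubelet-api-admin grants access to all kubelet APIs. The User in the kubernetes certificate used by kube-apiserver has been granted this permission: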

[root@master1 yaml]# kubectl describe clusterrole system:kubelet-api-admin

Name: system:kubelet-api-admin

Labels: kubernetes.io/bootstrapping=rbac-defaults

Annotations: rbac.authorization.kubernetes.io/autoupdate: true

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

nodes/log [] [] [*]

nodes/metrics [] [] [*]

nodes/proxy [] [] [*]

nodes/spec [] [] [*]

nodes/stats [] [] [*]

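nodes [] [] [get list watch proxy]

The kubelet is configured with the following authentication and authorization parameters:

- authentication.anonymous.enabled: set to false, so anonymous access to port 10250 is not allowed;

- authentication.x509.clientCAFile: the CA certificate that signs client certificates; enables HTTPS client-certificate authentication;

- authentication.webhook.enabled=true: enables HTTPS bearer token authentication;

- authorization.mode=Webhook: enables RBAC authorization;

When the kubelet receives a request, it verifies the client certificate's signature against clientCAFile, or checks whether the bearer token is valid. If neither succeeds, the request is rejected with Unauthorized:

[root@master1 yaml]# curl -s --cacert /etc/kubernetes/cert/ca.pem https://172.27.137.240:10250/metrics

Unauthorized

[root@master1 yaml]# curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer 123456" https://172.27.137.240:10250/metrics

Unauthorized

After authentication succeeds, the kubelet sends a SubjectAccessReview request to kube-apiserver. It asks whether the user and group behind the certificate or token are allowed to operate on the resource (RBAC).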
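The official kubelet authentication/authorization documentation describes how the kubelet turns an HTTP request into the attributes it sends in that SubjectAccessReview. The HTTP method becomes the verb, the resource is always nodes (the node name becomes the resource name), and the path prefix selects the subresource. The sketch below reproduces that mapping (the helper `kubelet_authz_attrs` is hypothetical). Its output for /metrics matches the Forbidden message in step 9.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a kubelet API request to the authorization attributes
# (verb, resource, subresource) that the kubelet checks via SubjectAccessReview.
kubelet_authz_attrs() {
  local method=$1 path=$2 verb subresource
  # HTTP method -> RBAC verb
  case "$method" in
    POST) verb=create ;;
    GET|HEAD) verb=get ;;
    PUT) verb=update ;;
    PATCH) verb=patch ;;
    DELETE) verb=delete ;;
    *) echo "unsupported method: $method" >&2; return 1 ;;
  esac
  # Request path prefix -> subresource of the nodes resource
  case "$path" in
    /stats|/stats/*) subresource=stats ;;
    /metrics|/metrics/*) subresource=metrics ;;
    /logs|/logs/*) subresource=log ;;
    /spec|/spec/*) subresource=spec ;;
    *) subresource=proxy ;;
  esac
  echo "verb=${verb} resource=nodes subresource=${subresource}"
}

kubelet_authz_attrs GET /metrics/cadvisor              # verb=get resource=nodes subresource=metrics
kubelet_authz_attrs POST /exec/default/base/base-v1    # verb=create resource=nodes subresource=proxy
```

A consequence of this mapping: a client granted only nodes/metrics and nodes/stats can read metrics but cannot exec into containers, because /exec falls under nodes/proxy.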

9. Certificate authentication and authorization

[root@master1 yaml]# curl -s --cacert /etc/kubernetes/cert/ca.pem --cert /etc/kubernetes/cert/kube-controller-manager.pem --key /etc/kubernetes/cert/kube-controller-manager-key.pem https://192.168.192.222:10250/metrics

Forbidden (user=system:kube-controller-manager, verb=get, resource=nodes, subresource=metrics)


[root@master1 yaml]# curl -s --cacert /etc/kubernetes/cert/ca.pem --cert /opt/k8s/work/cert/admin.pem --key /opt/k8s/work/cert/admin-key.pem https://192.168.192.222:10250/metrics|head

# HELP apiserver_audit_event_total Counter of audit events generated and sent to the audit backend.

# TYPE apiserver_audit_event_total counter

apiserver_audit_event_total 0

# HELP apiserver_audit_requests_rejected_total Counter of apiserver requests rejected due to an error in audit logging backend.

# TYPE apiserver_audit_requests_rejected_total counter

apiserver_audit_requests_rejected_total 0

# HELP apiserver_client_certificate_expiration_seconds Distribution of the remaining lifetime on the certificate used to authenticate a request.

# TYPE apiserver_client_certificate_expiration_seconds histogram

apiserver_client_certificate_expiration_seconds_bucket{le="0"} 0

apiserver_client_certificate_expiration_seconds_bucket{le="1800"} 0

10. Bearer token authentication and authorization

[root@master1 yaml]# kubectl create sa kubelet-api-test

[root@master1 yaml]# kubectl create clusterrolebinding kubelet-api-test --clusterrole=system:kubelet-api-admin --serviceaccount=default:kubelet-api-test

clusterrolebinding.rbac.authorization.k8s.io/kubelet-api-test created

[root@master1 yaml]# SECRET=$(kubectl get secrets | grep kubelet-api-test | awk '{print $1}')

[root@master1 yaml]# TOKEN=$(kubectl describe secret ${SECRET} | grep -E '^token' | awk '{print $2}')

[root@master1 yaml]# echo ${TOKEN}

eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6Imt1YmVsZXQtYXBpLXRlc3QtdG9rZW4tc2hnNnAiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoia3ViZWxldC1hcGktdGVzdCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImVhZDI4ZjMzLWFlZjAtMTFlOS05MDMxLTAwMTYzZTAwMDdmZiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpkZWZhdWx0Omt1YmVsZXQtYXBpLXRlc3QifQ.Ej1D4q-ekLNi_1QEDXq4RxvNdPBWHNPNwUk9NE-O6-4a003yHZtU019ykqBu7WEc8yi1MB75D1in3I9oXh5bL1-bpJbDcK6z7_M8qGl2-20yFnppprP99tUJUQo7d6G3XS7jtmMbpwPgJyVrwvIX_ex2pvRkWqijR0jzGLWbWt7oEmY206o5ePF1vycpHdKznkEh4lXmJt-Nx8kzeuOLcBrc8MM2luIi26E3Tec793cQWsf6uMCgqaivkI0PlYY_jLmUJyg3pY8bsFUSCj6C6_5SqFMv2NjiMOtX6AjBDdtq64EHZcfaQd3O7hlXNgK4txlNlaqwE1vO8viexk1ONQ
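The token is a JWT, so its claims can be inspected locally to confirm which identity it carries. The minimal sketch below decodes the payload segment (the helper `jwt_payload` is hypothetical, and GNU base64 is assumed). It does not verify the signature, so use it only for inspection. For this token the sub claim should be system:serviceaccount:default:kubelet-api-test, the identity bound by the kubelet-api-test ClusterRoleBinding.

```bash
#!/usr/bin/env bash
# Hypothetical helper: decode the payload (second dot-separated segment) of a JWT.
# Inspection only: the signature is NOT verified.
jwt_payload() {
  local payload
  # base64url -> standard base64 alphabet
  payload=$(cut -d. -f2 <<< "$1" | tr '_-' '/+')
  # base64url drops '=' padding; restore it to a multiple of 4 characters.
  while (( ${#payload} % 4 )); do
    payload+='='
  done
  base64 -d <<< "$payload"
}
```

Usage: `jwt_payload "${TOKEN}"`.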

[root@master1 yaml]# curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer ${TOKEN}" https://192.168.192.222:10250/metrics|head

# HELP apiserver_audit_event_total Counter of audit events generated and sent to the audit backend.

# TYPE apiserver_audit_event_total counter

apiserver_audit_event_total 0

# HELP apiserver_audit_requests_rejected_total Counter of apiserver requests rejected due to an error in audit logging backend.

# TYPE apiserver_audit_requests_rejected_total counter

apiserver_audit_requests_rejected_total 0

# HELP apiserver_client_certificate_expiration_seconds Distribution of the remaining lifetime on the certificate used to authenticate a request.

# TYPE apiserver_client_certificate_expiration_seconds histogram

apiserver_client_certificate_expiration_seconds_bucket{le="0"} 0

apiserver_client_certificate_expiration_seconds_bucket{le="1800"} 0

11. cAdvisor and metrics

cAdvisor is built into the kubelet binary. It collects the resource usage (CPU, memory, disk, network) of every container on its node.

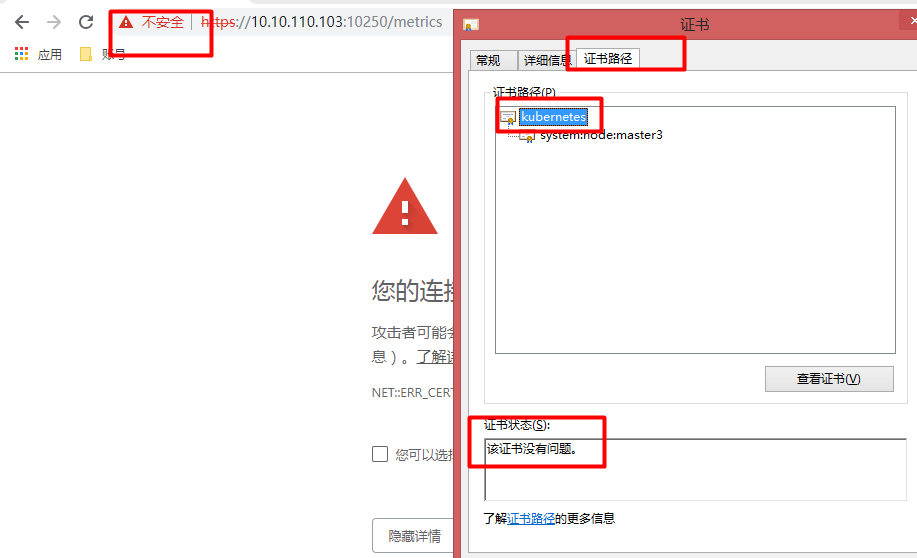

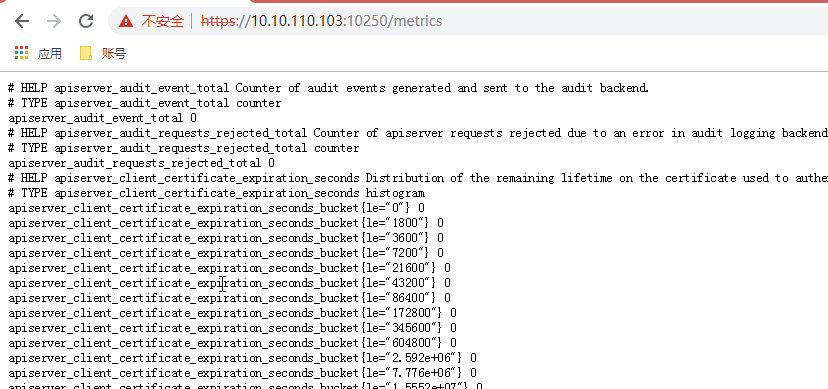

Open https://192.168.192.222:10250/metrics and https://192.168.192.222:10250/metrics/cadvisor in a browser. They return the kubelet metrics and the cAdvisor metrics respectively.

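kubelet-config.yaml sets authentication.anonymous.enabled to false, so anonymous access to the HTTPS service on port 10250 is not allowed.

The Kubernetes CA certificate has to be installed in the browser manually.

In this setup a proxy sits in front of the metrics endpoints. The proxied addresses are https://10.10.110.103:10250/metrics and https://10.10.110.103:10250/metrics/cadvisor.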

1) Download ca.pem to the local machine

Rename it to ca.crt

2) Install the certificate

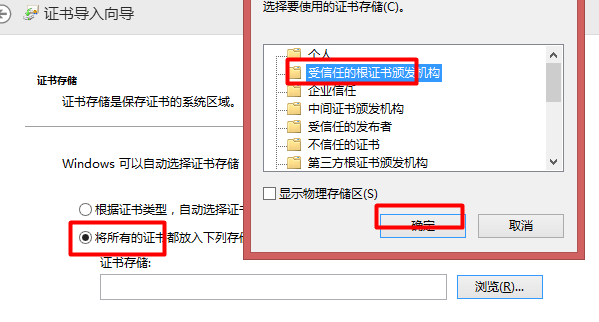

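Double-click the root certificate file (ca.crt) -> Install Certificate -> Store location: Local Machine (see image 3)

3) Check the certificate status

4) Export a personal certificate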

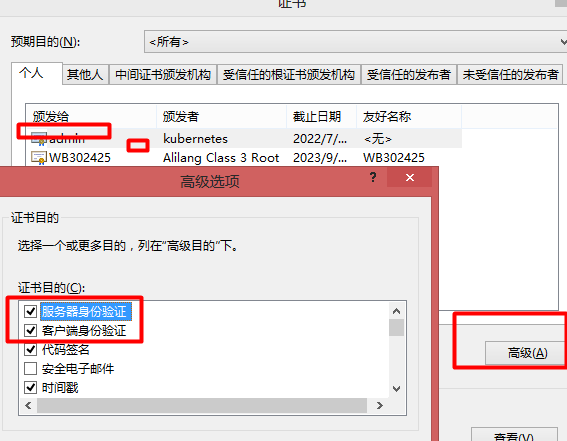

The kubelet's HTTPS port requires mutual (two-way) TLS authentication, so a client certificate is needed as well:

[root@master1 ~]# openssl pkcs12 -export -out admin.pfx -inkey admin-key.pem -in admin.pem -certfile ca.pem

5) Import the personal certificate

Chrome -> Settings -> Advanced -> Manage certificates -> Personal -> Import (follow the prompts to import the certificate)

12. Get the kubelet configuration

[root@master1 yaml]# curl -sSL --cacert /etc/kubernetes/cert/ca.pem --cert /opt/k8s/work/cert/admin.pem --key /opt/k8s/work/cert/admin-key.pem https://127.0.0.1:8443/api/v1/nodes/master1/proxy/configz | jq '.kubeletconfig|.kind="KubeletConfiguration"|.apiVersion="kubelet.config.k8s.io/v1beta1"'

III. Install kube-proxy

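kube-proxy runs on all worker nodes. It watches kube-apiserver for changes to Services and Endpoints and creates routing rules that provide Service IPs and load balancing.

This article describes deploying kube-proxy in ipvs mode.

1. Create the certificate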

[root@master1 cert]# vim kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HangZhou",

"L": "HangZhou",

"O": "k8s",

"OU": "FirstOne"

}

]

}

[root@master1 cert]# cfssl gencert -ca=./ca.pem -ca-key=./ca-key.pem -config=./ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2. Configure the kubeconfig

The kubeconfig carries the master's address and the authentication information:

[root@master1 cert]# kubectl config set-cluster kubernetes --certificate-authority=./ca.pem --embed-certs=true --server=https://127.0.0.1:8443 --kubeconfig=kube-proxy.kubeconfig

[root@master1 cert]# kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

[root@master1 cert]# kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

[root@master1 cert]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

[root@master1 cert]# ansible all -i /root/udp/hosts.ini -m copy -a "src=./kube-proxy.kubeconfig dest=/etc/kubernetes/"

3. Create the kube-proxy configuration file

[root@master1 cert]# vim kube-proxy-config.yaml.template

kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  burst: 200
  kubeconfig: "/etc/kubernetes/kube-proxy.kubeconfig"
  qps: 100
bindAddress: ##NODE_IP##
healthzBindAddress: ##NODE_IP##:10256
metricsBindAddress: ##NODE_IP##:10249
enableProfiling: true
clusterCIDR: 172.30.0.0/16
hostnameOverride: ##NODE_NAME##
mode: "ipvs"
portRange: ""
iptables:
  masqueradeAll: false
ipvs:
  scheduler: rr
  excludeCIDRs: []

[root@master1 cert]# NODE_IPS=(192.168.192.222 192.168.192.223 192.168.192.224 192.168.192.225 192.168.192.226)

[root@master1 cert]# NODE_NAMES=(master1 master2 master3 node1 node2)

[root@master1 cert]# for i in `seq 0 4 `;do sed "s@##NODE_NAME##@${NODE_NAMES[$i]}@g;s@##NODE_IP##@${NODE_IPS[$i]}@g" ./kube-proxy-config.yaml.template > ./kube-proxy-config.yaml.${NODE_NAMES[$i]};done

[root@master1 cert]# for i in ${NODE_NAMES[@]};do scp ./kube-proxy-config.yaml.$i root@$i:/etc/kubernetes/kube-proxy-config.yaml ;done

4. Configure the systemd unit file

[root@master1 service]# vim kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

WorkingDirectory=/data/k8s/k8s/kube-proxy

ExecStart=/opt/k8s/bin/kube-proxy \

--config=/etc/kubernetes/kube-proxy-config.yaml \

--logtostderr=true \

--v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m copy -a "src=./kube-proxy.service dest=/etc/systemd/system/"

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m shell -a "mkdir /data/k8s/k8s/kube-proxy"

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m shell -a "modprobe ip_vs_rr"

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m shell -a "lsmod |grep vs"
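ipvs mode needs the IPVS kernel modules on every node. The small sketch below reads `lsmod` output and reports which of the given modules are not loaded (the helper `missing_modules` is hypothetical). ip_vs and ip_vs_rr come from this article, since rr is the configured scheduler. Any other module names you pass are your own assumption.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "lsmod" output on stdin and print every module named
# in the arguments that is not currently loaded.
missing_modules() {
  local loaded m
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in "$@"; do
    grep -qx "$m" <<< "$loaded" || echo "$m"
  done
}
```

Usage on a node: `lsmod | missing_modules ip_vs ip_vs_rr`. Empty output means both modules are loaded.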

[root@master1 service]# ansible all -i /root/udp/hosts.ini -m shell -a "systemctl daemon-reload && systemctl enable kube-proxy && systemctl restart kube-proxy"

IV. Verify cluster availability

1. Check node status

[root@master1 cert]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 Ready <none> 20h v1.14.3

master2 Ready <none> 21h v1.14.3

master3 Ready <none> 21h v1.14.3

node1 Ready <none> 17h v1.14.3

node2 Ready <none> 17h v1.14.3

2. Create a test YAML

[root@master1 yaml]# cat dp.yaml

apiVersion: v1
kind: Service
metadata:
  name: baseservice
  namespace: default
spec:
  ports:
  - name: ssh
    port: 22
    targetPort: 22
  selector:
    name: base
    version: v1
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: base
  namespace: default
spec:
  replicas: 5
  selector:
    matchLabels:
      name: base
      version: v1
  template:
    metadata:
      labels:
        name: base
        version: v1
    spec:
      containers:
      - name: base-v1
        image: 192.168.192.234:888/base:v1
        ports:
        - name: ssh
          containerPort: 22

[root@master1 yaml]# kubectl apply -f dp.yaml

service/baseservice created

deployment.apps/base created

[root@master1 yaml]# kubectl get pods

NAME READY STATUS RESTARTS AGE

base-775b9cd6f4-6hg4k 1/1 Running 0 3s

base-775b9cd6f4-hkhfm 1/1 Running 0 3s

base-775b9cd6f4-hvsrm 1/1 Running 0 3s

base-775b9cd6f4-mmkgj 1/1 Running 0 3s

base-775b9cd6f4-rsn79 0/1 CrashLoopBackOff 1 3s
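With more replicas, it helps to pick out the pods that need attention before running kubectl describe. The sketch below reads `kubectl get pods` output and prints every pod whose STATUS is not Running or whose READY count is incomplete (the helper `unready_pods` is hypothetical).

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get pods" output on stdin and print pods whose
# STATUS is not Running or whose READY column (e.g. 0/1) is incomplete.
unready_pods() {
  awk 'NR > 1 {
    split($2, r, "/")
    if ($3 != "Running" || r[1] != r[2]) print $1, $2, $3
  }'
}
```

Usage: `kubectl get pods | unready_pods`. `kubectl get pods -o wide` works too, since its first three columns are the same and it also shows the node.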

[root@master1 yaml]# kubectl describe pods base-775b9cd6f4-rsn79

Name: base-775b9cd6f4-rsn79

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: node1/192.168.192.225

Start Time: Mon, 22 Jul 2019 23:08:15 +0800

Labels: name=basev1

pod-template-hash=775b9cd6f4

version=v1

Annotations: <none>

Status: Running

IP:

Controlled By: ReplicaSet/base-775b9cd6f4

Containers:

base:

Container ID: docker://4d0ac70c18989fb1aefd80ae5202449c71e9c63690dc3da44de5ee100b3d7959

Image: 192.168.192.234:888/base:latest

Image ID: docker-pullable://192.168.192.234:888/base@sha256:1fff451e7371bbe609536604a17fd73920e1644549c93308b9f4d035ea4d8740

Port: 22/TCP

Host Port: 0/TCP

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: ContainerCannotRun

Message: cannot join network of a non running container: 8df53e746bb9e80c9249a08eb6c4da0e9fcf0a8435f2f26a7f02dca6e50ba77b

Exit Code: 128

Started: Mon, 22 Jul 2019 23:08:26 +0800

Finished: Mon, 22 Jul 2019 23:08:26 +0800

Ready: False

Restart Count: 2

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-lf2bh (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

default-token-lf2bh:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-lf2bh

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 360s

node.kubernetes.io/unreachable:NoExecute for 360s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 27s default-scheduler Successfully assigned default/base-775b9cd6f4-rsn79 to node1

Normal Pulling 26s (x2 over 27s) kubelet, node1 Pulling image "192.168.192.234:888/base:latest"

Normal Pulled 26s (x2 over 26s) kubelet, node1 Successfully pulled image "192.168.192.234:888/base:latest"

Normal Created 26s (x2 over 26s) kubelet, node1 Created container base

Warning Failed 26s kubelet, node1 Error: failed to start container "base": Error response from daemon: cannot join network of a non running container: 177fae54ba52402deb52609f98c24177695ef849dd929f5166923324e431997e

Warning Failed 26s kubelet, node1 Error: failed to start container "base": Error response from daemon: cannot join network of a non running container: 1a3d1c9f9ec7cf6ae2011777c60d7347d54efe4e1a225f1e75794bc51ec55556

Normal SandboxChanged 18s (x9 over 26s) kubelet, node1 Pod sandbox changed, it will be killed and re-created.

Warning BackOff 18s (x8 over 25s) kubelet, node1 Back-off restarting failed container

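Various approaches were tried without finding the root cause. In the end the problem was solved by reinstalling the node's operating system and then reinstalling and reconfiguring docker, kubelet and kube-proxy.

3. Problem log

kubelet fails to start with:

failed to start ContainerManager failed to initialize top level QOS containers: failed to update top level Burstable QOS cgroup : failed to set supported cgroup subsystems for cgroup [kubepods burstable]: Failed to find subsystem mount for required subsystem: pids

Solution: add the --exec-opt native.cgroupdriver=systemd option to docker.service. The kubelet and Docker must use the same cgroup driver, so in that case cgroupDriver in kubelet-config.yaml (cgroupfs above) must also be changed to systemd.

Option 1: reboot the host

Option 2: mount the pids cgroup manually: mount -t cgroup -o pids,rw,nosuid,nodev,noexec,relatime,pids pids /sys/fs/cgroup/pids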

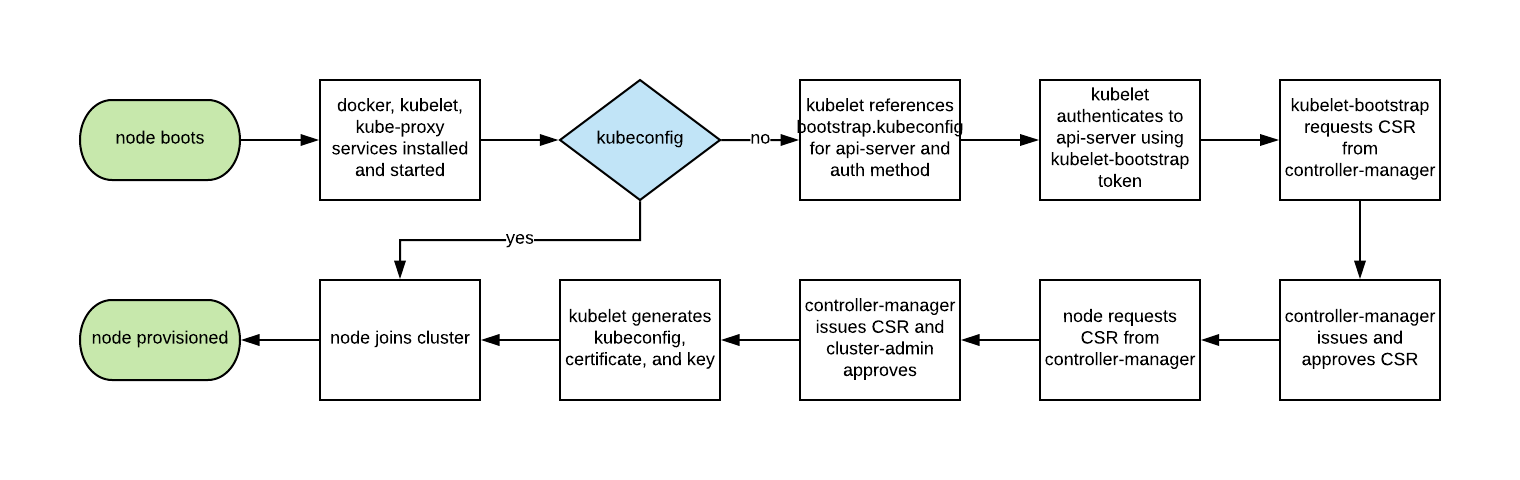

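V. Bootstrap explained

1. Bootstrap

By default a node has only docker and kubelet installed. The kubelet has to talk to kube-apiserver, but neither side knows the other. Kubelet bootstrap therefore has to solve the following problems automatically:

1) Knowing only kube-apiserver's IP address, how does the node obtain kube-apiserver's CA certificate?

2) How does kube-apiserver come to trust the worker? Until kube-apiserver trusts it, the worker has no way to obtain its own certificate, a chicken-and-egg problem.

2. Creating a bootstrap token

For kube-apiserver to trust the kubelet, the worker first has to pass the master's authentication and authorization.

Authentication methods supported by Kubernetes include Bootstrap Token Authentication, static token files, x509 certificates and ServiceAccount tokens.

With Bootstrap Token Authentication, the kubelet only needs to be given a special token to pass kube-apiserver's authentication.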

[root@master1 yaml]# cat token.yaml

apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-abcdef # the name must have the form bootstrap-token-<token-id>
  namespace: kube-system
type: bootstrap.kubernetes.io/token # the type must be bootstrap.kubernetes.io/token
stringData:
  description: "The bootstrap token for testing."
  token-id: abcdef # token-id and token-secret are 6 and 16 characters of lowercase letters and digits
  token-secret: 0123456789abcdef
  expiration: 2019-09-16T00:00:00Z # once the token expires, the kubelet can no longer use it to talk to kube-apiserver
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:test-nodes # extra groups for the token's user, in addition to the default group system:bootstrappers
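Instead of picking token-id and token-secret by hand, a random token can be generated. `kubeadm token generate` prints one. The bash sketch below builds a Secret in the same shape as token.yaml, with an expiration of 1 day as described earlier. The helpers are hypothetical, GNU date is assumed, and the token itself is printed to stderr.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: generate a random bootstrap token and print a Secret
# manifest shaped like token.yaml above. Assumes GNU date.
rand_chars() {
  # Print $1 random characters from [a-z0-9].
  LC_ALL=C tr -dc 'a-z0-9' < /dev/urandom | head -c "$1"
}

bootstrap_token_secret() {
  local id secret expiration
  id=$(rand_chars 6)
  secret=$(rand_chars 16)
  expiration=$(date -u -d '+24 hours' +%Y-%m-%dT%H:%M:%SZ)
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-${id}
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  token-id: ${id}
  token-secret: ${secret}
  expiration: "${expiration}"
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:${1:-test-nodes}
EOF
  # The full token is what goes into a bootstrap kubeconfig.
  echo "token: ${id}.${secret}" >&2
}
```

Usage: `bootstrap_token_secret test-nodes > token.yaml && kubectl apply -f token.yaml`.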

[root@master1 yaml]# kubectl explain secret.type

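The main Secret types are kubernetes.io/service-account-token, bootstrap.kubernetes.io/token and Opaque.

[root@master1 bootstrap]# kubectl describe secret bootstrap-token-abcdef -n kube-system

[root@master1 bootstrap]# kubectl get secret bootstrap-token-abcdef -n kube-system -o yaml

3. Generating the kubelet certificate

The token has an expiration time, after which the kubelet can no longer use it to talk to kube-apiserver. The token is therefore only a temporary credential for the kubelet's initial contact with kube-apiserver.

Ultimately the kubelet has to use a certificate to talk to kube-apiserver. Having operators maintain a certificate for every node by hand is too cumbersome.

Bootstrap solves this problem. After the kubelet connects to kube-apiserver with the low-privilege bootstrap token, it must be able to request its own certificate from kube-apiserver automatically. kube-apiserver, in turn, must be able to approve that certificate automatically.

4. Generate a key and CSR for a test user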

[root@master1 bootstrap]# name=vip ;group=newlands

[root@master1 bootstrap]# cat vip.json

{

"hosts": [],

"CN": "vip",

"key": {

"algo": "ecdsa",

"size": 256

},

"names": [

{

"C": "NZ",

"ST": "Wellington",

"L": "Wellington",

"O": "newlands",

"OU": "Test"

}

]

}

[root@master1 bootstrap]# cat vip.json | cfssl genkey - | cfssljson -bare $name

This generates vip.csr and vip-key.pem.

5. Create the CSR

[root@master1 bootstrap]# cat <<EOF | kubectl apply -f -
apiVersion: certificates.k8s.io/v1beta1
kind: CertificateSigningRequest
metadata:
  name: $name
spec:
  groups:
  - $group
  request: $(cat ${name}.csr | base64 | tr -d '\n')
  usages:
  - key encipherment
  - digital signature
  - client auth
EOF

[root@master1 bootstrap]# kubectl get csr vip -o yaml

[root@master1 bootstrap]# kubectl get csr vip

NAME AGE REQUESTOR CONDITION

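vip 14m admin Pending

6. Bind ClusterRoles to the vip user

Goal: CSRs submitted by the vip user should be approved automatically. First, the vip user needs access to the CSR API. Granting it the following ClusterRole provides that access: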

[root@master1 cert]# kubectl describe clusterrole system:node-bootstrapper

Name: system:node-bootstrapper

Labels: kubernetes.io/bootstrapping=rbac-defaults

Annotations: rbac.authorization.kubernetes.io/autoupdate: true

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

certificatesigningrequests.certificates.k8s.io [] [] [create get list watch]

[root@master1 cert]# kubectl create clusterrolebinding csr-vip --clusterrole system:node-bootstrapper --user vip

Second, the vip user needs another special ClusterRole:

[root@master1 cert]# kubectl describe clusterrole system:certificates.k8s.io:certificatesigningrequests:nodeclient

Name: system:certificates.k8s.io:certificatesigningrequests:nodeclient

Labels: kubernetes.io/bootstrapping=rbac-defaults

Annotations: rbac.authorization.kubernetes.io/autoupdate: true

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

certificatesigningrequests.certificates.k8s.io/nodeclient [] [] [create]

[root@master1 cert]# kubectl create clusterrolebinding nodeclient-vip --clusterrole system:certificates.k8s.io:certificatesigningrequests:nodeclient --user vip

7. Test as the vip user

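Automatic approval currently applies only to kubelets. The certificate requested in vip's CSR must therefore meet three conditions. Its user name (CN) must have the form system:node:<name>, and its group (O) must be system:nodes. Its usages must be exactly key encipherment, digital signature and client auth, as in the CSR created in step 5.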
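These conditions can be checked before a CSR is submitted. The sketch below is a simplified version of the rules stated above (the helper `is_node_client_csr` is hypothetical). kube-controller-manager's approver inspects more fields of the request, so treat this only as a pre-flight check.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for the node-client CSR rules described above:
#   CN must be system:node:<name>, O must be system:nodes, and the usages must be
#   exactly key encipherment, digital signature and client auth.
# Simplified: the real approver checks more than this.
is_node_client_csr() {
  local cn=$1 org=$2
  shift 2
  [[ $cn == system:node:?* ]] || return 1
  [[ $org == system:nodes ]] || return 1
  # Compare the set of usages independent of order.
  local usages
  usages=$(printf '%s\n' "$@" | LC_ALL=C sort | paste -sd, -)
  [[ $usages == "client auth,digital signature,key encipherment" ]]
}

if is_node_client_csr system:node:test-node system:nodes \
    "key encipherment" "digital signature" "client auth"; then
  echo "eligible for automatic approval"
fi
```

The vip.json request from step 4 (CN vip, O newlands) fails this check. A request for system:node:test-node with O system:nodes, like the one in the output that follows, passes it.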

Then look at the CSRs. The status is already Approved,Issued, which completes the experiment:

$ kubectl get csr

NAME AGE REQUESTOR CONDITION

system:node:test-node 3s vip Approved,Issued


References:

https://feisky.gitbooks.io/kubernetes/content/troubleshooting/cluster.html

https://k8s-install.opsnull.com/07-2.kubelet.html

https://docs.docker.com/engine/reference/commandline/dockerd/ # more dockerd options

https://docs.docker.com/engine/reference/commandline/dockerd//#daemon-configuration-file # daemon.json configuration file

https://kubernetes.io/docs/reference/command-line-tools-reference/kubelet-authentication-authorization/ # kubelet authentication and authorization

https://k8s-install.opsnull.com/08.%E9%AA%8C%E8%AF%81%E9%9B%86%E7%BE%A4%E5%8A%9F%E8%83%BD.html

https://lingxiankong.github.io/2018-09-18-kubelet-bootstrap-process.html

https://github.com/kubernetes/kubernetes/blob/master/pkg/kubelet/apis/config/types.go # kubelet configuration file types