This lesson is divided into two parts:

Part 1: Spark Streaming on Pulling from Flume in practice

Part 2: Spark Streaming on Pulling from Flume source code analysis

First, a brief introduction to the two ways of connecting Flume to Spark Streaming: push mode (Flume pushes to Spark Streaming) and pull mode (Spark Streaming pulls from Flume).

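Push mode: Flume acts as a buffer that holds the data. Its sink connects to the port on which the Spark Streaming receiver is listening and, if the connection succeeds, pushes the data over. This mode is simple to set up and keeps coupling low. Its drawbacks are that Flume reports errors whenever the Spark Streaming application is not running, and that data can arrive faster than Spark Streaming is able to consume it.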

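Pull mode: Flume is configured with a custom sink, and Spark Streaming fetches data from the channel on its own initiative, at a rate that suits its current load. This makes pull mode the more stable of the two.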
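The difference between the two modes can be sketched without Flume at all. The plain-Java toy below models the channel as a bounded queue and lets the consumer ask for at most a fixed number of events whenever it is ready. The class and method names (PullModelSketch, pullBatch) are hypothetical and are not part of the Flume or Spark APIs; the point is only that in pull mode unread events stay buffered instead of being forced onto a slow consumer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Toy model only: PullModelSketch and pullBatch are hypothetical names,
// not Flume or Spark APIs.
final class PullModelSketch {

    // The consumer takes at most maxBatch events that are already buffered.
    static List<String> pullBatch(BlockingQueue<String> channel, int maxBatch) {
        List<String> batch = new ArrayList<>();
        channel.drainTo(batch, maxBatch);
        return batch;
    }

    public static void main(String[] args) {
        BlockingQueue<String> channel = new ArrayBlockingQueue<>(100);
        for (int i = 0; i < 5; i++) {
            channel.offer("event-" + i); // the source side keeps buffering events
        }
        List<String> first = pullBatch(channel, 3);
        System.out.println(first + ", still buffered: " + channel.size());
    }
}
```

Events that were not pulled remain in the queue until the next call, which is the property that makes pull mode tolerant of a consumer that is temporarily slow or down.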

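Flume pull in practice:

Step 1: Install Flume. Installation is not covered again in this lesson; see Lesson 87 (pushing data from Flume to Spark Streaming: hands-on case and source code internals).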

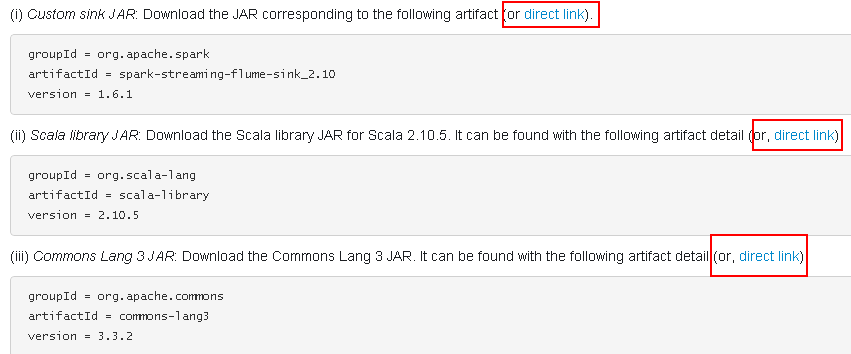

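Step 2: Add the required libraries to Flume. Following the official guide (http://spark.apache.org/docs/latest/streaming-flume-integration.html), either add the dependencies or download the three jars below directly, and place them in the lib directory under the Flume installation directory: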

spark-streaming-flume-sink_2.10-1.6.0.jar、scala-library-2.10.5.jar、commons-lang3-3.3.2.jar

Step 3: Configure the Flume agent. Copy flume-conf.properties.template to flume-conf.properties and edit the copy as follows:

# Flume pull mode

agent0.sources = source1

agent0.channels = memoryChannel

agent0.sinks = sink1

# Configure source1

agent0.sources.source1.type = spooldir

agent0.sources.source1.spoolDir = /home/hadoop/flume/tmp/TestDir

agent0.sources.source1.channels = memoryChannel

agent0.sources.source1.fileHeader = false

agent0.sources.source1.interceptors = il

agent0.sources.source1.interceptors.il.type = timestamp

# Configure sink1

agent0.sinks.sink1.type = org.apache.spark.streaming.flume.sink.SparkSink

agent0.sinks.sink1.hostname = SparkMaster

agent0.sinks.sink1.port = 9999

agent0.sinks.sink1.channel = memoryChannel

# Configure the channel (despite its name, memoryChannel is a file channel here)

agent0.channels.memoryChannel.type = file

agent0.channels.memoryChannel.checkpointDir = /home/hadoop/flume/tmp/checkpoint

agent0.channels.memoryChannel.dataDirs = /home/hadoop/flume/tmp/dataDir
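Because the source, the sink, and the channel are tied together only by name, a mistyped channel name is easy to miss. As a rough sanity check, the Java sketch below loads a configuration in the same key format and verifies that the sink's channel is one of the channels declared for the agent. FlumeConfCheck and its methods are hypothetical helpers written for this illustration; they are not part of Flume.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.HashSet;
import java.util.Properties;
import java.util.Set;

// Illustrative helper only; FlumeConfCheck is a hypothetical name, not a Flume tool.
final class FlumeConfCheck {

    // True when <agent>.sinks.<sink>.channel names a channel listed in <agent>.channels.
    static boolean sinkChannelDeclared(Properties conf, String agent, String sink) {
        String declared = conf.getProperty(agent + ".channels", "").trim();
        Set<String> channels = new HashSet<>(Arrays.asList(declared.split("\\s+")));
        String used = conf.getProperty(agent + ".sinks." + sink + ".channel");
        return used != null && !used.trim().isEmpty() && channels.contains(used.trim());
    }

    // Parses properties text; an in-memory reader is used so the sketch needs no files.
    static Properties parse(String text) {
        Properties conf = new Properties();
        try {
            conf.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return conf;
    }

    public static void main(String[] args) {
        Properties conf = parse(
            "agent0.channels = memoryChannel\n"
            + "agent0.sinks = sink1\n"
            + "agent0.sinks.sink1.channel = memoryChannel\n");
        System.out.println(sinkChannelDeclared(conf, "agent0", "sink1"));
    }
}
```

The same idea extends to the source's channels property; the sketch checks only the sink to stay short.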

Command to start Flume:

root@SparkMaster:~/flume/flume-1.6.0/bin# ./flume-ng agent --conf ../conf/ --conf-file ../conf/flume-conf.properties --name agent0 -Dflume.root.logger=INFO,console

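Or, from the Flume installation directory (this form assumes flume-ng is on the PATH):

root@SparkMaster:~/flume/flume-1.6.0# flume-ng agent --conf ./conf/ --conf-file ./conf/flume-conf.properties --name agent0 -Dflume.root.logger=INFO,console

Step 4: Write a simple application (Java version)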

package com.dt.spark.SparkApps.sparkstreaming;

import java.util.Arrays;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.function.FlatMapFunction;
import org.apache.spark.api.java.function.Function2;
import org.apache.spark.api.java.function.PairFunction;
import org.apache.spark.streaming.Durations;
import org.apache.spark.streaming.api.java.JavaDStream;
import org.apache.spark.streaming.api.java.JavaPairDStream;
import org.apache.spark.streaming.api.java.JavaReceiverInputDStream;
import org.apache.spark.streaming.api.java.JavaStreamingContext;
import org.apache.spark.streaming.flume.FlumeUtils;
import org.apache.spark.streaming.flume.SparkFlumeEvent;

import scala.Tuple2;

public class SparkStreamingPullDataFromFlume {

    public static void main(String[] args) {
        SparkConf conf = new SparkConf().setMaster("spark://SparkMaster:7077");
        conf.setAppName("SparkStreamingPullDataFromFlume");
        JavaStreamingContext jsc = new JavaStreamingContext(conf, Durations.seconds(30));

        // Pull events from the SparkSink running on the Flume agent
        JavaReceiverInputDStream<SparkFlumeEvent> lines = FlumeUtils.createPollingStream(jsc, "SparkMaster", 9999);

        // Split each line into words
        JavaDStream<String> words = lines.flatMap(new FlatMapFunction<SparkFlumeEvent, String>() {
            public Iterable<String> call(SparkFlumeEvent event) throws Exception {
                // getBody() returns a ByteBuffer; decode its bytes rather than calling toString()
                String line = new String(event.event().getBody().array());
                return Arrays.asList(line.split(" "));
            }
        });

        // Map each word to a (key, value) pair
        JavaPairDStream<String, Integer> pairs = words.mapToPair(new PairFunction<String, String, Integer>() {
            public Tuple2<String, Integer> call(String word) throws Exception {
                return new Tuple2<String, Integer>(word, 1);
            }
        });

        // reduceByKey: sum the values that share the same key
        JavaPairDStream<String, Integer> wordsCount = pairs.reduceByKey(new Function2<Integer, Integer, Integer>() {
            public Integer call(Integer v1, Integer v2) throws Exception {
                return v1 + v2;
            }
        });

        wordsCount.print();

        jsc.start();
        jsc.awaitTermination();
        jsc.close();
    }
}
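One detail in the flatMap step is worth checking in isolation: the body of a Flume event is a java.nio.ByteBuffer, and ByteBuffer.toString() returns a description of the buffer (position, limit, capacity) rather than its contents. The stand-alone sketch below shows the difference using only the JDK. BodyDecodingSketch is a hypothetical helper, and UTF-8 is an assumption about how the test files are encoded.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Stand-alone illustration; BodyDecodingSketch is a hypothetical helper class.
final class BodyDecodingSketch {

    // Copies the readable bytes out of the buffer and decodes them as UTF-8
    // (the encoding is an assumption about the input files).
    static String decode(ByteBuffer body) {
        byte[] bytes = new byte[body.remaining()];
        body.duplicate().get(bytes); // duplicate() leaves the caller's position untouched
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        ByteBuffer body = ByteBuffer.wrap("hello spark hello flume".getBytes(StandardCharsets.UTF_8));
        System.out.println(body.toString()); // a description of the buffer, not the payload
        System.out.println(decode(body));    // the payload text
    }
}
```

Reading through a duplicate and honouring remaining() also works for buffers that are not backed by an accessible array, which array() does not.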

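Package the application as a jar file and upload it to the Spark cluster.

Step 5: Start HDFS, the Spark cluster, and Flume

Start Flume: root@SparkMaster:~/flume/flume-1.6.0/bin# ./flume-ng agent --conf ../conf/ --conf-file ../conf/flume-conf.properties --name agent0 -Dflume.root.logger=INFO,console

Step 6: Copy test files into the /home/hadoop/flume/tmp/TestDir directory and watch the Flume log for changes

Step 7: Run the application with spark-submit: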

./spark-submit --class com.dt.spark.SparkApps.sparkstreaming.SparkStreamingPullDataFromFlume --name SparkStreamingPullDataFromFlume /home/hadoop/spark/SparkStreamingPullDataFromFlume.jar

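Check the output every 30 seconds, which is the batch interval set in the code.

Part 2: Source code analysis

1. createPollingStream (FlumeUtils.scala)

Note: the default storage level is MEMORY_AND_DISK_SER_2.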

/**

* Creates an input stream that is to be used with the Spark Sink deployed on a Flume agent.

* This stream will poll the sink for data and will pull events as they are available.

* This stream will use a batch size of 1000 events and run 5 threads to pull data.

* @param hostname Address of the host on which the Spark Sink is running

* @param port Port of the host at which the Spark Sink is listening

* @param storageLevel Storage level to use for storing the received objects

*/

def createPollingStream(

ssc: StreamingContext,

hostname: String,

port: Int,

storageLevel: StorageLevel = StorageLevel.MEMORY_AND_DISK_SER_2

): ReceiverInputDStream[SparkFlumeEvent] = {

createPollingStream(ssc, Seq(new InetSocketAddress(hostname, port)), storageLevel)

}

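2. Default parameters: the two defaults below are private constants and cannot be changed. To use other values, call the overload shown in step 3, which takes maxBatchSize and parallelism explicitly.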

private val DEFAULT_POLLING_PARALLELISM = 5

private val DEFAULT_POLLING_BATCH_SIZE = 1000

/**

* Creates an input stream that is to be used with the Spark Sink deployed on a Flume agent.

* This stream will poll the sink for data and will pull events as they are available.

* This stream will use a batch size of 1000 events and run 5 threads to pull data.

* @param addresses List of InetSocketAddresses representing the hosts to connect to.

* @param storageLevel Storage level to use for storing the received objects

*/

def createPollingStream(

ssc: StreamingContext,

addresses: Seq[InetSocketAddress],

storageLevel: StorageLevel

): ReceiverInputDStream[SparkFlumeEvent] = {

createPollingStream(ssc, addresses, storageLevel,

DEFAULT_POLLING_BATCH_SIZE, DEFAULT_POLLING_PARALLELISM)

}

3. Create the FlumePollingInputDStream object

/**

* Creates an input stream that is to be used with the Spark Sink deployed on a Flume agent.

* This stream will poll the sink for data and will pull events as they are available.

* @param addresses List of InetSocketAddresses representing the hosts to connect to.

* @param maxBatchSize Maximum number of events to be pulled from the Spark sink in a

* single RPC call

* @param parallelism Number of concurrent requests this stream should send to the sink. Note

* that having a higher number of requests concurrently being pulled will

* result in this stream using more threads

* @param storageLevel Storage level to use for storing the received objects

*/

def createPollingStream(

ssc: StreamingContext,

addresses: Seq[InetSocketAddress],

storageLevel: StorageLevel,

maxBatchSize: Int,

parallelism: Int

): ReceiverInputDStream[SparkFlumeEvent] = {

new FlumePollingInputDStream[SparkFlumeEvent](ssc, addresses, maxBatchSize,

parallelism, storageLevel)

}

4. FlumePollingInputDStream extends ReceiverInputDStream and overrides getReceiver, which returns a new FlumePollingReceiver

private[streaming] class FlumePollingInputDStream[T: ClassTag](

_ssc: StreamingContext,

val addresses: Seq[InetSocketAddress],

val maxBatchSize: Int,

val parallelism: Int,

storageLevel: StorageLevel

) extends ReceiverInputDStream[SparkFlumeEvent](_ssc) {

override def getReceiver(): Receiver[SparkFlumeEvent] = {

new FlumePollingReceiver(addresses, maxBatchSize, parallelism, storageLevel)

}

}

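5. FlumePollingReceiver builds a thread pool whose threads are daemon threads. Both the pool and the NioClientSocketChannelFactory are created lazily through factory methods (NioClientSocketChannelFactory is a Netty class, so the connections are Netty-based).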

lazy val channelFactoryExecutor =

Executors.newCachedThreadPool(new ThreadFactoryBuilder().setDaemon(true).

setNameFormat("Flume Receiver Channel Thread - %d").build())

lazy val channelFactory =

new NioClientSocketChannelFactory(channelFactoryExecutor, channelFactoryExecutor)

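6. receiverExecutor is also a thread pool. connections is a queue of FlumeConnection handles, one for each Flume agent address in the distributed Flume cluster; a worker thread takes a handle from the queue in order to fetch data.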

lazy val receiverExecutor = Executors.newFixedThreadPool(parallelism,

new ThreadFactoryBuilder().setDaemon(true).setNameFormat("Flume Receiver Thread - %d").build())

private lazy val connections = new LinkedBlockingQueue[FlumeConnection]()

7. On start, a NettyTransceiver is created for each address, and then one FlumeBatchFetcher is submitted per unit of parallelism (5 by default)

override def onStart(): Unit = {

// Create the connections to each Flume agent.

addresses.foreach(host => {

val transceiver = new NettyTransceiver(host, channelFactory)

val client = SpecificRequestor.getClient(classOf[SparkFlumeProtocol.Callback], transceiver)

connections.add(new FlumeConnection(transceiver, client))

})

for (i <- 0 until parallelism) {

logInfo("Starting Flume Polling Receiver worker threads..")

// Threads that pull data from Flume.

receiverExecutor.submit(new FlumeBatchFetcher(this))

}

}
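The threading pattern in steps 5 to 7 (a fixed pool of daemon workers sharing a queue of connection handles) can be sketched with the JDK alone. In the toy below, connections are plain strings and one "fetch" is just a counter increment; PollingWorkersSketch and runOnce are hypothetical names. Returning the handle to the queue in a finally block, and using the blocking take() call, are choices made for this sketch and are not taken from the excerpt above.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Toy model of the receiver's threading; names are hypothetical, not Spark APIs.
final class PollingWorkersSketch {

    // Starts `parallelism` daemon workers. Each borrows a connection handle,
    // performs one simulated fetch, and hands the handle back.
    static int runOnce(int parallelism, String... hosts) {
        BlockingQueue<String> connections = new LinkedBlockingQueue<>();
        for (String host : hosts) {
            connections.add(host);
        }
        ExecutorService pool = Executors.newFixedThreadPool(parallelism, r -> {
            Thread t = new Thread(r, "sketch-receiver-thread");
            t.setDaemon(true); // daemon threads do not keep the JVM alive
            return t;
        });
        AtomicInteger fetches = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(parallelism);
        for (int i = 0; i < parallelism; i++) {
            pool.submit(() -> {
                try {
                    String conn = connections.take(); // wait until a handle is free
                    try {
                        fetches.incrementAndGet();    // stand-in for one RPC to the sink
                    } finally {
                        connections.put(conn);        // return the handle for other workers
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    done.countDown();
                }
            });
        }
        try {
            done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        pool.shutdownNow();
        return fetches.get();
    }

    public static void main(String[] args) {
        System.out.println(runOnce(5, "SparkMaster:9999"));
    }
}
```

With a single address and five workers, the workers take turns on the one handle, which mirrors how a parallelism higher than the number of agents still shares the available connections.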

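8. In the run method of FlumeBatchFetcher, each worker takes a connection handle from the receiver. The sendAck and sendNack calls are the message acknowledgement: a batch is acknowledged only if it was stored successfully.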

def run(): Unit = {

while (!receiver.isStopped()) {

val connection = receiver.getConnections.poll()

val client = connection.client

var batchReceived = false

var seq: CharSequence = null

try {

getBatch(client) match {

case Some(eventBatch) =>

batchReceived = true

seq = eventBatch.getSequenceNumber

val events = toSparkFlumeEvents(eventBatch.getEvents)

if (store(events)) {

sendAck(client, seq)

} else {

sendNack(batchReceived, client, seq)

}

case None =>

}

} catch {

9. The method that fetches one batch of events at a time

/**

* Gets a batch of events from the specified client. This method does not handle any exceptions

* which will be propogated to the caller.

* @param client Client to get events from

* @return [[Some]] which contains the event batch if Flume sent any events back, else [[None]]

*/

private def getBatch(client: SparkFlumeProtocol.Callback): Option[EventBatch] = {

val eventBatch = client.getEventBatch(receiver.getMaxBatchSize)

if (!SparkSinkUtils.isErrorBatch(eventBatch)) {

// No error, proceed with processing data

logDebug(s"Received batch of ${eventBatch.getEvents.size} events with sequence " +

s"number: ${eventBatch.getSequenceNumber}")

Some(eventBatch)

} else {

logWarning("Did not receive events from Flume agent due to error on the Flume agent: " +

eventBatch.getErrorMsg)

None

}

}
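Taken together, steps 8 and 9 describe one fetch cycle: ask the sink for a batch, skip the cycle if the sink reported an error, and otherwise acknowledge the batch only when it was stored successfully. The plain-Java sketch below reproduces that control flow with an in-memory stand-in for the sink. The Sink interface, InMemorySink, and fetchOnce are hypothetical names for this illustration and do not correspond to the Avro protocol classes used by Spark.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.function.Predicate;

// Toy model of the fetch/ack cycle; all names here are hypothetical.
final class AckFlowSketch {

    interface Sink {
        Optional<List<String>> getBatch(int maxBatchSize); // empty means an error batch
        void ack();
        void nack();
    }

    // One cycle: returns "ACK", "NACK", or "SKIP" (no usable batch).
    static String fetchOnce(Sink sink, int maxBatchSize, Predicate<List<String>> store) {
        Optional<List<String>> batch = sink.getBatch(maxBatchSize);
        if (!batch.isPresent()) {
            return "SKIP";
        }
        if (store.test(batch.get())) {
            sink.ack();  // stored successfully, so the sink may release the events
            return "ACK";
        }
        sink.nack();     // storing failed, so the sink should keep the events
        return "NACK";
    }

    static final class InMemorySink implements Sink {
        private final List<String> events;
        private final boolean failing;
        int acks = 0;
        int nacks = 0;

        InMemorySink(List<String> events, boolean failing) {
            this.events = events;
            this.failing = failing;
        }

        public Optional<List<String>> getBatch(int maxBatchSize) {
            if (failing) {
                return Optional.empty();
            }
            int n = Math.min(maxBatchSize, events.size());
            return Optional.of(new ArrayList<>(events.subList(0, n)));
        }

        public void ack() { acks++; }

        public void nack() { nacks++; }
    }

    public static void main(String[] args) {
        InMemorySink sink = new InMemorySink(Arrays.asList("a", "b", "c"), false);
        System.out.println(fetchOnce(sink, 2, batch -> true));
        System.out.println(fetchOnce(sink, 2, batch -> false));
    }
}
```

Acknowledging only after a successful store is what lets the sink side decide whether a batch can be released or must be offered again.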

Notes:

Source: DT_大數據夢工廠 (DT Big Data Dream Factory)
