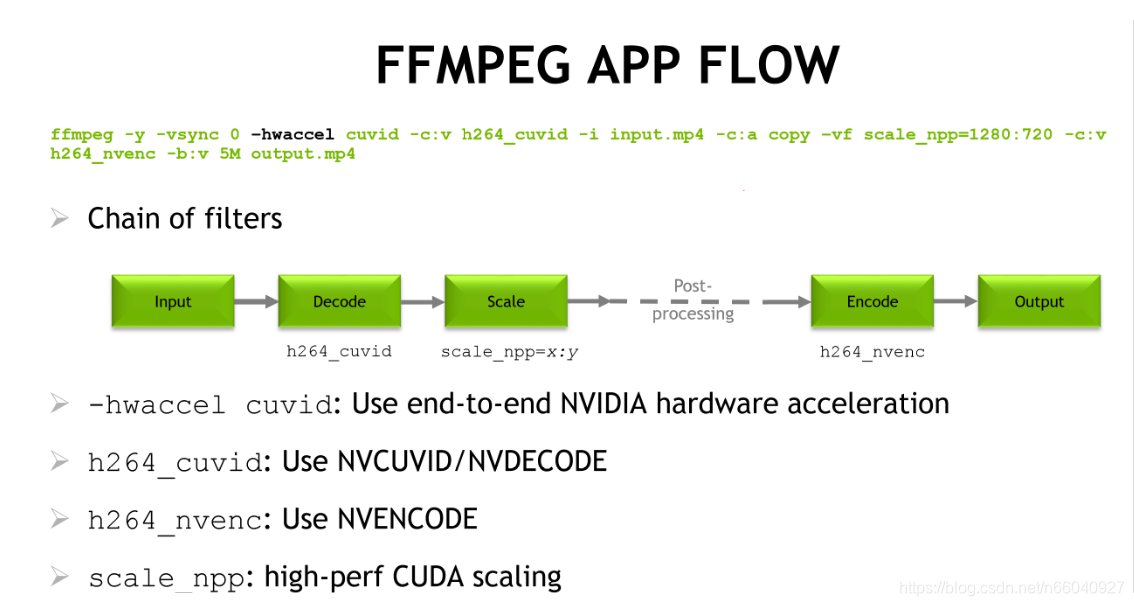

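In NVIDIA's official documentation on hardware-accelerated encoding and decoding, I found a -vf usage that performs resolution-changing transcodes very efficiently; the screenshot above shows the details.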

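In my own tests, this usage reached 500+ fps when converting 1080p to 720p, far beyond the transcoding throughput of using cuvid and nvenc separately. Checking the GPU's enc/dec units with nvidia-smi while it ran showed 60%-70% utilization, also far above the earlier 7%. This is what GPU performance should look like! According to the official explanation, the filter chain in the screenshot above runs entirely inside the GPU, whereas in an ordinary transcode like this (ffmpeg -c:v h264_cuvid -i input -c:v h264_nvenc -preset slow output.mkv) the intermediate product (the decoded video frame data) is sent to system memory (PS: which is why it is slower). PPS: the scale filter currently supports only 8-bit.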
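The enc/dec utilization check described above can be scripted. Below is a minimal sketch, assuming the tabular layout printed by `nvidia-smi dmon -s u` (a `#`-prefixed header line naming the columns, followed by one row per sample); the exact column set varies by driver version, and the sample text is illustrative, not a captured measurement.

```python
def parse_dmon(text):
    """Parse `nvidia-smi dmon -s u` style output into a list of dicts."""
    columns = None
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("#"):
            # The first header line names the columns; later ones hold units
            # or are periodic repeats, so they are skipped.
            if columns is None:
                columns = line.lstrip("#").split()
            continue
        if columns is None:
            continue
        rows.append(dict(zip(columns, line.split())))
    return rows


# Illustrative sample only (assumed layout, made-up numbers).
SAMPLE = """\
# gpu    sm   mem   enc   dec
# Idx     %     %     %     %
    0    12     8    65    70
"""

for row in parse_dmon(SAMPLE):
    print(f"GPU {row['gpu']}: enc {row['enc']}% dec {row['dec']}%")
```

Reading the column names from the header rather than hard-coding positions keeps the parser usable if a driver adds more columns.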

To use this feature, libnpp must be enabled when configuring the ffmpeg build. If configure reports that libnpp was not found, specify the CUDA include and lib paths. A reference configure invocation:

./configure --enable-cuda --enable-cuvid --enable-nvenc --enable-nonfree --enable-libnpp --extra-cflags=-I/usr/local/cuda/include --extra-ldflags=-L/usr/local/cuda/lib64
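If CUDA is installed somewhere other than /usr/local/cuda, the include and lib paths must change together. A small sketch that assembles the same configure flags from a single CUDA root; the function name and the `cuda_root` parameter are hypothetical, and the `lib64` subdirectory is an assumption that matches the command above.

```python
import shlex


def configure_args(cuda_root="/usr/local/cuda"):
    """Build the configure argument list shown above for a given CUDA root."""
    return [
        "./configure",
        "--enable-cuda", "--enable-cuvid", "--enable-nvenc",
        "--enable-nonfree", "--enable-libnpp",
        f"--extra-cflags=-I{cuda_root}/include",   # CUDA headers
        f"--extra-ldflags=-L{cuda_root}/lib64",    # CUDA libraries (assumed lib64)
    ]


# Print a copy-pasteable command line.
print(shlex.join(configure_args()))
```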

NVIDIA hardware acceleration currently supports H.264 up to 4096x4096, while HEVC is supported up to 8K.

Some reference material follows.

Many platforms offer access to dedicated hardware to perform a range of video-related tasks. Using such hardware allows some operations like decoding, encoding or filtering to be completed faster or using less of other resources (particularly CPU), but may give different or inferior results, or impose additional restrictions which are not present when using software only. On PC-like platforms, video hardware is typically integrated into a GPU (from AMD, Intel or NVIDIA), while on mobile SoC-type platforms it is generally an independent IP core (many different vendors).

Hardware decoders will generate equivalent output to software decoders, but may use less power and CPU to do so. Feature support varies – for more complex codecs with many different profiles, hardware decoders rarely implement all of them (for example, hardware decoders tend not to implement anything beyond YUV 4:2:0 at 8-bit depth for H.264). A common feature of many hardware decoders is the ability to generate output in hardware surfaces suitable for use by other components (with discrete graphics cards, this means surfaces in the memory on the card rather than in system memory) – this is often useful for playback, as no further copying is required before rendering the output, and in some cases it can also be used with encoders supporting hardware surface input to avoid any copying at all in transcode cases.

Hardware encoders typically generate output of significantly lower quality than good software encoders like x264, but are generally faster and do not use much CPU resource. (That is, they require a higher bitrate to make output with the same perceptual quality, or they make output with a lower perceptual quality at the same bitrate.)

Systems with decode and/or encode capability may also offer access to other related filtering features. Things like scaling and deinterlacing are common; other postprocessing may be available depending on the system. Where hardware surfaces are usable, these filters will generally act on them rather than on normal frames in system memory.

There are a lot of different APIs of varying standardisation status available. FFmpeg offers access to many of these, with varying support.

Platform API Availability

| API | Linux (AMD) | Linux (Intel) | Linux (NVIDIA) | Windows (AMD) | Windows (Intel) | Windows (NVIDIA) | Android | Apple (macOS) | Apple (iOS) | Other (Raspberry Pi) |
|---|---|---|---|---|---|---|---|---|---|---|
| CUDA / CUVID / NVENC | N | N | Y | N | N | Y | N | N | N | N |
| Direct3D 11 | N | N | N | Y | Y | Y | N | N | N | N |
| Direct3D 9 (DXVA2) | N | N | N | Y | Y | Y | N | N | N | N |
| libmfx | N | Y | N | N | Y | N | N | N | N | N |
| MediaCodec | N | N | N | N | N | N | Y | N | N | N |
| Media Foundation | N | N | N | Y | Y | Y | N | N | N | N |
| MMAL | N | N | N | N | N | N | N | N | N | Y |
| OpenCL | Y | Y | Y | Y | Y | Y | P | Y | N | N |
| OpenMAX | P | N | N | N | N | N | P | N | N | Y |
| V4L2 M2M | N | N | N | N | N | N | P | N | N | N |
| VAAPI | P | Y | P | N | N | N | N | N | N | N |
| VDA | N | N | N | N | N | N | N | Y | N | N |
| VDPAU | P | N | Y | N | N | N | N | N | N | N |
| VideoToolbox | N | N | N | N | N | N | N | Y | Y | N |

Key:

- Y Fully usable.

- P Partial support (some devices / some features).

- N Not possible.

FFmpeg API Implementation Status

| API | Decoder: internal | Decoder: standalone | Decoder: hardware output | Encoder: standalone | Encoder: hardware input | Filtering | Hardware context | Usable from ffmpeg CLI |
|---|---|---|---|---|---|---|---|---|
| CUDA / CUVID / NVENC | N | Y | Y | Y | Y | Y | Y | Y |
| Direct3D 11 | Y | - | Y | - | - | F | Y | Y |
| Direct3D 9 / DXVA2 | Y | - | Y | - | - | N | Y | Y |
| libmfx | - | Y | Y | Y | Y | Y | Y | Y |
| MediaCodec | - | Y | Y | N | N | - | N | N |
| Media Foundation | - | N | N | N | N | N | N | N |
| MMAL | - | Y | Y | N | N | - | N | N |
| OpenCL | - | - | - | - | - | Y | F | F |
| OpenMAX | - | N | N | Y | N | N | N | Y |
| RockChip MPP | - | Y | Y | N | N | - | Y | Y |
| V4L2 M2M | - | Y | N | Y | N | N | N | Y |
| VAAPI | Y | - | Y | Y | Y | Y | Y | Y |
| VDA | Y | N | Y | - | - | - | N | Y |
| VDPAU | Y | - | Y | - | - | N | Y | Y |
| VideoToolbox | Y | N | Y | Y | Y | - | Y | Y |

Key:

- - Not applicable to this API.

- Y Working.

- N Possible but not implemented.

- F Not yet integrated, but work is being done in this area.

Use with the FFmpeg Utility

Internal hwaccel decoders are enabled via the -hwaccel option. The software decoder starts normally, but if it detects a stream which is decodable in hardware then it will attempt to delegate all significant processing to that hardware. If the stream is not decodable in hardware (for example, it is an unsupported codec or profile) then it will still be decoded in software automatically. If the hardware requires a particular device to function (or needs to distinguish between multiple devices, say if several graphics cards are available) then one can be selected using -hwaccel_device.
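A sketch of how the two options described above combine on the command line. The helper name is hypothetical; the `-f null -` output discards the decoded frames, so the command exercises the decoder only.

```python
def hwaccel_decode_args(src, hwaccel, device=None):
    """Build an ffmpeg argument list that decodes `src` via an internal hwaccel."""
    args = ["ffmpeg", "-hwaccel", hwaccel]
    if device is not None:
        # Only needed when a particular device must be selected.
        args += ["-hwaccel_device", str(device)]
    # Decode only: write to the null muxer instead of a real file.
    args += ["-i", src, "-f", "null", "-"]
    return args


print(" ".join(hwaccel_decode_args("INPUT", "dxva2")))
```

Both options are placed before `-i` because they apply to the input being decoded.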

External wrapper decoders are used by setting a specific decoder with the -codec:v option. Typically they are named codec_api (for example: h264_cuvid). These decoders require the codec to be known in advance, and do not support any fallback to software if the stream is not supported.

Encoder wrappers are selected by -codec:v. Encoders generally have lots of options – look at the documentation for the particular encoder for details.
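The codec_api naming convention and the placement of -codec:v can be sketched as follows. The helper names are hypothetical. The same option selects a decoder when it appears before -i and an encoder when it appears after it, because ffmpeg applies options to the next file named on the command line.

```python
def wrapper_name(codec, api):
    """Compose a wrapper name following the codec_api convention."""
    return f"{codec}_{api}"


def wrapper_transcode_args(src, dst, codec="h264", dec_api="cuvid", enc_api="nvenc"):
    """Select a wrapper decoder for the input and a wrapper encoder for the output."""
    return [
        "ffmpeg",
        "-codec:v", wrapper_name(codec, dec_api),  # before -i: decoder for the input
        "-i", src,
        "-codec:v", wrapper_name(codec, enc_api),  # after -i: encoder for the output
        dst,
    ]


print(" ".join(wrapper_transcode_args("input", "output.mkv")))
```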

Hardware filters can be used in a filter graph like any other filter. Note, however, that they may not support any formats in common with software filters – in such cases it may be necessary to make use of hwupload and hwdownload filter instances to move frame data between hardware surfaces and normal memory.
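As a rough illustration of the hwdownload/hwupload bridging described above, the sketch below wraps a software filter between the two. The helper name is hypothetical, and `nv12` is only an assumed intermediate format; the right format, and whether hwupload needs a device to be initialised first, depends on the hardware API in use.

```python
def bridge_software_filter(sw_filter, sw_format="nv12"):
    """Wrap a software filter so it can sit between hardware-surface filters."""
    chain = [
        "hwdownload",            # copy the frame from a hardware surface to system memory
        f"format={sw_format}",   # pin the software pixel format (assumed nv12)
        sw_filter,               # the software-only filter
        "hwupload",              # copy the result back to a hardware surface
    ]
    return ",".join(chain)


print(bridge_software_filter("hflip"))
# hwdownload,format=nv12,hflip,hwupload
```

Each download/upload pair costs a round trip through system memory, so it is worth avoiding when a hardware equivalent of the filter exists.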

VDPAU

Video Decode and Presentation API for Unix. Developed by NVIDIA for Unix/Linux systems. To enable this you typically need the libvdpau development package in your distribution, and a compatible graphics card.

Note that VDPAU cannot be used to decode frames in memory: the compressed frames are sent by libavcodec to the GPU device supported by VDPAU, and the decoded image can then be accessed using the VDPAU API. This is not done automatically by FFmpeg, but must be done at the application level (check for example the ffmpeg_vdpau.c file used by ffmpeg.c). Also note that with this API it is not possible to move the decoded frame back to RAM, for example when you need to re-encode the decoded frame (e.g. when transcoding on a server).

Several decoders are currently supported through VDPAU in libavcodec, in particular H.264, MPEG-1/2/4, and VC-1.

VAAPI

Video Acceleration API (VAAPI) is a non-proprietary and royalty-free open source software library ("libva") and API specification, initially developed by Intel but usable in combination with other devices.

It can be used to access the Quick Sync hardware in Intel GPUs and the UVD/VCE hardware in AMD GPUs. See VAAPI.

DXVA2

DirectX Video Acceleration API, developed by Microsoft (supports Windows and Xbox 360).

Link to MSDN documentation: http://msdn.microsoft.com/en-us/library/windows/desktop/cc307941%28v=vs.85%29.aspx

Several decoders are currently supported, in particular H.264, MPEG-2, VC-1 and WMV 3.

DXVA2 hardware acceleration only works on Windows. In order to build FFmpeg with DXVA2 support, you need to install the dxva2api.h header. For MinGW this can be done by downloading the header maintained by VLC and installing it in the include path (for example in /usr/include/).

For MinGW64, dxva2api.h is provided by default. One way to install mingw-w64 is through a pacman repository, using one of the following two commands depending on the architecture:

pacman -S mingw-w64-i686-gcc

pacman -S mingw-w64-x86_64-gcc

To enable DXVA2, use the --enable-dxva2 ffmpeg configure switch.

To test decoding, use the following command:

ffmpeg -hwaccel dxva2 -threads 1 -i INPUT -f null - -benchmark

VDA

Video Decode Acceleration Framework, only supported on macOS. H.264 decoding is available in FFmpeg/libavcodec.

NVENC

NVENC is an API developed by NVIDIA which enables the use of NVIDIA GPU cards to perform H.264 and HEVC encoding. FFmpeg supports NVENC through the h264_nvenc and hevc_nvenc encoders. In order to enable it in FFmpeg you need:

- A supported GPU

- Supported drivers

- ffmpeg configured without --disable-nvenc

Check the NVIDIA website for more info on the supported GPUs and drivers.

Usage example:

ffmpeg -i input -c:v h264_nvenc -profile high444p -pixel_format yuv444p -preset default output.mp4

You can see available presets, other options, and encoder info with ffmpeg -h encoder=h264_nvenc or ffmpeg -h encoder=hevc_nvenc.

Note: If you get the No NVENC capable devices found error make sure you're encoding to a supported pixel format. See encoder info as shown above.
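To check a pixel format before encoding, the encoder help text can be parsed. The sketch below assumes the help output contains a line beginning with "Supported pixel formats:"; the sample text is illustrative rather than captured from a real build, and the formats it lists vary by FFmpeg version and GPU.

```python
def supported_pixel_formats(help_text):
    """Extract the pixel format names from `ffmpeg -h encoder=...` output."""
    marker = "Supported pixel formats:"
    for line in help_text.splitlines():
        if marker in line:
            # Everything after the marker is a space-separated list of formats.
            return line.split(marker, 1)[1].split()
    return []


# Illustrative sample only; real output is longer and build-dependent.
SAMPLE_HELP = """\
Encoder h264_nvenc [NVIDIA NVENC H.264 encoder]:
    General capabilities: delay hardware
    Supported pixel formats: yuv420p nv12 yuv444p cuda
"""

formats = supported_pixel_formats(SAMPLE_HELP)
print("yuv444p" in formats)
```

In practice the help text could come from running `ffmpeg -hide_banner -h encoder=h264_nvenc` through `subprocess.run(..., capture_output=True, text=True)` and reading its `stdout`.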

CUDA/CUVID/NvDecode

CUVID, which is also called nvdec by NVIDIA now, can be used for decoding on Windows and Linux. In combination with nvenc it offers full hardware transcoding.

CUVID offers decoders for H.264, HEVC, MJPEG, MPEG-1/2/4, VP8/VP9, VC-1. Codec support varies by hardware. The full set of codecs is available only on Pascal hardware, which adds VP9 and 10-bit support.

While decoding 10 bit video is supported, it is not possible to do full hardware transcoding currently (see the partial hardware example below).

Sample decode using CUVID; in this case the cuvid decoder copies the frames to system memory:

ffmpeg -c:v h264_cuvid -i input output.mkv

Full hardware transcode with CUVID and NVENC:

ffmpeg -hwaccel cuvid -c:v h264_cuvid -i input -c:v h264_nvenc -preset slow output.mkv

Partial hardware transcode, with frames passed through system memory (This is necessary for transcoding 10bit content):

ffmpeg -c:v h264_cuvid -i input -c:v h264_nvenc -preset slow output.mkv

If ffmpeg was compiled with support for libnpp, it can be used to insert a GPU based scaler into the chain:

ffmpeg -hwaccel_device 0 -hwaccel cuvid -c:v h264_cuvid -i input -vf scale_npp=-1:720 -c:v h264_nvenc -preset slow output.mkv

The -hwaccel_device option can be used to specify the GPU to be used by the cuvid hwaccel in ffmpeg.
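The difference between the full and partial hardware transcode commands above comes down to whether `-hwaccel cuvid` is present. A sketch that builds either variant; the function and parameter names are hypothetical, and refusing to combine `scale_npp` with the system-memory path is a simplification made for this sketch, not a documented restriction.

```python
def cuvid_nvenc_args(src, dst, ten_bit=False, scale=None, device=None):
    """Build a CUVID -> NVENC transcode command as an argument list."""
    args = ["ffmpeg"]
    if not ten_bit:
        # Full hardware transcode: decoded frames stay in GPU memory.
        if device is not None:
            args += ["-hwaccel_device", str(device)]
        args += ["-hwaccel", "cuvid"]
    # Without -hwaccel cuvid the decoder copies frames to system memory,
    # which the text above describes as necessary for 10-bit content.
    args += ["-c:v", "h264_cuvid", "-i", src]
    if scale is not None:
        if ten_bit:
            raise ValueError("this sketch only inserts scale_npp on the GPU path")
        args += ["-vf", f"scale_npp={scale}"]
    args += ["-c:v", "h264_nvenc", "-preset", "slow", dst]
    return args


print(" ".join(cuvid_nvenc_args("input", "output.mkv", scale="-1:720", device=0)))
```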

libmfx

libmfx is a proprietary library from Intel for use of Quick Sync hardware on both Linux and Windows. On Windows it is the primary way to use more advanced functions beyond those accessible via DXVA2/D3D11VA, particularly encode. On Linux it has a very restricted feature set and is hard to use, but may be helpful for some use-cases desiring maximum throughput.

See QuickSync.

OpenCL

OpenCL is currently only used for filtering (deshake and unsharp filters). To use OpenCL code, enable it in the build with --enable-opencl. An API for using OpenCL from FFmpeg is provided in libavutil/opencl.h. Decoding and encoding are not currently supported.

For --enable-opencl to work, you need to install your graphics card's drivers and SDK, then use the SDK's .lib files and headers.

AMD UVD/VCE

AMD UVD is usable for decode via VDPAU and VAAPI in Mesa on Linux. VCE also has some initial support for encode via VAAPI, but should be considered experimental.

For Windows there are port attempts, but nothing official has been released yet.

GPU-accelerated video processing integrated into the most popular open-source multimedia tools.

FFmpeg and libav are among the most popular open-source multimedia manipulation tools with a library of plugins that can be applied to various parts of the audio and video processing pipelines and have achieved wide adoption across the world.

Video encoding, decoding and transcoding are some of the most popular applications of FFmpeg. Thanks to the support of the FFmpeg and libav community and contributions from NVIDIA engineers, both of these tools now support native NVIDIA GPU hardware accelerated video encoding and decoding through the integration of the NVIDIA Video Codec SDK.

Leveraging FFmpeg’s audio codecs, stream muxing, and RTP protocols, FFmpeg’s integration of the NVIDIA Video Codec SDK enables high-performance hardware-accelerated video pipelines.

FFmpeg uses Video Codec SDK

If you have an NVIDIA GPU which supports hardware-accelerated video encoding and decoding, it’s simply a matter of compiling FFmpeg binary with the required support for NVIDIA libraries and using the resulting binaries to speed up video encoding/decoding.

FFmpeg supports the following functionality accelerated by video hardware on NVIDIA GPUs:

- Hardware-accelerated encoding of H.264 and HEVC*

- Hardware-accelerated decoding** of H.264, HEVC, VP9, VP8, MPEG2, and MPEG4*

- Granular control over encoding settings such as encoding preset, rate control and other video quality parameters

- Create high-performance end-to-end hardware-accelerated video processing, 1:N encoding and 1:N transcoding pipeline using built-in filters in FFmpeg

- Ability to add your own custom high-performance CUDA filters using the shared CUDA context implementation in FFmpeg

- Windows/Linux support

* Support is dependent on HW. For a full list of GPUs and formats supported, please see the available GPU Support Matrix.

** HW decode support will be added to libav in the near future

What's New in FFmpeg

- Includes Video Codec SDK 8.0 headers (both encode/decode)

- 10-bit hwaccel accelerated pipeline

- Support for fractional CQ

- Support for Weighted Prediction

- CUDA Scale filter (supports both 8 and 10 bit scaling).

- Decode Capability Query

- More...

Download FFmpeg (main tree): GitHub

| Operating System | Windows 7, 8, 10, and Linux |

| Dependencies | NVENCODE API: NVIDIA Quadro, Tesla, GRID or GeForce products with Kepler, Maxwell and Pascal generation GPUs. NVDECODE API: NVIDIA Quadro, Tesla, GRID or GeForce products with Fermi, Kepler, Maxwell and Pascal generation GPUs. GPU Support Matrix. Appropriate NVIDIA Display Driver. DirectX SDK (Windows only). CUDA Toolkit. |

| Development Environment | Windows: Visual Studio 2013SP5/2015, MSYS/MinGW Linux: gcc 4.8 or higher |

FFmpeg GPU HW-Acceleration Support Table

| Feature | Fermi | Kepler | Maxwell (1st Gen) | Maxwell (2nd Gen) | Maxwell (GM206) | Pascal |
|---|---|---|---|---|---|---|
| H.264 encoding | N/A | FFmpeg v3.3 | FFmpeg v3.3 | FFmpeg v3.3 | FFmpeg v3.3 | FFmpeg v3.3 |
| HEVC encoding | N/A | N/A | N/A | FFmpeg v3.3 | FFmpeg v3.3 | FFmpeg v3.3 |
| MPEG2, MPEG-4, H.264 decoding | FFmpeg v3.3 | FFmpeg v3.3 | FFmpeg v3.3 | FFmpeg v3.3 | FFmpeg v3.3 | FFmpeg v3.3 |
| HEVC decoding | N/A | N/A | N/A | N/A | FFmpeg v3.3 | FFmpeg v3.3 |
| VP9 decoding | N/A | N/A | N/A | FFmpeg v3.3 | FFmpeg v3.3 | FFmpeg v3.3 |
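The support table can be encoded as a small lookup. This sketch relies on an observation about the table as printed here, namely that once a feature appears in a generation it is present in every later column; the names below are hypothetical and the data is transcribed from the table, not queried from a driver.

```python
# Column order of the table, oldest generation first.
GENERATIONS = [
    "Fermi", "Kepler", "Maxwell (1st Gen)",
    "Maxwell (2nd Gen)", "Maxwell (GM206)", "Pascal",
]

# First generation (in column order) where each feature is listed as supported.
FIRST_SUPPORTED = {
    "H.264 encoding": "Kepler",
    "HEVC encoding": "Maxwell (2nd Gen)",
    "MPEG2, MPEG-4, H.264 decoding": "Fermi",
    "HEVC decoding": "Maxwell (GM206)",
    "VP9 decoding": "Maxwell (2nd Gen)",
}


def is_supported(feature, generation):
    """Return True if the table lists `feature` for `generation`."""
    first = GENERATIONS.index(FIRST_SUPPORTED[feature])
    return GENERATIONS.index(generation) >= first


print(is_supported("HEVC encoding", "Kepler"))
```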

For guidelines about NVIDIA GPU-accelerated video encoding/decoding performance, please visit the Video Codec SDK page for more details.

Getting Started with FFmpeg/libav using NVIDIA GPUs

Using NVIDIA hardware acceleration in FFmpeg/libav requires the following steps:

- Download the latest FFmpeg or libav source code by cloning the corresponding Git repositories

- FFmpeg: https://git.ffmpeg.org/ffmpeg.git

- Libav: https://github.com/libav/libav

- Download and install the compatible driver from NVIDIA web site

- Download and install the CUDA Toolkit

- Use the following configure command (use the correct CUDA library path in the command below):

./configure --enable-cuda --enable-cuvid --enable-nvenc --enable-nonfree --enable-libnpp --extra-cflags=-I/usr/local/cuda/include --extra-ldflags=-L/usr/local/cuda/lib64

- Use the following command to build: make -j 10

- Use the FFmpeg/libav binary as required. To start with FFmpeg, try the sample command line below for 1:2 transcoding:

ffmpeg -y -hwaccel cuvid -c:v h264_cuvid -vsync 0 -i <input.mp4> -vf scale_npp=1920:1072 -vcodec h264_nvenc <output0.264> -vf scale_npp=1280:720 -vcodec h264_nvenc <output1.264>

For more information on FFmpeg licensing, please see this page.

FFmpeg in Action

FFmpeg is used by many projects, including Google Chrome and VLC player. You can easily integrate NVIDIA hardware-acceleration to these applications by configuring FFmpeg to use NVIDIA GPUs for video encoding and decoding tasks.

HandBrake is an open-source video transcoder available for Linux, Mac, and Windows.

HandBrake works with most common video files and formats, including ones created by consumer and professional video cameras, mobile devices such as phones and tablets, game and computer screen recordings, and DVD and Blu-ray discs. HandBrake leverages tools such as Libav, x264, and x265 to create new MP4 or MKV video files from these.

Plex Media Server is a client-server media player system and software suite that runs on Windows, macOS, Linux, FreeBSD or a NAS. Plex organizes all of the videos, music, and photos from your computer’s personal media library and lets you stream them to your devices.

The Plex Transcoder uses FFmpeg to handle and translate your media into the format your client device supports.

How to use FFmpeg/libav with NVIDIA GPU-acceleration

Decode a single H.264 to YUV

To decode a single H.264 encoded elementary bitstream file into YUV, use the following command:

FFMPEG: ffmpeg -vsync 0 -c:v h264_cuvid -i <input.mp4> -f rawvideo <output.yuv>

LIBAV: avconv -vsync 0 -c:v h264_cuvid -i <input.mp4> -f rawvideo <output.yuv>

Example applications:

- Video analytics, video inferencing

- Video post-processing

- Video playback

Encode a single YUV file to a bitstream

To encode a single YUV file into an H.264/HEVC bitstream, use the following command:

H.264

FFMPEG: ffmpeg -f rawvideo -s:v 1920x1080 -r 30 -pix_fmt yuv420p -i <input.yuv> -c:v h264_nvenc -preset slow -cq 10 -bf 2 -g 150 <output.mp4>

LIBAV: avconv -f rawvideo -s:v 1920x1080 -r 30 -pix_fmt yuv420p -i <input.yuv> -c:v h264_nvenc -preset slow -cq 10 -bf 2 -g 150 <output.mp4>

HEVC (No B-frames)

FFMPEG: ffmpeg -f rawvideo -s:v 1920x1080 -r 30 -pix_fmt yuv420p -i <input.yuv> -vcodec hevc_nvenc -preset slow -cq 10 -g 150 <output.mp4>

LIBAV: avconv -f rawvideo -s:v 1920x1080 -r 30 -pix_fmt yuv420p -i <input.yuv> -vcodec hevc_nvenc -preset slow -cq 10 -g 150 <output.mp4>

Example applications:

- Surveillance

- Archiving footage from remote cameras

- Archiving raw captured video from a single camera
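A raw YUV file carries no header, which is why the encode commands above must state the frame size, rate and pixel format explicitly. The arithmetic for 8-bit yuv420p can be sketched as below; the helper names are hypothetical, and even frame dimensions are assumed.

```python
def yuv420p_frame_bytes(width, height):
    """Bytes per 8-bit 4:2:0 frame: one full-size luma plane plus two
    chroma planes at a quarter of the luma size each (even dimensions assumed)."""
    return width * height * 3 // 2


def frame_count(file_bytes, width, height):
    """Number of whole frames in a raw yuv420p file of the given size."""
    frames, remainder = divmod(file_bytes, yuv420p_frame_bytes(width, height))
    if remainder:
        raise ValueError("file size is not a whole number of frames")
    return frames


print(yuv420p_frame_bytes(1920, 1080))  # 3110400
```

If the size passed with -s:v does not match the file, the frame boundaries fall in the wrong places and the encoded picture comes out scrambled, so a quick divisibility check like this can catch a wrong resolution early.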

Transcode a single video file

To do 1:1 transcode, use the following command:

FFMPEG: ffmpeg -hwaccel cuvid -c:v h264_cuvid -i <input.mp4> -vf scale_npp=1280:720 -c:v h264_nvenc <output.mp4>

LIBAV: avconv -hwaccel cuvid -c:v h264_cuvid -i <input.mp4> -vf scale_npp=1280:720 -c:v h264_nvenc <output.mp4>

Example applications:

- Accelerated transcoding of consumer videos

Transcode a single video file to N streams

To do 1:N transcode, use the following command:

FFMPEG: ffmpeg -hwaccel cuvid -c:v h264_cuvid -i <input.mp4> -vf scale_npp=1280:720 -vcodec h264_nvenc <output0.mp4> -vf scale_npp=640:480 -vcodec h264_nvenc <output1.mp4>

LIBAV: avconv -hwaccel cuvid -c:v h264_cuvid -i <input.mp4> -vf scale_npp=1280:720 -vcodec h264_nvenc <output0.mp4> -vf scale_npp=640:480 -vcodec h264_nvenc <output1.mp4>

Example applications:

- Commercial (data center) video transcoding
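The 1:N pattern generalises to any number of outputs by repeating the per-output options. A minimal sketch, with a hypothetical helper name, that builds the command as an argument list from (size, path) pairs:

```python
def one_to_n_args(src, outputs):
    """Build a 1:N CUVID -> NVENC transcode command.

    outputs: list of (size, path) pairs, e.g. ("1280:720", "output0.mp4").
    """
    # Input options: decode once on the GPU.
    args = ["ffmpeg", "-hwaccel", "cuvid", "-c:v", "h264_cuvid", "-i", src]
    for size, path in outputs:
        # Output options apply only to the next output file, so the scaler
        # and encoder selection are repeated for every output.
        args += ["-vf", f"scale_npp={size}", "-c:v", "h264_nvenc", path]
    return args


cmd = one_to_n_args("input.mp4", [("1280:720", "output0.mp4"), ("640:480", "output1.mp4")])
print(" ".join(cmd))
```

The input is decoded once and each output gets its own scale and encode step, which is where the efficiency of 1:N transcoding comes from.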